Xuyang Zhang

UniForce: A Unified Latent Force Model for Robot Manipulation with Diverse Tactile Sensors

Feb 01, 2026

Abstract:Force sensing is essential for dexterous robot manipulation, but scaling force-aware policy learning is hindered by the heterogeneity of tactile sensors. Differences in sensing principles (e.g., optical vs. magnetic), form factors, and materials typically require sensor-specific data collection, calibration, and model training, thereby limiting generalisability. We propose UniForce, a novel unified tactile representation learning framework that learns a shared latent force space across diverse tactile sensors. UniForce reduces cross-sensor domain shift by jointly modeling inverse dynamics (image-to-force) and forward dynamics (force-to-image), constrained by force equilibrium and image reconstruction losses to produce force-grounded representations. To avoid reliance on expensive external force/torque (F/T) sensors, we exploit static equilibrium and collect force-paired data via direct sensor--object--sensor interactions, enabling cross-sensor alignment with contact force. The resulting universal tactile encoder can be plugged into downstream force-aware robot manipulation tasks with zero-shot transfer, without retraining or finetuning. Extensive experiments on heterogeneous tactile sensors, including GelSight, TacTip, and uSkin, demonstrate consistent improvements in force estimation over prior methods, and enable effective cross-sensor coordination in Vision-Tactile-Language-Action (VTLA) models for a robotic wiping task. Code and datasets will be released.

SimTac: A Physics-Based Simulator for Vision-Based Tactile Sensing with Biomorphic Structures

Nov 14, 2025

Abstract:Tactile sensing in biological organisms is deeply intertwined with morphological form, such as human fingers, cat paws, and elephant trunks, which enables rich and adaptive interactions through a variety of geometrically complex structures. In contrast, vision-based tactile sensors in robotics have been limited to simple planar geometries, with biomorphic designs remaining underexplored. To address this gap, we present SimTac, a physics-based simulation framework for the design and validation of biomorphic tactile sensors. SimTac consists of particle-based deformation modeling, light-field rendering for photorealistic tactile image generation, and a neural network for predicting mechanical responses, enabling accurate and efficient simulation across a wide range of geometries and materials. We demonstrate the versatility of SimTac by designing and validating physical sensor prototypes inspired by biological tactile structures and further demonstrate its effectiveness across multiple Sim2Real tactile tasks, including object classification, slip detection, and contact safety assessment. Our framework bridges the gap between bio-inspired design and practical realisation, expanding the design space of tactile sensors and paving the way for tactile sensing systems that integrate morphology and sensing to enable robust interaction in unstructured environments.

ViTacGen: Robotic Pushing with Vision-to-Touch Generation

Oct 15, 2025

Abstract:Robotic pushing is a fundamental manipulation task that requires tactile feedback to capture subtle contact forces and dynamics between the end-effector and the object. However, real tactile sensors often face hardware limitations such as high costs and fragility, and deployment challenges involving calibration and variations between different sensors, while vision-only policies struggle to achieve satisfactory performance. Inspired by humans' ability to infer tactile states from vision, we propose ViTacGen, a novel robot manipulation framework designed for visual robotic pushing with vision-to-touch generation in reinforcement learning to eliminate the reliance on high-resolution real tactile sensors, enabling effective zero-shot deployment on visual-only robotic systems. Specifically, ViTacGen consists of an encoder-decoder vision-to-touch generation network that generates contact depth images, a standardized tactile representation, directly from visual image sequences, followed by a reinforcement learning policy that fuses visual-tactile data with contrastive learning based on visual and generated tactile observations. We validate the effectiveness of our approach in both simulation and real-world experiments, demonstrating its superior performance and achieving a success rate of up to 86\%.

Hadaptive-Net: Efficient Vision Models via Adaptive Cross-Hadamard Synergy

May 28, 2025

Abstract:Recent studies have revealed the immense potential of the Hadamard product in enhancing network representational capacity and dimensional compression. However, despite its theoretical promise, this technique has not been systematically explored or effectively applied in practice, leaving its full capabilities underdeveloped. In this work, we first analyze and identify the advantages of the Hadamard product over standard convolutional operations in cross-channel interaction and channel expansion. Building upon these insights, we propose a computationally efficient module: Adaptive Cross-Hadamard (ACH), which leverages adaptive cross-channel Hadamard products for high-dimensional channel expansion. Furthermore, we introduce Hadaptive-Net (Hadamard Adaptive Network), a lightweight network backbone for visual tasks. Experiments demonstrate that, with our proposed module, it achieves an unprecedented balance between inference speed and accuracy.
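To make the idea of channel expansion through cross-channel Hadamard products concrete, here is a minimal sketch in plain Python. It does not reproduce the ACH module, whose adaptive selection mechanism is not described in the abstract. It only assumes that "cross-channel" means element-wise products between pairs of channels; the function name `cross_hadamard_expand` and the toy feature values are hypothetical.

```python
from itertools import combinations


def cross_hadamard_expand(channels):
    """Return the input channels plus the element-wise (Hadamard) product
    of every unordered pair of channels.

    Assumption: each channel is a flat list of floats of equal length.
    This is an illustration only, not the paper's ACH module.
    """
    expanded = [list(c) for c in channels]  # keep the original channels
    for a, b in combinations(channels, 2):
        # Hadamard product: multiply matching positions of two channels
        expanded.append([x * y for x, y in zip(a, b)])
    return expanded


# Toy feature map: 3 channels, 2 spatial positions each (made-up values)
features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
expanded = cross_hadamard_expand(features)
print(len(expanded))
```

With C input channels this naive variant yields C + C(C-1)/2 output channels without any learned weights, which hints at why such products might be attractive for cheap expansion. Which pairs to keep is presumably what the adaptive part of the module addresses.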

General Force Sensation for Tactile Robot

Mar 02, 2025

Abstract:Robotic tactile sensors, including vision-based and taxel-based sensors, enable agile manipulation and safe human-robot interaction through force sensation. However, variations in structural configurations, measured signals, and material properties create domain gaps that limit the transferability of learned force sensation across different tactile sensors. Here, we introduce GenForce, a general framework for achieving transferable force sensation across both homogeneous and heterogeneous tactile sensors in robotic systems. By unifying tactile signals into marker-based binary tactile images, GenForce enables the transfer of existing force labels to arbitrary target sensors using a marker-to-marker translation technique with a few paired data. This process equips uncalibrated tactile sensors with force prediction capabilities through spatiotemporal force prediction models trained on the transferred data. Extensive experimental results validate GenForce's generalizability, accuracy, and robustness across sensors with diverse marker patterns, structural designs, material properties, and sensing principles. The framework significantly reduces the need for costly and labor-intensive labeled data collection, enabling the rapid deployment of multiple tactile sensors on robotic hands requiring force sensing capabilities.

RoTip: A Finger-Shaped Tactile Sensor with Active Rotation

Oct 01, 2024

Abstract:In recent years, advancements in optical tactile sensor technology have primarily centred on enhancing sensing precision and expanding the range of sensing modalities. To meet the requirements for more skilful manipulation, there should be a movement towards making tactile sensors more dynamic. In this paper, we introduce RoTip, a novel vision-based tactile sensor that is uniquely designed with an independently controlled joint and the capability to sense contact over its entire surface. The rotational capability of the sensor is particularly crucial for manipulating everyday objects, especially thin and flexible ones, as it enables the sensor to mobilize while in contact with the object's surface. The manipulation experiments demonstrate the ability of our proposed RoTip to manipulate rigid and flexible objects, and the full-finger tactile feedback and active rotation capabilities have the potential to explore more complex and precise manipulation tasks.

TransForce: Transferable Force Prediction for Vision-based Tactile Sensors with Sequential Image Translation

Sep 15, 2024

Abstract:Vision-based tactile sensors (VBTSs) provide high-resolution tactile images crucial for robot in-hand manipulation. However, force sensing in VBTSs is underutilized due to the costly and time-intensive process of acquiring paired tactile images and force labels. In this study, we introduce a transferable force prediction model, TransForce, designed to leverage collected image-force paired data for new sensors under varying illumination colors and marker patterns while improving the accuracy of predicted forces, especially in the shear direction. Our model effectively achieves translation of tactile images from the source domain to the target domain, ensuring that the generated tactile images reflect the illumination colors and marker patterns of the new sensors while accurately aligning the elastomer deformation observed in existing sensors, which is beneficial to force prediction of new sensors. As such, a recurrent force prediction model trained with generated sequential tactile images and existing force labels is employed to estimate higher-accuracy forces for new sensors with lowest average errors of 0.69N (5.8\% in full work range) in $x$-axis, 0.70N (5.8\%) in $y$-axis, and 1.11N (6.9\%) in $z$-axis compared with models trained with single images. The experimental results also reveal that pure marker modality is more helpful than the RGB modality in improving the accuracy of force in the shear direction, while the RGB modality shows better performance in the normal direction.

RoTipBot: Robotic Handling of Thin and Flexible Objects using Rotatable Tactile Sensors

Jun 13, 2024

Abstract:This paper introduces RoTipBot, a novel robotic system for handling thin, flexible objects. Different from previous works that are limited to singulating them using suction cups or soft grippers, RoTipBot can grasp and count multiple layers simultaneously, emulating human handling in various environments. Specifically, we develop a novel vision-based tactile sensor named RoTip that can rotate and sense contact information around its tip. Equipped with two RoTip sensors, RoTipBot feeds multiple layers of thin, flexible objects into the centre between its fingers, enabling effective grasping and counting. RoTip's tactile sensing ensures both fingers maintain good contact with the object, and an adjustment approach is designed to allow the gripper to adapt to changes in the object. Extensive experiments demonstrate the efficacy of the RoTip sensor and the RoTipBot approach. The results show that RoTipBot not only achieves a higher success rate but also grasps and counts multiple layers simultaneously -- capabilities not possible with previous methods. Furthermore, RoTipBot operates up to three times faster than state-of-the-art methods. The success of RoTipBot paves the way for future research in object manipulation using mobilised tactile sensors. All the materials used in this paper are available at \url{https://sites.google.com/view/rotipbot}.

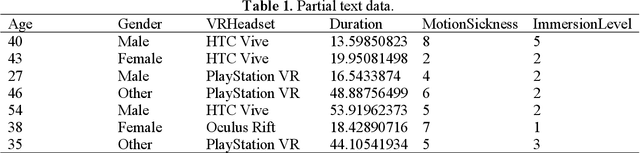

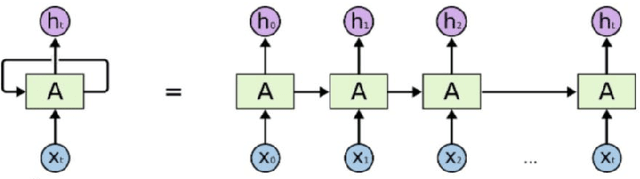

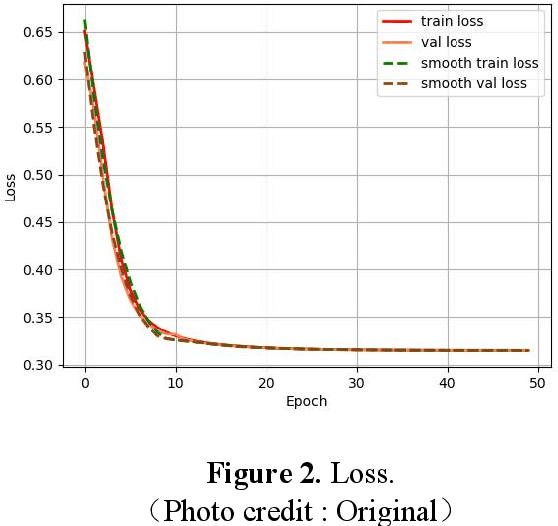

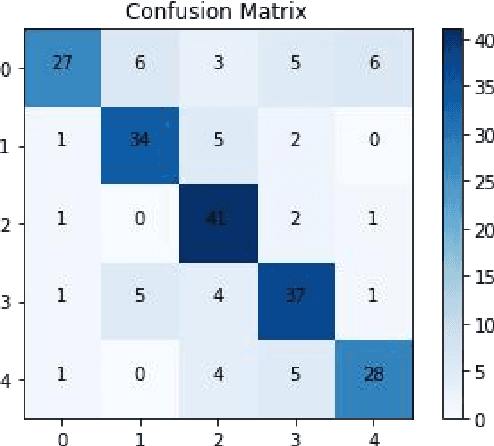

Improved AdaBoost for Virtual Reality Experience Prediction Based on Long Short-Term Memory Network

May 17, 2024

Abstract:A classification prediction algorithm based on Long Short-Term Memory Network (LSTM) improved AdaBoost is used to predict virtual reality (VR) user experience. The dataset is randomly divided into training and test sets in the ratio of 7:3. During the training process, the model's loss value decreases from 0.65 to 0.31, which shows that the model gradually reduces the discrepancy between the prediction results and the actual labels, and improves the accuracy and generalisation ability. The final loss value of 0.31 indicates that the model fits the training data well, and is able to make predictions and classifications more accurately. The confusion matrix for the training set shows a total of 177 correct predictions and 52 incorrect predictions, with an accuracy of 77%, precision of 88%, recall of 77% and F1 score of 82%. The confusion matrix for the test set shows a total of 167 correct and 53 incorrect predictions with 75% accuracy, 87% precision, 57% recall and 69% F1 score. In summary, the classification prediction algorithm based on LSTM with improved AdaBoost shows good prediction ability for virtual reality user experience. This study is of great significance to enhance the application of virtual reality technology in user experience. By combining LSTM and AdaBoost algorithms, significant progress has been made in user experience prediction, which not only improves the accuracy and generalisation ability of the model, but also provides useful insights for related research in the field of virtual reality. This approach can help developers better understand user requirements, optimise virtual reality product design, and enhance user satisfaction, promoting the wide application of virtual reality technology in various fields.
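The figures quoted in this abstract can be cross-checked with a few lines of arithmetic. The sketch below assumes the standard definitions: accuracy as correct predictions over all predictions, and F1 as the harmonic mean of precision and recall. The helper names `accuracy` and `f1_score` are illustrative and do not come from the paper.

```python
def accuracy(correct: int, incorrect: int) -> float:
    """Fraction of predictions that were correct."""
    return correct / (correct + incorrect)


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Counts and rates as quoted in the abstract
train_acc = accuracy(177, 52)
test_acc = accuracy(167, 53)
train_f1 = f1_score(0.88, 0.77)
test_f1 = f1_score(0.87, 0.57)

print(f"train accuracy {train_acc:.3f}, train F1 {train_f1:.3f}")
print(f"test accuracy  {test_acc:.3f}, test F1  {test_f1:.3f}")
```

Under these definitions the training accuracy and both F1 scores agree with the abstract after rounding. The test accuracy works out to about 0.759, close to the 75% quoted.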

A Unified NOMA Framework in Beam-Hopping Satellite Communication Systems

Jan 17, 2024

Abstract:This paper investigates the application of a unified non-orthogonal multiple access framework in beam hopping (U-NOMA-BH) based satellite communication systems. More specifically, the proposed U-NOMA-BH framework can be applied to code-domain NOMA based BH (CD-NOMA-BH) and power-domain NOMA based BH (PD-NOMA-BH) systems. To satisfy dynamic-uneven traffic demands, we formulate the optimization problem to minimize the square of discrete difference by jointly optimizing power allocation, carrier assignment and beam scheduling. The non-convexity of the objective function and the constraint condition is solved through Dinkelbach's transform and variable relaxation. As a further development, the closed-form and asymptotic expressions of outage probability are derived for CD/PD-NOMA-BH systems. Based on approximated results, the diversity orders of a pair of users are obtained in detail. In addition, the system throughput of U-NOMA-BH is discussed in delay-limited transmission mode. Numerical results verify that: i) The gap between traffic requests of CD/PD-NOMA-BH systems appears to be more closely compared with orthogonal multiple access based BH (OMA-BH); ii) The CD-NOMA-BH system is capable of providing the enhanced traffic request and capacity provision; and iii) The outage behaviors of CD/PD-NOMA-BH are better than that of OMA-BH.
