Xinwen Liu

Apple Intelligence Foundation Language Models

Jul 29, 2024

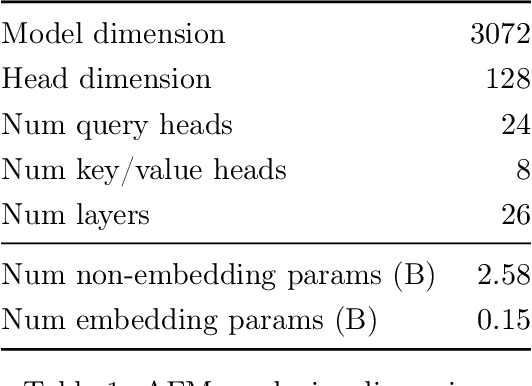

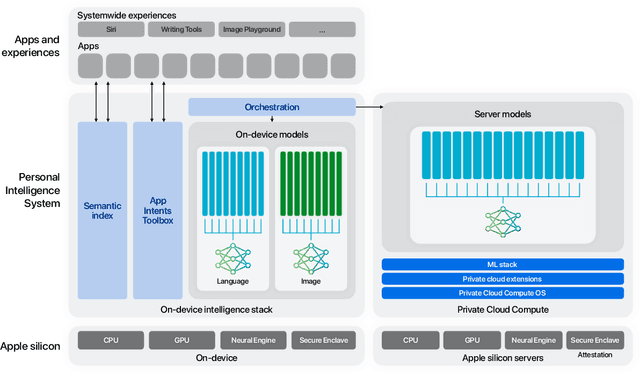

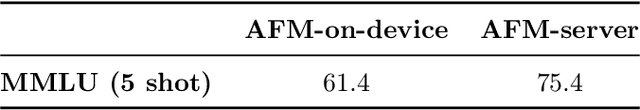

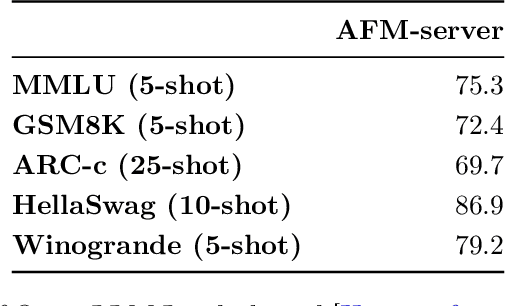

Abstract: We present foundation language models developed to power Apple Intelligence features, including a ~3 billion parameter model designed to run efficiently on devices and a large server-based language model designed for Private Cloud Compute. These models are designed to perform a wide range of tasks efficiently, accurately, and responsibly. This report describes the model architecture, the data used to train the model, the training process, how the models are optimized for inference, and the evaluation results. We highlight our focus on Responsible AI and how the principles are applied throughout the model development.

DuDoUniNeXt: Dual-domain unified hybrid model for single and multi-contrast undersampled MRI reconstruction

Mar 08, 2024

Abstract: Multi-contrast (MC) Magnetic Resonance Imaging (MRI) reconstruction aims to incorporate a reference image of an auxiliary modality to guide the reconstruction of the target modality. Known MC reconstruction methods perform well with a fully sampled reference image, but usually perform worse than single-contrast (SC) methods when the reference image is missing or of low quality. To address this issue, we propose DuDoUniNeXt, a unified dual-domain MRI reconstruction network that can accommodate scenarios involving absent, low-quality, and high-quality reference images. DuDoUniNeXt adopts a hybrid backbone that combines CNN and ViT, enabling specific adjustment of image domain and k-space reconstruction. Specifically, an adaptive coarse-to-fine feature fusion module (AdaC2F) is devised to dynamically process the information from reference images of varying qualities. In addition, a partially shared shallow feature extractor (PaSS) is proposed, which uses shared and distinct parameters to handle consistent and discrepant information among contrasts. Experimental results demonstrate that the proposed model surpasses state-of-the-art SC and MC models significantly. Ablation studies show the effectiveness of the proposed hybrid backbone, AdaC2F, PaSS, and the dual-domain unified learning scheme.

Evidence-aware multi-modal data fusion and its application to total knee replacement prediction

Mar 24, 2023

Abstract: Deep neural networks have been widely studied for predicting a medical condition, such as total knee replacement (TKR). It has been shown that data of different modalities, such as imaging data, clinical variables and demographic information, provide complementary information and can therefore improve prediction accuracy when used together. However, the data sources of various modalities may not always be of high quality, and each modality may carry only partial information about the medical condition. Thus, predictions from different modalities can contradict one another, and the final prediction may fail in the presence of such a conflict. Therefore, it is important to consider the reliability of each data source and of the prediction output when making a final decision. In this paper, we propose an evidence-aware multi-modal data fusion framework based on the Dempster-Shafer theory (DST). The backbone models contain an image branch, a non-image branch and a fusion branch. For each branch, there is an evidence network that takes the extracted features as input and outputs an evidence score, which is designed to represent the reliability of the output from the current branch. The output probabilities along with the evidence scores from multiple branches are combined with Dempster's combination rule to make a final prediction. Experimental results on the public Osteoarthritis Initiative (OAI) dataset for the TKR prediction task show the superiority of the proposed fusion strategy on various backbone models.
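
As a rough illustration of the fusion step named in the abstract above, the following sketch applies the textbook form of Dempster's combination rule to two branches. The abstract does not say how probabilities and evidence scores are turned into mass functions, so the `to_mass` helper, the class labels, and the example numbers are all assumptions made for illustration, not the paper's method.

```python
from itertools import product

TKR = frozenset({"tkr"})
NO_TKR = frozenset({"no_tkr"})
THETA = TKR | NO_TKR  # the whole frame, i.e. "don't know"


def to_mass(prob_tkr, evidence):
    """Hypothetical mapping (an assumption, not from the paper):
    scale class probabilities by the evidence score and put the
    remaining mass on the whole frame as uncertainty."""
    return {
        TKR: evidence * prob_tkr,
        NO_TKR: evidence * (1.0 - prob_tkr),
        THETA: 1.0 - evidence,
    }


def dempster_combine(m1, m2):
    """Textbook Dempster's rule for two mass functions
    given as dicts mapping frozenset -> mass."""
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            # Mass assigned to contradictory hypotheses.
            conflict += wa * wb
    if 1.0 - conflict <= 1e-12:
        raise ValueError("sources are in total conflict")
    # Renormalise by the non-conflicting mass.
    return {k: v / (1.0 - conflict) for k, v in combined.items()}


# Illustrative masses for an image branch and a non-image branch.
image_branch = {TKR: 0.6, NO_TKR: 0.1, THETA: 0.3}
clinical_branch = {TKR: 0.2, NO_TKR: 0.5, THETA: 0.3}
fused = dempster_combine(image_branch, clinical_branch)
```

In this example the two branches disagree, and the rule discards the conflicting mass before renormalising, so the more confident branch dominates the fused belief while some mass stays on the whole frame as residual uncertainty.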

Undersampled MRI Reconstruction with Side Information-Guided Normalisation

Mar 07, 2022

Abstract: Magnetic resonance (MR) images exhibit various contrasts and appearances based on factors such as different acquisition protocols, views, manufacturers, scanning parameters, etc. This generally accessible appearance-related side information affects deep learning-based undersampled magnetic resonance imaging (MRI) reconstruction frameworks, but has been overlooked in the majority of current works. In this paper, we investigate the use of such side information as normalisation parameters in a convolutional neural network (CNN) to improve undersampled MRI reconstruction. Specifically, a Side Information-Guided Normalisation (SIGN) module, containing only a few layers, is proposed to efficiently encode the side information and output the normalisation parameters. We examine the effectiveness of such a module on two popular reconstruction architectures, D5C5 and OUCR. The experimental results on both brain and knee images under various acceleration rates demonstrate that the proposed method improves on its corresponding baseline architectures by a significant margin.

Deep Simultaneous Optimisation of Sampling and Reconstruction for Multi-contrast MRI

Mar 31, 2021

Abstract: MRI images of the same subject in different contrasts contain shared information, such as the anatomical structure. Utilizing the redundant information amongst the contrasts to sub-sample and faithfully reconstruct multi-contrast images could greatly accelerate the imaging speed, improve image quality and shorten scanning protocols. We propose an algorithm that generates the optimised sampling pattern and reconstruction scheme of one contrast (e.g. T2-weighted image) when images with a different contrast (e.g. T1-weighted image) have been acquired. The proposed algorithm achieves increased PSNR and SSIM with the resulting optimal sampling pattern compared to other acquisition patterns and single-contrast methods.
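
For readers unfamiliar with the PSNR metric reported above, here is a minimal sketch of the standard definition, assuming images are given as flattened lists of intensities scaled to a known `data_range`. The function name, argument names, and sample values are illustrative and are not taken from the paper.

```python
import math


def psnr(reference, reconstruction, data_range=1.0):
    """Peak signal-to-noise ratio in dB between two flattened images."""
    if not reference or len(reference) != len(reconstruction):
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels.
    mse = sum((r - x) ** 2 for r, x in zip(reference, reconstruction)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(data_range ** 2 / mse)


# Illustrative 2x2 image, flattened, with intensities in [0, 1].
reference = [0.0, 0.5, 1.0, 0.5]
reconstruction = [0.1, 0.5, 0.9, 0.5]
score = psnr(reference, reconstruction)
```

A higher value means the reconstruction is closer to the fully sampled reference; SSIM, the other metric mentioned, compares local structure rather than pixel-wise error and is not covered by this sketch.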

Universal Undersampled MRI Reconstruction

Mar 09, 2021

Abstract: Deep neural networks have been extensively studied for undersampled MRI reconstruction. While achieving state-of-the-art performance, they are trained and deployed specifically for one anatomy, with limited ability to generalize to another anatomy. Rather than building multiple models, a universal model that reconstructs images across different anatomies is highly desirable for efficient deployment and better generalization. Simply mixing images from multiple anatomies to train a single network does not lead to an ideal universal model, due to the statistical shift among datasets of various anatomies, the need to retrain from scratch on all datasets whenever a new dataset is added, and the difficulty of dealing with imbalanced sampling when the new dataset is also smaller. In this paper, for the first time, we propose a framework to learn a universal deep neural network for undersampled MRI reconstruction. Specifically, anatomy-specific instance normalization is proposed to compensate for statistical shift and allow easy generalization to new datasets. Moreover, the universal model is trained by distilling knowledge from available independent models to further exploit representations across anatomies. Experimental results show the proposed universal model can reconstruct both brain and knee images with high image quality. Also, it is easy to adapt the trained model to new datasets of smaller size, i.e., abdomen, cardiac and prostate, with little effort and superior performance.
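
The idea of anatomy-specific instance normalization can be sketched as follows: the normalisation statistics are computed per image as usual, while the scale and shift are looked up per anatomy. This is a simplified, framework-free illustration on a single flattened feature channel. The class name, the `gamma`/`beta` layout, and the `add_anatomy` method are assumptions, and in a real network these parameters would be learned rather than set by hand.

```python
import math


class AnatomySpecificInstanceNorm:
    """Instance normalisation with one (gamma, beta) pair per anatomy.
    Hypothetical sketch; not the paper's implementation."""

    def __init__(self, anatomies, eps=1e-5):
        self.eps = eps
        self.params = {name: {"gamma": 1.0, "beta": 0.0} for name in anatomies}

    def add_anatomy(self, name):
        # A new dataset only needs a new parameter pair;
        # the parameters of existing anatomies are untouched.
        self.params[name] = {"gamma": 1.0, "beta": 0.0}

    def __call__(self, channel, anatomy):
        # Per-instance statistics over one flattened feature channel.
        mean = sum(channel) / len(channel)
        var = sum((v - mean) ** 2 for v in channel) / len(channel)
        p = self.params[anatomy]
        scale = p["gamma"] / math.sqrt(var + self.eps)
        return [scale * (v - mean) + p["beta"] for v in channel]


norm = AnatomySpecificInstanceNorm(["brain", "knee"])
norm.params["knee"] = {"gamma": 2.0, "beta": 1.0}  # stand-in for learned values
brain_out = norm([1.0, 2.0, 3.0, 4.0], "brain")
knee_out = norm([1.0, 2.0, 3.0, 4.0], "knee")
norm.add_anatomy("prostate")
```

Keeping the convolutional weights shared and isolating the anatomy dependence in a small set of normalisation parameters is what would make adaptation to a new, smaller dataset cheap under this reading of the abstract.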

Extraction of Behavioral Features from Smartphone and Wearable Data

Jan 09, 2019

Abstract: The rich set of sensors in smartphones and wearable devices provides the possibility to passively collect streams of data in the wild. The raw data streams, however, can rarely be directly used in the modeling pipeline. We provide a generic framework that can process raw data streams and extract useful features related to non-verbal human behavior. This framework can be used by researchers in the field who are interested in processing data from smartphones and wearable devices.
