Afsaneh Doryab

Improving and Accelerating Offline RL in Large Discrete Action Spaces with Structured Policy Initialization

Jan 07, 2026

Abstract:Reinforcement learning in discrete combinatorial action spaces requires searching over exponentially many joint actions to simultaneously select multiple sub-actions that form coherent combinations. Existing approaches either simplify policy learning by assuming independence across sub-actions, which often yields incoherent or invalid actions, or attempt to learn action structure and control jointly, which is slow and unstable. We introduce Structured Policy Initialization (SPIN), a two-stage framework that first pre-trains an Action Structure Model (ASM) to capture the manifold of valid actions, then freezes this representation and trains lightweight policy heads for control. On challenging discrete DM Control benchmarks, SPIN improves average return by up to 39% over the state of the art while reducing time to convergence by up to 12.8$\times$.

SAINT: Attention-Based Modeling of Sub-Action Dependencies in Multi-Action Policies

May 17, 2025

Abstract:The combinatorial structure of many real-world action spaces leads to exponential growth in the number of possible actions, limiting the effectiveness of conventional reinforcement learning algorithms. Recent approaches for combinatorial action spaces impose factorized or sequential structures over sub-actions, failing to capture complex joint behavior. We introduce the Sub-Action Interaction Network using Transformers (SAINT), a novel policy architecture that represents multi-component actions as unordered sets and models their dependencies via self-attention conditioned on the global state. SAINT is permutation-invariant, sample-efficient, and compatible with standard policy optimization algorithms. In 15 distinct combinatorial environments across three task domains, including environments with nearly 17 million joint actions, SAINT consistently outperforms strong baselines.

Pairwise Spatiotemporal Partial Trajectory Matching for Co-movement Analysis

Dec 03, 2024

Abstract:Spatiotemporal pairwise movement analysis involves identifying shared geographic-based behaviors between individuals within specific time frames. Traditionally, this task relies on sequence modeling and behavior analysis techniques applied to tabular or video-based data, but these methods often lack interpretability and struggle to capture partial matching. In this paper, we propose a novel method for pairwise spatiotemporal partial trajectory matching that transforms tabular spatiotemporal data into interpretable trajectory images based on specified time windows, allowing for partial trajectory analysis. This approach includes localization of trajectories, checking for spatial overlap, and pairwise matching using a Siamese Neural Network. We evaluate our method on a co-walking classification task, demonstrating its effectiveness in a novel co-behavior identification application. Our model surpasses established methods, achieving an F1-score up to 0.73. Additionally, we explore the method's utility for pair routine pattern analysis in real-world scenarios, providing insights into the frequency, timing, and duration of shared behaviors. This approach offers a powerful, interpretable framework for spatiotemporal behavior analysis, with potential applications in social behavior research, urban planning, and healthcare.

Detection of Racial Bias from Physiological Responses

Feb 02, 2021

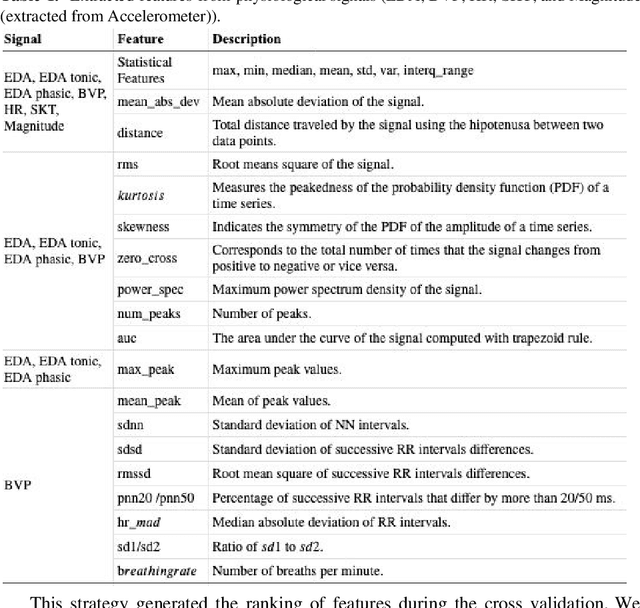

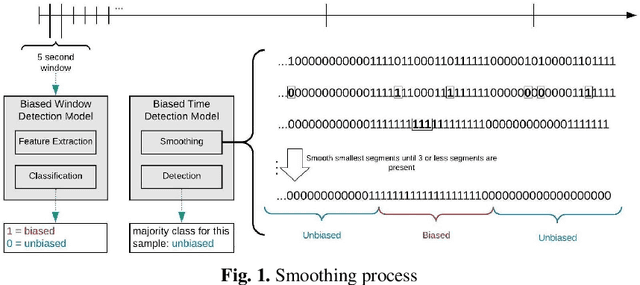

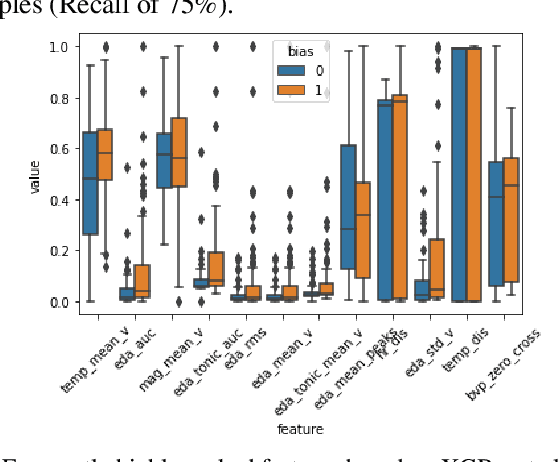

Abstract:Despite the evolution of norms and regulations to mitigate the harm from biases, harmful discrimination linked to an individual's unconscious biases persists. Our goal is to better understand and detect the physiological and behavioral indicators of implicit biases. This paper investigates whether we can reliably detect racial bias from physiological responses, including heart rate, conductive skin response, skin temperature, and micro-body movements. We analyzed data from 46 subjects whose physiological data were collected with an Empatica E4 wristband while they took an Implicit Association Test (IAT). Our machine learning and statistical analyses show that implicit bias can be predicted from physiological signals with 76.1% accuracy. Our results also show that the EDA signal associated with skin response has the strongest correlation with racial bias and that there are significant differences between the values of EDA features for biased and unbiased participants.

HHAR-net: Hierarchical Human Activity Recognition using Neural Networks

Nov 10, 2020

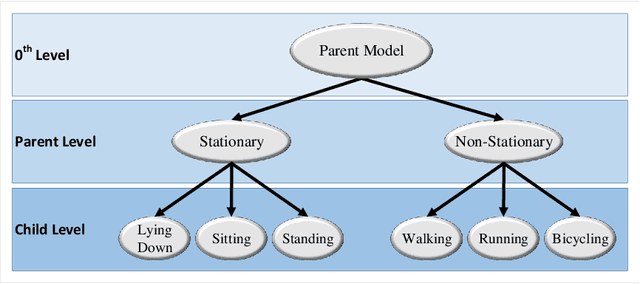

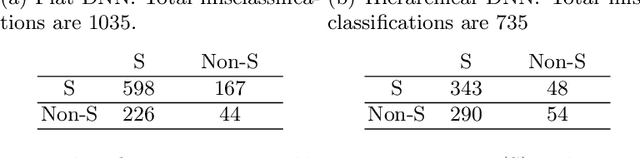

Abstract:Activity recognition using built-in sensors in smart and wearable devices provides great opportunities to understand and detect human behavior in the wild and gives a more holistic view of individuals' health and well-being. Numerous computational methods have been applied to sensor streams to recognize different daily activities. However, most methods are unable to capture the different layers of activities concealed in human behavior. Also, the performance of the models starts to decrease as the number of activities increases. This research aims at building a hierarchical classification with Neural Networks to recognize human activities based on different levels of abstraction. We evaluate our model on the Extrasensory dataset, a dataset collected in the wild and containing data from smartphones and smartwatches. We use a two-level hierarchy with a total of six mutually exclusive labels, namely "lying down", "sitting", "standing in place", "walking", "running", and "bicycling", divided into "stationary" and "non-stationary". The results show that our model can recognize low-level activities (stationary/non-stationary) with 95.8% accuracy and achieves an overall accuracy of 92.8% over six labels. This is 3% above our best performing baseline.
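
The two-level hierarchy described in this abstract can be illustrated with a minimal sketch. It is not the HHAR-net implementation: the assignment of the six labels to the two parent classes is an assumption inferred from the label names, `predict_hierarchical` is a hypothetical helper, and the probabilities are made-up placeholders standing in for neural network outputs.

```python
from typing import Dict, List, Tuple

# Assumed grouping of the six labels named in the abstract (not confirmed by the source).
HIERARCHY: Dict[str, List[str]] = {
    "stationary": ["lying down", "sitting", "standing in place"],
    "non-stationary": ["walking", "running", "bicycling"],
}


def predict_hierarchical(
    parent_probs: Dict[str, float],
    child_probs: Dict[str, Dict[str, float]],
) -> Tuple[str, str]:
    """Pick the most likely parent class, then the most likely label within it."""
    parent = max(parent_probs, key=parent_probs.get)
    child = max(HIERARCHY[parent], key=lambda label: child_probs[parent][label])
    return parent, child


# Placeholder scores; in practice these would come from trained classifiers.
parent_probs = {"stationary": 0.2, "non-stationary": 0.8}
child_probs = {
    "stationary": {"lying down": 0.5, "sitting": 0.3, "standing in place": 0.2},
    "non-stationary": {"walking": 0.6, "running": 0.3, "bicycling": 0.1},
}
result = predict_hierarchical(parent_probs, child_probs)
```

Restricting the second decision to the children of the chosen parent means each child-level classifier only has to separate three labels rather than six.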

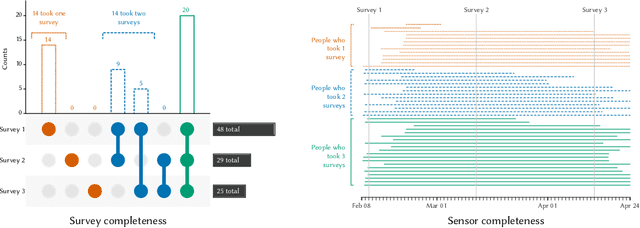

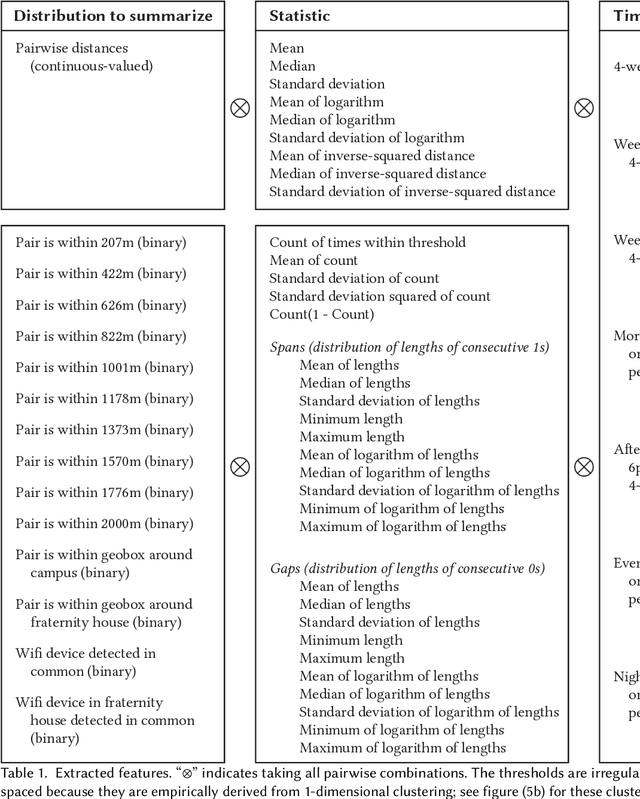

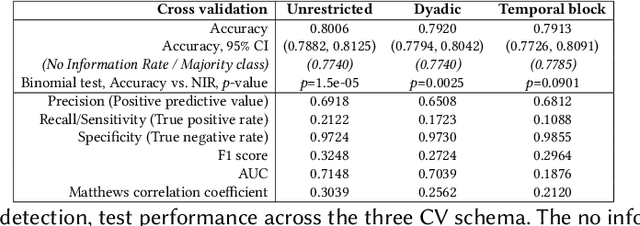

Can Smartphone Co-locations Detect Friendship? It Depends How You Model It

Aug 31, 2020

Abstract:We present a study to detect friendship, its strength, and its change from smartphone location data collected among members of a fraternity. We extract a rich set of co-location features and build classifiers that detect friendships and close friendship at 30% above a random baseline. We design cross-validation schema to test our model performance in specific application settings, finding it robust to seeing new dyads and to temporal variance.

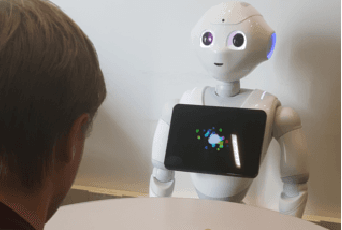

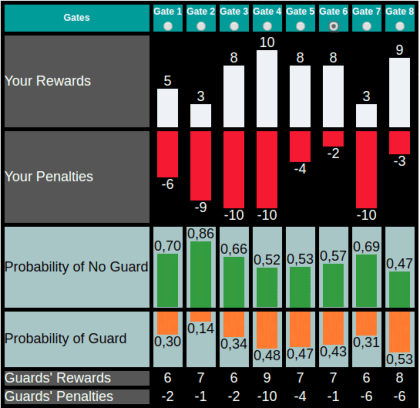

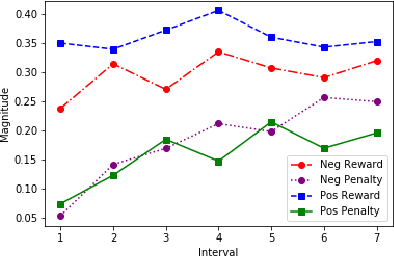

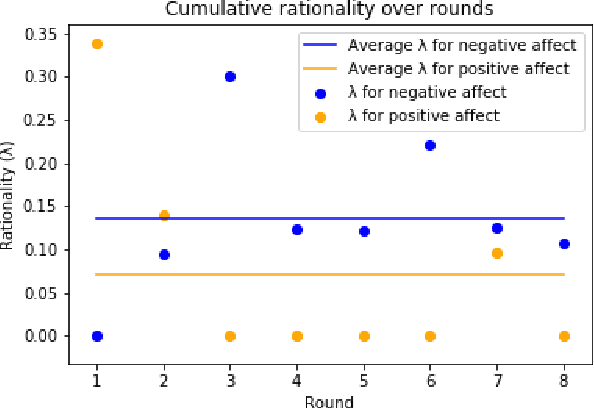

A Robot's Expressive Language Affects Human Strategy and Perceptions in a Competitive Game

Oct 24, 2019

Abstract:As robots are increasingly endowed with social and communicative capabilities, they will interact with humans in more settings, both collaborative and competitive. We explore human-robot relationships in the context of a competitive Stackelberg Security Game. We vary humanoid robot expressive language (in the form of "encouraging" or "discouraging" verbal commentary) and measure the impact on participants' rationality, strategy prioritization, mood, and perceptions of the robot. We learn that a robot opponent that makes discouraging comments causes a human to play a game less rationally and to perceive the robot more negatively. We also contribute a simple open source Natural Language Processing framework for generating expressive sentences, which was used to generate the speech of our autonomous social robot.

* RO-MAN 2019; 8 pages, 4 figures, 1 table

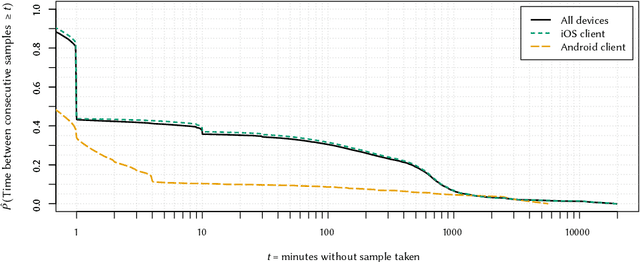

Extraction of Behavioral Features from Smartphone and Wearable Data

Jan 09, 2019

Abstract:The rich set of sensors in smartphones and wearable devices provides the possibility to passively collect streams of data in the wild. The raw data streams, however, can rarely be directly used in the modeling pipeline. We provide a generic framework that can process raw data streams and extract useful features related to non-verbal human behavior. This framework can be used by researchers in the field who are interested in processing data from smartphones and wearable devices.

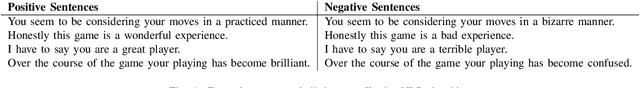

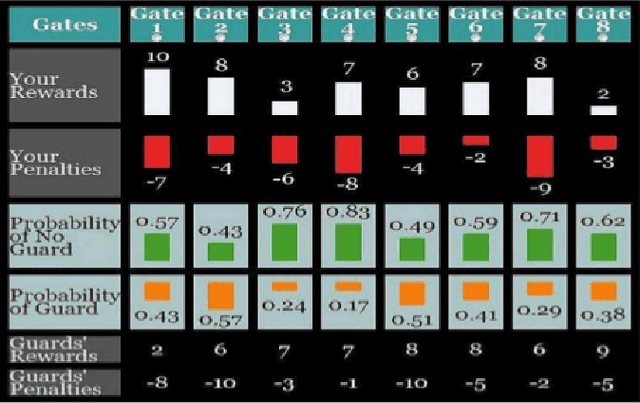

The Impact of Humanoid Affect Expression on Human Behavior in a Game-Theoretic Setting

Jun 10, 2018

Abstract:With the rapid development of robots and other intelligent and autonomous agents, how a human could be influenced by a robot's expressed mood when making decisions becomes a crucial question in human-robot interaction. In this pilot study, we investigate (1) in what way a robot can express a certain mood to influence a human's decision-making behavioral model; (2) how and to what extent the human will be influenced in a game-theoretic setting. More specifically, we create an NLP model to generate sentences that adhere to a specific affective expression profile. We use these sentences for a humanoid robot as it plays a Stackelberg security game against a human. We investigate the behavioral model of the human player.
