Wenyan Yang

GCHR : Goal-Conditioned Hindsight Regularization for Sample-Efficient Reinforcement Learning

Aug 08, 2025

Abstract: Goal-conditioned reinforcement learning (GCRL) with sparse rewards remains a fundamental challenge in reinforcement learning. While hindsight experience replay (HER) has shown promise by relabeling collected trajectories with achieved goals, we argue that trajectory relabeling alone does not fully exploit the available experiences in off-policy GCRL methods, resulting in limited sample efficiency. In this paper, we propose Hindsight Goal-conditioned Regularization (HGR), a technique that generates action regularization priors based on hindsight goals. When combined with hindsight self-imitation regularization (HSR), our approach enables off-policy RL algorithms to maximize experience utilization. Compared to existing GCRL methods that employ HER and self-imitation techniques, our hindsight regularizations achieve substantially more efficient sample reuse and the best performance, which we empirically demonstrate on a suite of navigation and manipulation tasks.
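The relabeling step that the abstract attributes to HER can be illustrated with a minimal sketch. It shows only the commonly used "future" relabeling strategy, not the HGR or HSR regularizers proposed in the paper. The `Transition` layout, the `sparse_reward` convention (0 on success, -1 otherwise), and the parameter `k` are assumptions made for illustration.

```python
import random
from typing import List, NamedTuple, Optional, Tuple

Vec = Tuple[float, ...]


class Transition(NamedTuple):
    # Hypothetical transition layout, chosen for this sketch only.
    state: Vec
    action: int
    achieved_goal: Vec  # goal actually reached after taking the action
    goal: Vec           # goal the agent was pursuing when acting
    reward: float


def sparse_reward(achieved: Vec, goal: Vec, tol: float = 1e-6) -> float:
    """Assumed sparse reward: 0.0 if the goal is reached, -1.0 otherwise."""
    dist = sum((a - g) ** 2 for a, g in zip(achieved, goal)) ** 0.5
    return 0.0 if dist <= tol else -1.0


def relabel_future(
    trajectory: List[Transition],
    k: int = 4,
    rng: Optional[random.Random] = None,
) -> List[Transition]:
    """Create k relabeled copies of each transition.

    Each copy replaces the original goal with a goal achieved at the same
    or a later step of the same trajectory, and recomputes the reward.
    """
    rng = rng or random.Random(0)
    relabeled = []
    for t, tr in enumerate(trajectory):
        for _ in range(k):
            future = rng.randrange(t, len(trajectory))  # index in [t, T-1]
            new_goal = trajectory[future].achieved_goal
            new_reward = sparse_reward(tr.achieved_goal, new_goal)
            relabeled.append(tr._replace(goal=new_goal, reward=new_reward))
    return relabeled
```

Relabeled transitions would typically be added to the replay buffer alongside the originals, so that a failed trajectory still provides successful examples for the goals it actually reached.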

Smoothing Slot Attention Iterations and Recurrences

Aug 07, 2025

Abstract: Slot Attention (SA) and its variants lie at the heart of mainstream Object-Centric Learning (OCL). Objects in an image can be aggregated into respective slot vectors by *iteratively* refining cold-start query vectors, typically three times, via SA on image features. For video, such aggregation is *recurrently* shared across frames, with queries cold-started on the first frame and transitioned from the previous frame's slots on non-first frames. However, the cold-start queries lack sample-specific cues and thus hinder precise aggregation on the image or the video's first frame. Also, non-first frames' queries are already sample-specific and thus require transforms different from the first frame's aggregation. We address these issues for the first time with our *SmoothSA*: (1) To smooth SA iterations on the image or the video's first frame, we *preheat* the cold-start queries with rich information from the input features, via a tiny module self-distilled inside OCL; (2) To smooth SA recurrences across all video frames, we *differentiate* the homogeneous transforms on the first and non-first frames, by using full and single iterations respectively. Comprehensive experiments on object discovery, recognition and downstream benchmarks validate our method's effectiveness. Further analyses intuitively illuminate how our method smooths SA iterations and recurrences. Our code is available in the supplement.

Symbolically-Guided Visual Plan Inference from Uncurated Video Data

May 13, 2025

Abstract: Visual planning, by offering a sequence of intermediate visual subgoals to a goal-conditioned low-level policy, achieves promising performance on long-horizon manipulation tasks. To obtain the subgoals, existing methods typically resort to video generation models but suffer from model hallucination and computational cost. We present Vis2Plan, an efficient, explainable and white-box visual planning framework powered by symbolic guidance. From raw, unlabeled play data, Vis2Plan harnesses vision foundation models to automatically extract a compact set of task symbols, which allows building a high-level symbolic transition graph for multi-goal, multi-stage planning. At test time, given a desired task goal, our planner conducts planning at the symbolic level and assembles a sequence of physically consistent intermediate sub-goal images grounded by the underlying symbolic representation. Our Vis2Plan outperforms strong diffusion video generation-based visual planners by delivering 53% higher aggregate success rate in real robot settings while generating visual plans 35× faster. The results indicate that Vis2Plan is able to generate physically consistent image goals while offering fully inspectable reasoning steps.

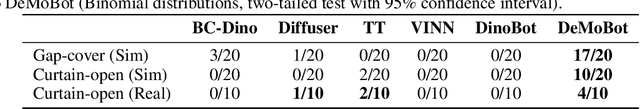

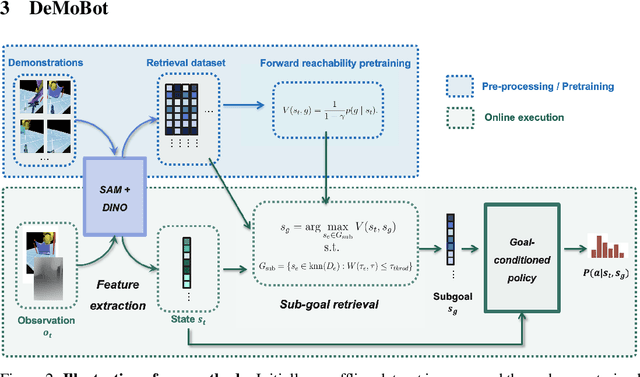

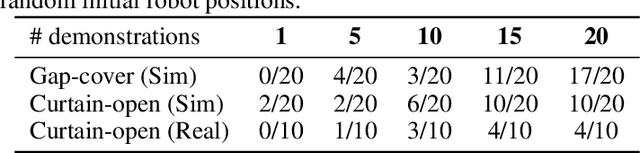

DeMoBot: Deformable Mobile Manipulation with Vision-based Sub-goal Retrieval

Aug 28, 2024

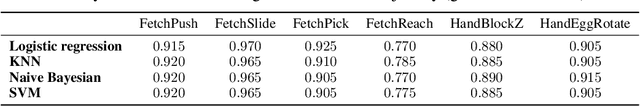

Abstract: Imitation learning (IL) algorithms typically distill experience into parametric behavior policies to mimic expert demonstrations. Despite their effectiveness, previous methods often struggle with data efficiency and accurately aligning the current state with expert demonstrations, especially in deformable mobile manipulation tasks characterized by partial observations and dynamic object deformations. In this paper, we introduce **DeMoBot**, a novel IL approach that directly retrieves observations from demonstrations to guide robots in **De**formable **Mo**bile manipulation tasks. DeMoBot utilizes vision foundation models to identify relevant expert data based on visual similarity and matches the current trajectory with demonstrated trajectories using trajectory similarity and forward reachability constraints to select suitable sub-goals. Once a goal is determined, a motion generation policy will guide the robot to the next state until the task is completed. We evaluated DeMoBot using a Spot robot in several simulated and real-world settings, demonstrating its effectiveness and generalizability. With only 20 demonstrations, DeMoBot significantly outperforms the baselines, reaching a 50% success rate in curtain opening and 85% in gap covering in simulation.

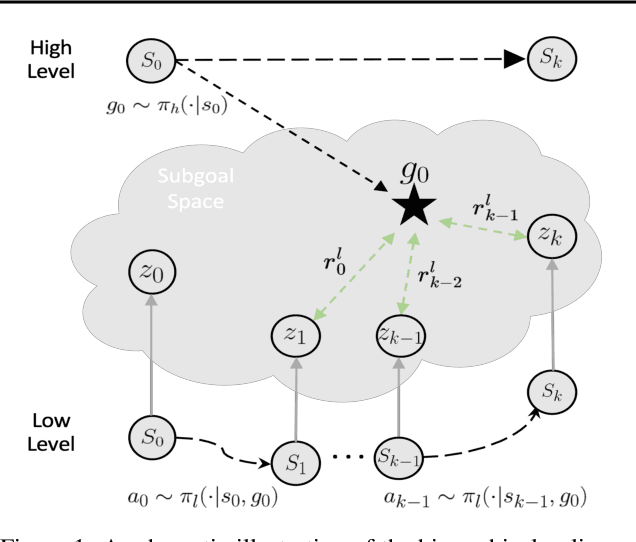

Probabilistic Subgoal Representations for Hierarchical Reinforcement learning

Jun 24, 2024

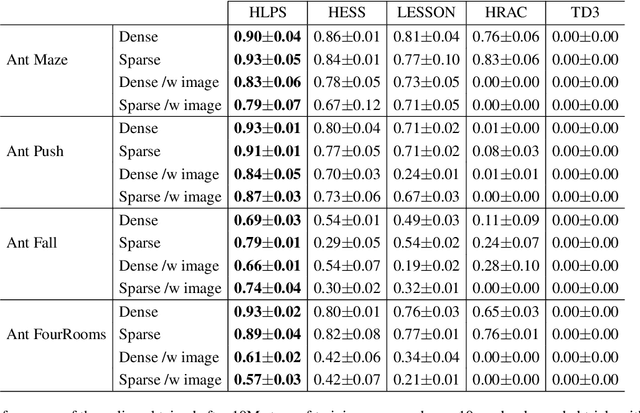

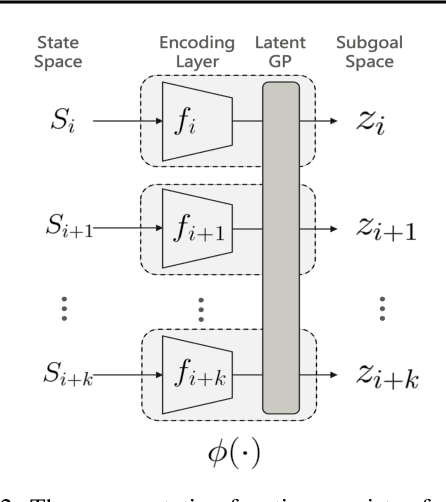

Abstract: In goal-conditioned hierarchical reinforcement learning (HRL), a high-level policy specifies a subgoal for the low-level policy to reach. Effective HRL hinges on a suitable subgoal representation function, abstracting state space into latent subgoal space and inducing varied low-level behaviors. Existing methods adopt a subgoal representation that provides a deterministic mapping from state space to latent subgoal space. Instead, this paper utilizes Gaussian Processes (GPs) for the first probabilistic subgoal representation. Our method employs a GP prior on the latent subgoal space to learn a posterior distribution over the subgoal representation functions while exploiting the long-range correlation in the state space through learnable kernels. This enables an adaptive memory that integrates long-range subgoal information from prior planning steps, allowing it to cope with stochastic uncertainties. Furthermore, we propose a novel learning objective to facilitate the simultaneous learning of probabilistic subgoal representations and policies within a unified framework. In experiments, our approach outperforms state-of-the-art baselines not only in standard benchmarks but also in environments with stochastic elements and under diverse reward conditions. Additionally, our model shows promising capabilities in transferring low-level policies across different tasks.

Seq2Seq Imitation Learning for Tactile Feedback-based Manipulation

Mar 05, 2023

Abstract: Robot control for tactile feedback-based manipulation can be difficult due to the modeling of physical contacts, partial observability of the environment, and noise in perception and control. This work focuses on solving partial observability of contact-rich manipulation tasks as a Sequence-to-Sequence (Seq2Seq) Imitation Learning (IL) problem. The proposed Seq2Seq model produces a robot-environment interaction sequence to estimate the partially observable environment state variables. Then, the observed interaction sequence is transformed to a control sequence for the task itself. The proposed Seq2Seq IL for tactile feedback-based manipulation is experimentally validated on a door-open task in a simulated environment and a snap-on insertion task with a real robot. The model is able to learn both tasks from only 50 expert demonstrations, while state-of-the-art reinforcement learning and imitation learning methods fail.

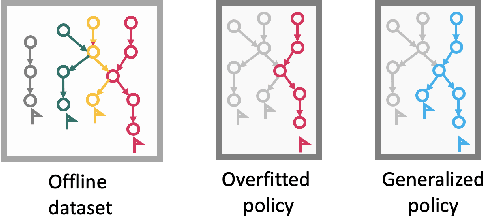

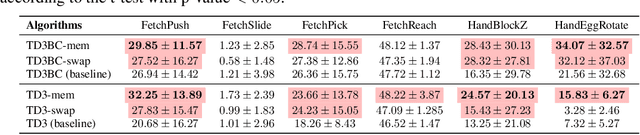

Swapped goal-conditioned offline reinforcement learning

Feb 17, 2023

Abstract: Offline goal-conditioned reinforcement learning (GCRL) can be challenging due to overfitting to the given dataset. To generalize agents' skills outside the given dataset, we propose a goal-swapping procedure that generates additional trajectories. To alleviate the problem of noise and extrapolation errors, we present a general offline reinforcement learning method called deterministic Q-advantage policy gradient (DQAPG). In the experiments, DQAPG outperforms state-of-the-art goal-conditioned offline RL methods in a wide range of benchmark tasks, and goal-swapping further improves the test results. Notably, the proposed method obtains good performance on the challenging dexterous in-hand manipulation tasks for which the prior methods failed.

Prioritized offline Goal-swapping Experience Replay

Feb 15, 2023

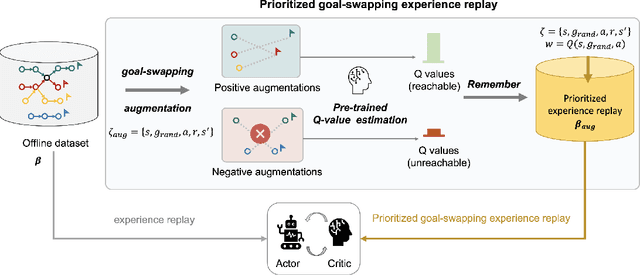

Abstract: In goal-conditioned offline reinforcement learning, an agent learns from previously collected data to go to an arbitrary goal. Since the offline data only contains a finite number of trajectories, a main challenge is how to generate more data. Goal-swapping generates additional data by switching trajectory goals, but doing so produces a large number of invalid trajectories. To address this issue, we propose prioritized goal-swapping experience replay (PGSER). PGSER uses a pre-trained Q function to assign higher priority weights to goal-swapped transitions that allow reaching the goal. In experiments, PGSER significantly improves over baselines in a wide range of benchmark tasks, including challenging dexterous in-hand manipulation tasks on which prior methods were unsuccessful.
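The idea of swapping goals and then weighting the swapped transitions with a Q function can be sketched roughly as follows. This is an illustration under stated assumptions, not the PGSER implementation: the abstract does not specify how Q-values map to priorities, so the softmax weighting, the `temperature` parameter, the `(state, action, goal)` tuple layout, and the function names are all hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Vec = Tuple[float, ...]
Transition = Tuple[Vec, int, Vec]  # (state, action, goal); assumed layout


def swap_goals(
    transitions: Sequence[Transition],
    goal_pool: Sequence[Vec],
    rng: random.Random,
) -> List[Transition]:
    """Replace each transition's goal with one drawn from other trajectories."""
    return [(s, a, rng.choice(goal_pool)) for (s, a, _) in transitions]


def priority_weights(
    transitions: Sequence[Transition],
    q_fn: Callable[[Vec, int, Vec], float],
    temperature: float = 1.0,
) -> List[float]:
    """Softmax over Q-values (an assumed scheme): higher Q, higher priority."""
    qs = [q_fn(s, a, g) for (s, a, g) in transitions]
    q_max = max(qs)  # subtract the max for numerical stability
    exps = [math.exp((q - q_max) / temperature) for q in qs]
    total = sum(exps)
    return [e / total for e in exps]


def sample_batch(
    transitions: Sequence[Transition],
    weights: Sequence[float],
    batch_size: int,
    rng: random.Random,
) -> List[Transition]:
    """Sample with replacement, proportionally to the priority weights."""
    return rng.choices(list(transitions), weights=list(weights), k=batch_size)
```

Under this scheme, a swapped transition whose new goal the pre-trained Q function considers unreachable receives a small weight and is rarely sampled, which is one way to suppress the invalid trajectories the abstract mentions.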

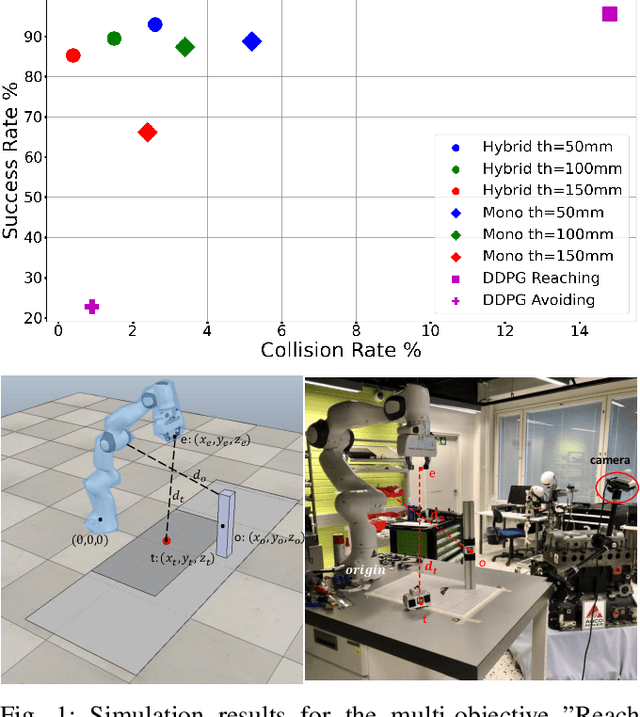

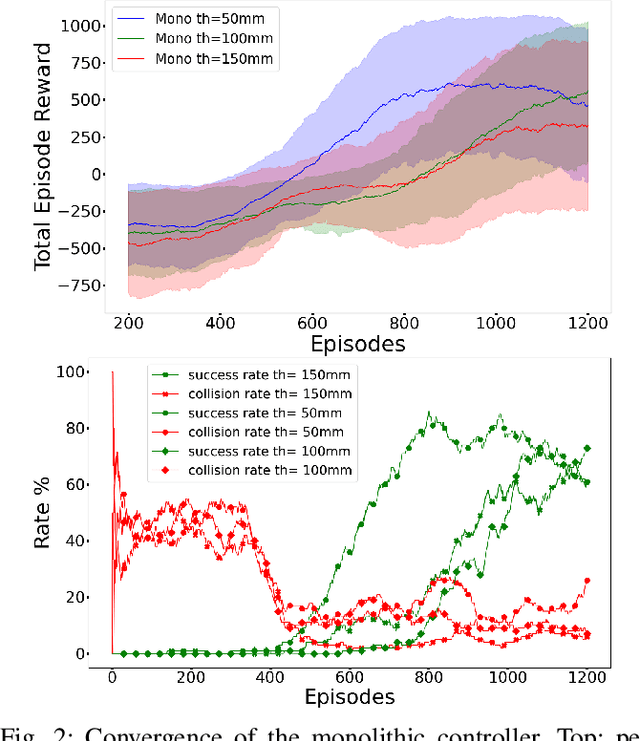

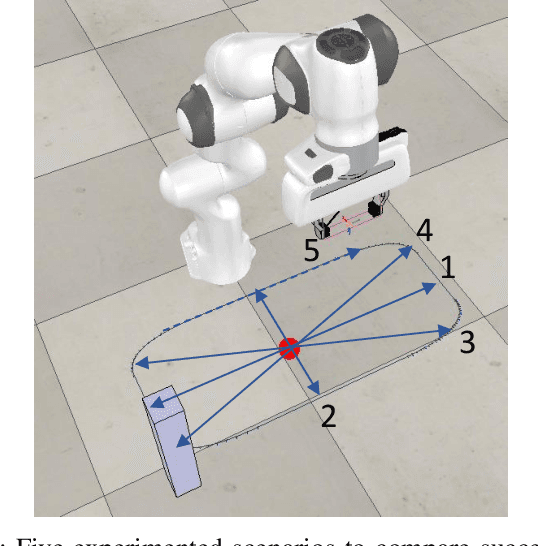

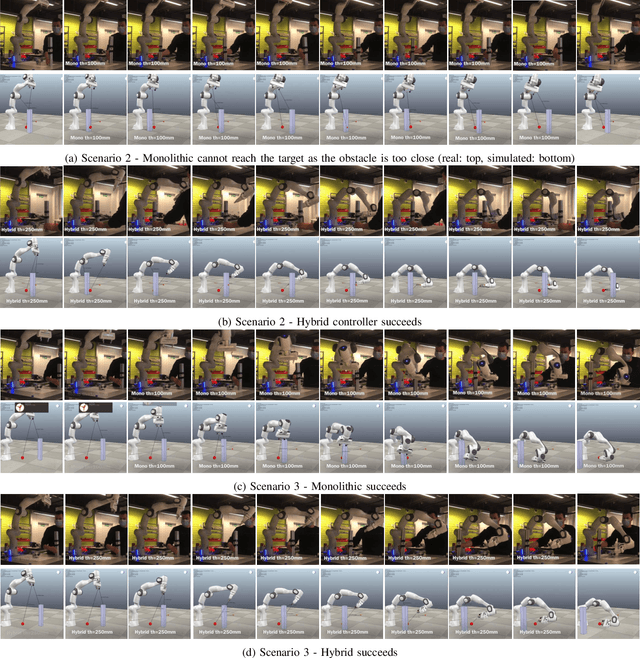

Monolithic vs. hybrid controller for multi-objective Sim-to-Real learning

Aug 17, 2021

Abstract: Simulation to real (Sim-to-Real) is an attractive approach to construct controllers for robotic tasks that are easier to simulate than to analytically solve. Working Sim-to-Real solutions have been demonstrated for tasks with a clear single objective such as "reach the target". Real-world applications, however, often consist of multiple simultaneous objectives such as "reach the target" but "avoid obstacles". A straightforward solution in the context of reinforcement learning (RL) is to combine multiple objectives into a multi-term reward function and train a single monolithic controller. Recently, a hybrid solution based on pre-trained single-objective controllers and a switching rule between them was proposed. In this work, we compare these two approaches in the multi-objective setting of a robot manipulator that must reach a target while avoiding an obstacle. Our findings show that a hybrid controller is easier to train and obtains a better success-failure trade-off than a monolithic controller. The controllers trained in simulation were verified on a real setup.

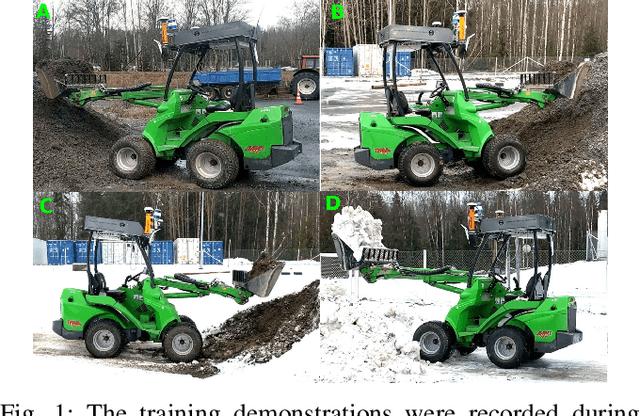

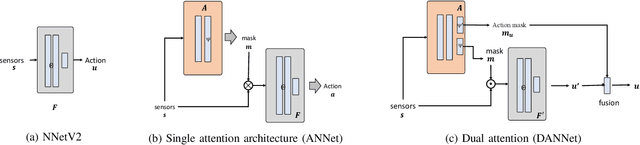

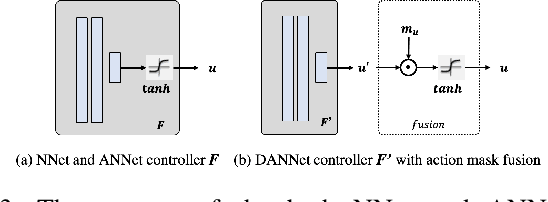

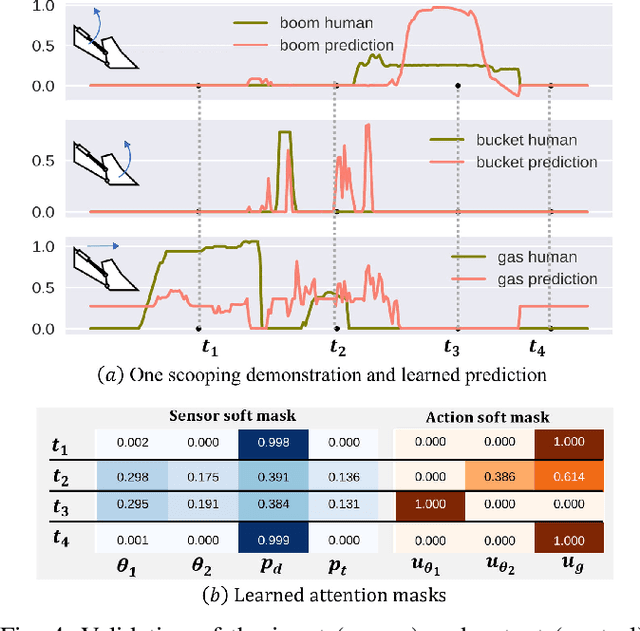

Neural Network Controller for Autonomous Pile Loading Revised

Mar 23, 2021

Abstract: We have recently proposed two pile loading controllers that learn from human demonstrations: a neural network (NNet) [1] and a random forest (RF) controller [2]. In the field experiments, the RF controller obtained clearly better success rates. In this work, the previous findings are drastically revised by testing controllers trained in summer under winter conditions. The winter experiments revealed a need for additional sensors, more training data, and a controller that can take advantage of these. Therefore, we propose a revised neural controller (NNetV2) which has a more expressive structure and uses a neural attention mechanism to focus on important parts of the sensor and control signals. Using the same data and sensors to train and test the three controllers, NNetV2 achieves better robustness against drastically changing conditions and a superior success rate. To the best of our knowledge, this is the first work testing a learning-based controller for a heavy-duty machine in drastically varying outdoor conditions and delivering a high success rate in winter after being trained in summer.
