Weisheng Dong

Towards Syn-to-Real IQA: A Novel Perspective on Reshaping Synthetic Data Distributions

Jan 01, 2026

Abstract:Blind Image Quality Assessment (BIQA) has advanced significantly through deep learning, but the scarcity of large-scale labeled datasets remains a challenge. While synthetic data offers a promising solution, models trained on existing synthetic datasets often show limited generalization ability. In this work, we make a key observation that representations learned from synthetic datasets often exhibit a discrete and clustered pattern that hinders regression performance: features of high-quality images cluster around reference images, while those of low-quality images cluster based on distortion types. Our analysis reveals that this issue stems from the distribution of synthetic data rather than model architecture. Consequently, we introduce a novel framework SynDR-IQA, which reshapes synthetic data distribution to enhance BIQA generalization. Based on theoretical derivations of sample diversity and redundancy's impact on generalization error, SynDR-IQA employs two strategies: distribution-aware diverse content upsampling, which enhances visual diversity while preserving content distribution, and density-aware redundant cluster downsampling, which balances samples by reducing the density of densely clustered areas. Extensive experiments across three cross-dataset settings (synthetic-to-authentic, synthetic-to-algorithmic, and synthetic-to-synthetic) demonstrate the effectiveness of our method. The code is available at https://github.com/Li-aobo/SynDR-IQA.
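The density-aware downsampling idea described above can be illustrated with a minimal sketch. This is not the SynDR-IQA implementation (the linked repository is the reference for that): the function name `density_aware_downsample`, the greedy thinning rule, and the `radius` and `max_neighbors` parameters are all assumptions chosen for illustration.

```python
import math

def density_aware_downsample(features, radius=0.5, max_neighbors=2):
    """Greedy thinning (illustrative assumption, not the paper's rule).

    A sample is kept only if fewer than `max_neighbors` already-kept
    samples lie within `radius` of it, so dense clusters are thinned
    while isolated samples survive.
    """
    kept = []
    for idx, feat in enumerate(features):
        close = sum(
            1 for j in kept if math.dist(feat, features[j]) <= radius
        )
        if close < max_neighbors:
            kept.append(idx)
    return kept

# Four tightly clustered feature vectors and one isolated vector.
features = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (5.0, 5.0)]
kept = density_aware_downsample(features)
print(kept)  # indices of the samples that survive thinning
```

In this toy input the dense cluster loses half of its members while the isolated sample is always retained, which is the balancing effect the abstract attributes to reducing the density of densely clustered areas.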

LoopExpose: An Unsupervised Framework for Arbitrary-Length Exposure Correction

Nov 08, 2025

Abstract:Exposure correction is essential for enhancing image quality under challenging lighting conditions. While supervised learning has achieved significant progress in this area, it relies heavily on large-scale labeled datasets, which are difficult to obtain in practical scenarios. To address this limitation, we propose a pseudo label-based unsupervised method called LoopExpose for arbitrary-length exposure correction. A nested loop optimization strategy is proposed to address the exposure correction problem, where the correction model and pseudo-supervised information are jointly optimized in a two-level framework. Specifically, the upper-level trains a correction model using pseudo-labels generated through multi-exposure fusion at the lower level. A feedback mechanism is introduced where corrected images are fed back into the fusion process to refine the pseudo-labels, creating a self-reinforcing learning loop. Considering the dominant role of luminance calibration in exposure correction, a Luminance Ranking Loss is introduced to leverage the relative luminance ordering across the input sequence as a self-supervised constraint. Extensive experiments on different benchmark datasets demonstrate that LoopExpose achieves superior exposure correction and fusion performance, outperforming existing state-of-the-art unsupervised methods. Code is available at https://github.com/FALALAS/LoopExpose.
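A ranking constraint on luminance ordering can be sketched as a pairwise hinge loss. The abstract does not give the exact form of the Luminance Ranking Loss, so the version below is an assumption: the function name `luminance_ranking_loss`, the `margin` parameter, and the use of one mean-luminance value per image are hypothetical, and the formulation in the LoopExpose repository may differ.

```python
def luminance_ranking_loss(mean_lums, margin=0.0):
    """Pairwise hinge ranking loss (illustrative assumption).

    `mean_lums` holds one mean-luminance value per image, listed in the
    order of the input exposure sequence (darkest input first). A pair
    (i, j) with i < j is penalized when image j is not brighter than
    image i by at least `margin`.
    """
    n = len(mean_lums)
    penalties = [
        max(0.0, margin - (mean_lums[j] - mean_lums[i]))
        for i in range(n)
        for j in range(i + 1, n)
    ]
    return sum(penalties) / len(penalties) if penalties else 0.0

ordered_loss = luminance_ranking_loss([0.2, 0.5, 0.8])   # ordering respected
violated_loss = luminance_ranking_loss([0.5, 0.2, 0.8])  # first pair swapped
print(ordered_loss, violated_loss)
```

The loss is zero when the luminance values follow the input ordering and grows with the size of each violation, so it needs no ground-truth images, only the known ordering of the exposure sequence.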

SeqAffordSplat: Scene-level Sequential Affordance Reasoning on 3D Gaussian Splatting

Jul 31, 2025

Abstract:3D affordance reasoning, the task of associating human instructions with the functional regions of 3D objects, is a critical capability for embodied agents. Current methods based on 3D Gaussian Splatting (3DGS) are fundamentally limited to single-object, single-step interactions, a paradigm that falls short of addressing the long-horizon, multi-object tasks required for complex real-world applications. To bridge this gap, we introduce the novel task of Sequential 3D Gaussian Affordance Reasoning and establish SeqAffordSplat, a large-scale benchmark featuring 1800+ scenes to support research on long-horizon affordance understanding in complex 3DGS environments. We then propose SeqSplatNet, an end-to-end framework that directly maps an instruction to a sequence of 3D affordance masks. SeqSplatNet employs a large language model that autoregressively generates text interleaved with special segmentation tokens, guiding a conditional decoder to produce the corresponding 3D mask. To handle complex scene geometry, we introduce a pre-training strategy, Conditional Geometric Reconstruction, where the model learns to reconstruct complete affordance region masks from known geometric observations, thereby building a robust geometric prior. Furthermore, to resolve semantic ambiguities, we design a feature injection mechanism that lifts rich semantic features from 2D Vision Foundation Models (VFM) and fuses them into the 3D decoder at multiple scales. Extensive experiments demonstrate that our method sets a new state-of-the-art on our challenging benchmark, effectively advancing affordance reasoning from single-step interactions to complex, sequential tasks at the scene level.

Controlled Data Rebalancing in Multi-Task Learning for Real-World Image Super-Resolution

Jun 05, 2025

Abstract:Real-world image super-resolution (Real-SR) is a challenging problem due to the complex degradation patterns in low-resolution images. Unlike approaches that assume a broadly encompassing degradation space, we focus specifically on achieving an optimal balance in how SR networks handle different degradation patterns within a fixed degradation space. We propose an improved paradigm that frames Real-SR as a data-heterogeneous multi-task learning problem. Our work addresses task imbalance in this paradigm through coordinated advancements in task definition, imbalance quantification, and adaptive data rebalancing. Specifically, we introduce a novel task definition framework that segments the degradation space by setting parameter-specific boundaries for degradation operators, effectively reducing the task quantity while maintaining task discrimination. We then develop a focal loss based multi-task weighting mechanism that precisely quantifies task imbalance dynamics during model training. Furthermore, to prevent sporadic outlier samples from dominating the gradient optimization of the shared multi-task SR model, we strategically convert the quantified task imbalance into controlled data rebalancing through deliberate regulation of task-specific training volumes. Extensive quantitative and qualitative experiments demonstrate that our method achieves consistent superiority across all degradation tasks.

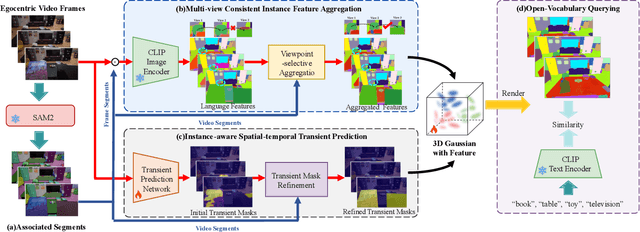

EgoSplat: Open-Vocabulary Egocentric Scene Understanding with Language Embedded 3D Gaussian Splatting

Mar 14, 2025

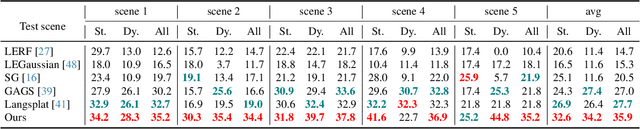

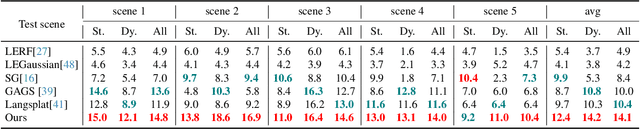

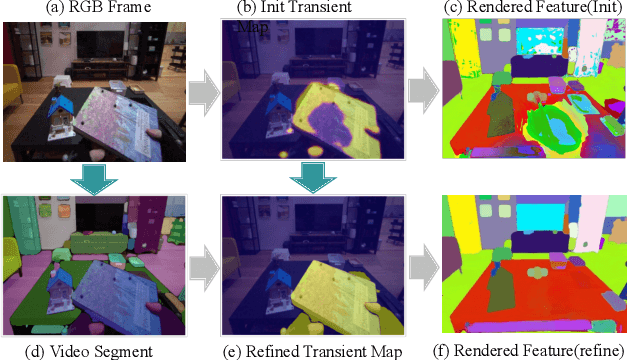

Abstract:Egocentric scenes exhibit frequent occlusions, varied viewpoints, and dynamic interactions compared to typical scene understanding tasks. Occlusions and varied viewpoints can lead to multi-view semantic inconsistencies, while dynamic objects may act as transient distractors, introducing artifacts into semantic feature modeling. To address these challenges, we propose EgoSplat, a language-embedded 3D Gaussian Splatting framework for open-vocabulary egocentric scene understanding. A multi-view consistent instance feature aggregation method is designed to leverage the segmentation and tracking capabilities of SAM2 to selectively aggregate complementary features across views for each instance, ensuring precise semantic representation of scenes. Additionally, an instance-aware spatial-temporal transient prediction module is constructed to improve spatial integrity and temporal continuity in predictions by incorporating spatial-temporal associations across multi-view instances, effectively reducing artifacts in the semantic reconstruction of egocentric scenes. EgoSplat achieves state-of-the-art performance in both localization and segmentation tasks on two datasets, outperforming existing methods with an 8.2% improvement in localization accuracy and a 3.7% improvement in segmentation mIoU on the ADT dataset, and setting a new benchmark in open-vocabulary egocentric scene understanding. The code will be made publicly available.

ERVD: An Efficient and Robust ViT-Based Distillation Framework for Remote Sensing Image Retrieval

Dec 24, 2024


Multi-Teacher Multi-Objective Meta-Learning for Zero-Shot Hyperspectral Band Selection

Jun 12, 2024

Abstract:Band selection plays a crucial role in hyperspectral image classification by removing redundant and noisy bands and retaining discriminative ones. However, most existing deep learning-based methods are aimed at dealing with a specific band selection dataset, and need to retrain parameters for new datasets, which significantly limits their generalizability. To address this issue, a novel multi-teacher multi-objective meta-learning network (M$^3$BS) is proposed for zero-shot hyperspectral band selection. In M$^3$BS, a generalizable graph convolution network (GCN) is constructed to generate a dataset-agnostic base and extract compatible meta-knowledge from multiple band selection tasks. To enhance the ability of meta-knowledge extraction, multiple band selection teachers are introduced to provide diverse high-quality experiences. Finally, subsequent classification tasks are attached and jointly optimized with multi-teacher band selection tasks through multi-objective meta-learning in an end-to-end trainable way. Multi-objective meta-learning coordinates diverse optimization objectives automatically and adapts to various datasets simultaneously. Once the optimization is accomplished, the acquired meta-knowledge can be directly transferred to unseen datasets without any retraining or fine-tuning. Experimental results demonstrate that our proposed method is effective and efficient, performing on par with state-of-the-art baselines for zero-shot hyperspectral band selection.

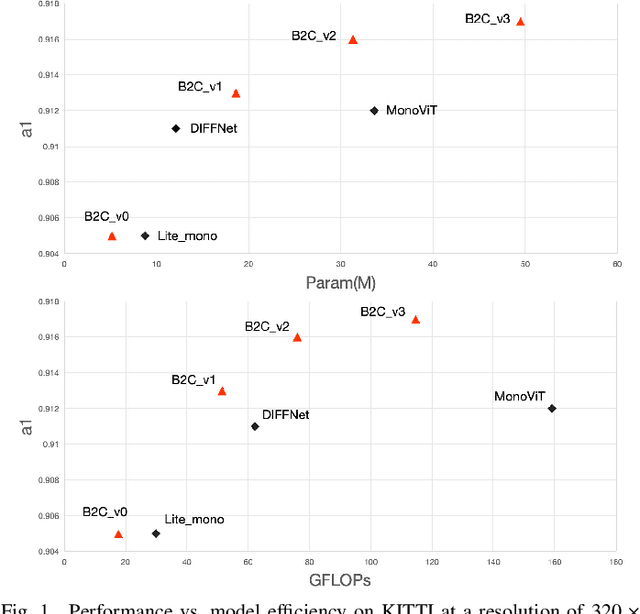

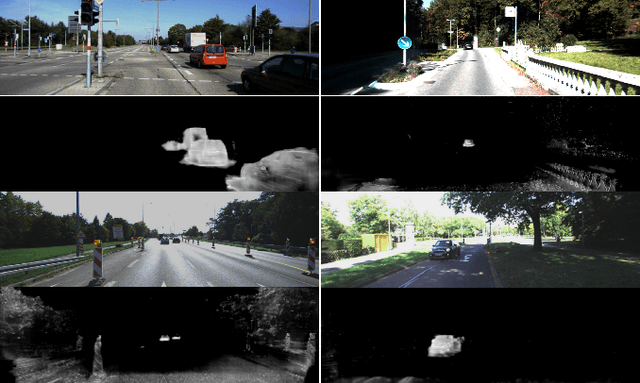

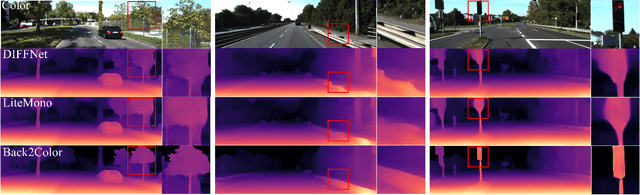

Back to the Color: Learning Depth to Specific Color Transformation for Unsupervised Depth Estimation

Jun 11, 2024

Abstract:Virtual engines have the capability to generate dense depth maps for various synthetic scenes, making them invaluable for training depth estimation models. However, synthetic colors often exhibit significant discrepancies compared to real-world colors, thereby posing challenges for depth estimation in real-world scenes, particularly in complex and uncertain environments encountered in unsupervised monocular depth estimation tasks. To address this issue, we propose Back2Color, a framework that predicts realistic colors from depth utilizing a model trained on real-world data, thus facilitating the transformation of synthetic colors into real-world counterparts. Additionally, by employing the Syn-Real CutMix method for joint training with both real-world unsupervised and synthetic supervised depth samples, we achieve improved performance in monocular depth estimation for real-world scenes. Moreover, to comprehensively address the impact of non-rigid motions on depth estimation, we propose an auto-learning uncertainty temporal-spatial fusion method (Auto-UTSF), which integrates the benefits of unsupervised learning in both temporal and spatial dimensions. Furthermore, we design a depth estimation network (VADepth) based on the Vision Attention Network. Our Back2Color framework demonstrates state-of-the-art performance, as evidenced by improvements in performance metrics and the production of fine-grained details in our predictions, particularly on challenging datasets such as Cityscapes for unsupervised depth estimation.

External Knowledge Enhanced 3D Scene Generation from Sketch

Mar 21, 2024

Abstract:Generating realistic 3D scenes is challenging due to the complexity of room layouts and object geometries. We propose a sketch-based, knowledge-enhanced diffusion architecture (SEK) for generating customized, diverse, and plausible 3D scenes. SEK conditions the denoising process with a hand-drawn sketch of the target scene and cues from an object relationship knowledge base. We first construct an external knowledge base containing object relationships and then leverage knowledge-enhanced graph reasoning to assist our model in understanding hand-drawn sketches. A scene is represented as a combination of 3D objects and their relationships, and then incrementally diffused to reach a Gaussian distribution. We propose a 3D denoising scene transformer that learns to reverse the diffusion process, conditioned by a hand-drawn sketch along with knowledge cues, to regressively generate the scene including the 3D object instances as well as their layout. Experiments on the 3D-FRONT dataset show that our model improves FID and CKL by 17.41% and 37.18%, respectively, in 3D scene generation, and FID and KID by 19.12% and 20.06%, respectively, in 3D scene completion, compared to the nearest competitor DiffuScene.

3D Object Detection from Point Cloud via Voting Step Diffusion

Mar 21, 2024

Abstract:3D object detection is a fundamental task in scene understanding. Numerous research efforts have been dedicated to better incorporate Hough voting into the 3D object detection pipeline. However, due to the noisy, cluttered, and partial nature of real 3D scans, existing voting-based methods often receive votes from the partial surfaces of individual objects together with severe noises, leading to sub-optimal detection performance. In this work, we focus on the distributional properties of point clouds and formulate the voting process as generating new points in the high-density region of the distribution of object centers. To achieve this, we propose a new method to move random 3D points toward the high-density region of the distribution by estimating the score function of the distribution with a noise conditioned score network. Specifically, we first generate a set of object center proposals to coarsely identify the high-density region of the object center distribution. To estimate the score function, we perturb the generated object center proposals by adding normalized Gaussian noise, and then jointly estimate the score function of all perturbed distributions. Finally, we generate new votes by moving random 3D points to the high-density region of the object center distribution according to the estimated score function. Extensive experiments on two large scale indoor 3D scene datasets, SUN RGB-D and ScanNet V2, demonstrate the superiority of our proposed method. The code will be released at https://github.com/HHrEtvP/DiffVote.
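The final voting step, moving random points toward high-density regions by following a score function, can be sketched under a strong simplification. Here the learned noise conditioned score network is replaced by the analytic score of an isotropic Gaussian mixture centered on a set of proposals. The names `mixture_score` and `move_to_density`, and the `sigma`, `step`, and `n_steps` parameters, are assumptions for illustration and do not come from the DiffVote code.

```python
import math

def mixture_score(x, centers, sigma):
    """Gradient of the log-density of an equal-weight isotropic Gaussian
    mixture at point x (analytic stand-in for a learned score network)."""
    sq = [sum((xi - ci) ** 2 for xi, ci in zip(x, c)) for c in centers]
    m = min(sq)  # subtract the minimum for numerical stability
    w = [math.exp(-(s - m) / (2 * sigma ** 2)) for s in sq]
    total = sum(w)
    return [
        sum(wk * (c[d] - x[d]) for wk, c in zip(w, centers))
        / (total * sigma ** 2)
        for d in range(len(x))
    ]

def move_to_density(x, centers, sigma=1.0, step=0.1, n_steps=200):
    """Deterministic gradient ascent along the score (no noise term)."""
    x = list(x)
    for _ in range(n_steps):
        s = mixture_score(x, centers, sigma)
        x = [xi + step * si for xi, si in zip(x, s)]
    return x

# Two hypothetical object-center proposals and one starting point.
centers = [(0.0, 0.0, 0.0), (10.0, 10.0, 10.0)]
vote = move_to_density((1.0, 2.0, 1.0), centers)
print(vote)  # ends very close to the nearer center
```

The starting point drifts to the nearest mode of the mixture, which mirrors the idea of generating votes in the high-density region of the object center distribution.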
