Yufan Zhu

A Personalised Learning Tool for Physics Undergraduate Students Built On a Large Language Model for Symbolic Regression

Jun 17, 2024

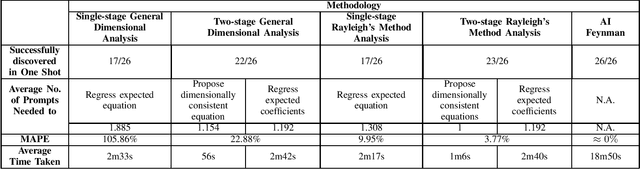

Abstract: Interleaved practice enhances the memory and problem-solving ability of students in undergraduate courses. We introduce a personalized learning tool built on a Large Language Model (LLM) that can provide immediate and personalized attention to students as they complete homework containing problems interleaved from undergraduate physics courses. Our tool leverages the dimensional analysis method, enhancing students' qualitative thinking and problem-solving skills for complex phenomena. Our approach combines LLMs for symbolic regression with dimensional analysis via prompt engineering and offers students a unique perspective to comprehend relationships between physics variables. This fosters a broader and more versatile understanding of physics and mathematical principles and complements a conventional undergraduate physics education that relies on interpreting and applying established equations within specific contexts. We test our personalized learning tool on the equations from the Feynman Lectures on Physics. Our tool can correctly identify relationships between physics variables for most equations, underscoring its value as a complementary personalized learning tool for undergraduate physics students.
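To make the dimensional analysis step concrete, here is a minimal sketch that recovers the exponents in a power-law relation from units alone. It is not the tool described in the abstract: the pendulum example, the helper name `solve_exponents`, and the choice of (mass, length, time) as base dimensions are all illustrative assumptions, and the solver assumes a square, non-singular system.

```python
from fractions import Fraction


def solve_exponents(dim_columns, target):
    """Solve sum_j x_j * dim_columns[j] == target with Gauss-Jordan elimination.

    Hypothetical helper: assumes as many variables as base dimensions and a
    non-singular system (otherwise the pivot search raises StopIteration).
    """
    n = len(target)
    # One augmented row per base dimension: [coefficients | target exponent].
    rows = [
        [Fraction(dim_columns[j][i]) for j in range(n)] + [Fraction(target[i])]
        for i in range(n)
    ]
    for col in range(n):
        # Find a row with a non-zero entry in this column and move it up.
        pivot = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        pivot_value = rows[col][col]
        rows[col] = [v / pivot_value for v in rows[col]]
        # Clear this column in every other row.
        for r in range(n):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    return [rows[i][n] for i in range(n)]


# Exponents of (mass, length, time) for each candidate variable.
variables = {
    "m": (1, 0, 0),   # bob mass, kg
    "L": (0, 1, 0),   # string length, m
    "g": (0, 1, -2),  # gravitational acceleration, m / s^2
}
target = (0, 0, 1)    # the period has units of time

names = list(variables)
exponents = dict(zip(names, solve_exponents([variables[k] for k in names], target)))
print(exponents)
```

The solution assigns exponent 0 to the mass, 1/2 to the length, and -1/2 to gravity, so the period scales as the square root of L/g. Dimensional analysis cannot fix the dimensionless prefactor, which is where a fitting step such as symbolic regression would come in.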

Back to the Color: Learning Depth to Specific Color Transformation for Unsupervised Depth Estimation

Jun 11, 2024

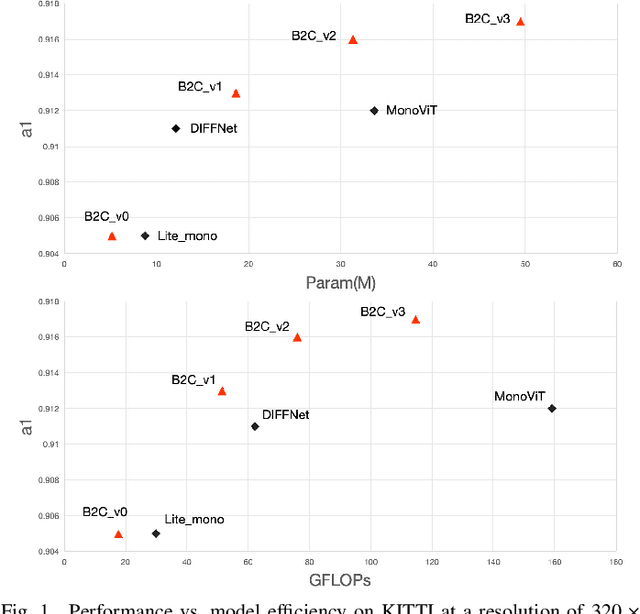

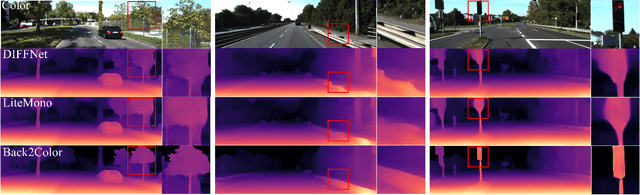

Abstract: Virtual engines have the capability to generate dense depth maps for various synthetic scenes, making them invaluable for training depth estimation models. However, synthetic colors often exhibit significant discrepancies compared to real-world colors, thereby posing challenges for depth estimation in real-world scenes, particularly in complex and uncertain environments encountered in unsupervised monocular depth estimation tasks. To address this issue, we propose Back2Color, a framework that predicts realistic colors from depth utilizing a model trained on real-world data, thus facilitating the transformation of synthetic colors into real-world counterparts. Additionally, by employing the Syn-Real CutMix method for joint training with both real-world unsupervised and synthetic supervised depth samples, we achieve improved performance in monocular depth estimation for real-world scenes. Moreover, to comprehensively address the impact of non-rigid motions on depth estimation, we propose an auto-learning uncertainty temporal-spatial fusion method (Auto-UTSF), which integrates the benefits of unsupervised learning in both temporal and spatial dimensions. Furthermore, we design a depth estimation network (VADepth) based on the Vision Attention Network. Our Back2Color framework demonstrates state-of-the-art performance, as evidenced by improvements in performance metrics and the production of fine-grained details in our predictions, particularly on challenging datasets such as Cityscapes for unsupervised depth estimation.
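The abstract does not spell out how Syn-Real CutMix works, so the following is only a sketch of the generic CutMix idea it builds on: paste a rectangular patch from one image into another and keep a mask recording where each pixel came from. The function name `cutmix`, the explicit box arguments, and the use of nested lists for images are illustrative assumptions, not the authors' implementation.

```python
def cutmix(real, synthetic, top, left, height, width):
    """Paste a box from `synthetic` into a copy of `real`.

    Returns (mixed, mask), where mask[r][c] is 1 for pixels taken from the
    synthetic image and 0 for pixels kept from the real image. In a joint
    training setup, such a mask could select which pixels receive a
    supervised depth loss (synthetic) versus an unsupervised one (real).
    """
    rows, cols = len(real), len(real[0])
    assert len(synthetic) == rows and len(synthetic[0]) == cols, "shape mismatch"
    assert 0 <= top and top + height <= rows, "box exceeds image height"
    assert 0 <= left and left + width <= cols, "box exceeds image width"

    mixed = [row[:] for row in real]          # copy so the input stays intact
    mask = [[0] * cols for _ in range(rows)]
    for r in range(top, top + height):
        mixed[r][left:left + width] = synthetic[r][left:left + width]
        mask[r][left:left + width] = [1] * width
    return mixed, mask


# Toy 4x4 single-channel "images": real is all 0.0, synthetic is all 1.0.
real_image = [[0.0] * 4 for _ in range(4)]
synthetic_image = [[1.0] * 4 for _ in range(4)]
mixed_image, source_mask = cutmix(real_image, synthetic_image, top=1, left=1, height=2, width=2)
```

In practice the box would usually be sampled at random per training example; it is passed explicitly here so the behaviour is easy to check.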

Robust Depth Completion with Uncertainty-Driven Loss Functions

Dec 28, 2021

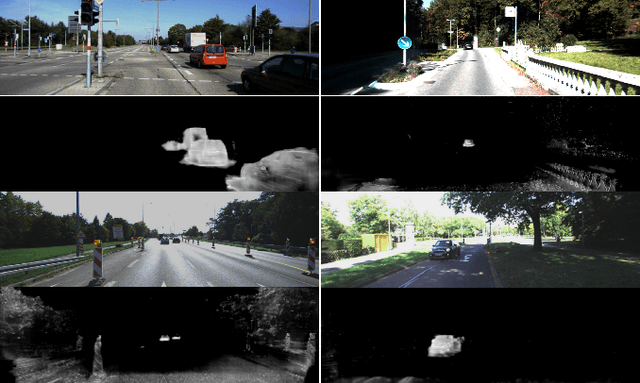

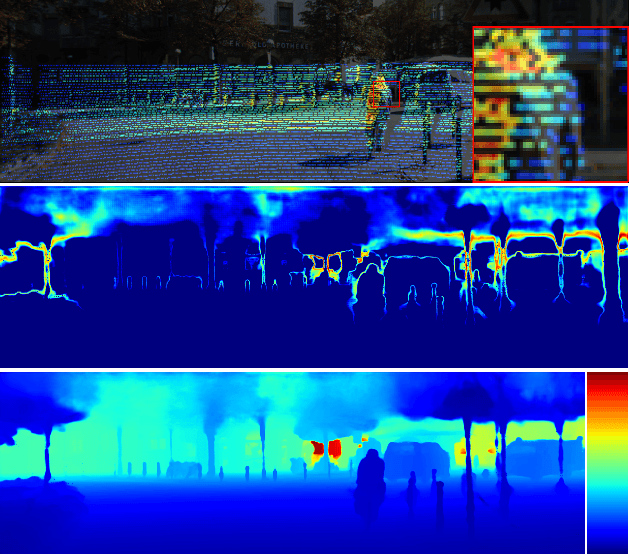

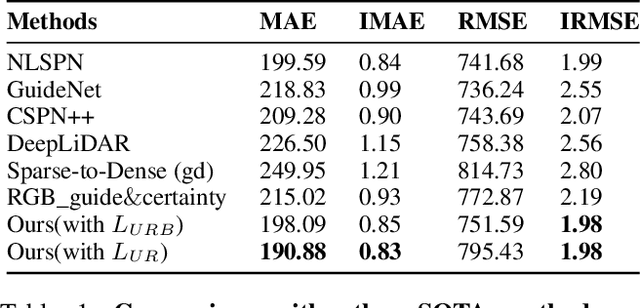

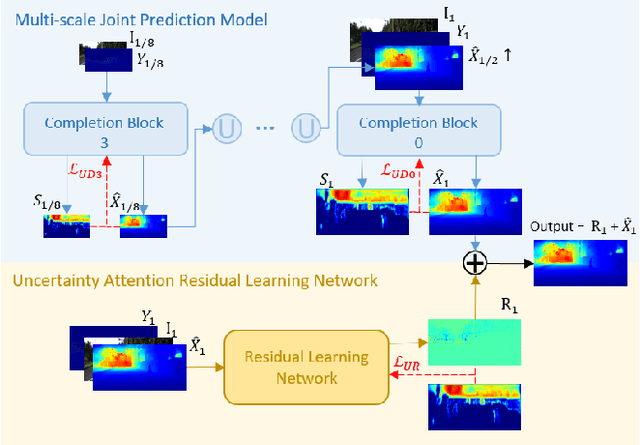

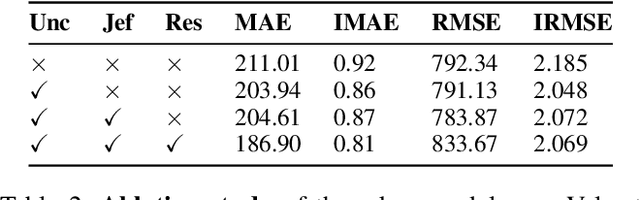

Abstract: Recovering a dense depth image from sparse LiDAR scans is a challenging task. Despite the popularity of color-guided methods for sparse-to-dense depth completion, they treat pixels equally during optimization, ignoring the uneven distribution characteristics in the sparse depth map and the accumulated outliers in the synthesized ground truth. In this work, we introduce uncertainty-driven loss functions to improve the robustness of depth completion and handle the uncertainty in depth completion. Specifically, we propose an explicit uncertainty formulation for robust depth completion with Jeffreys prior. A parametric uncertainty-driven loss is introduced and translated to new loss functions that are robust to noisy or missing data. Meanwhile, we propose a multiscale joint prediction model that can simultaneously predict depth and uncertainty maps. The estimated uncertainty map is also used to perform adaptive prediction on the pixels with high uncertainty, leading to a residual map for refining the completion results. Our method has been tested on the KITTI Depth Completion Benchmark and achieves state-of-the-art robustness in terms of the MAE, IMAE, and IRMSE metrics.
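As a rough illustration of why an uncertainty-driven loss is more robust than treating all pixels equally, the sketch below uses the widely used Gaussian log-variance weighting, in which each pixel's squared error is scaled down by its predicted variance and a log-variance penalty prevents the variance from growing without bound. This is a stand-in, not the paper's formulation: the prior-based derivation mentioned in the abstract is not reproduced, and the function name and the convention that a target depth of 0 marks a missing measurement are assumptions.

```python
import math


def uncertainty_weighted_loss(pred, log_var, target):
    """Mean per-pixel loss 0.5 * exp(-s) * (pred - target)^2 + 0.5 * s.

    `s` is the predicted log-variance for the pixel. Pixels whose target
    depth is <= 0 are treated as missing (an assumed convention) and skipped.
    """
    total, count = 0.0, 0
    for p, s, t in zip(pred, log_var, target):
        if t <= 0:  # no ground-truth depth at this pixel
            continue
        total += 0.5 * math.exp(-s) * (p - t) ** 2 + 0.5 * s
        count += 1
    return total / count if count else 0.0


pred = [10.0, 20.0, 5.0]
target = [10.5, 0.0, 15.0]  # second pixel missing, third is a likely outlier

# Equal treatment: every pixel has log-variance 0, so the outlier dominates.
flat_loss = uncertainty_weighted_loss(pred, [0.0, 0.0, 0.0], target)

# High predicted uncertainty on the outlier pixel shrinks its contribution.
robust_loss = uncertainty_weighted_loss(pred, [0.0, 0.0, math.log(100.0)], target)
```

With equal weighting the outlier's squared error of 100 dominates the mean, whereas assigning that pixel a large predicted variance reduces its influence at the cost of the log-variance penalty.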
