Junpeng Zhang

Towards the Three-Phase Dynamics of Generalization Power of a DNN

May 11, 2025

Abstract: This paper proposes a new perspective for analyzing the generalization power of deep neural networks (DNNs), i.e., directly disentangling and analyzing the dynamics of generalizable and non-generalizable interactions encoded by a DNN throughout the training process. Specifically, this work builds upon a recent theoretical achievement in explainable AI, which proves that the detailed inference logic of DNNs can be strictly rewritten as a small number of AND-OR interaction patterns. Based on this, we propose an efficient method to quantify the generalization power of each interaction, and we discover distinct three-phase dynamics of the generalization power of interactions during training. In particular, the early phase of training typically removes noisy and non-generalizable interactions and learns simple and generalizable ones. The second and third phases tend to capture increasingly complex interactions that are harder to generalize. Experimental results verify that the learning of non-generalizable interactions is the direct cause of the gap between the training and testing losses.

Randomness of Low-Layer Parameters Determines Confusing Samples in Terms of Interaction Representations of a DNN

Feb 12, 2025

Abstract: In this paper, we find that the complexity of interactions encoded by a deep neural network (DNN) can explain its generalization power. We also discover that the confusing samples of a DNN, which are represented by non-generalizable interactions, are determined by its low-layer parameters. In comparison, other factors, such as high-layer parameters and network architecture, have much less impact on the composition of confusing samples. Two DNNs with different low-layer parameters usually have entirely different sets of confusing samples, even though they have similar performance. This finding extends the understanding of the lottery ticket hypothesis and explains the distinctive representation power of different DNNs.

Towards the Dynamics of a DNN Learning Symbolic Interactions

Jul 27, 2024

Abstract: This study proves the two-phase dynamics of a deep neural network (DNN) learning interactions. Despite long-standing skepticism about the faithfulness of post-hoc explanations of a DNN, a series of theorems proven in recent years show that, given an input sample, a small number of interactions between input variables can be considered primitive inference patterns that faithfully represent every detailed inference logic of the DNN on this sample. In particular, it has been observed that various DNNs all learn interactions of different complexities with two-phase dynamics, which explains how a DNN's generalization power changes from under-fitting to over-fitting. Therefore, in this study, we prove the dynamics of a DNN gradually encoding interactions of different complexities, which provides a theoretically grounded mechanism for the over-fitting of a DNN. Experiments show that our theory accurately predicts the real learning dynamics of various DNNs on different tasks.

Two-Phase Dynamics of Interactions Explains the Starting Point of a DNN Learning Over-Fitted Features

May 16, 2024

Abstract: This paper investigates the dynamics of a deep neural network (DNN) learning interactions. Previous studies have discovered and mathematically proven that, given each input sample, a well-trained DNN usually encodes only a small number of interactions (non-linear relationships) between input variables in the sample. A series of theorems have been derived to prove that the DNN's inference can be considered equivalent to using these interactions as primitive patterns for inference. In this paper, we discover that a DNN learns interactions in two phases. The first phase mainly penalizes interactions of medium and high orders, and the second phase mainly learns interactions of gradually increasing orders. We can consider the two-phase phenomenon as the starting point of a DNN learning over-fitted features. This phenomenon is widely shared by DNNs with various architectures trained for different tasks. Therefore, the discovery of the two-phase dynamics provides a detailed mechanism for how a DNN gradually learns different inference patterns (interactions). In particular, we have also verified the claim that high-order interactions have weaker generalization power than low-order interactions. Thus, the discovered two-phase dynamics also explain how the generalization power of a DNN changes during the training process.
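To make the notion of an interaction and its order concrete, here is a minimal sketch. It assumes the Harsanyi-dividend form of an AND interaction, I(S) = Σ_{T⊆S} (-1)^{|S|-|T|} v(T), which the abstract itself does not state; `toy_model`, its input values, and the zero-masking baseline are hypothetical stand-ins for a real DNN evaluated on masked inputs.

```python
from itertools import combinations


def subsets(items):
    """Yield every subset of `items` as a frozenset."""
    items = tuple(items)
    for size in range(len(items) + 1):
        for combo in combinations(items, size):
            yield frozenset(combo)


def harsanyi_interactions(v, variables):
    """Assumed definition: I(S) = sum over T subset of S of (-1)^(|S|-|T|) * v(T)."""
    return {
        S: sum((-1) ** (len(S) - len(T)) * v(T) for T in subsets(S))
        for S in subsets(variables)
    }


def toy_model(present):
    """Hypothetical model output when only the variables in `present` are unmasked.

    Masked variables are replaced by a baseline of 0 (an assumption).
    """
    x = {0: 1.0, 1: 2.0, 2: 3.0}
    x0, x1, x2 = (x[i] if i in present else 0.0 for i in range(3))
    return x0 + 2.0 * x1 * x2


interactions = harsanyi_interactions(toy_model, [0, 1, 2])
# The order of an interaction is the number of variables it involves.
salient = {tuple(sorted(S)): val for S, val in interactions.items() if val != 0.0}
```

In this toy case only two interactions are non-zero: a first-order one on variable 0 and a second-order one on variables 1 and 2. Summing all interactions recovers the model output on the unmasked sample.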

SA-MixNet: Structure-aware Mixup and Invariance Learning for Scribble-supervised Road Extraction in Remote Sensing Images

Mar 03, 2024

Abstract: Mainstream weakly supervised road extractors rely on highly confident pseudo-labels propagated from scribbles, and their performance often degrades gradually as image scenes become more varied. We argue that such degradation is due to the model's poor invariance to scenes with different complexities, whereas existing solutions to this problem are commonly based on crafted priors that cannot be derived from scribbles. To eliminate the reliance on such priors, we propose a novel Structure-aware Mixup and Invariance Learning framework (SA-MixNet) for weakly supervised road extraction that improves the model's invariance in a data-driven manner. Specifically, we design a structure-aware Mixup scheme that pastes road regions from one image onto another to create an image scene with increased complexity while preserving the road's structural integrity. An invariance regularization is then imposed on the predictions for the constructed and original images to minimize their conflicts, which forces the model to behave consistently across various scenes. Moreover, a discriminator-based regularization is designed to enhance connectivity while preserving the structure of roads. Combining these designs, our framework demonstrates superior performance on the DeepGlobe, Wuhan, and Massachusetts datasets, outperforming state-of-the-art techniques by 1.47%, 2.12%, and 4.09% in IoU, respectively, and showing its potential as a plug-and-play component. The code will be made publicly available.

Knowledge Distillation for Oriented Object Detection on Aerial Images

Jun 20, 2022

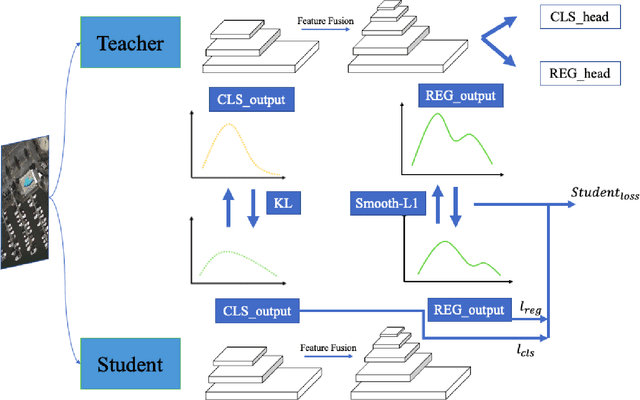

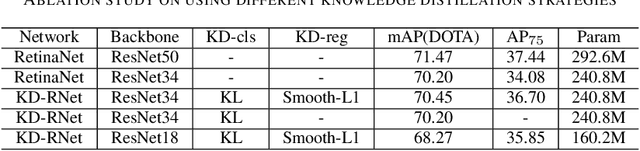

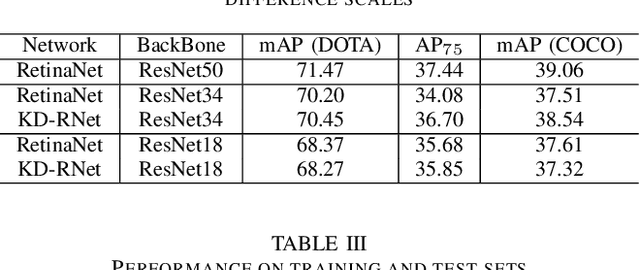

Abstract: Deep convolutional neural networks with an increased number of parameters have achieved improved precision in the task of object detection on natural images, where objects of interest are annotated with horizontal bounding boxes. On aerial images captured from a bird's-eye perspective, these improvements in model architecture and deeper convolutional layers can also boost performance on the oriented object detection task. However, it is hard to directly apply those state-of-the-art object detectors on devices with limited computation resources, which necessitates lightweight models obtained through model compression. To address this issue, we present a model compression method for rotated object detection on aerial images by knowledge distillation, namely KD-RNet. Given a well-trained teacher oriented object detector with a large number of parameters, both the obtained object category and location information are transferred to a compact student network in KD-RNet by a collaborative training strategy. Transferring the category information is achieved by knowledge distillation on the predicted probability distribution, and a soft regression loss is adopted for handling displacement in location information transfer. The experimental results on a large-scale aerial object detection dataset (DOTA) demonstrate that the proposed KD-RNet model can achieve improved mean average precision (mAP) with a reduced number of parameters. At the same time, KD-RNet boosts performance in providing high-quality detections with higher overlap with ground-truth annotations.
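As an illustration of distillation on predicted probability distributions, the following is a minimal sketch of the generic temperature-softened KL-divergence loss. It is not KD-RNet's actual loss: the temperature value, the T² scaling, and the function names are assumptions, and the soft regression loss for location transfer is not covered because the abstract does not specify its form.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened class distributions.

    The T^2 factor is the common convention for keeping gradient magnitudes
    comparable across temperatures; it is an assumption here.
    """
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(teacher, student) if p > 0.0)
    return temperature ** 2 * kl


# Hypothetical class logits for one detected object.
teacher_logits = [4.0, 1.0, 0.5]
matched = distillation_loss([4.0, 1.0, 0.5], teacher_logits)
mismatched = distillation_loss([0.5, 1.0, 4.0], teacher_logits)
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two distributions diverge.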

MidNet: An Anchor-and-Angle-Free Detector for Oriented Ship Detection in Aerial Images

Nov 22, 2021

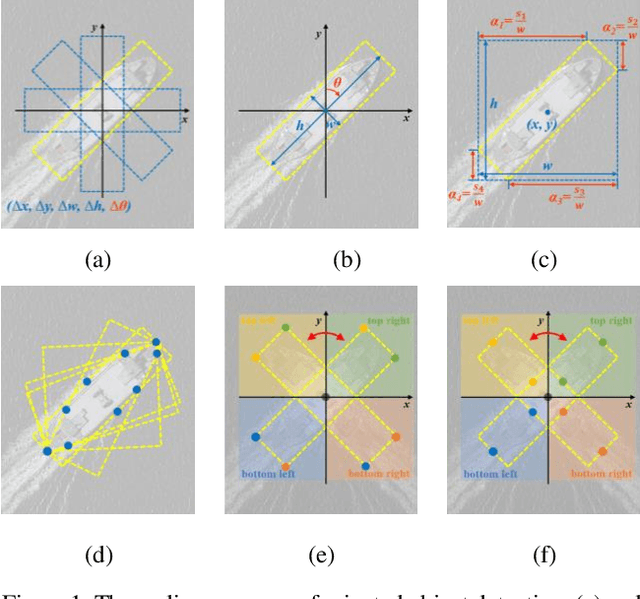

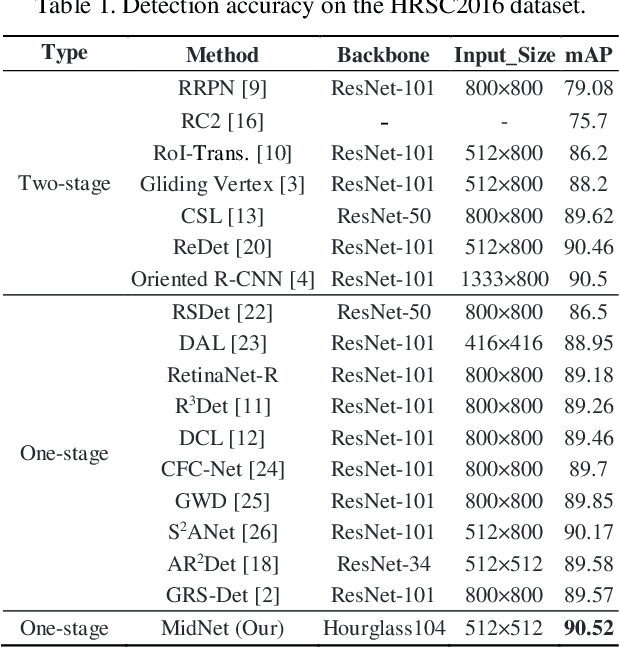

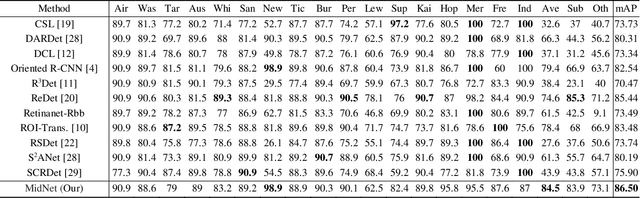

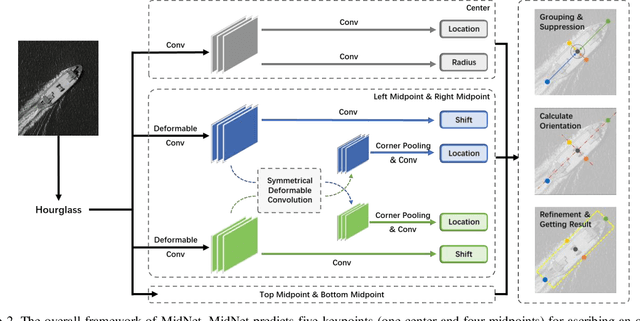

Abstract: Ship detection in aerial images remains an active yet challenging task due to arbitrary object orientation and complex backgrounds from a bird's-eye perspective. Most existing methods rely on angular prediction or predefined anchor boxes, making them highly sensitive to unstable angular regression and excessive hyper-parameter settings. To address these issues, we replace the angular-based object encoding with an anchor-and-angle-free paradigm and propose a novel detector, namely MidNet, that uses a center and four midpoints to encode each oriented object. MidNet designs a symmetrical deformable convolution customized for enhancing the midpoints of ships. The center and midpoints of the same ship are then adaptively matched by predicting the corresponding centripetal shift and matching radius. Finally, a concise analytical geometry algorithm is proposed to refine the centers and midpoints stepwise for building precise oriented bounding boxes. On two public ship detection datasets, HRSC2016 and FGSD2021, MidNet outperforms state-of-the-art detectors by achieving APs of 90.52% and 86.50%. Additionally, MidNet obtains competitive results in the ship detection of DOTA.
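The reason a center and four side midpoints suffice to encode an oriented box can be sketched with elementary geometry: each corner equals the sum of its two adjacent side midpoints minus the center. The code below is only an illustration of that encoding under the assumption that the midpoints are given in order around the box; it is not MidNet's refinement algorithm, and the function name and coordinates are hypothetical.

```python
def corners_from_midpoints(center, midpoints):
    """Recover the four corners of an oriented box.

    `midpoints` must list the side midpoints in order around the box, so that
    consecutive entries belong to adjacent sides (an assumption of this sketch).
    For adjacent midpoints a and b and center c, the shared corner is a + b - c.
    """
    cx, cy = center
    corners = []
    for i in range(4):
        ax, ay = midpoints[i]
        bx, by = midpoints[(i + 1) % 4]
        corners.append((ax + bx - cx, ay + by - cy))
    return corners


# Hypothetical box centered at (10, 20), rotated 45 degrees.
center = (10.0, 20.0)
midpoints = [(11.0, 21.0), (9.5, 20.5), (9.0, 19.0), (10.5, 19.5)]
corners = corners_from_midpoints(center, midpoints)
```

No angle is regressed at any point: the orientation is implied by the positions of the midpoints relative to the center.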

Online Structured Sparsity-based Moving Object Detection from Satellite Videos

Dec 10, 2019

Abstract: Inspired by recent developments in computer vision, low-rank and structured sparse matrix decomposition can potentially be used to extract moving objects in satellite videos. This set of approaches seeks rank minimization on the background, which typically requires batch-based optimization over a sequence of frames, causing delays in processing and limiting their applications. To remedy this delay, we propose an Online Low-rank and Structured Sparse Decomposition (O-LSD). O-LSD reformulates the batch-based low-rank matrix decomposition with the structured sparse penalty into its equivalent frame-wise separable counterpart, which then defines a stochastic optimization problem for online subspace basis estimation. To enable online processing, O-LSD alternates between the foreground and background separation and the subspace basis update for every frame in a video. We also show the convergence of O-LSD theoretically. Experimental results on two satellite videos demonstrate that the performance of O-LSD in terms of accuracy and time consumption is comparable with that of the batch-based approaches, with significantly reduced delay in processing.

Error Bounded Foreground and Background Modeling for Moving Object Detection in Satellite Videos

Aug 26, 2019

Abstract: Detecting moving objects from ground-based videos is commonly achieved by using background subtraction techniques. Low-rank matrix decomposition inspires a set of state-of-the-art approaches for this task. It is integrated with structured sparsity regularization to achieve background subtraction in the Low-rank and Structured Sparse Decomposition (LSD) method. However, when this method is applied to satellite videos, where spatial resolution is poor and the targets' contrast to the background is low, its performance is limited because the data no longer adequately fits either the foreground structure or the background model. In this paper, we handle these unexplained data explicitly and address moving target detection from space, as one of the pioneering studies on this problem. We propose a technique that extends the decomposition formulation with bounded errors, named Extended Low-rank and Structured Sparse Decomposition (E-LSD). This formulation integrates the low-rank background, the structured sparse foreground, and their residuals in a matrix decomposition problem. We provide an effective solution by introducing an alternative treatment and adopting the direct extension of the Alternating Direction Method of Multipliers (ADMM). The proposed E-LSD was validated on two satellite videos, and experimental results demonstrate improved background modeling with boosted moving object detection precision over state-of-the-art methods.
