Weiquan Liu

A New Adversarial Perspective for LiDAR-based 3D Object Detection

Dec 17, 2024

Abstract:Autonomous vehicles (AVs) rely on LiDAR sensors for environmental perception and decision-making in driving scenarios. However, ensuring the safety and reliability of AVs in complex environments remains a pressing challenge. To address this issue, we introduce a real-world dataset (ROLiD) comprising LiDAR-scanned point clouds of two random objects: water mist and smoke. In this paper, we introduce a novel adversarial perspective by proposing an attack framework that utilizes water mist and smoke to simulate environmental interference. Specifically, we propose a point cloud sequence generation method using a motion and content decomposition generative adversarial network named PCS-GAN to simulate the distribution of random objects. Furthermore, leveraging the simulated LiDAR scanning characteristics implemented with Range Image, we examine the effects of introducing random object perturbations at various positions on the target vehicle. Extensive experiments demonstrate that adversarial perturbations based on random objects effectively deceive vehicle detection and reduce the recognition rate of 3D object detection models.
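
As a rough illustration of the Range Image idea mentioned in the abstract above, the sketch below projects a point cloud onto a spherical range image and keeps the nearest return per pixel. It is not the authors' implementation. The function name `to_range_image`, the image size, and the vertical field of view are assumptions chosen for the example.

```python
import numpy as np

def to_range_image(points, height=32, width=360,
                   fov_up_deg=15.0, fov_down_deg=-15.0):
    """Project an (N, 3) point cloud into a (height, width) range image.

    All parameters are illustrative assumptions, not values from the paper.
    Each pixel stores the distance of the nearest point that falls into it,
    and 0.0 where no point was projected.
    """
    points = np.asarray(points, dtype=np.float64)
    depth = np.linalg.norm(points, axis=1)
    keep = depth > 0                      # drop points at the sensor origin
    points, depth = points[keep], depth[keep]

    yaw = np.arctan2(points[:, 1], points[:, 0])    # horizontal angle
    pitch = np.arcsin(points[:, 2] / depth)         # vertical angle
    fov_up = np.radians(fov_up_deg)
    fov_down = np.radians(fov_down_deg)

    # Map angles to pixel coordinates.
    u = 0.5 * (1.0 - yaw / np.pi) * width
    v = (fov_up - pitch) / (fov_up - fov_down) * height
    cols = np.clip(np.floor(u), 0, width - 1).astype(int)
    rows = np.clip(np.floor(v), 0, height - 1).astype(int)

    # Keep the closest return per pixel, as a single LiDAR beam would.
    image = np.full((height, width), np.inf)
    np.minimum.at(image, (rows, cols), depth)
    image[np.isinf(image)] = 0.0
    return image

# A vehicle point at 10 m and a mist point at 5 m on the same beam:
# only the nearer mist point survives in the range image.
img = to_range_image([[10.0, 0.0, 0.0], [5.0, 0.0, 0.0]])
```

In this toy setup the nearer point hides the farther one, which is one simple way to think about how an object placed between the sensor and a vehicle can alter the points a detector receives.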

Depth Matters: Exploring Deep Interactions of RGB-D for Semantic Segmentation in Traffic Scenes

Sep 12, 2024

Abstract:RGB-D has gradually become a crucial data source for understanding complex scenes in assisted driving. However, existing studies have paid insufficient attention to the intrinsic spatial properties of depth maps. This oversight significantly impacts the attention representation, leading to prediction errors caused by attention shift issues. To this end, we propose a novel learnable Depth interaction Pyramid Transformer (DiPFormer) to explore the effectiveness of depth. Firstly, we introduce Depth Spatial-Aware Optimization (Depth SAO) as offset to represent real-world spatial relationships. Secondly, the similarity in the feature space of RGB-D is learned by Depth Linear Cross-Attention (Depth LCA) to clarify spatial differences at the pixel level. Finally, an MLP Decoder is utilized to effectively fuse multi-scale features for meeting real-time requirements. Comprehensive experiments demonstrate that the proposed DiPFormer significantly addresses the issue of attention misalignment in both road detection (+7.5%) and semantic segmentation (+4.9% / +1.5%) tasks. DiPFormer achieves state-of-the-art performance on the KITTI (97.57% F-score on KITTI road and 68.74% mIoU on KITTI-360) and Cityscapes (83.4% mIoU) datasets.

Density-guided Translator Boosts Synthetic-to-Real Unsupervised Domain Adaptive Segmentation of 3D Point Clouds

Mar 27, 2024

Abstract:3D synthetic-to-real unsupervised domain adaptive segmentation is crucial to annotating new domains. Self-training is a competitive approach for this task, but its performance is limited by different sensor sampling patterns (i.e., variations in point density) and incomplete training strategies. In this work, we propose a density-guided translator (DGT), which translates point density between domains, and integrates it into a two-stage self-training pipeline named DGT-ST. First, in contrast to existing works that simultaneously conduct data generation and feature/output alignment within unstable adversarial training, we employ the non-learnable DGT to bridge the domain gap at the input level. Second, to provide a well-initialized model for self-training, we propose a category-level adversarial network in stage one that utilizes the prototype to prevent negative transfer. Finally, by leveraging the designs above, a domain-mixed self-training method with source-aware consistency loss is proposed in stage two to narrow the domain gap further. Experiments on two synthetic-to-real segmentation tasks (SynLiDAR → semanticKITTI and SynLiDAR → semanticPOSS) demonstrate that DGT-ST outperforms state-of-the-art methods, achieving 9.4% and 4.3% mIoU improvements, respectively. Code is available at https://github.com/yuan-zm/DGT-ST.

E2PNet: Event to Point Cloud Registration with Spatio-Temporal Representation Learning

Nov 30, 2023

Abstract:Event cameras have emerged as a promising vision sensor in recent years due to their unparalleled temporal resolution and dynamic range. While registration of 2D RGB images to 3D point clouds is a long-standing problem in computer vision, no prior work studies 2D-3D registration for event cameras. To this end, we propose E2PNet, the first learning-based method for event-to-point cloud registration. The core of E2PNet is a novel feature representation network called Event-Points-to-Tensor (EP2T), which encodes event data into a 2D grid-shaped feature tensor. This grid-shaped feature enables matured RGB-based frameworks to be easily used for event-to-point cloud registration, without changing hyper-parameters and the training procedure. EP2T treats the event input as spatio-temporal point clouds. Unlike standard 3D learning architectures that treat all dimensions of point clouds equally, the novel sampling and information aggregation modules in EP2T are designed to handle the inhomogeneity of the spatial and temporal dimensions. Experiments on the MVSEC and VECtor datasets demonstrate the superiority of E2PNet over hand-crafted and other learning-based methods. Compared to RGB-based registration, E2PNet is more robust to extreme illumination or fast motion due to the use of event data. Beyond 2D-3D registration, we also show the potential of EP2T for other vision tasks such as flow estimation, event-to-image reconstruction and object recognition. The source code can be found at: https://github.com/Xmu-qcj/E2PNet.

RiskQ: Risk-sensitive Multi-Agent Reinforcement Learning Value Factorization

Nov 03, 2023

Abstract:Multi-agent systems are characterized by environmental uncertainty, varying policies of agents, and partial observability, which result in significant risks. In the context of Multi-Agent Reinforcement Learning (MARL), learning coordinated and decentralized policies that are sensitive to risk is challenging. To formulate the coordination requirements in risk-sensitive MARL, we introduce the Risk-sensitive Individual-Global-Max (RIGM) principle as a generalization of the Individual-Global-Max (IGM) and Distributional IGM (DIGM) principles. This principle requires that the collection of risk-sensitive action selections of each agent should be equivalent to the risk-sensitive action selection of the central policy. Current MARL value factorization methods do not satisfy the RIGM principle for common risk metrics such as the Value at Risk (VaR) metric or distorted risk measurements. Therefore, we propose RiskQ to address this limitation, which models the joint return distribution by modeling quantiles of it as weighted quantile mixtures of per-agent return distribution utilities. RiskQ satisfies the RIGM principle for the VaR and distorted risk metrics. We show that RiskQ can obtain promising performance through extensive experiments. The source code of RiskQ is available in https://github.com/xmu-rl-3dv/RiskQ.
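
To make the Value at Risk (VaR) metric named in the abstract above more concrete, here is a minimal single-agent sketch of risk-sensitive action selection from sampled returns. It is not RiskQ and does not perform any value factorization. The helper names `value_at_risk` and `risk_sensitive_action`, and the toy return samples, are hypothetical.

```python
import numpy as np

def value_at_risk(returns, alpha):
    """VaR at level alpha, taken here as the alpha-quantile of the returns."""
    return float(np.quantile(np.asarray(returns, dtype=float), alpha))

def risk_sensitive_action(return_samples_per_action, alpha):
    """Pick the action whose return distribution has the highest VaR."""
    scores = [value_at_risk(r, alpha) for r in return_samples_per_action]
    return int(np.argmax(scores)), scores

# Toy data (assumed): action 0 is safe, action 1 has a higher mean
# but an occasional large loss.
samples = [
    [1.0, 1.0, 1.0, 1.0],
    [-10.0, 5.0, 5.0, 5.0],
]
risk_averse_choice, _ = risk_sensitive_action(samples, alpha=0.1)
risk_neutral_choice = int(np.argmax([np.mean(r) for r in samples]))
```

With these toy samples the mean favors the second action while the low-quantile VaR favors the first, which illustrates why a risk-sensitive criterion can select different actions than an expected-value one.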

DSMNet: Deep High-precision 3D Surface Modeling from Sparse Point Cloud Frames

Apr 09, 2023

Abstract:Existing point cloud modeling datasets primarily express the modeling precision by pose or trajectory precision rather than the point cloud modeling effect itself. Under this demand, we first independently construct a LiDAR system with an optical stage, and then we build HPMB, a High-Precision, Multi-Beam, real-world dataset, based on the constructed LiDAR system. Second, we propose a modeling evaluation method based on HPMB for object-level modeling to overcome this limitation. In addition, the existing point cloud modeling methods tend to generate continuous skeletons of the global environment, hence lacking attention to the shape of complex objects. To tackle this challenge, we propose a novel learning-based joint framework, DSMNet, for high-precision 3D surface modeling from sparse point cloud frames. DSMNet comprises density-aware Point Cloud Registration (PCR) and geometry-aware Point Cloud Sampling (PCS) to effectively learn the implicit structure feature of sparse point clouds. Extensive experiments demonstrate that DSMNet outperforms the state-of-the-art methods in PCS and PCR on the Multi-View Partial Point Cloud (MVP) database. Furthermore, the experiments on the open source KITTI and our proposed HPMB datasets show that DSMNet can be generalized as a post-processing step for Simultaneous Localization And Mapping (SLAM), thereby improving modeling precision in environments with sparse point clouds.

Ins-ATP: Deep Estimation of ATP for Organoid Based on High Throughput Microscopic Images

Mar 15, 2023

Abstract:Adenosine triphosphate (ATP) is a high-energy phosphate compound and the most direct energy source in organisms. ATP is an essential biomarker for evaluating cell viability in biology. Researchers often use ATP bioluminescence to measure the ATP of organoids after drug treatment to evaluate drug efficacy. However, ATP bioluminescence has some limitations, leading to unreliable drug screening results. Performing ATP bioluminescence causes cell lysis of organoids, so it is impossible to continually observe organoids' long-term viability changes after medication. To overcome the disadvantages of ATP bioluminescence, we propose Ins-ATP, a non-invasive strategy and the first organoid ATP estimation model based on high-throughput microscopic images. Ins-ATP directly estimates the ATP of organoids from high-throughput microscopic images, so that it does not influence the drug reactions of organoids. Therefore, the ATP change of organoids can be observed for a long time to obtain more stable results. Experimental results show that the ATP estimates from Ins-ATP are in good agreement with those determined by ATP bioluminescence. Specifically, the predictions of Ins-ATP are consistent with the results measured by ATP bioluminescence in the efficacy evaluation experiments of different drugs.

Interpreting Hidden Semantics in the Intermediate Layers of 3D Point Cloud Classification Neural Network

Mar 12, 2023

Abstract:Although 3D point cloud classification neural network models have been widely used, the in-depth interpretation of the activation of the neurons and layers is still a challenge. We propose a novel approach, named Relevance Flow, to interpret the hidden semantics of 3D point cloud classification neural networks. It delivers the class Relevance to the activated neurons in the intermediate layers in a back-propagation manner, and associates the activation of neurons with the input points to visualize the hidden semantics of each layer. Specifically, we reveal that the 3D point cloud classification neural network has learned the plane-level and part-level hidden semantics in the intermediate layers, and utilize the normal and IoU to evaluate the consistency of both levels' hidden semantics. Besides, by using the hidden semantics, we generate the adversarial attack samples to attack 3D point cloud classifiers. Experiments show that our proposed method reveals the hidden semantics of the 3D point cloud classification neural network on ModelNet40 and ShapeNet, which can be used for the unsupervised point cloud part segmentation without labels and attacking the 3D point cloud classifiers.

Adaptive Local Adversarial Attacks on 3D Point Clouds for Augmented Reality

Mar 12, 2023

Abstract:As the key technology of augmented reality (AR), 3D recognition and tracking are always vulnerable to adversarial examples, which will cause serious security risks to AR systems. Adversarial examples are beneficial to improve the robustness of the 3D neural network model and enhance the stability of the AR system. At present, most 3D adversarial attack methods perturb the entire point cloud to generate adversarial examples, which results in high perturbation costs and difficulty in reconstructing the corresponding real objects in the physical world. In this paper, we propose an adaptive local adversarial attack method (AL-Adv) on 3D point clouds to generate adversarial point clouds. First, we analyze the vulnerability of the 3D network model and extract the salient regions of the input point cloud, namely the vulnerable regions. Second, we propose an adaptive gradient attack algorithm that targets vulnerable regions. The proposed attack algorithm adaptively assigns different disturbances in different directions of the three-dimensional coordinates of the point cloud. Experimental results show that our proposed method AL-Adv achieves a higher attack success rate than the global attack method. Specifically, the adversarial examples generated by the AL-Adv demonstrate good imperceptibility and small generation costs.

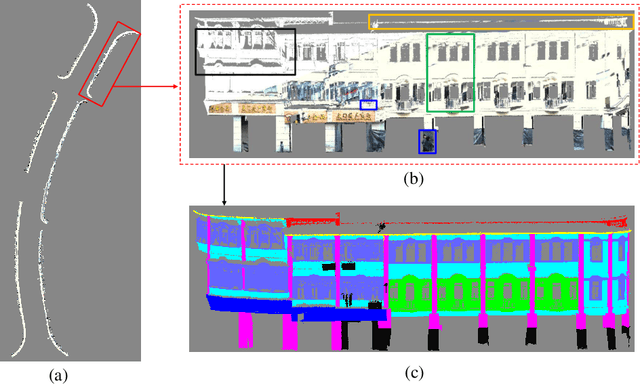

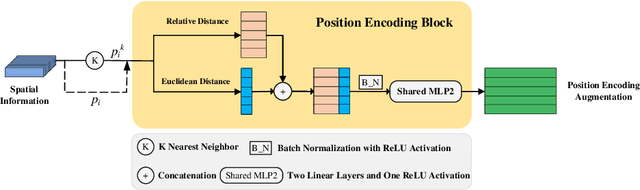

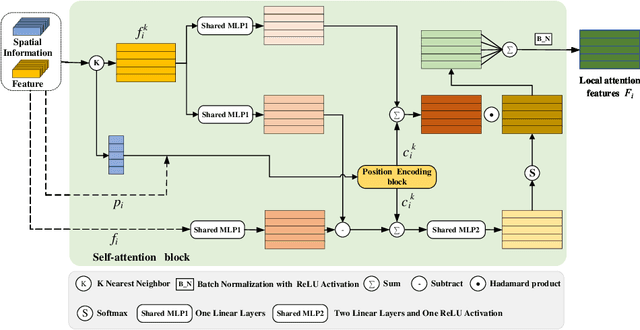

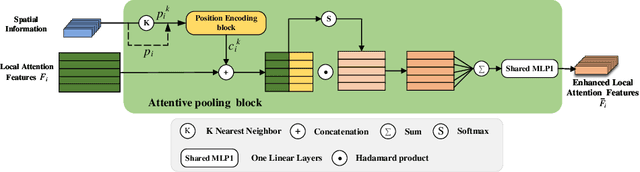

DLA-Net: Learning Dual Local Attention Features for Semantic Segmentation of Large-Scale Building Facade Point Clouds

Jun 01, 2021

Abstract:Semantic segmentation of building facade is significant in various applications, such as urban building reconstruction and damage assessment. As there is a lack of 3D point clouds datasets related to the fine-grained building facade, we construct the first large-scale building facade point clouds benchmark dataset for semantic segmentation. The existing methods of semantic segmentation cannot fully mine the local neighborhood information of point clouds. Addressing this problem, we propose a learnable attention module that learns Dual Local Attention features, called DLA in this paper. The proposed DLA module consists of two blocks, including the self-attention block and attentive pooling block, which both embed an enhanced position encoding block. The DLA module could be easily embedded into various network architectures for point cloud segmentation, naturally resulting in a new 3D semantic segmentation network with an encoder-decoder architecture, called DLA-Net in this work. Extensive experimental results on our constructed building facade dataset demonstrate that the proposed DLA-Net achieves better performance than the state-of-the-art methods for semantic segmentation.
