Tomas Krajnik

Online Context Learning for Socially-compliant Navigation

Jun 17, 2024

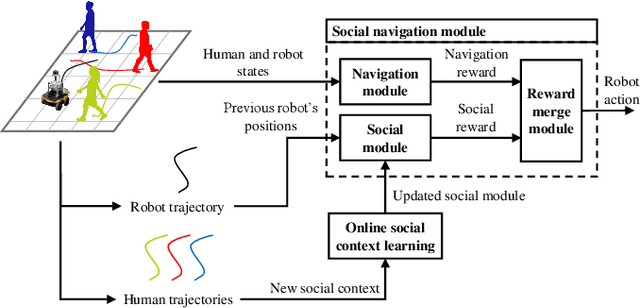

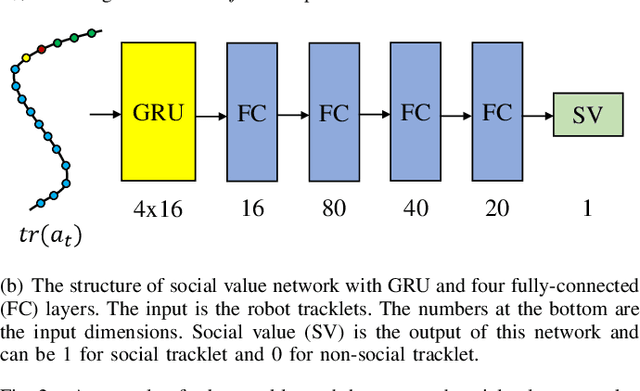

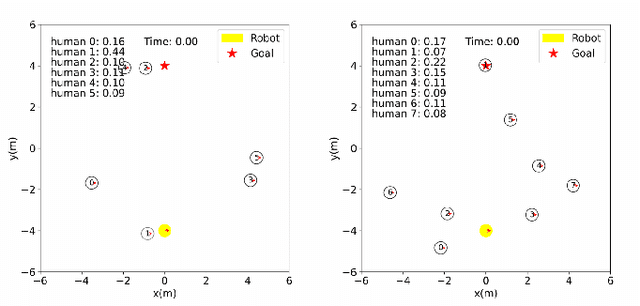

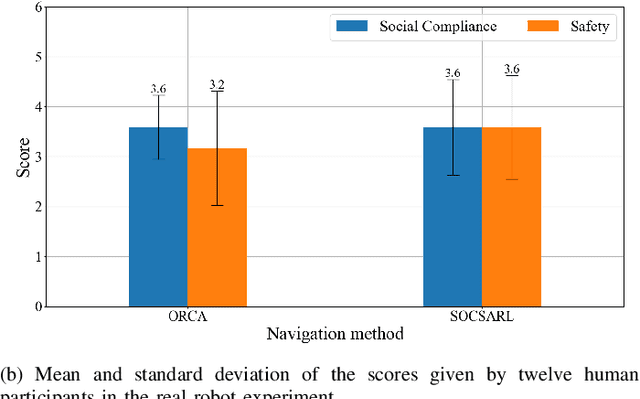

Abstract: Robot social navigation needs to adapt to different human factors and environmental contexts. However, since these factors and contexts are difficult to predict and cannot be exhaustively enumerated, traditional learning-based methods have difficulty ensuring the social attributes of robots in long-term and cross-environment deployments. This letter introduces an online context learning method that aims to empower robots to adapt to new social environments online. The proposed method adopts a two-layer structure. The bottom layer is built using a deep reinforcement learning-based method to ensure the output of basic robot navigation commands. The upper layer is implemented using an online robot learning-based method to socialize the control commands suggested by the bottom layer. Experiments using a community-wide simulator show that our method outperforms state-of-the-art methods. Experimental results in the most challenging scenarios show that our method improves on state-of-the-art performance by 8%. The source code of the proposed method, the data used, and the tools for the pre-training step will be publicly available at https://github.com/Nedzhaken/SOCSARL-OL.

Towards Long-term Autonomy: A Perspective from Robot Learning

Jan 02, 2023

Abstract: In the future, service robots are expected to be able to operate autonomously for long periods of time without human intervention. Many works striving for this goal have emerged with the development of robotics, in both hardware and software. Today we believe that an important underpinning of long-term robot autonomy is the ability of robots to learn on site and on-the-fly, especially when they are deployed in changing environments or need to traverse different environments. In this paper, we examine the problem of long-term autonomy from the perspective of robot learning, especially in an online way, and discuss in tandem its premise "data" and the subsequent "deployment".

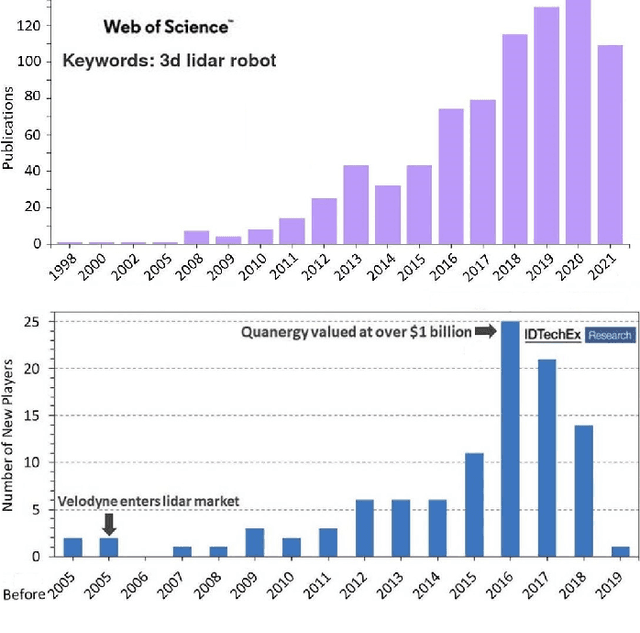

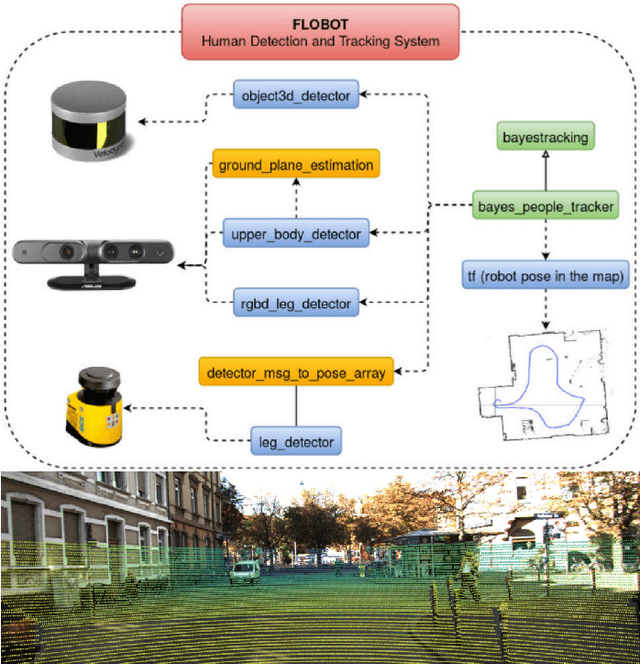

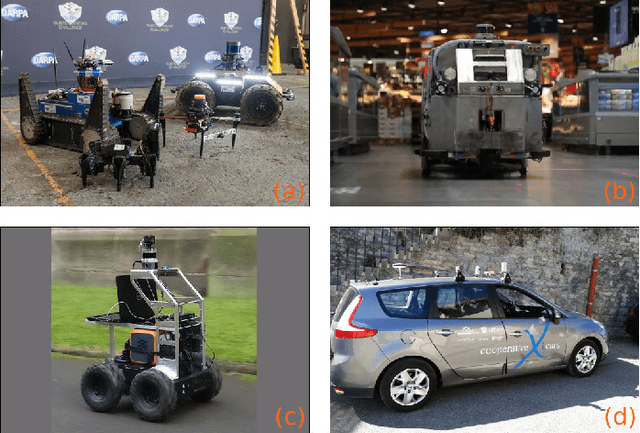

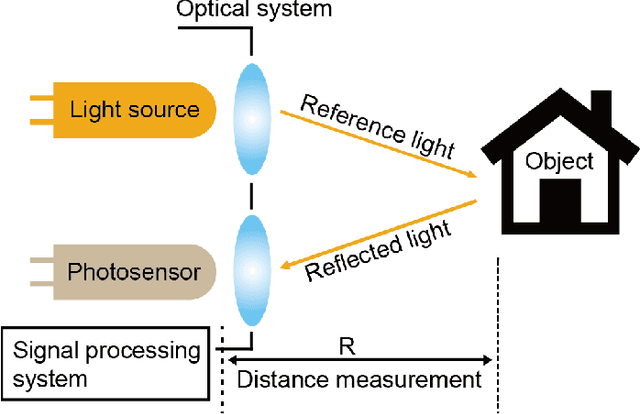

3D ToF LiDAR in Mobile Robotics: A Review

Feb 22, 2022

Abstract: In the past ten years, the use of 3D Time-of-Flight (ToF) LiDARs in mobile robotics has grown rapidly. Based on our accumulation of relevant research, this article systematically reviews and analyzes the use of 3D ToF LiDARs in research and industrial applications. The former includes object detection, robot localization, long-term autonomy, LiDAR data processing under adverse weather conditions, and sensor fusion. The latter encompasses service robots, assisted and autonomous driving, and recent applications performed in response to public health crises. We hope that our efforts can effectively provide readers with relevant references and promote the deployment of existing mature technologies in real-world systems.

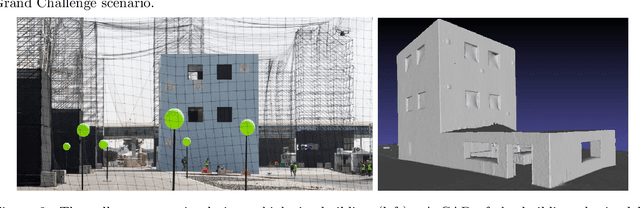

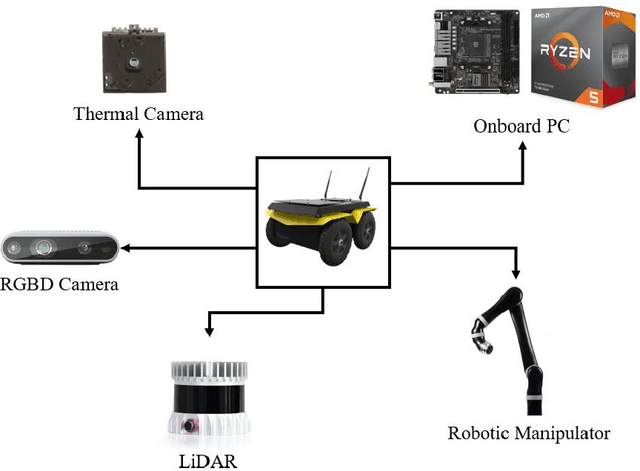

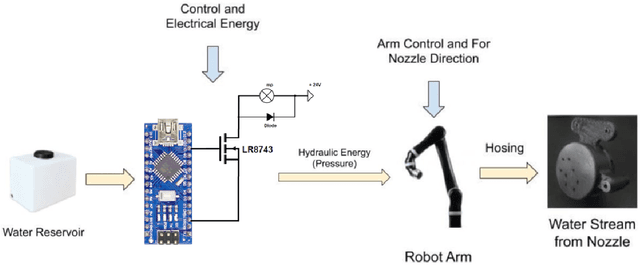

Design and Deployment of an Autonomous Unmanned Ground Vehicle for Urban Firefighting Scenarios

Jul 08, 2021

Abstract: Autonomous mobile robots have the potential to solve missions that are either too complex or too dangerous to be accomplished by humans. In this paper, we address the design and autonomous deployment of a ground vehicle equipped with a robotic arm for urban firefighting scenarios. We describe the hardware design and algorithmic approaches for autonomous navigation, planning, fire source identification and abatement in unstructured urban scenarios. The approach employs on-board sensors for autonomous navigation and thermal camera information for source identification. A custom electro-mechanical pump is responsible for ejecting water for fire abatement. The proposed approach is validated through several experiments, where we show the ability to identify and abate a sample heat source in a building. The whole system was developed and deployed during the Mohamed Bin Zayed International Robotics Challenge (MBZIRC) 2020, for Challenge No. 3, Fire Fighting Inside a High-Rise Building, and during the Grand Challenge, where our approach scored the highest number of points among all UGV solutions and was instrumental in winning first place.

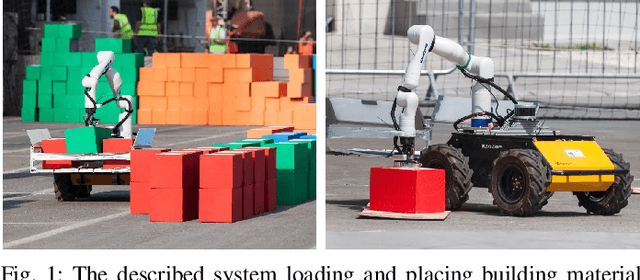

Mobile Manipulator for Autonomous Localization, Grasping and Precise Placement of Construction Material in a Semi-structured Environment

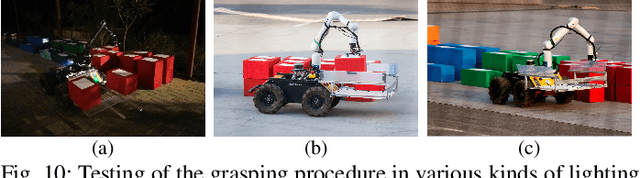

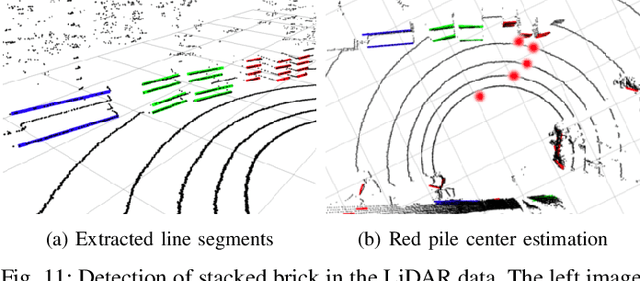

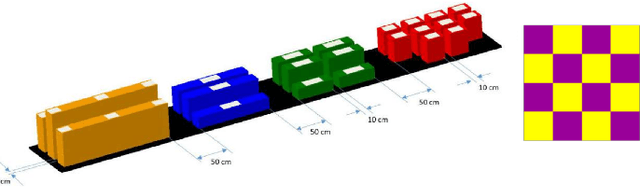

Nov 16, 2020

Abstract: Mobile manipulators have the potential to revolutionize modern agriculture, logistics and manufacturing. In this work, we present the design of a ground-based mobile manipulator for automated structure assembly. The proposed system is capable of autonomous localization, grasping, transportation and deployment of construction material in a semi-structured environment. Special effort was put into making the system invariant to lighting changes, and not reliant on external positioning systems. Therefore, the presented system is self-contained and capable of operating in outdoor and indoor conditions alike. Finally, we present means to extend the perceptive radius of the vehicle by using it in cooperation with an autonomous drone, which provides aerial reconnaissance. Performance of the proposed system has been evaluated in a series of experiments conducted in real-world conditions.

EU Long-term Dataset with Multiple Sensors for Autonomous Driving

Sep 07, 2019

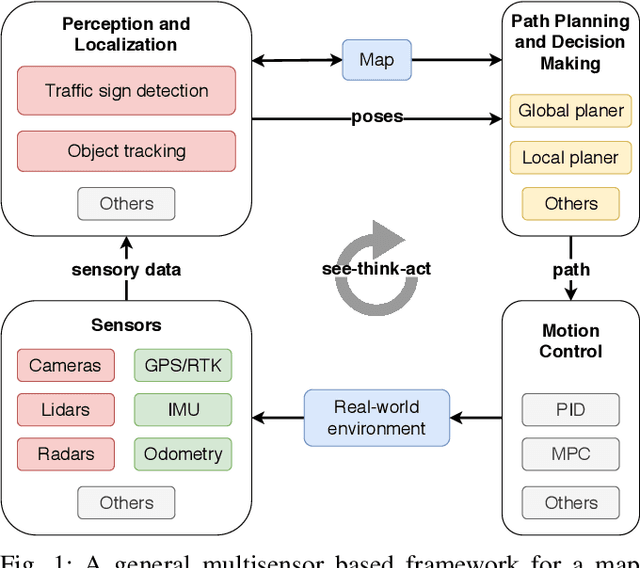

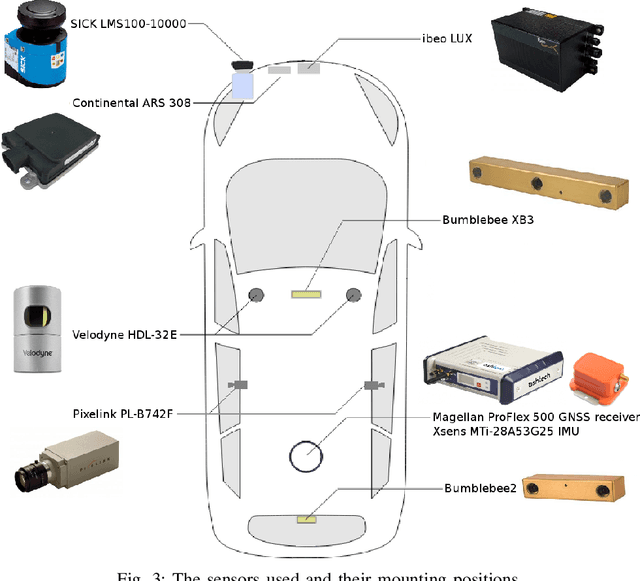

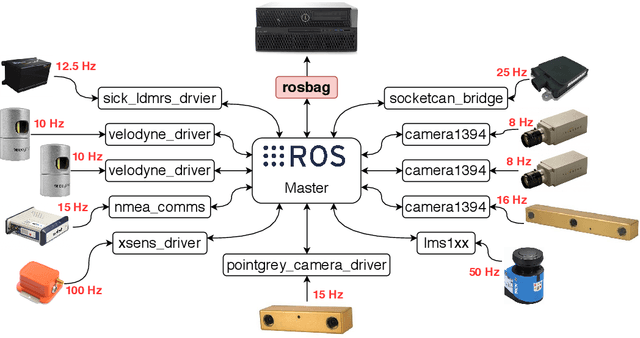

Abstract: The field of autonomous driving has grown tremendously over the past few years, along with the rapid progress in sensor technology. One of the major purposes of using sensors is to provide environment perception for vehicle understanding, learning and reasoning, and ultimately interacting with the environment. In this article, we introduce a multisensor framework allowing a vehicle to perceive its surroundings and locate itself in a more efficient and accurate way. Our framework integrates up to eleven heterogeneous sensors including various cameras and lidars, a radar, an IMU (Inertial Measurement Unit), and a GPS/RTK (Global Positioning System / Real-Time Kinematic), while exploiting ROS (Robot Operating System) based software to process the sensory data. In addition, we present a new dataset (https://epan-utbm.github.io/utbm_robocar_dataset/) for autonomous driving, which captures many new research challenges (e.g. highly dynamic environments), especially for long-term autonomy (e.g. creating and maintaining maps). The dataset was collected with our instrumented vehicle and is publicly available to the community.

Warped Hypertime Representations for Long-term Autonomy of Mobile Robots

Oct 09, 2018

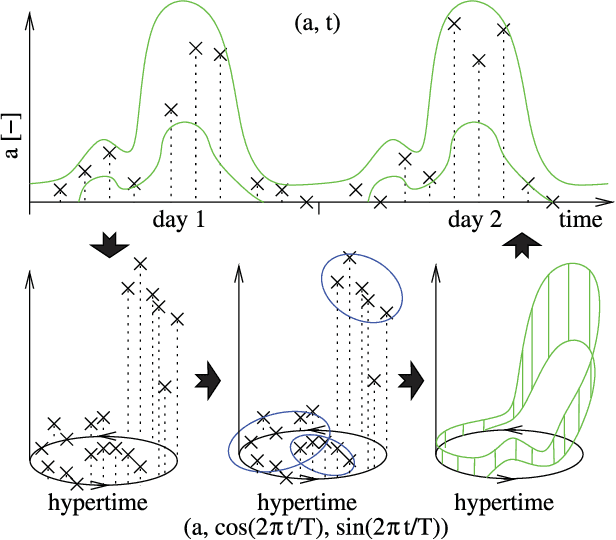

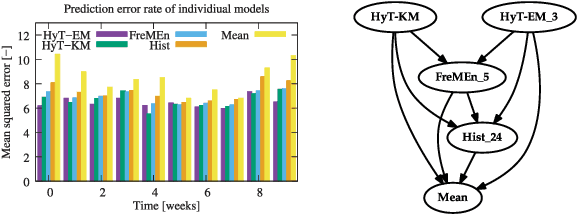

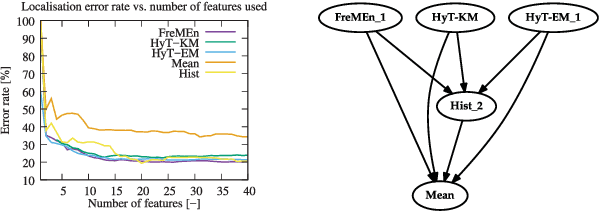

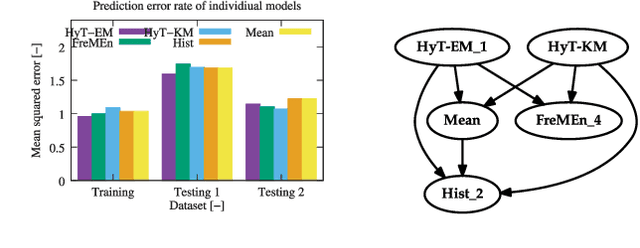

Abstract: This paper presents a novel method for introducing time into discrete and continuous spatial representations used in mobile robotics, by modelling long-term, pseudo-periodic variations caused by human activities. Unlike previous approaches, the proposed method does not treat time and space separately, and its continuous nature respects both the temporal and spatial continuity of the modeled phenomena. The method extends the given spatial model with a set of wrapped dimensions that represent the periodicities of observed changes. By performing clustering over this extended representation, we obtain a model that allows us to predict future states of both discrete and continuous spatial representations. We apply the proposed algorithm to several long-term datasets and show that the method enables a robot to predict future states of representations with different dimensions. The experiments further show that the method achieves more accurate predictions than the previous state of the art.
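The idea of extending a spatial model with wrapped dimensions can be illustrated with a minimal sketch. It assumes that each periodicity is represented by projecting the timestamp onto a unit circle (a cosine and a sine coordinate per period), so that observations one full period apart coincide in the extended space. The function names `hypertime_embed` and `distance`, and the choice of daily and weekly periods, are illustrative assumptions and are not taken from the paper; the clustering step is omitted.

```python
import math

DAY = 86400.0        # assumed period of one day, in seconds
WEEK = 7 * DAY       # assumed period of one week, in seconds


def hypertime_embed(position, t, periods=(DAY, WEEK)):
    """Append one (cos, sin) pair per period to a spatial vector.

    position: sequence of spatial coordinates (may be empty)
    t:        timestamp in seconds
    periods:  periodicities to wrap time around (hypothetical choice)
    """
    vec = list(position)
    for period in periods:
        angle = 2.0 * math.pi * t / period
        vec.extend((math.cos(angle), math.sin(angle)))
    return vec


def distance(a, b):
    """Euclidean distance between two embedded points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Same place, same time of day and day of week, one week apart:
# the two points coincide in the extended space.
a = hypertime_embed((1.0, 2.0), 3600.0)
b = hypertime_embed((1.0, 2.0), 3600.0 + WEEK)

# Midnight versus noon on the daily circle: diametrically opposite points.
midnight = hypertime_embed((), 0.0, periods=(DAY,))
noon = hypertime_embed((), DAY / 2, periods=(DAY,))
```

In this sketch, any clustering method applied to the embedded vectors would group observations by both location and phase within each period, which is the property the abstract relies on for prediction.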

Navigation without localisation: reliable teach and repeat based on the convergence theorem

Jul 30, 2018

Abstract: We present a novel concept for teach-and-repeat visual navigation. The proposed concept is based on a mathematical model, which indicates that in teach-and-repeat navigation scenarios, mobile robots do not need to perform explicit localisation. Instead, a mobile robot which repeats a previously taught path can simply 'replay' the learned velocities, while using its camera information only to correct its heading relative to the intended path. To support our claim, we establish a position error model of a robot which traverses a taught path by only correcting its heading. Then, we outline a mathematical proof which shows that this position error does not diverge over time. Based on the insights from the model, we present a simple monocular teach-and-repeat navigation method. The method is computationally efficient, does not require camera calibration, and can learn and autonomously traverse arbitrarily-shaped paths. In a series of experiments, we demonstrate that the method can reliably guide mobile robots in realistic indoor and outdoor conditions, and can cope with imperfect odometry, landmark deficiency, illumination variations and naturally-occurring environment changes. Furthermore, we provide the navigation system and the datasets gathered at http://www.github.com/gestom/stroll_bearnav.

* The paper will be presented at IROS 2018 in Madrid
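The claim that heading correction alone keeps the position error bounded can be explored with a toy model. The sketch below is not the error model from the paper. It assumes, purely for illustration, that on each straight segment the error component along the direction of travel is left unchanged (velocities are replayed blindly), while the component across the path is scaled by a hypothetical factor `lateral_gain` below 1 (the effect of heading correction). Odometry noise is ignored.

```python
import math


def traverse_segment(error, heading, lateral_gain):
    """Propagate a 2D position error (ex, ey) over one straight segment.

    heading:      direction of the segment in radians
    lateral_gain: assumed shrink factor for the across-path error (0..1)
    """
    ex, ey = error
    c, s = math.cos(heading), math.sin(heading)
    along = c * ex + s * ey        # error along the segment: unchanged
    across = -s * ex + c * ey      # error across the segment: reduced
    across *= lateral_gain
    # Rotate the error back into the world frame.
    return (c * along - s * across, s * along + c * across)


def traverse_lap(error, headings, lateral_gain):
    """Apply the segment model to every segment of a path, in order."""
    for heading in headings:
        error = traverse_segment(error, heading, lateral_gain)
    return error


# A closed square path: four segments, each turned by 90 degrees.
square = [0.0, math.pi / 2, math.pi, 3 * math.pi / 2]

error = (1.0, 1.0)
norms = [math.hypot(*error)]
for _ in range(3):
    error = traverse_lap(error, square, lateral_gain=0.5)
    norms.append(math.hypot(*error))

# A path with a single heading never corrects the along-path component.
straight_only = traverse_lap((1.0, 0.0), [0.0], lateral_gain=0.5)
```

Under these assumptions the error norm shrinks on every lap of the square, because each turn converts part of the uncorrected along-path error into across-path error that the next segment reduces. On a path with a single heading the along-path error stays as it was, which in this toy model shows why changes of direction matter.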
