EU Long-term Dataset with Multiple Sensors for Autonomous Driving

Sep 07, 2019

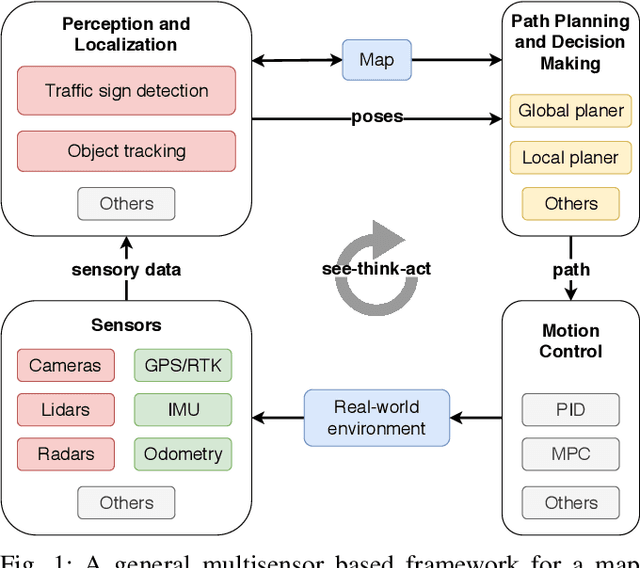

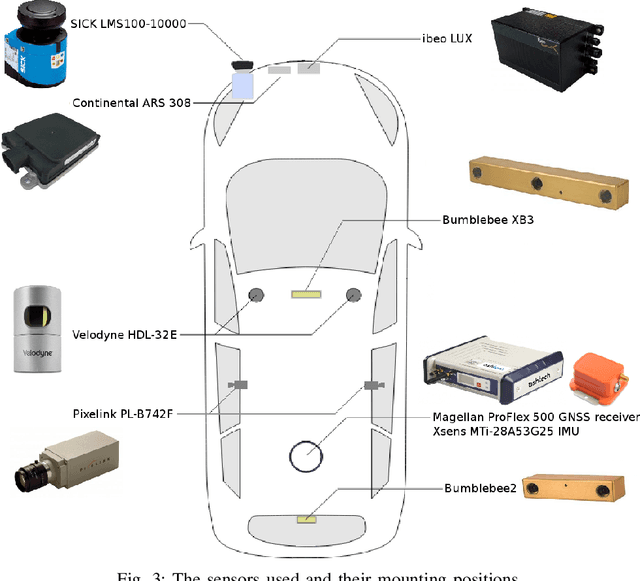

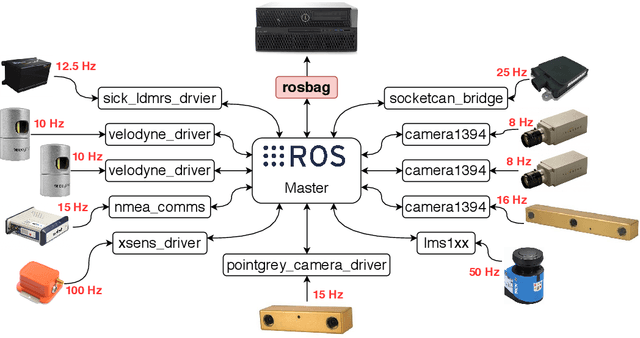

The field of autonomous driving has grown tremendously over the past few years, along with rapid progress in sensor technology. One of the major purposes of using sensors is to provide environment perception for vehicle understanding, learning and reasoning, and ultimately for interacting with the environment. In this article, we introduce a multisensor framework that allows a vehicle to perceive its surroundings and locate itself more efficiently and accurately. Our framework integrates up to eleven heterogeneous sensors, including various cameras and lidars, a radar, an IMU (Inertial Measurement Unit), and a GPS/RTK (Global Positioning System / Real-Time Kinematic), and uses ROS (Robot Operating System) based software to process the sensory data. In addition, we present a new dataset (https://epan-utbm.github.io/utbm_robocar_dataset/) for autonomous driving, collected with our instrumented vehicle and publicly available to the community. The dataset captures many new research challenges (e.g. highly dynamic environments) and is aimed especially at long-term autonomy (e.g. creating and maintaining maps).
