Martin Saska

FDA Flocking: Future Direction-Aware Flocking via Velocity Prediction

Feb 03, 2026

Abstract:Understanding self-organization in natural collectives such as bird flocks inspires swarm robotics, yet most flocking models remain reactive, overlooking anticipatory cues that enhance coordination. Motivated by avian postural and wingbeat signals, as well as multirotor attitude tilts that precede directional changes, this work introduces a principled, bio-inspired anticipatory augmentation of reactive flocking termed Future Direction-Aware (FDA) flocking. In the proposed framework, agents blend reactive alignment with a predictive term based on short-term estimates of neighbors' future velocities, regulated by a tunable blending parameter that interpolates between reactive and anticipatory behaviors. This predictive structure enhances velocity consensus and cohesion-separation balance while mitigating the adverse effects of sensing and communication delays and measurement noise that destabilize reactive baselines. Simulation results demonstrate that FDA achieves faster and higher alignment, enhanced translational displacement of the flock, and improved robustness to delays and noise compared to a purely reactive model. Future work will investigate adaptive blending strategies, weighted prediction schemes, and experimental validation on multirotor drone swarms.
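The blending idea in the FDA flocking abstract can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration, not the paper's formulation: the constant-acceleration predictor, the function names `predict_velocity` and `fda_alignment`, the `horizon` parameter, and the use of a plain neighbor average. Only the notion of a blending parameter (called `beta` here) that interpolates between reactive and anticipatory alignment comes from the abstract.

```python
import numpy as np


def predict_velocity(v_now, v_prev, dt, horizon):
    """Extrapolate velocities `horizon` seconds ahead.

    Assumed predictor: constant acceleration estimated from two samples.
    """
    accel = (v_now - v_prev) / dt
    return v_now + accel * horizon


def fda_alignment(v_self, nbr_now, nbr_prev, dt, horizon, beta):
    """Blend a reactive and an anticipatory alignment term.

    beta = 0 gives purely reactive alignment (current neighbor velocities),
    beta = 1 gives purely anticipatory alignment (predicted velocities).
    """
    v_self = np.asarray(v_self, dtype=float)
    nbr_now = np.asarray(nbr_now, dtype=float)
    nbr_prev = np.asarray(nbr_prev, dtype=float)

    # Reactive term: steer toward the neighbors' current mean velocity.
    reactive = nbr_now.mean(axis=0) - v_self

    # Anticipatory term: steer toward the neighbors' predicted mean velocity.
    predicted = predict_velocity(nbr_now, nbr_prev, dt, horizon)
    anticipatory = predicted.mean(axis=0) - v_self

    return (1.0 - beta) * reactive + beta * anticipatory
```

With two neighbors accelerating from rest to 1 m/s along x over one second, `beta = 0` yields a command toward their current velocity, while `beta = 1` yields a command toward where their velocity is expected to be one horizon later.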

Vision-only UAV State Estimation for Fast Flights Without External Localization Systems: A2RL Drone Racing Finalist Approach

Feb 02, 2026

Abstract:Fast flights with aggressive maneuvers in cluttered GNSS-denied environments require fast, reliable, and accurate UAV state estimation. In this paper, we present an approach for onboard state estimation of a high-speed UAV using a monocular RGB camera and an IMU. Our approach fuses data from Visual-Inertial Odometry (VIO), an onboard landmark-based camera measurement system, and an IMU to produce an accurate state estimate. Using onboard measurement data, we estimate and compensate for VIO drift through a novel mathematical drift model. State-of-the-art approaches often rely on more complex hardware (e.g., stereo cameras or rangefinders) and use uncorrected drifting VIO velocities, orientation, and angular rates, leading to errors during fast maneuvers. In contrast, our method corrects all VIO states (position, orientation, linear and angular velocity), resulting in accurate state estimation even during rapid and dynamic motion. Our approach was thoroughly validated through 1600 simulations and numerous real-world experiments. Furthermore, we applied the proposed method in the A2RL Drone Racing Challenge 2025, where our team advanced to the final four out of 210 teams and earned a medal.

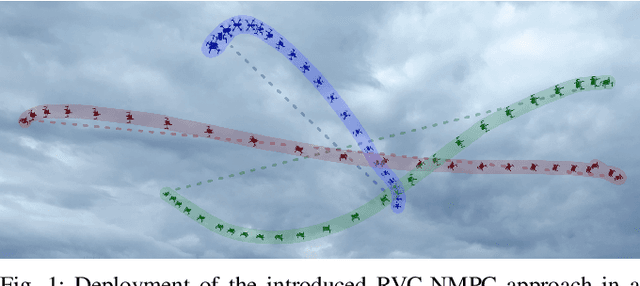

RVC-NMPC: Nonlinear Model Predictive Control with Reciprocal Velocity Constraints for Mutual Collision Avoidance in Agile UAV Flight

Dec 09, 2025

Abstract:This paper presents an approach to mutual collision avoidance based on Nonlinear Model Predictive Control (NMPC) with time-dependent Reciprocal Velocity Constraints (RVCs). Unlike most existing methods, the proposed approach relies solely on observable information about other robots, eliminating the need for excessive communication. The computationally efficient algorithm for computing RVCs, together with the direct integration of these constraints into the NMPC problem formulation at the controller level, allows the whole pipeline to run at 100 Hz. This high processing rate, combined with the modeled nonlinear dynamics of the controlled Uncrewed Aerial Vehicles (UAVs), is a key feature that facilitates the use of the proposed approach for agile UAV flight. The proposed approach was evaluated through extensive simulations emulating real-world conditions in scenarios involving up to 10 UAVs and velocities of up to 25 m/s, and in real-world experiments with accelerations of up to 30 m/s$^2$. Comparison with the state of the art shows a 31% improvement in terms of flight time reduction in challenging scenarios, while maintaining collision-free navigation in all trials.

RADRON: Cooperative Localization of Ionizing Radiation Sources by MAVs with Compton Cameras

Oct 29, 2025

Abstract:We present a novel approach to localizing radioactive material by cooperating Micro Aerial Vehicles (MAVs). Our approach utilizes a state-of-the-art single-detector Compton camera as a highly sensitive, yet miniature detector of ionizing radiation. The detector's exceptionally low weight (40 g) opens up new possibilities of radiation detection by a team of cooperating agile MAVs. We propose a new fundamental concept of fusing the Compton camera measurements to estimate the position of the radiation source in real time even from extremely sparse measurements. The data readout and processing are performed directly onboard and the results are used in a dynamic feedback to drive the motion of the vehicles. The MAVs are stabilized in a tightly cooperating swarm to maximize the information gained by the Compton cameras, rapidly locate the radiation source, and even track a moving radiation source.

Degradation-Aware Cooperative Multi-Modal GNSS-Denied Localization Leveraging LiDAR-Based Robot Detections

Oct 23, 2025

Abstract:Accurate long-term localization using onboard sensors is crucial for robots operating in Global Navigation Satellite System (GNSS)-denied environments. While complementary sensors mitigate individual degradations, carrying all the available sensor types on a single robot significantly increases the size, weight, and power demands. Distributing sensors across multiple robots enhances the deployability but introduces challenges in fusing asynchronous, multi-modal data from independently moving platforms. We propose a novel adaptive multi-modal multi-robot cooperative localization approach using a factor-graph formulation to fuse asynchronous Visual-Inertial Odometry (VIO), LiDAR-Inertial Odometry (LIO), and 3D inter-robot detections from distinct robots in a loosely-coupled fashion. The approach adapts to changing conditions, leveraging reliable data to assist robots affected by sensory degradations. A novel interpolation-based factor enables fusion of the unsynchronized measurements. LIO degradations are evaluated based on the approximate scan-matching Hessian. A novel approach of weighting odometry data proportionally to the Wasserstein distance between the consecutive VIO outputs is proposed. A theoretical analysis is provided, investigating the cooperative localization problem under various conditions, mainly in the presence of sensory degradations. The proposed method has been extensively evaluated on real-world data gathered with heterogeneous teams of an Unmanned Ground Vehicle (UGV) and Unmanned Aerial Vehicles (UAVs), showing that the approach provides significant improvements in localization accuracy in the presence of various sensory degradations.
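To make the Wasserstein-based weighting more concrete, the sketch below computes the 2-Wasserstein distance between two Gaussian estimates, which has a known closed form. Treating consecutive VIO outputs as Gaussians (a mean and a covariance) is an assumption made here, as are the function names `sqrtm_psd` and `gaussian_w2`. How the distance is mapped to an odometry weight is not stated in the abstract and is deliberately left out.

```python
import numpy as np


def sqrtm_psd(mat):
    """Square root of a symmetric positive semi-definite matrix."""
    vals, vecs = np.linalg.eigh(mat)
    vals = np.clip(vals, 0.0, None)  # guard against tiny negative eigenvalues
    return (vecs * np.sqrt(vals)) @ vecs.T


def gaussian_w2(mean_a, cov_a, mean_b, cov_b):
    """2-Wasserstein distance between N(mean_a, cov_a) and N(mean_b, cov_b).

    Closed form: W^2 = |m_a - m_b|^2
                       + tr(C_a + C_b - 2 (C_b^{1/2} C_a C_b^{1/2})^{1/2}).
    """
    mean_a = np.asarray(mean_a, dtype=float)
    mean_b = np.asarray(mean_b, dtype=float)
    cov_a = np.asarray(cov_a, dtype=float)
    cov_b = np.asarray(cov_b, dtype=float)

    root_b = sqrtm_psd(cov_b)
    cross = sqrtm_psd(root_b @ cov_a @ root_b)
    squared = np.sum((mean_a - mean_b) ** 2) + np.trace(cov_a + cov_b - 2.0 * cross)
    return float(np.sqrt(max(squared, 0.0)))  # clamp rounding noise below zero
```

A large distance between consecutive outputs indicates that either the estimate or its reported uncertainty changed abruptly, which is the kind of signal a degradation-aware weighting could react to.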

On rapid parallel tuning of controllers of a swarm of MAVs -- distribution strategies of the updated gains

May 12, 2025

Abstract:In this paper, we present a reliable, scalable, time-deterministic, model-free procedure to tune swarms of Micro Aerial Vehicles (MAVs) using basic sensory data. Two approaches to taking advantage of parallel tuning are presented. The first averages the performance indices reported by swarm members flying with identical gains, which decreases the negative effect of measurement noise. The second tests varying sets of gains in parallel across the swarm, which reduces the tuning time. The presented methods were evaluated both in simulation and in real-world experiments. The achieved results show the ability of the proposed approach to improve the results of the tuning while decreasing the tuning time, ensuring at the same time a reliable tuning mechanism.

* 7 pages, 7 figures

Swarming in the Wild: A Distributed Communication-less Lloyd-based Algorithm dealing with Uncertainties

Apr 26, 2025

Abstract:In this work, we present a distributed algorithm for swarming in complex environments that operates with no communication, no a priori information about the environment, and using only onboard sensing and computation capabilities. We provide sufficient conditions to guarantee that each robot reaches its goal region in a finite time, avoiding collisions with obstacles and other robots without exceeding a desired maximum distance from a predefined set of neighbors (flocking constraint). In addition, we show how the proposed algorithm can deal with tracking errors and onboard sensing errors without violating safety and proximity constraints, still providing the conditions for convergence towards the goal region. To validate the approach, we provide experiments in the field. We tested our algorithm in a GNSS-denied environment, i.e., a dense forest, where fully autonomous aerial robots swarmed safely to the desired destinations by relying only on onboard sensors, i.e., without a communication network. This work marks the initial deployment of a fully distributed system with no communication between the robots and no reliance on any global localization system, which at the same time ensures safety and convergence towards the goal within such complex environments.

Aerial Robots Persistent Monitoring and Target Detection: Deployment and Assessment in the Field

Apr 26, 2025

Abstract:In this manuscript, we present a distributed algorithm for multi-robot persistent monitoring and target detection. In particular, we propose a novel solution that effectively integrates the Time-inverted Kuramoto model, three-dimensional Lissajous curves, and Model Predictive Control. We focus on the implementation of this algorithm on aerial robots, addressing the practical challenges involved in deploying our approach under real-world conditions. Our method ensures an effective and robust solution that maintains operational efficiency even in the presence of what we define as type I and type II failures. Type I failures refer to short-time disruptions, such as tracking errors and communication delays, while type II failures account for long-time disruptions, including malicious attacks, severe communication failures, and battery depletion. Our approach guarantees persistent monitoring and target detection despite these challenges. Furthermore, we validate our method with extensive field experiments involving up to eleven aerial robots, demonstrating the effectiveness, resilience, and scalability of our solution.
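For readers unfamiliar with the three-dimensional Lissajous curves mentioned in this abstract, a minimal sketch of sampling one is given below. Each coordinate is a sinusoid with its own amplitude, angular frequency, and phase. The function name `lissajous_3d`, the default parameter values, and the `center` offset are illustrative assumptions and are not taken from the paper; the coupling with the Kuramoto model and Model Predictive Control is not shown.

```python
import numpy as np


def lissajous_3d(t,
                 amplitudes=(10.0, 10.0, 2.0),
                 freqs=(3.0, 2.0, 1.0),
                 phases=(np.pi / 2, 0.0, 0.0),
                 center=(0.0, 0.0, 5.0)):
    """Sample a 3D Lissajous curve at time(s) t.

    Each axis follows center_i + A_i * sin(w_i * t + phi_i).
    Returns shape (3,) for scalar t and (N, 3) for an array of N times.
    """
    t = np.asarray(t, dtype=float)[..., None]  # add a trailing axis for x, y, z
    amplitudes = np.asarray(amplitudes, dtype=float)
    freqs = np.asarray(freqs, dtype=float)
    phases = np.asarray(phases, dtype=float)
    center = np.asarray(center, dtype=float)
    return center + amplitudes * np.sin(freqs * t + phases)


# Sample one period of the slowest axis as candidate monitoring waypoints.
waypoints = lissajous_3d(np.linspace(0.0, 2.0 * np.pi, 200))
```

Because every coordinate is bounded by its amplitude, the curve stays inside a box around `center`, which makes it a convenient reference for repeatedly covering a fixed volume.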

Variable Time-Step MPC for Agile Multi-Rotor UAV Interception of Dynamic Targets

Mar 18, 2025

Abstract:Agile trajectory planning can improve the efficiency of multi-rotor Uncrewed Aerial Vehicles (UAVs) in scenarios with combined task-oriented and kinematic trajectory planning, such as monitoring spatio-temporal phenomena or intercepting dynamic targets. Agile planning using existing non-linear model predictive control methods is limited by the number of planning steps as it becomes increasingly computationally demanding. That reduces the prediction horizon length, leading to a decrease in solution quality. Besides, the fixed time-step length limits the utilization of the available UAV dynamics in the target neighborhood. In this paper, we propose to address these limitations by introducing variable time steps and coupling them with the prediction horizon length. A simplified point-mass motion primitive is used to leverage the differential flatness of quadrotor dynamics and the generation of feasible trajectories in the flat output space. Based on the presented evaluation results and experimentally validated deployment, the proposed method increases the solution quality by enabling planning for long flight segments but allowing tightly sampled maneuvering.

CurviTrack: Curvilinear Trajectory Tracking for High-speed Chase of a USV

Feb 28, 2025

Abstract:Heterogeneous robot teams used in marine environments incur time-and-energy penalties when the marine vehicle has to halt the mission to allow the autonomous aerial vehicle to land for recharging. In this paper, we present a solution to this problem using a novel drag-aware model formulation coupled with MPC, which enables tracking and landing during high-speed curvilinear trajectories of a USV without any communication. Compared to the state of the art, our approach yields a 40% decrease in prediction errors and provides a 3-fold increase in certainty of predictions. Consequently, this leads to a 30% improvement in tracking performance and 40% higher success in landing on a moving USV, even during aggressive turns that are infeasible for conventional marine missions. We test our approach in two different real-world scenarios with marine vessels of two different sizes and further solidify our results through statistical analysis in simulation to demonstrate the robustness of our method.
