Tiago Nascimento

CurviTrack: Curvilinear Trajectory Tracking for High-speed Chase of a USV

Feb 28, 2025

Abstract:Heterogeneous robot teams used in marine environments incur time-and-energy penalties when the marine vehicle has to halt the mission to allow the autonomous aerial vehicle to land for recharging. In this paper, we present a solution to this problem using a novel drag-aware model formulation coupled with MPC, which enables tracking and landing during high-speed curvilinear trajectories of a USV without any communication. Compared to the state-of-the-art, our approach yields a 40% decrease in prediction errors and a 3-fold increase in the certainty of predictions. Consequently, this leads to a 30% improvement in tracking performance and 40% higher success in landing on a moving USV, even during aggressive turns that are infeasible for conventional marine missions. We test our approach in two different real-world scenarios with marine vessels of two different sizes and further solidify our results through statistical analysis in simulation to demonstrate the robustness of our method.

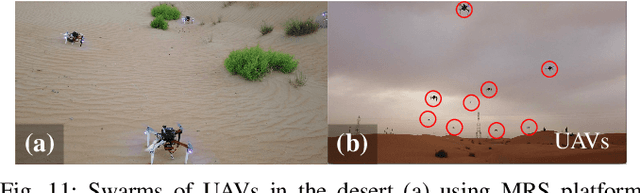

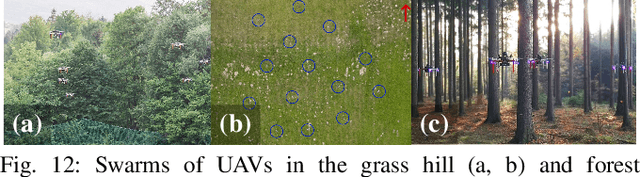

A Minimalistic 3D Self-Organized UAV Flocking Approach for Desert Exploration

Dec 03, 2024

Abstract:In this work, we propose a minimalistic swarm flocking approach for multirotor unmanned aerial vehicles (UAVs). Our approach allows the swarm to achieve cohesive and aligned flocking (collective motion), in a random direction, without externally provided directional information exchange (alignment control). The method relies on minimalistic sensory requirements, as it uses only the relative range and bearing of swarm agents in local proximity, obtained through onboard sensors on the UAV. Thus, our method is able to stabilize and control a flock of a general shape above steep terrain without any explicit communication between swarm members. To implement proximal control in a three-dimensional manner, the Lennard-Jones potential function is used to maintain cohesiveness and avoid collisions between robots. The performance of the proposed approach was tested in real-world conditions by experiments with a team of nine UAVs. The experiments also demonstrate the use of our approach on UAVs that are independent of external positioning systems such as the Global Navigation Satellite System (GNSS). Relying only on relative visual localization through the ultraviolet direction and ranging (UVDAR) system, previously proposed by our group, the experiments verify that our system can be applied in GNSS-denied environments. The achieved degree of alignment and cohesiveness was evaluated using the metrics of order and steady-state value.
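The proximal control idea mentioned in this abstract can be illustrated with a minimal sketch. It assumes the textbook 12-6 Lennard-Jones form and hypothetical parameter names (`epsilon`, `sigma`); the abstract does not state which variant or which gains the authors use, so this is not their controller. Each neighbour is given only by range, azimuth and elevation, matching the range-and-bearing sensing described above.

```python
import math

def lj_potential(r, epsilon=1.0, sigma=1.0):
    """Textbook 12-6 Lennard-Jones potential (assumed form)."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)

def lj_force(r, epsilon=1.0, sigma=1.0):
    """Radial force -dV/dr: positive is repulsive, negative is attractive."""
    sr6 = (sigma / r) ** 6
    return 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r

def proximal_vector(neighbors, epsilon=1.0, sigma=1.0):
    """Sum the pairwise forces over neighbours given as
    (range, azimuth, elevation) tuples in the agent's own frame."""
    fx = fy = fz = 0.0
    for r, az, el in neighbors:
        f = lj_force(r, epsilon, sigma)
        # Unit vector pointing from this agent toward the neighbour.
        ux = math.cos(el) * math.cos(az)
        uy = math.cos(el) * math.sin(az)
        uz = math.sin(el)
        # A repulsive (positive) force pushes away from the neighbour.
        fx -= f * ux
        fy -= f * uy
        fz -= f * uz
    return fx, fy, fz
```

In this form the force vanishes at a separation of `2 ** (1 / 6) * sigma`, so `sigma` sets the preferred inter-agent distance: closer neighbours repel (collision avoidance) and farther ones attract (cohesion).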

A Generalized Thrust Estimation and Control Approach for Multirotors Micro Aerial Vehicles

Dec 03, 2024

Abstract:This paper addresses the problem of thrust estimation and control for the rotors of small-sized multirotor Uncrewed Aerial Vehicles (UAVs). Accurate control of the thrust generated by each rotor during flight is one of the main challenges for robust control of quadrotors. The most common approach is to approximate the mapping of rotor speed to thrust with a simple quadratic model. This model is known to fail under non-hovering flight conditions, introducing errors into the control pipeline. One of the approaches to modeling the aerodynamics around the propellers is the Blade Element Momentum Theory (BEMT). Here, we propose a novel BEMT-based closed-loop thrust estimator and controller to eliminate the laborious calibration step of finding several aerodynamic coefficients. We aim to reuse known values as a baseline and fit the thrust estimate to values closest to the real ones with a simple test bench experiment, resulting in a single scaling value. A feedforward PID thrust control was implemented for each rotor, and the methods were validated by outdoor experiments with two multirotor UAV platforms: 250 mm and 500 mm. A statistical analysis of the results showed that the thrust estimation and control provided better robustness under aerodynamically varying flight conditions compared to the quadratic model.
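Two ideas from this abstract can be sketched briefly: the quadratic rotor-speed-to-thrust baseline and a single scaling value fitted from test-bench data. The sketch assumes the common form T = k_t * omega^2 and a one-parameter least-squares fit; the function names, the coefficient `k_t` and the fitting rule are illustrative assumptions, and the BEMT estimator itself is not reproduced here.

```python
def quadratic_thrust(omega, k_t):
    """Baseline model: thrust proportional to rotor speed squared.
    k_t is a hypothetical thrust coefficient."""
    return k_t * omega ** 2

def fit_scale(estimates, measurements):
    """Least-squares scale s minimising sum((s * e - m) ** 2).
    This is one plausible way to obtain a single scaling value;
    the paper's exact fitting procedure is not given in the abstract."""
    num = sum(e * m for e, m in zip(estimates, measurements))
    den = sum(e * e for e in estimates)
    if den == 0.0:
        raise ValueError("estimates must not all be zero")
    return num / den
```

For example, if bench measurements are consistently 20% above the model estimates, `fit_scale` returns a value close to 1.2, which can then multiply every subsequent estimate.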

Proximal Control of UAVs with Federated Learning for Human-Robot Collaborative Domains

Dec 03, 2024

Abstract:Human-robot interaction (HRI) is a growing area of research. In HRI, complex command (action) classification is still an open problem that usually prevents the real applicability of such a technique. The literature presents some works that use neural networks to detect these actions. However, occlusion is still a major issue in HRI, especially when using uncrewed aerial vehicles (UAVs), since, during the robot's movement, the human operator is often out of the robot's field of view. Furthermore, in multi-robot scenarios, distributed training is also an open problem. To address these issues, this work proposes an action recognition and control approach based on two-layer Long Short-Term Memory (LSTM) Deep Neural Networks combined with three densely connected layers and Federated Learning (FL), embedded in multiple drones. FL enabled our approach to be trained in a distributed fashion, i.e., with access to data without the need for cloud or other repositories, which facilitates the multi-robot system's learning. Furthermore, our multi-robot approach also prevented occlusion situations, and experiments with real robots achieved an accuracy greater than 96%.

Intuitive Human-Robot Interface: A 3-Dimensional Action Recognition and UAV Collaboration Framework

Aug 17, 2024

Abstract:Harnessing human movements to command an Unmanned Aerial Vehicle (UAV) holds the potential to revolutionize their deployment, rendering it more intuitive and user-centric. In this research, we introduce a novel methodology adept at classifying three-dimensional human actions, leveraging them to coordinate on-field with a UAV. Utilizing a stereo camera, we derive both RGB and depth data, subsequently extracting three-dimensional human poses from the continuous video feed. This data is then processed through our proposed k-nearest neighbour classifier, the results of which dictate the behaviour of the UAV. It also includes mechanisms ensuring the robot perpetually maintains the human within its visual purview, adeptly tracking user movements. We subjected our approach to rigorous testing involving multiple tests with real robots. The ensuing results, coupled with comprehensive analysis, underscore the efficacy and inherent advantages of our proposed methodology.
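The classification step named in this abstract can be illustrated with a generic k-nearest neighbour sketch. It assumes each pose has already been flattened into a fixed-length feature vector and uses Euclidean distance with majority voting; the feature layout, the value of `k` and the action labels are hypothetical, and the authors' proposed classifier may differ.

```python
import math
from collections import Counter

def knn_classify(query, samples, k=3):
    """Return the majority label among the k samples nearest to query.
    samples is a list of (feature_vector, label) pairs."""
    ranked = sorted(samples, key=lambda s: math.dist(query, s[0]))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]

# Hypothetical training poses reduced to 3-D feature vectors.
training = [
    ((0.0, 0.0, 0.0), "land"),
    ((0.1, 0.0, 0.0), "land"),
    ((0.0, 0.1, 0.0), "land"),
    ((1.0, 1.0, 1.0), "follow"),
    ((1.1, 1.0, 1.0), "follow"),
    ((1.0, 0.9, 1.0), "follow"),
]
```

Calling `knn_classify((0.05, 0.05, 0.0), training)` selects the label of the nearby cluster, which in a system like the one described would then be mapped to a UAV behaviour.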

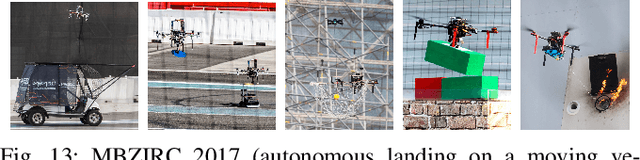

MRS Drone: A Modular Platform for Real-World Deployment of Aerial Multi-Robot Systems

Jun 12, 2023

Abstract:This paper presents a modular autonomous Unmanned Aerial Vehicle (UAV) platform called the Multi-robot Systems (MRS) Drone that can be used in a large range of indoor and outdoor applications. The MRS Drone features unique modularity with respect to changes in actuators, frames, and sensory configuration. As the name suggests, the platform is specially tailored for deployment within an MRS group. The MRS Drone contributes to the state-of-the-art of UAV platforms by allowing smooth real-world deployment of multiple aerial robots, as well as by outperforming other platforms with its modularity. For real-world multi-robot deployment in various applications, the platform is easy to both assemble and modify. Moreover, it is accompanied by a realistic simulator to enable safe pre-flight testing and a smooth transition to complex real-world experiments. In this manuscript, we present mechanical and electrical designs, software architecture, and technical specifications to build a fully autonomous multi-UAV system. Finally, we demonstrate the full capabilities and the unique modularity of the MRS Drone in various real-world applications that required a diverse range of platform configurations.

MRS Modular UAV Hardware Platforms for Supporting Research in Real-World Outdoor and Indoor Environments

Feb 09, 2023

Abstract:This paper presents a family of autonomous Unmanned Aerial Vehicle (UAV) platforms designed for a diverse range of indoor and outdoor applications. The proposed UAV design is highly modular in terms of the actuators, sensor configurations, and even UAV frames used. This makes it possible to achieve, with minimal effort, a proper experimental setup for single-robot as well as multi-robot scenarios. The presented platforms are intended to facilitate the transition from simulations and simplified laboratory experiments to the deployment of aerial robots in uncertain and hard-to-model real-world conditions. We present mechanical designs, electric configurations, and dynamic models of the UAVs, followed by numerous recommendations and technical details required for building such a fully autonomous UAV system for experimental verification of scientific achievements. To show the strength and high variability of the proposed system, we present results of tens of completely different real-robot experiments in various environments using distinct actuator and sensory configurations.

* 10 pages, 17 figures, conference

A Multi-Layer Software Architecture for Aerial Cognitive Multi-Robot Systems in Power Line Inspection Tasks

Feb 09, 2023

Abstract:This paper presents a multi-layer software architecture to perform cooperative missions with a fleet of quadrotors providing support in electrical power line inspection operations. The proposed software framework guarantees compliance with safety requirements between drones and human workers while ensuring that the mission is carried out successfully. In addition, cognitive capabilities are integrated into the multi-vehicle system in order to respond to unforeseen events and external disturbances. The feasibility and effectiveness of the proposed architecture are demonstrated by means of realistic simulations.

* 6 pages, 7 figures, conference

Landing a UAV in Harsh Winds and Turbulent Open Waters

Dec 31, 2022

Abstract:Landing an unmanned aerial vehicle (UAV) on top of an unmanned surface vehicle (USV) in harsh open waters is a challenging problem, owing to forces that can damage the UAV due to a severe roll and/or pitch angle of the USV during touchdown. To tackle this, we propose a novel model predictive control (MPC) approach enabling a UAV to land autonomously on a USV in these harsh conditions. The MPC employs a novel objective function and an online decomposition of the oscillatory motion of the vessel to predict, attempt, and accomplish the landing during near-zero tilt of the landing platform. The nonlinear prediction of the motion of the vessel is performed using visual data from an onboard camera. Therefore, the system does not require any communication with the USV or a control station. The proposed method was analyzed in numerous robotics simulations in harsh and extreme conditions and further validated in various real-world scenarios.
