Anibal Ollero

GRVC Robotics Lab, Seville, Spain

Range-Only Localization System for Small-Scale Flapping-Wing Robots

Jan 02, 2025

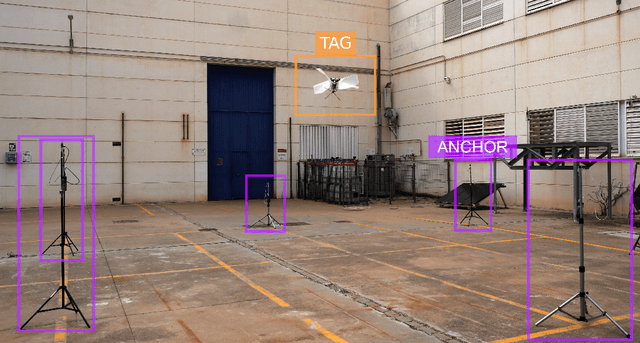

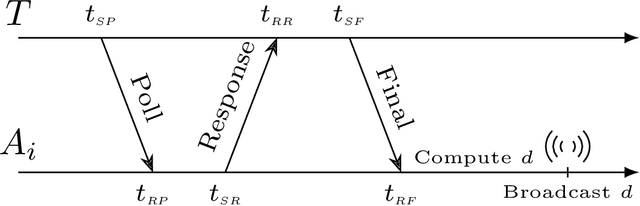

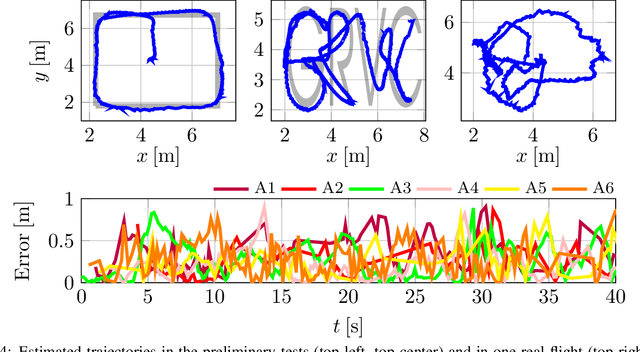

Abstract: The design of localization systems for small-scale flapping-wing aerial robots faces significant challenges caused by their limited payload and onboard computational resources. This paper presents an ultra-wideband localization system designed specifically for small-scale flapping-wing robots. The solution relies on custom 5-gram ultra-wideband sensors and provides robust, highly efficient (in terms of both computation and energy consumption), and accurate (mean error of 0.28 meters) 3D position estimation. We validate our system using a Flapper Nimble+ flapping-wing robot.
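The abstract does not state which estimator turns UWB ranges into a 3D position. As a generic illustration of range-only localization (not the authors' method), the sketch below solves classic linearized multilateration: subtracting one sphere equation from the others cancels the quadratic term, leaving a small least-squares problem. The anchor layout and function names are hypothetical, the ranges are noise-free, and a real onboard system with noisy ranges would typically add filtering on top of this.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _solve3(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve the 3x3 system m @ x = v by Gaussian elimination with partial pivoting."""
    a = [list(row) + [v[i]] for i, row in enumerate(m)]  # augmented matrix [m | v]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("degenerate anchor geometry (e.g. coplanar anchors)")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):  # back substitution
        x[r] = (a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))) / a[r][r]
    return x


def multilaterate(anchors: Sequence[Vec3], ranges: Sequence[float]) -> Vec3:
    """Least-squares 3D position from ranges to >= 4 non-coplanar anchors.

    Subtracting |p - a_0|^2 = r_0^2 from each |p - a_i|^2 = r_i^2 cancels |p|^2
    and leaves the linear system
    2 (a_i - a_0) . p = r_0^2 - r_i^2 + |a_i|^2 - |a_0|^2.
    """
    if len(anchors) != len(ranges) or len(anchors) < 4:
        raise ValueError("need at least 4 anchors and one range per anchor")
    a0, r0 = anchors[0], ranges[0]
    n0 = sum(c * c for c in a0)
    rows, rhs = [], []
    for ai, ri in zip(anchors[1:], ranges[1:]):
        rows.append([2.0 * (ai[k] - a0[k]) for k in range(3)])
        rhs.append(r0 * r0 - ri * ri + sum(c * c for c in ai) - n0)
    # Normal equations (A^T A) p = A^T b give the least-squares solution.
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(3)] for i in range(3)]
    atb = [sum(row[i] * b for row, b in zip(rows, rhs)) for i in range(3)]
    x, y, z = _solve3(ata, atb)
    return (x, y, z)


# Usage with hypothetical anchor positions (meters) and noise-free ranges.
anchors = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 4.0, 0.0), (0.0, 0.0, 3.0)]
true_pos = (1.0, 2.0, 1.0)
ranges = [math.dist(a, true_pos) for a in anchors]
print(multilaterate(anchors, ranges))  # approximately (1.0, 2.0, 1.0)
```

At least four non-coplanar anchors are needed for a unique 3D fix; with coplanar anchors the normal matrix is singular and the sketch raises an error.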

On the Benefits of Visual Stabilization for Frame- and Event-based Perception

Aug 28, 2024

Abstract: In many robot applications, vision-based perception systems are exposed to large orientation changes. Under such conditions, their performance may be compromised by the inherent complexity of processing data captured under challenging motion. Integrating mechanical stabilizers to compensate for camera rotation is not always possible because of robot payload constraints. This paper presents a processing-based stabilization approach that compensates for the camera's rotational motion on both events and frames (i.e., images). Assuming that the camera's attitude is available, we evaluate the benefits of stabilization in two perception applications: feature tracking and estimation of the translational component of the camera's ego-motion. The validation is performed using synthetic data and sequences from well-known event-based vision datasets. The experiments show that stabilization can improve feature tracking accuracy by 27.37% and camera ego-motion estimation accuracy by 34.82%. In addition, stabilization can reduce the processing time for computing the camera's linear velocity by at least 25%. Code is available at https://github.com/tub-rip/visual_stabilization
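When the camera attitude is known, as the abstract assumes, compensating pure rotation for a pinhole camera comes down to the homography x' ~ K R K^-1 x. The sketch below applies that generic derotation to event coordinates. It is not the authors' implementation (their code is in the linked repository). It assumes hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`), a single rotation `r_ref_cam` for the whole batch, and that `r_ref_cam` maps bearings from the current camera frame into the chosen reference frame.

```python
import math
from typing import List, Sequence, Tuple

Mat3 = Sequence[Sequence[float]]
Event = Tuple[float, float, float, int]  # (u, v, timestamp_s, polarity)


def rot_y(theta: float) -> List[List[float]]:
    """Rotation matrix about the camera's y axis by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def transpose(m: Mat3) -> List[List[float]]:
    return [[m[j][i] for j in range(3)] for i in range(3)]


def derotate_pixel(u: float, v: float, r_ref_cam: Mat3,
                   fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Map a pixel into the rotation-compensated reference view: x' ~ K R K^-1 x."""
    b = ((u - cx) / fx, (v - cy) / fy, 1.0)  # back-project to a bearing vector
    # Rotate the bearing into the reference frame.
    br = [sum(r_ref_cam[i][k] * b[k] for k in range(3)) for i in range(3)]
    if br[2] <= 1e-9:
        raise ValueError("bearing falls behind the reference image plane")
    return (fx * br[0] / br[2] + cx, fy * br[1] / br[2] + cy)  # re-project


def stabilize_events(events: Sequence[Event], r_ref_cam: Mat3,
                     fx: float, fy: float, cx: float, cy: float) -> List[Event]:
    """Warp event coordinates; timestamps and polarities are kept unchanged."""
    out = []
    for u, v, t, p in events:
        us, vs = derotate_pixel(u, v, r_ref_cam, fx, fy, cx, cy)
        out.append((us, vs, t, p))
    return out


# Hypothetical intrinsics and a single attitude for the whole event batch.
fx = fy = 200.0
cx, cy = 120.0, 90.0
r = rot_y(math.atan(0.1))
print(stabilize_events([(120.0, 90.0, 0.0, 1)], r, fx, fy, cx, cy))
# the principal point moves to roughly u = 140, v = 90
```

In practice, the attitude would be interpolated at each event's timestamp, and frames would be warped with inverse mapping plus pixel interpolation rather than by forward-mapping each pixel.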

* 8 pages, 4 figures, 4 tables, https://github.com/tub-rip/visual_stabilization

AERIAL-CORE: AI-Powered Aerial Robots for Inspection and Maintenance of Electrical Power Infrastructures

Jan 04, 2024

Abstract: Large-scale infrastructures are prone to deterioration due to age, environmental influences, and heavy usage. Ensuring their safety through regular inspections and maintenance is crucial to prevent incidents that can significantly affect public safety and the environment. This is especially pertinent for electrical power networks, which, while essential for energy provision, can also be sources of forest fires. Intelligent drones have the potential to revolutionize inspection and maintenance by eliminating risks for human operators, increasing productivity, reducing inspection time, and improving data collection quality. However, most current methods and technologies in aerial robotics have been trialed primarily in indoor testbeds or in outdoor settings under strictly controlled conditions, always within the line of sight of human operators. Moreover, these methods and technologies have typically been evaluated in isolation, without comprehensive integration. This paper introduces the first autonomous system that combines various innovative aerial robots. The system is designed for extended-range inspections beyond the visual line of sight, features aerial manipulators for maintenance tasks, and includes support mechanisms for human operators working at height. The paper further discusses the successful validation of this system on numerous electrical power lines, with aerial robots executing flights more than 10 kilometers away from their ground control stations.

A novel concept for Titan robotic exploration based on soft morphing aerial robots

Nov 15, 2023

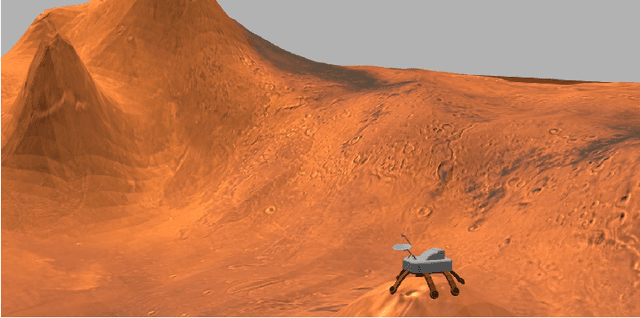

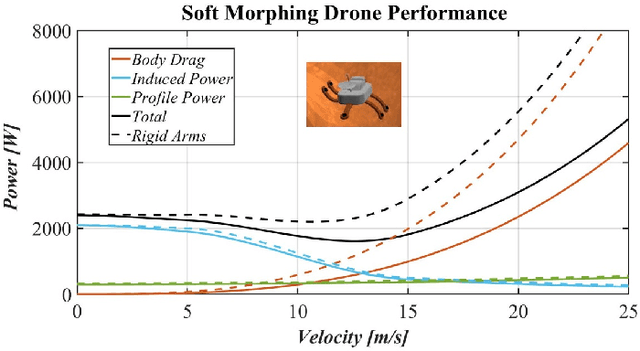

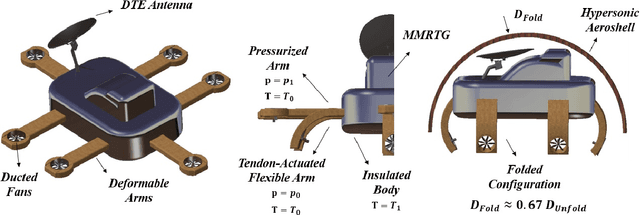

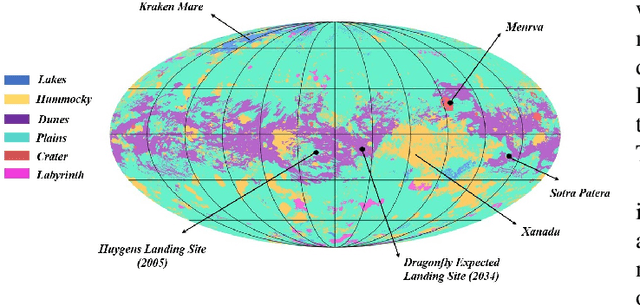

Abstract: This work introduces a novel approach to Titan exploration based on soft morphing aerial robots that leverage flexible adaptive materials. The controlled deformation of the multirotor arms, actuated by a combination of a pneumatic system and a tendon mechanism, enables the explorer robot to perform full-body perching and to land on rocky, irregular, or uneven terrain, thus unlocking new exploration horizons. In addition, after landing, the arms can be used for efficient sampling as tendon-driven continuum manipulators, with the pneumatic system drawing in the samples. The proposed arms enable the drone to cover long distances in Titan's atmosphere efficiently by directing rotor thrust without rotating the body, which reduces aerodynamic drag. Because the exploration concept is envisioned as a rotorcraft planetary lander, the robot's folding features enable a reduction of more than 30% in the diameter of the hypersonic aeroshell. Building on this folding capability, the arms can partially morph in flight to navigate tight spaces. For propulsion, the rotor design, justified through CFD simulations, uses a ducted-fan configuration tailored to Titan's high Reynolds numbers. The rotors are integrated within the robot's deformable materials, facilitating smooth interactions with the environment. The research highlights exploration simulations in the Gazebo environment, focusing on the Sotra-Patera cryovolcano region, a location with the potential to clarify Titan's unique methane cycle and its Earth-like features. This work addresses one of the primary challenges of the concept by testing the behavior of small-scale deformable arms under conditions mimicking those of Titan. Groundbreaking experiments with liquid nitrogen at cryogenic temperatures were conducted on various materials, with Teflon (PTFE) at low infill rates (15-30%) emerging as a promising option.

A Comparison between Frame-based and Event-based Cameras for Flapping-Wing Robot Perception

Sep 11, 2023

Abstract: Perception systems for ornithopters face severe challenges. The harsh vibrations and abrupt movements caused by flapping tend to produce motion blur and strong changes in lighting conditions. Strict restrictions on weight, size, and energy consumption also limit the type and number of sensors that can be mounted onboard. Lightweight traditional cameras have become a standard off-the-shelf solution in many flapping-wing designs. However, bioinspired event cameras are a promising solution for ornithopter perception due to their microsecond temporal resolution, high dynamic range, and low power consumption. This paper presents an experimental comparison between a frame-based and an event-based camera. Both technologies are analyzed with respect to the particular specifications of flapping-wing robots, and the performance of well-known vision algorithms is evaluated experimentally on data recorded onboard a flapping-wing robot. Our results suggest that event cameras are the most suitable sensors for ornithopters. Nevertheless, the results also reveal open challenges for event-based vision onboard flapping-wing robots.

Experimental method for perching flapping-wing aerial robots

Sep 04, 2023

Abstract: In this work, we present an experimental setup and guide to enable the perching of large flapping-wing robots. The combination of forward flight, limited payload, and flight oscillations imposes challenging conditions for localized perching. The described method details the different operations that are performed concurrently within the 4-second perching flight. We validate this experiment with a 700 g ornithopter and demonstrate the first autonomous perching flight of a flapping-wing robot on a branch. This work paves the way for the application of flapping-wing robots to long-range missions, bird observation, manipulation, and outdoor flight.

Experimental Energy Consumption Analysis of a Flapping-Wing Robot

Jun 01, 2023

Abstract: One of the motivations for exploring flapping-wing aerial robotic systems is to reduce energy consumption compared with conventional unmanned aerial systems while maintaining manoeuvrability. A Flapping Wing Flying Robot (FWFR) can glide in favourable wind conditions, decreasing energy consumption significantly. It is also necessary to investigate the power consumption of the individual components of the flapping-wing robot. In this work, two sets of FWFR components are analyzed in terms of power consumption: a) motor/electronics components and b) a vision system for monitoring the environment during flight. A measurement device is used to record the power drawn by the motors during the launching and ascending phases of the flight and during cruising flight around the desired height. Additionally, event cameras and stereo vision systems are analyzed in terms of energy consumption. The results provide a first step towards decreasing battery usage and, consequently, extending flight time.
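Turning a logged power trace (launch, ascent, cruise) into energy per phase, and then into a rough flight-time figure, is a matter of numerical integration. The sketch below assumes timestamped power samples in watts from a hypothetical logger and uses the trapezoidal rule. The sample values are invented for illustration and are not the paper's measurements. The endurance estimate is idealised and ignores discharge limits and voltage sag.

```python
from typing import Sequence


def energy_joules(times_s: Sequence[float], power_w: Sequence[float]) -> float:
    """Integrate sampled power (W) over time (s) with the trapezoidal rule."""
    if len(times_s) != len(power_w) or len(times_s) < 2:
        raise ValueError("need at least two (time, power) samples")
    energy = 0.0
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        if dt < 0:
            raise ValueError("timestamps must be non-decreasing")
        energy += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return energy


def mean_power_w(times_s: Sequence[float], power_w: Sequence[float]) -> float:
    """Average power over the logged interval: energy divided by duration."""
    duration = times_s[-1] - times_s[0]
    if duration <= 0:
        raise ValueError("log must span a positive duration")
    return energy_joules(times_s, power_w) / duration


def endurance_s(battery_wh: float, avg_power_w: float) -> float:
    """Idealised flight time: usable battery energy (Wh -> J) over average power."""
    return battery_wh * 3600.0 / avg_power_w


# Hypothetical cruise-phase log sampled at 1 Hz (not data from the paper).
t = [0.0, 1.0, 2.0, 3.0, 4.0]
p = [20.0, 22.0, 20.0, 22.0, 20.0]
print(energy_joules(t, p), mean_power_w(t, p))  # 84.0 21.0
```

Running the same functions on each phase's slice of the log gives a per-phase breakdown. For example, `endurance_s(10.0, 20.0)` returns 1800 s for a hypothetical 10 Wh battery at a constant 20 W draw.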

A Multi-Layer Software Architecture for Aerial Cognitive Multi-Robot Systems in Power Line Inspection Tasks

Feb 09, 2023

Abstract: This paper presents a multi-layer software architecture to perform cooperative missions with a fleet of quadrotors that support electrical power line inspection operations. The proposed software framework guarantees compliance with safety requirements between drones and human workers while ensuring that the mission is carried out successfully. In addition, cognitive capabilities are integrated into the multi-vehicle system to respond to unforeseen events and external disturbances. The feasibility and effectiveness of the proposed architecture are demonstrated through realistic simulations.

* 6 pages, 7 figures, conference

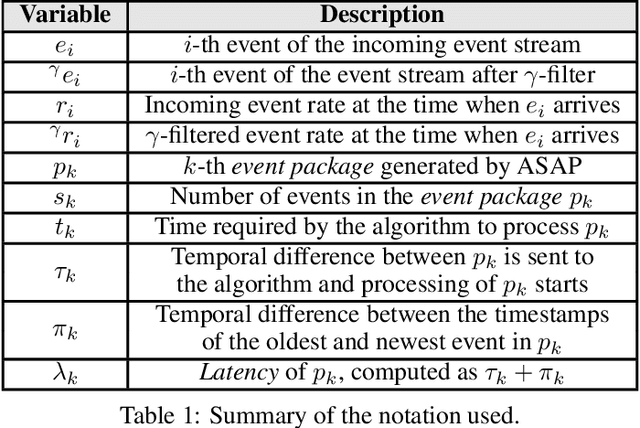

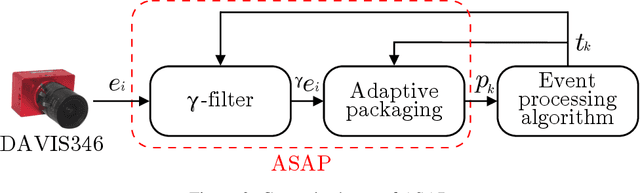

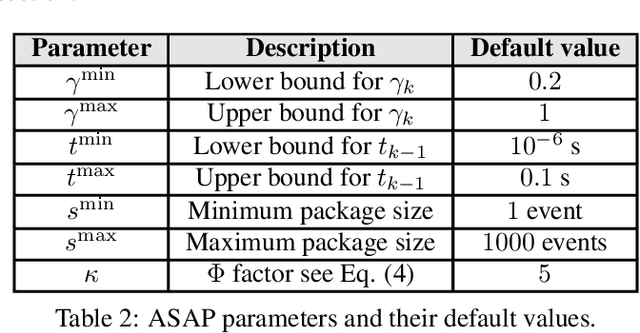

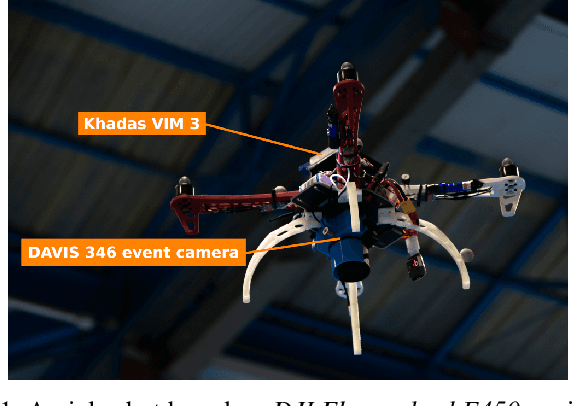

ASAP: Adaptive Transmission Scheme for Online Processing of Event-based Algorithms

Sep 18, 2022

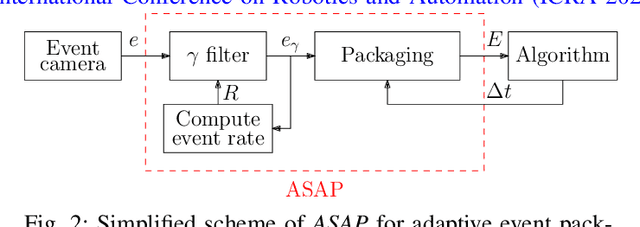

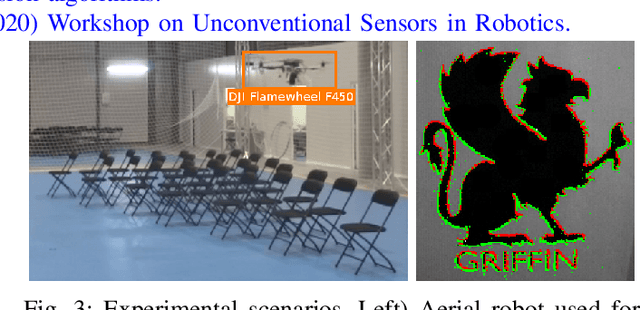

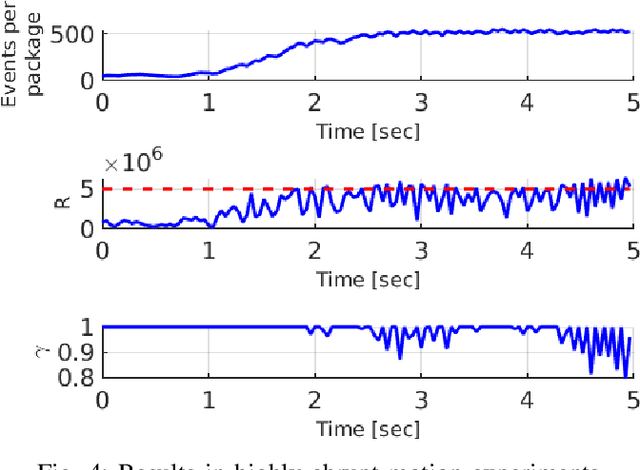

Abstract: Online event-based perception techniques on board robots navigating in complex, unstructured, and dynamic environments can suffer from unpredictable changes in incoming event rates and processing times, which can cause computational overflow or loss of responsiveness. This paper presents ASAP, a novel event-handling framework that dynamically adapts the transmission of events to the processing algorithm, maintaining system responsiveness and preventing overflows. ASAP is composed of two adaptive mechanisms. The first prevents event-processing overflows by discarding an adaptive percentage of the incoming events. The second dynamically adapts the size of the event packages to reduce the delay between event generation and processing. ASAP has guaranteed convergence and can accommodate different processing algorithms. It has been validated on board a quadrotor and an ornithopter robot in challenging conditions.
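The abstract says the first mechanism discards an adaptive percentage of incoming events but does not give the update law. The sketch below uses a hypothetical rule in its place. It estimates how many events the algorithm can process per window from a measured per-event cost, sets the target keep ratio to min(1, capacity / incoming), smooths that ratio with a first-order update of gain `alpha`, and decimates deterministically. The class, its parameters, and the rule are illustrative assumptions, not ASAP's published control law, and the sketch makes no claim about ASAP's convergence guarantee.

```python
from typing import Iterable, List, TypeVar

T = TypeVar("T")


class AdaptiveEventDropper:
    """Hypothetical adaptive discard stage (not ASAP's published control law).

    keep_ratio tracks min(1, capacity / incoming), where capacity is the number
    of events the algorithm can process in one window at the measured cost.
    """

    def __init__(self, alpha: float = 0.5) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha       # smoothing gain of the ratio update
        self.keep_ratio = 1.0    # fraction of incoming events forwarded
        self._acc = 0.0          # accumulator for deterministic decimation

    def update(self, incoming_events: int, window_s: float,
               proc_time_per_event_s: float) -> float:
        if window_s <= 0 or proc_time_per_event_s <= 0:
            raise ValueError("window and per-event processing time must be positive")
        if incoming_events <= 0:
            target = 1.0         # nothing to shed
        else:
            capacity = window_s / proc_time_per_event_s
            target = min(1.0, capacity / incoming_events)
        # First-order update: with a constant target, the error shrinks by a
        # factor (1 - alpha) per call.
        self.keep_ratio += self.alpha * (target - self.keep_ratio)
        return self.keep_ratio

    def filter(self, events: Iterable[T]) -> List[T]:
        """Forward roughly keep_ratio of the events, evenly spread (no randomness)."""
        kept: List[T] = []
        for ev in events:
            self._acc += self.keep_ratio
            if self._acc >= 1.0:
                self._acc -= 1.0
                kept.append(ev)
        return kept


# Hypothetical numbers: 8 events arrived in a 1 s window, each costs 0.25 s.
dropper = AdaptiveEventDropper(alpha=1.0)
dropper.update(incoming_events=8, window_s=1.0, proc_time_per_event_s=0.25)
print(dropper.keep_ratio, dropper.filter(range(10)))  # 0.5 [1, 3, 5, 7, 9]
```

Deterministic decimation spreads the kept events evenly over the stream. A random drop with probability `1 - keep_ratio` would be an equally simple alternative.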

ASAP: Adaptive Scheme for Asynchronous Processing of Event-based Vision Algorithms

Sep 18, 2022

Abstract: Event cameras capture pixel-level illumination changes with very high temporal resolution and dynamic range. They have received increasing research interest due to their robustness to lighting conditions and motion blur. Two main approaches exist in the literature for feeding event-based processing algorithms: grouping the triggered events into event packages, or sending them one by one as single events. These approaches suffer from either processing overflow or lack of responsiveness. Processing overflow is caused by high event generation rates, when the algorithm cannot process all the events in real time. Conversely, lack of responsiveness occurs at low event generation rates, when event packages are sent at too low a frequency. This paper presents ASAP, an adaptive scheme that manages the event stream through variable-size packages that adapt to the event package processing times. The experimental results show that ASAP can feed an asynchronous event-by-event clustering algorithm in a responsive and efficient manner while preventing overflow.
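The abstract describes variable-size packages that adapt to processing times but does not give the sizing rule. One simple way to obtain that behavior, shown here as a hypothetical sketch rather than ASAP's actual algorithm, is to hand the processor every buffered event, capped at a hypothetical `max_size`, each time it becomes free. At low event rates, packages stay small and are sent immediately, which keeps the system responsive. At high rates, packages grow so that the per-package overhead is amortized. The cost model `overhead_s + n * per_event_s` is an assumption.

```python
from collections import deque
from typing import Deque, List, Sequence


class VariableSizePackager:
    """Hypothetical packager: when the consumer is free, hand it every buffered
    event (up to max_size), so the package size follows the backlog."""

    def __init__(self, max_size: int) -> None:
        if max_size < 1:
            raise ValueError("max_size must be >= 1")
        self.max_size = max_size
        self._buffer: Deque[float] = deque()

    def push(self, event: float) -> None:
        self._buffer.append(event)

    @property
    def backlog(self) -> int:
        return len(self._buffer)

    def next_package(self) -> List[float]:
        n = min(len(self._buffer), self.max_size)
        return [self._buffer.popleft() for _ in range(n)]


def simulate(arrivals_s: Sequence[float], overhead_s: float,
             per_event_s: float, max_size: int) -> List[int]:
    """Return the size of each package for sorted arrival times, assuming a
    package of n events takes overhead_s + n * per_event_s to process."""
    packager = VariableSizePackager(max_size)
    sizes: List[int] = []
    i, t = 0, 0.0
    while i < len(arrivals_s) or packager.backlog:
        # Enqueue every event that has arrived by the current time.
        while i < len(arrivals_s) and arrivals_s[i] <= t:
            packager.push(arrivals_s[i])
            i += 1
        if packager.backlog == 0:  # idle: jump to the next arrival
            t = arrivals_s[i]
            continue
        package = packager.next_package()
        sizes.append(len(package))
        t += overhead_s + per_event_s * len(package)  # consumer busy time
    return sizes


# Low rate: one event per second, so each event is sent on its own immediately.
print(simulate([0.0, 1.0, 2.0, 3.0, 4.0], 0.1, 0.01, 1000))  # [1, 1, 1, 1, 1]
# High rate: 1 event/ms; package size grows until processing keeps pace.
print(simulate([k * 0.001 for k in range(100)], 0.01, 0.0005, 1000))
```

With a small `max_size` or a slow algorithm, the backlog can still grow without bound. That is the overflow case an additional stage, such as adaptive event discarding, would need to handle.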
