Daniel Hert

MRS Drone: A Modular Platform for Real-World Deployment of Aerial Multi-Robot Systems

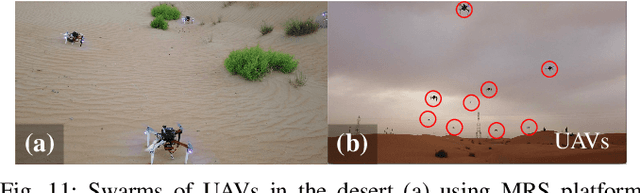

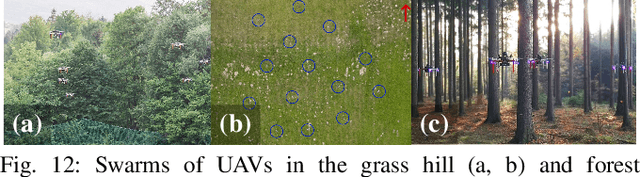

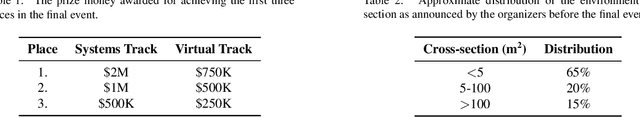

Jun 12, 2023

Abstract: This paper presents a modular autonomous Unmanned Aerial Vehicle (UAV) platform called the Multi-robot Systems (MRS) Drone that can be used in a wide range of indoor and outdoor applications. The MRS Drone features unique modularity with respect to changes in actuators, frames, and sensory configuration. As the name suggests, the platform is specially tailored for deployment within an MRS group. The MRS Drone contributes to the state of the art of UAV platforms by allowing smooth real-world deployment of multiple aerial robots, as well as by outperforming other platforms with its modularity. For real-world multi-robot deployment in various applications, the platform is easy to both assemble and modify. Moreover, it is accompanied by a realistic simulator to enable safe pre-flight testing and a smooth transition to complex real-world experiments. In this manuscript, we present mechanical and electrical designs, software architecture, and technical specifications to build a fully autonomous multi-UAV system. Finally, we demonstrate the full capabilities and the unique modularity of the MRS Drone in various real-world applications that required a diverse range of platform configurations.

MRS Modular UAV Hardware Platforms for Supporting Research in Real-World Outdoor and Indoor Environments

Feb 09, 2023

Abstract: This paper presents a family of autonomous Unmanned Aerial Vehicle (UAV) platforms designed for a diverse range of indoor and outdoor applications. The proposed UAV design is highly modular in terms of the actuators, sensor configurations, and even UAV frames used. This makes it possible to achieve, with minimal effort, a proper experimental setup for single-robot as well as multi-robot scenarios. The presented platforms are intended to facilitate the transition from simulations and simplified laboratory experiments to the deployment of aerial robots in uncertain and hard-to-model real-world conditions. We present mechanical designs, electric configurations, and dynamic models of the UAVs, followed by numerous recommendations and technical details required for building such a fully autonomous UAV system for experimental verification of scientific achievements. To show the strength and high variability of the proposed system, we present results of tens of completely different real-robot experiments in various environments using distinct actuator and sensory configurations.

* 10 pages, 17 figures, conference

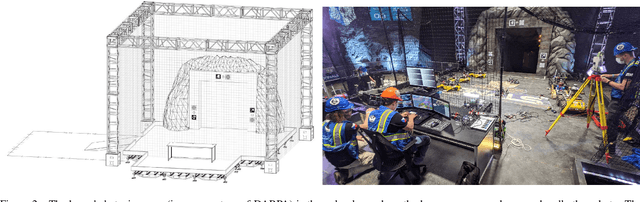

UAVs Beneath the Surface: Cooperative Autonomy for Subterranean Search and Rescue in DARPA SubT

Jun 16, 2022

Abstract: This paper presents a novel approach for autonomous cooperating UAVs in search and rescue operations in subterranean domains with complex topology. The proposed system was ranked second in the Virtual Track of the DARPA SubT Finals as part of the team CTU-CRAS-NORLAB. In contrast to the winning solution that was developed specifically for the Virtual Track, the proposed solution also proved to be a robust system for deployment onboard physical UAVs flying in the extremely harsh and confined environment of the real-world competition. The proposed approach enables fully autonomous and decentralized deployment of a UAV team with seamless simulation-to-world transfer, and proves its advantage over less mobile UGV teams in the flyable space of diverse environments. The main contributions of the paper lie in the mapping and navigation pipelines. The mapping approach employs novel map representations -- SphereMap for efficient risk-aware long-distance planning, FacetMap for surface coverage, and the compressed topological-volumetric LTVMap for allowing multi-robot cooperation under low-bandwidth communication. These representations are used in navigation together with novel methods for visibility-constrained informed search in a general 3D environment with no assumptions about the environment structure, while balancing deep exploration with sensor-coverage exploitation. The proposed solution also includes a visual-perception pipeline for on-board detection and localization of objects of interest in four RGB streams at 5 Hz each without a dedicated GPU. Apart from participation in the DARPA SubT, the performance of the UAV system is supported by extensive experimental verification in diverse environments with both qualitative and quantitative evaluation.

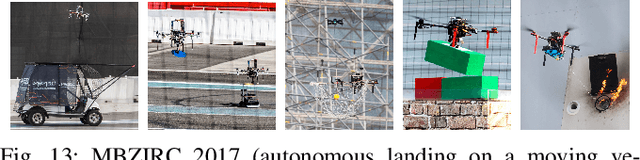

Autonomous Cooperative Wall Building by a Team of Unmanned Aerial Vehicles in the MBZIRC 2020 Competition

Dec 10, 2020

Abstract: This paper presents a system for autonomous cooperative wall building with a team of Unmanned Aerial Vehicles (UAVs). The system was developed for Challenge 2 of the Mohamed Bin Zayed International Robotics Challenge (MBZIRC) 2020. The wall-building scenario of Challenge 2 featured an initial stack of bricks and a wall structure where the individual bricks had to be placed by a team of three UAVs. The objective of the task was to maximize the points collected for placing the bricks within the restricted construction time while following the prescribed wall pattern. The proposed approach uses initial scanning to find the a priori unknown locations of the bricks and the wall structure. Each UAV is then assigned to individual bricks and wall placing locations, and then performs grasping and placement using onboard resources only. The developed system consists of methods for scanning a given area, RGB-D detection of bricks and wall placement locations, precise grasping and placing of bricks, and coordination of multiple UAVs. The paper describes the overall system, individual components, experimental verification in demanding outdoor conditions, the achieved results in the competition, and lessons learned. The presented CTU-UPenn-NYU approach achieved the overall best performance among all participants and won the MBZIRC competition by collecting the highest number of points through the correct placement of a high number of bricks.

The MRS UAV System: Pushing the Frontiers of Reproducible Research, Real-world Deployment, and Education with Autonomous Unmanned Aerial Vehicles

Sep 01, 2020

Abstract: We present a multirotor Unmanned Aerial Vehicle (UAV) control and estimation system for supporting replicable research through realistic simulations and real-world experiments. We propose a unique multi-frame localization paradigm for estimating the states of a UAV in various frames of reference using multiple sensors simultaneously. The system enables complex missions in GNSS and GNSS-denied environments, including outdoor-indoor transitions and the execution of redundant estimators for backing up unreliable localization sources. Two feedback control designs are presented: one for precise and aggressive maneuvers, and the other for stable and smooth flight with a noisy state estimate. The proposed control and estimation pipeline is constructed without using the Euler/Tait-Bryan angle representation of orientation in 3D. Instead, we rely on rotation matrices and a novel heading-based convention to represent the one free rotational degree-of-freedom in 3D of a standard multirotor helicopter. We provide an actively maintained and well-documented open-source implementation, including realistic simulation of UAV, sensors, and localization systems. The proposed system is the product of years of applied research on multi-robot systems, aerial swarms, aerial manipulation, motion planning, and remote sensing. All our results have been supported by real-world system deployment that shaped the system into the form presented here. In addition, the system was utilized during the participation of our team from the CTU in Prague in the prestigious MBZIRC 2017 and 2020 robotics competitions, and also in the DARPA SubT challenge. Each time, our team was able to secure top places among the best competitors from all over the world. On each occasion, the challenges have motivated the team to improve the system and to gain a great amount of high-quality experience within tight deadlines.
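The heading-based convention mentioned in this abstract can be illustrated with a minimal sketch. The abstract does not give the definition, so the code below assumes one plausible reading: the heading is the azimuth of the body x-axis projected onto the world horizontal plane, read directly from the rotation matrix. The function names `rot_z`, `rot_y`, `matmul`, and `heading_from_rotation` are hypothetical and are not taken from the MRS UAV System code base.

```python
import math

def rot_z(angle):
    """Rotation matrix about the world z-axis (row-major nested lists)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rot_y(angle):
    """Rotation matrix about the y-axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def matmul(a, b):
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def heading_from_rotation(R):
    """Assumed definition: azimuth of the body x-axis (first column of R)
    projected onto the world xy-plane. No Euler angles are extracted."""
    bx_x, bx_y = R[0][0], R[1][0]
    if math.hypot(bx_x, bx_y) < 1e-9:
        # The body x-axis points straight up or down, so its projection vanishes.
        raise ValueError("heading is undefined when the body x-axis is vertical")
    return math.atan2(bx_y, bx_x)

# A vehicle turned by 0.7 rad about z and tilted by 0.3 rad about y.
R = matmul(rot_z(0.7), rot_y(0.3))
heading = heading_from_rotation(R)
```

Under this assumed definition, the heading depends only on where the body x-axis points, so tilting the vehicle does not change it as long as that axis is not vertical.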
