Matouš Vrba

Bio-inspired visual relative localization for large swarms of UAVs

Dec 03, 2024

Abstract:We propose a new approach to visual perception for relative localization of agents within large-scale swarms of UAVs. Inspired by biological perception utilized by schools of sardines, swarms of bees, and other large groups of animals capable of moving in a decentralized yet coherent manner, our method does not rely on detecting individual neighbors by each agent and estimating their relative position, but rather we propose to regress a neighbor density over distance. This allows for a more accurate distance estimation as well as better scalability with respect to the number of neighbors. Additionally, a novel swarm control algorithm is proposed to make it compatible with the new relative localization method. We provide a thorough evaluation of the presented methods and demonstrate that the regressing approach to distance estimation is more robust to varying relative pose of the targets and that it is suitable to be used as the main source of relative localization for swarm stabilization.

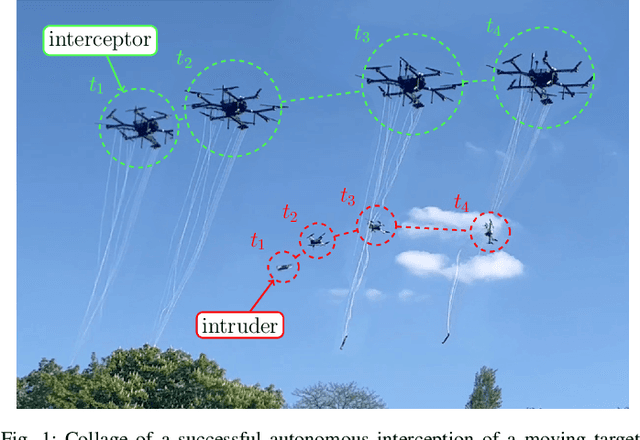

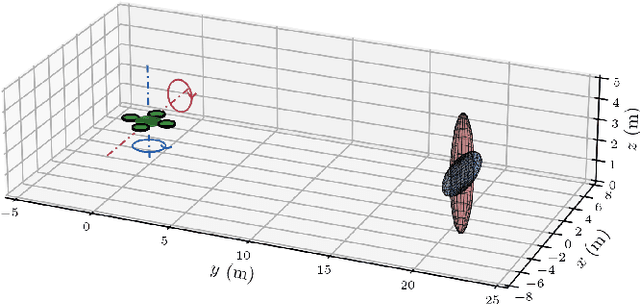

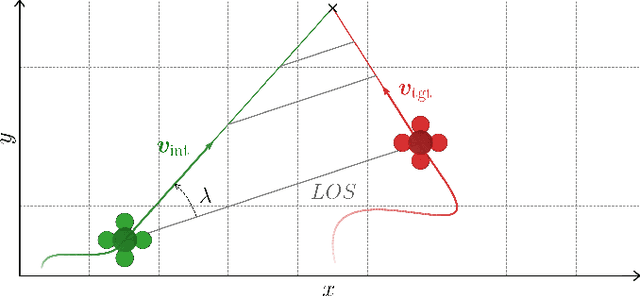

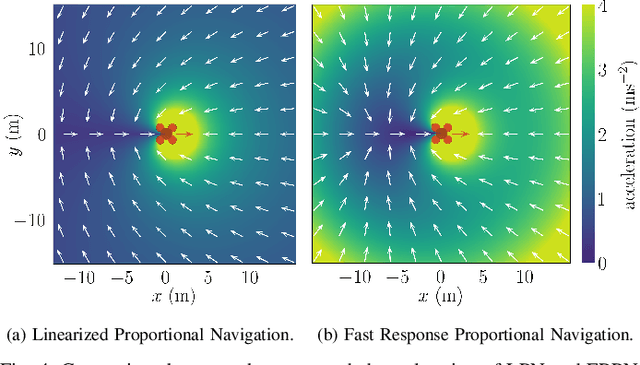

Towards Safe Mid-Air Drone Interception: Strategies for Tracking & Capture

May 22, 2024

Abstract:A unique approach for the mid-air autonomous aerial interception of a non-cooperating UAV by a flying robot equipped with a net is presented in this paper. A novel interception guidance method dubbed EPN is proposed, designed to catch agile maneuvering targets while relying on onboard state estimation and tracking. The proposed method is compared with state-of-the-art approaches in simulations using 100 different trajectories of the target with varying complexity comprising almost 14 hours of flight data, and EPN demonstrates the shortest response time and the highest number of interceptions, which are key parameters of agile interception. To enable robust transfer from theory and simulation to a real-world implementation, we aim to avoid overfitting to specific assumptions about the target, and to tackle interception of a target following an unknown general trajectory. Furthermore, we identify several often overlooked problems related to tracking and estimation of the target's state that can have a significant influence on the overall performance of the system. We propose the use of a novel state estimation filter based on the Interacting Multiple Model (IMM) filter and a new measurement model. Simulated experiments show that the proposed solution provides significant improvements in estimation accuracy over the commonly employed Kalman Filter (KF) approaches when considering general trajectories. Based on these results, we employ the proposed filtering and guidance methods to implement a complete autonomous interception system, which is thoroughly evaluated in realistic simulations and tested in real-world experiments with a maneuvering target going far beyond the performance of any state-of-the-art solution.

Gesture-Controlled Aerial Robot Formation for Human-Swarm Interaction in Safety Monitoring Applications

Mar 22, 2024

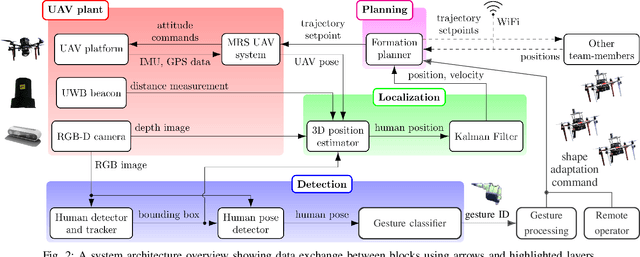

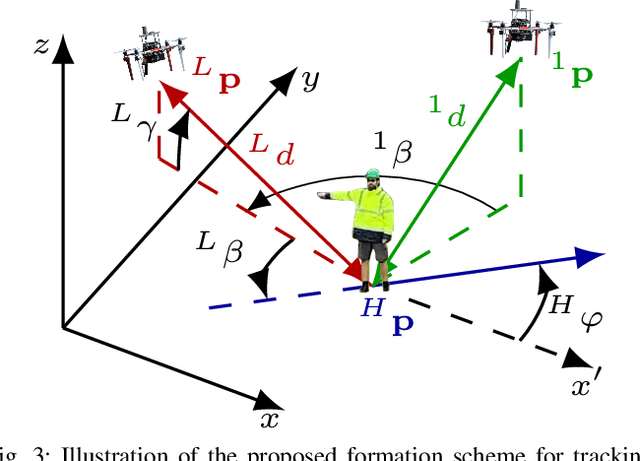

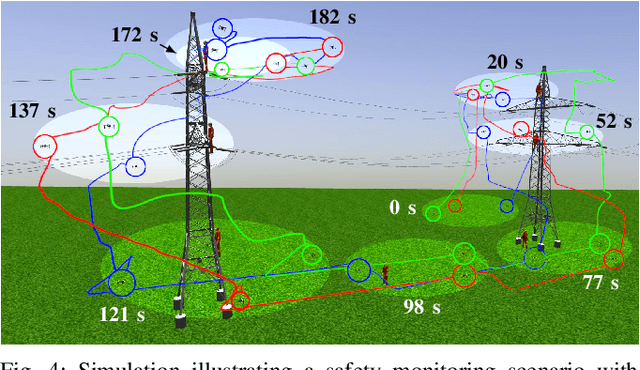

Abstract:This paper presents a formation control approach for contactless gesture-based Human-Swarm Interaction (HSI) between a team of multi-rotor Unmanned Aerial Vehicles (UAVs) and a human worker. The approach is intended for monitoring the safety of human workers, especially those working at heights. In the proposed dynamic formation scheme, one UAV acts as the leader of the formation and is equipped with sensors for human worker detection and gesture recognition. The follower UAVs maintain a predetermined formation relative to the worker's position, thereby providing additional perspectives of the monitored scene. Hand gestures allow the human worker to specify movements and action commands for the UAV team and initiate other mission-related commands without the need for an additional communication channel or specific markers. Together with a novel unified human detection and tracking algorithm, human pose estimation approach and gesture detection pipeline, the proposed approach forms a first instance of an HSI system incorporating all these modules onboard real-world UAVs. Simulations and field experiments with three UAVs and a human worker in a mock-up scenario showcase the effectiveness and responsiveness of the proposed approach.

Drones Guiding Drones: Cooperative Navigation of a Less-Equipped Micro Aerial Vehicle in Cluttered Environments

Dec 15, 2023

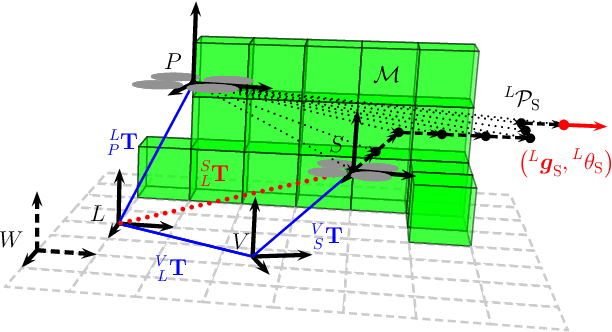

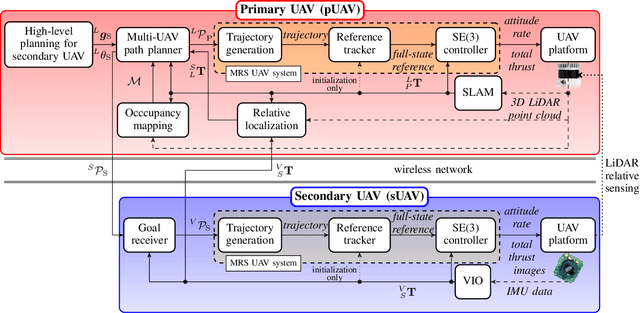

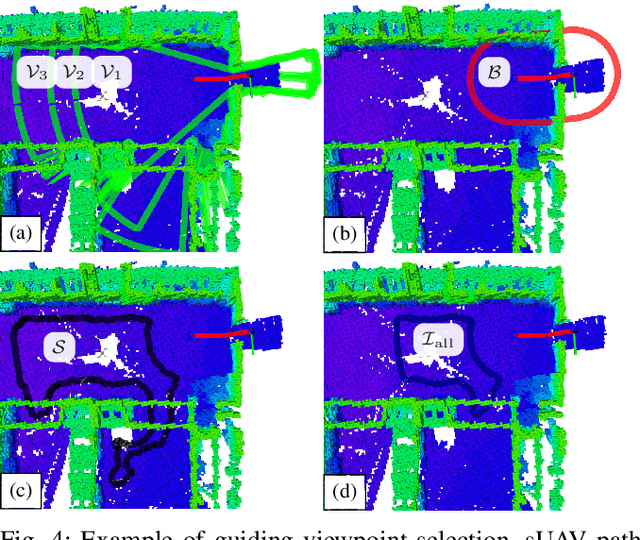

Abstract:Reliable deployment of Unmanned Aerial Vehicles (UAVs) in cluttered unknown environments requires accurate sensors for obstacle avoidance. Such a requirement limits the usage of cheap and micro-scale vehicles with constrained payload capacity if industrial-grade reliability and precision are required. This paper investigates the possibility of offloading the necessity to carry heavy and expensive obstacle sensors to another member of the UAV team while preserving the desired obstacle avoidance capability. A novel cooperative guidance framework offloading the obstacle sensing requirements from a minimalistic secondary UAV to a superior primary UAV is proposed. The primary UAV constructs a dense occupancy map of the environment and plans collision-free paths for both UAVs to ensure reaching the desired secondary UAV's goal. The primary UAV guides the secondary UAV to follow the planned path while tracking the UAV using Light Detection and Ranging (LiDAR)-based relative localization. The proposed approach was verified in real-world experiments with a heterogeneous team of a 3D LiDAR-equipped primary UAV and a camera-equipped secondary UAV moving autonomously through unknown cluttered Global Navigation Satellite System (GNSS)-denied environments with the proposed framework running completely on board the UAVs.

Fusion of Visual-Inertial Odometry with LiDAR Relative Localization for Cooperative Guidance of a Micro-Scale Aerial Vehicle

Jun 30, 2023

Abstract:A novel relative localization approach for cooperative guidance of a micro-scale Unmanned Aerial Vehicle (UAV) fusing Visual-Inertial Odometry (VIO) with Light Detection and Ranging (LiDAR) is proposed in this paper. LiDAR-based localization is accurate and robust to challenging environmental conditions, but 3D LiDARs are relatively heavy and require large UAV platforms. Visual cameras are cheap and lightweight. However, visual-based self-localization methods exhibit lower accuracy and can suffer from significant drift with respect to the global reference frame. We focus on cooperative navigation in a heterogeneous team of a primary LiDAR-equipped UAV and secondary camera-equipped UAV. We propose a novel cooperative approach combining LiDAR relative localization data with VIO output on board the primary UAV to obtain an accurate pose of the secondary UAV. The pose estimate is used to guide the secondary UAV along trajectories defined in the primary UAV reference frame. The experimental evaluation has shown the superior accuracy of our method to the raw VIO output and demonstrated its capability to guide the secondary UAV along desired trajectories while mitigating VIO drift.

Distributed formation-enforcing control for UAVs robust to observation noise in relative pose measurements

Apr 06, 2023

Abstract:A technique that allows a formation-enforcing control (FEC) derived from graph rigidity theory to interface with a realistic relative localization system is proposed in this paper. Recent research in sensor-based multi-robot control has given rise to multiple modalities of mutual relative localization systems. In particular, vision-based relative localization has reached the stage where it can be carried onboard lightweight UAVs in order to retrieve the relative positions and relative orientations of cooperating units. A separate stream of development spawned distributed formation-enforcing control which can lead individual robots into a desired formation using relative localization of their neighbors. These two fields naturally complement each other by achieving real-world flights of UAVs in formation without the need for absolute localization in the world. However, real relative localization systems are, without exception, burdened by non-negligible sensory noise, which is typically not fully taken into account in formation-enforcing control algorithms. Such noise can lead to rapid changes in velocity, which further interferes with visual localization. Our approach provides a solution to these challenges, enabling practical deployment of FEC under realistic conditions, as we demonstrated in real-world experiments.

On Onboard LiDAR-based Flying Object Detection

Mar 09, 2023

Abstract:A new robust and accurate approach for the detection and localization of flying objects with the purpose of highly dynamic aerial interception and agile multi-robot interaction is presented in this paper. The approach is proposed for use onboard an autonomous aerial vehicle equipped with a 3D LiDAR sensor providing input data for the algorithm. It relies on a novel 3D occupancy voxel mapping method for the target detection and a cluster-based multiple hypothesis tracker to compensate for uncertainty in the sensory data. When compared to state-of-the-art methods of onboard detection of other flying objects, the presented approach provides superior localization accuracy and robustness to different environments and appearance changes of the target, as well as a greater detection range. Furthermore, in combination with the proposed multi-target tracker, sporadic false positives are suppressed, state estimation of the target is provided, and the detection latency is negligible. This makes the detector suitable for tasks of agile multi-robot interaction, such as autonomous aerial interception or formation control, where precise, robust, and fast relative localization of other robots is crucial. We demonstrate the practical usability and performance of the system in simulated and real-world experiments.
