Michal Pliska

Towards Safe Mid-Air Drone Interception: Strategies for Tracking & Capture

May 22, 2024

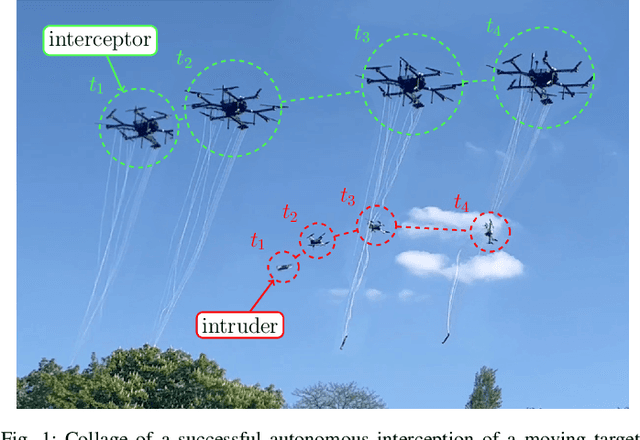

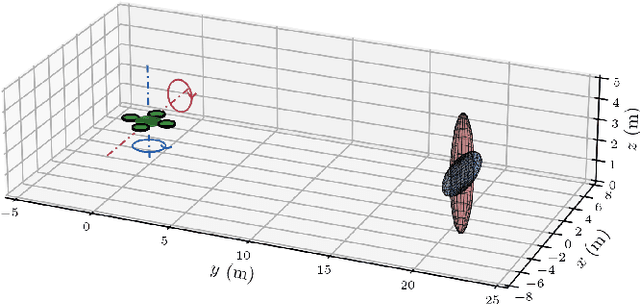

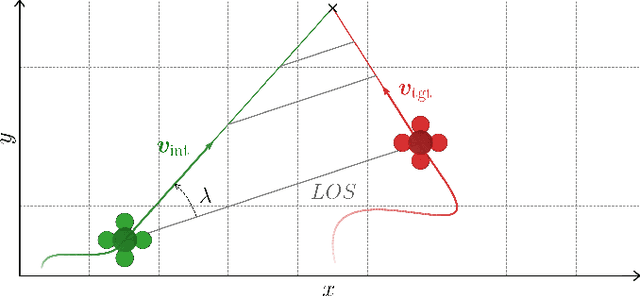

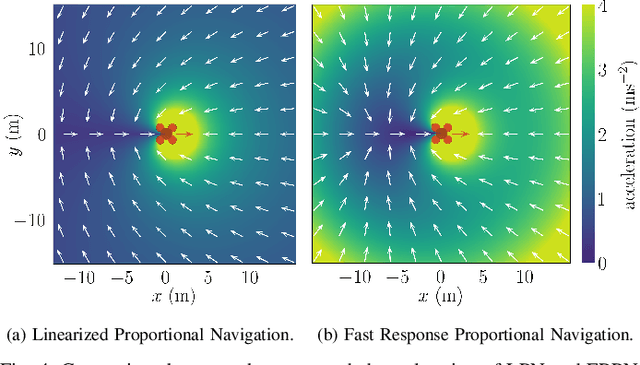

Abstract: A unique approach for the mid-air autonomous aerial interception of a non-cooperating UAV by a flying robot equipped with a net is presented in this paper. A novel interception guidance method dubbed EPN is proposed, designed to catch agile maneuvering targets while relying on onboard state estimation and tracking. The proposed method is compared with state-of-the-art approaches in simulations using 100 different target trajectories of varying complexity, comprising almost 14 hours of flight data. EPN demonstrates the shortest response time and the highest number of interceptions, which are key parameters of agile interception. To enable robust transfer from theory and simulation to a real-world implementation, we aim to avoid overfitting to specific assumptions about the target, and to tackle interception of a target following an unknown general trajectory. Furthermore, we identify several often overlooked problems related to tracking and estimation of the target's state that can have a significant influence on the overall performance of the system. We propose the use of a novel state estimation filter based on the IMM filter and a new measurement model. Simulated experiments show that the proposed solution provides significant improvements in estimation accuracy over the commonly employed KF approaches when considering general trajectories. Based on these results, we employ the proposed filtering and guidance methods to implement a complete autonomous interception system, which is thoroughly evaluated in realistic simulations and tested in real-world experiments with a maneuvering target, going far beyond the performance of any state-of-the-art solution.

Single-grasp deformable object discrimination: the effect of gripper morphology, sensing modalities, and action parameters

Apr 13, 2022

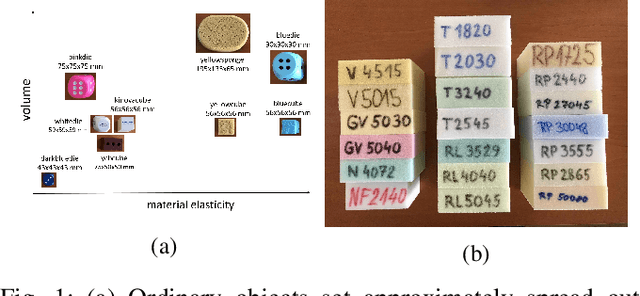

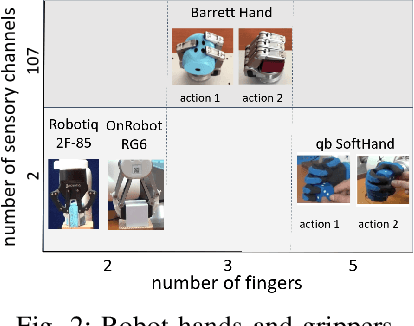

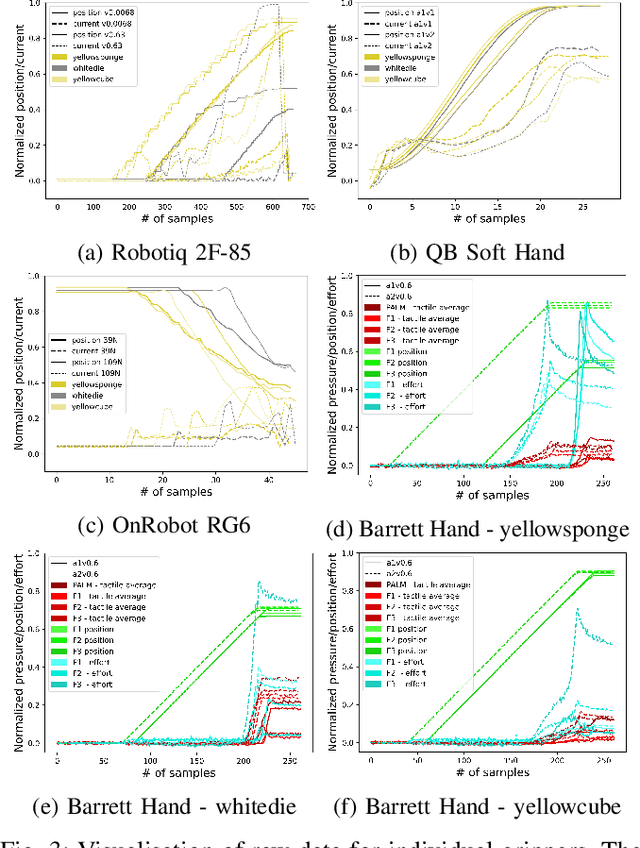

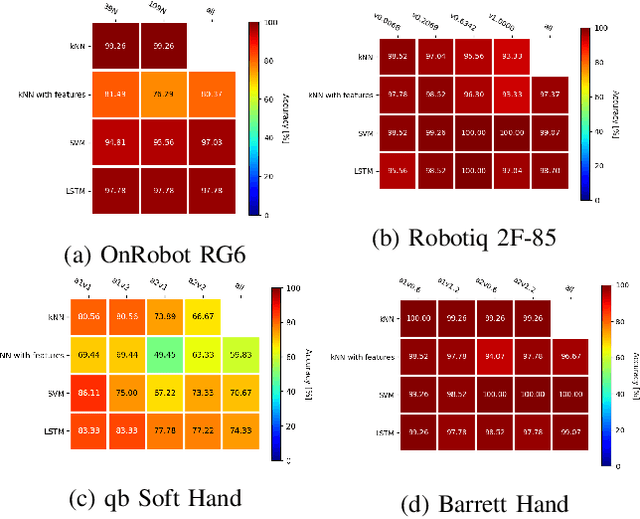

Abstract: We studied the discrimination of deformable objects by grasping them using 4 different robot hands / grippers: Barrett Hand (3 fingers with adjustable configuration, 96 tactile, 8 position, 3 torque sensors), qb SoftHand (5 fingers, 1 motor, position and current feedback), and two industrial-type parallel jaw grippers with position and effort feedback (Robotiq 2F-85 and OnRobot RG6). A set of 9 ordinary objects differing in size and stiffness and another highly challenging set of 20 polyurethane foams differing in material properties only were used. We systematically compare the grippers' performance, together with the effects of: (1) type of classifier (k-NN, SVM, LSTM) operating on raw time series or on features, (2) action parameters (grasping configuration and speed of squeezing), (3) contribution of sensory modalities. Classification results are complemented by visualization of the data using PCA. We found: (i) all the grippers but the qb SoftHand could reliably distinguish the ordinary objects set; (ii) Barrett Hand reached around 95% accuracy on the foams; OnRobot RG6 around 75% and Robotiq 2F-85 around 70%; (iii) across all grippers, SVM over features and LSTM on raw time series performed best; (iv) faster compression speeds degrade classification performance; (v) transfer learning between compression speeds worked well for the Barrett Hand only; transfer between grasping configurations is limited; (vi) ablation experiments provided intriguing insights: sometimes a single sensory channel suffices for discrimination. Overall, the Barrett Hand, as a complex and expensive device with rich sensory feedback, provided the best results, but uncalibrated parallel jaw grippers without tactile sensors can have sufficient performance for single-grasp object discrimination based on position and effort data only. Transfer learning between the different robot hands remains a challenge.
