Petr Štěpán

Drones Guiding Drones: Cooperative Navigation of a Less-Equipped Micro Aerial Vehicle in Cluttered Environments

Dec 15, 2023

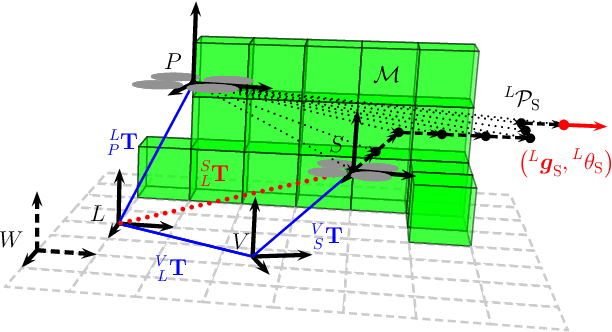

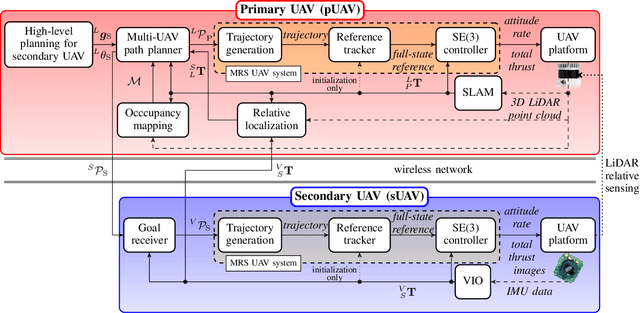

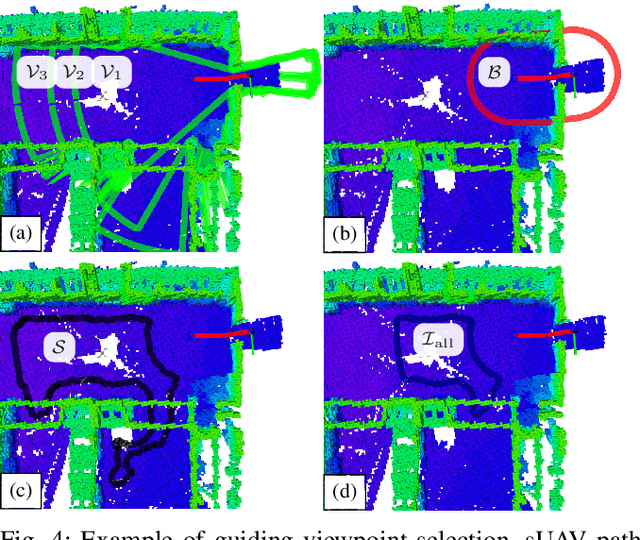

Abstract: Reliable deployment of Unmanned Aerial Vehicles (UAVs) in cluttered unknown environments requires accurate sensors for obstacle avoidance. Such a requirement limits the usage of cheap and micro-scale vehicles with constrained payload capacity if industrial-grade reliability and precision are required. This paper investigates the possibility of offloading the necessity to carry heavy and expensive obstacle sensors to another member of the UAV team while preserving the desired obstacle avoidance capability. A novel cooperative guidance framework offloading the obstacle sensing requirements from a minimalistic secondary UAV to a superior primary UAV is proposed. The primary UAV constructs a dense occupancy map of the environment and plans collision-free paths for both UAVs to ensure that the secondary UAV reaches its desired goal. The primary UAV guides the secondary UAV along the planned path while tracking it using Light Detection and Ranging (LiDAR)-based relative localization. The proposed approach was verified in real-world experiments with a heterogeneous team of a 3D LiDAR-equipped primary UAV and a camera-equipped secondary UAV moving autonomously through unknown cluttered Global Navigation Satellite System (GNSS)-denied environments with the proposed framework running completely on board the UAVs.

Fusion of Visual-Inertial Odometry with LiDAR Relative Localization for Cooperative Guidance of a Micro-Scale Aerial Vehicle

Jun 30, 2023

Abstract: A novel relative localization approach for cooperative guidance of a micro-scale Unmanned Aerial Vehicle (UAV) fusing Visual-Inertial Odometry (VIO) with Light Detection and Ranging (LiDAR) is proposed in this paper. LiDAR-based localization is accurate and robust to challenging environmental conditions, but 3D LiDARs are relatively heavy and require large UAV platforms. Visual cameras are cheap and lightweight. However, visual-based self-localization methods exhibit lower accuracy and can suffer from significant drift with respect to the global reference frame. We focus on cooperative navigation in a heterogeneous team of a primary LiDAR-equipped UAV and a secondary camera-equipped UAV. We propose a novel cooperative approach combining LiDAR relative localization data with VIO output on board the primary UAV to obtain an accurate pose of the secondary UAV. The pose estimate is used to guide the secondary UAV along trajectories defined in the primary UAV reference frame. The experimental evaluation has shown that our method is more accurate than the raw VIO output and demonstrated its capability to guide the secondary UAV along desired trajectories while mitigating VIO drift.

Autonomous Aerial Filming With Distributed Lighting by a Team of Unmanned Aerial Vehicles

Mar 02, 2023

Abstract: This letter describes a method for autonomous aerial cinematography with distributed lighting by a team of unmanned aerial vehicles (UAVs). Although camera-carrying multi-rotor helicopters have become commonplace in cinematography, their usage is limited to scenarios with sufficient natural light or with lighting provided by static artificial lights. We propose to use a formation of unmanned aerial vehicles as a tool for filming a target under illumination from various directions, which is one of the fundamental techniques of traditional cinematography. We decompose the multi-UAV trajectory optimization problem to tackle non-linear cinematographic aspects and obstacle avoidance at separate stages, which allows us to re-plan in real time and react to changes in dynamic environments. The performance of our method has been evaluated in realistic simulation scenarios and field experiments, where we show how it increases the quality of the shots and that it is capable of planning safe trajectories even in cluttered environments.
