Kshitij Jindal

Design and Deployment of an Autonomous Unmanned Ground Vehicle for Urban Firefighting Scenarios

Jul 08, 2021

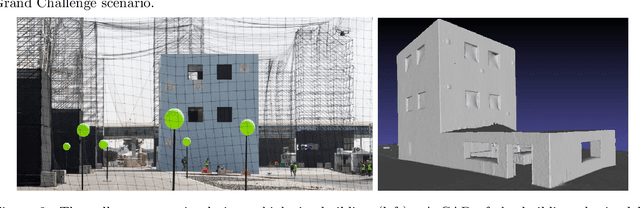

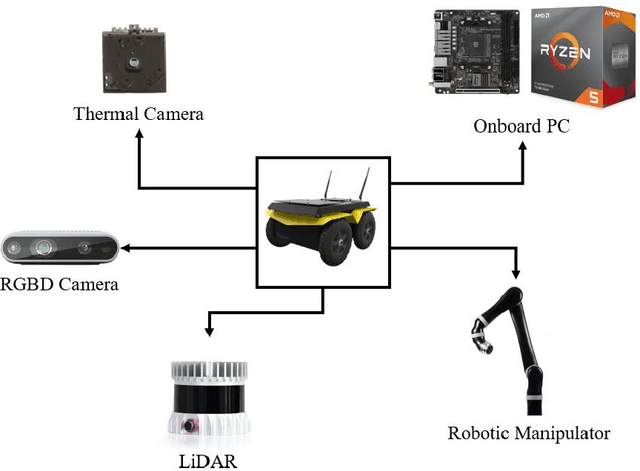

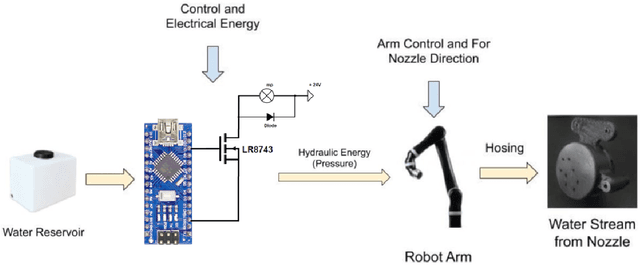

Abstract: Autonomous mobile robots have the potential to carry out missions that are too complex or too dangerous for humans. In this paper, we address the design and autonomous deployment of a ground vehicle equipped with a robotic arm for urban firefighting scenarios. We describe the hardware design and the algorithmic approaches for autonomous navigation, planning, fire source identification, and fire abatement in unstructured urban scenarios. The approach uses on-board sensors for autonomous navigation and thermal camera data for fire source identification. A custom electro-mechanical pump ejects water for fire abatement. The proposed approach is validated through several experiments, in which we show the ability to identify and abate a sample heat source in a building. The whole system was developed and deployed during the Mohamed Bin Zayed International Robotics Challenge (MBZIRC) 2020, both for Challenge No. 3 (Fire Fighting Inside a High-Rise Building) and for the Grand Challenge, where our approach scored the highest number of points among all UGV solutions and was instrumental in winning first place.
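The abstract does not say how thermal camera data is turned into a fire source location. A common baseline, shown here only as a minimal sketch under stated assumptions, is to threshold a radiometric thermal frame and take the centroid of the largest connected hot region. The function `find_heat_source`, the 80 °C threshold and the use of 4-connectivity are hypothetical choices for illustration, not the authors' pipeline.

```python
from collections import deque
from typing import List, Optional, Tuple

# A thermal frame as a 2D grid of temperatures. Assumption: values are in
# degrees Celsius from a radiometric camera; the paper does not specify this.
Grid = List[List[float]]


def find_heat_source(frame: Grid, threshold: float) -> Optional[Tuple[float, float]]:
    """Hypothetical helper: return the (row, col) centroid of the largest
    4-connected region of pixels hotter than `threshold`, or None if no
    pixel exceeds it."""
    rows, cols = len(frame), len(frame[0])
    seen = [[False] * cols for _ in range(rows)]
    best: List[Tuple[int, int]] = []

    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or frame[r][c] <= threshold:
                continue
            # Breadth-first flood fill collecting one connected hot region.
            region: List[Tuple[int, int]] = []
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and not seen[ny][nx] and frame[ny][nx] > threshold:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Keep the largest region; small isolated hot pixels are ignored.
            if len(region) > len(best):
                best = region

    if not best:
        return None
    cy = sum(p[0] for p in best) / len(best)
    cx = sum(p[1] for p in best) / len(best)
    return cy, cx


# Usage: a 5 x 6 frame at 25 °C with a 2 x 2 hot patch and one stray hot pixel.
frame = [[25.0] * 6 for _ in range(5)]
for r in (1, 2):
    for c in (3, 4):
        frame[r][c] = 120.0
frame[4][0] = 100.0
print(find_heat_source(frame, 80.0))  # (1.5, 3.5)
```

Picking the largest region rather than the single hottest pixel makes the estimate less sensitive to isolated noisy or reflective pixels; the pixel centroid would still need to be mapped to a bearing or 3D target using the camera's calibration before aiming a nozzle.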

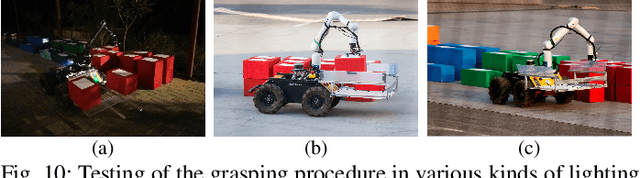

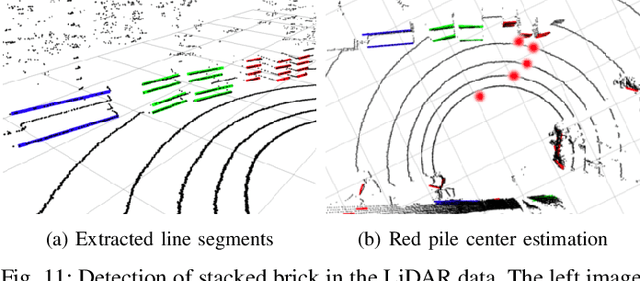

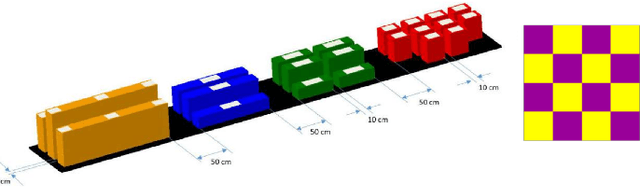

Mobile Manipulator for Autonomous Localization, Grasping and Precise Placement of Construction Material in a Semi-structured Environment

Nov 16, 2020

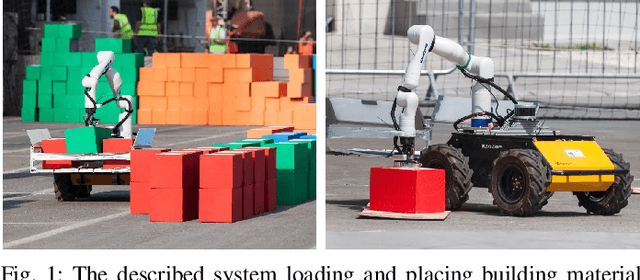

Abstract: Mobile manipulators have the potential to revolutionize modern agriculture, logistics and manufacturing. In this work, we present the design of a ground-based mobile manipulator for automated structure assembly. The proposed system is capable of autonomous localization, grasping, transportation and deployment of construction material in a semi-structured environment. Special effort was made to keep the system invariant to lighting changes and independent of external positioning systems. As a result, the presented system is self-contained and can operate in outdoor and indoor conditions alike. Finally, we present a means to extend the perceptive radius of the vehicle by using it in cooperation with an autonomous drone that provides aerial reconnaissance. The performance of the proposed system was evaluated in a series of experiments conducted in real-world conditions.

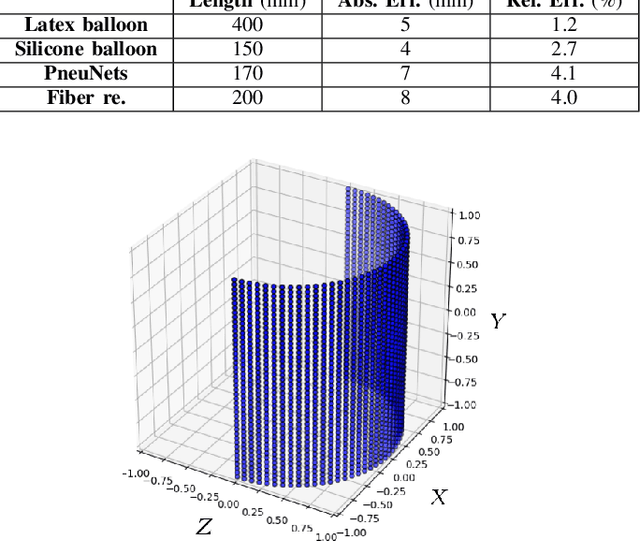

Real-time Soft Robot 3D Proprioception via Deep Vision-based Sensing

Apr 08, 2019

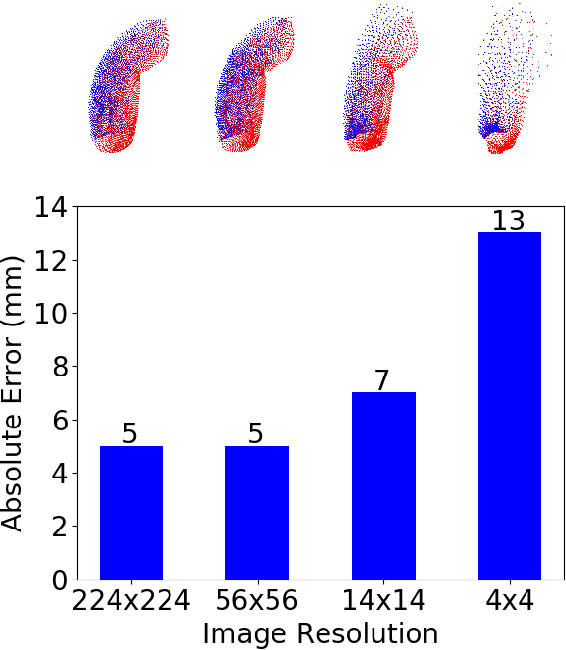

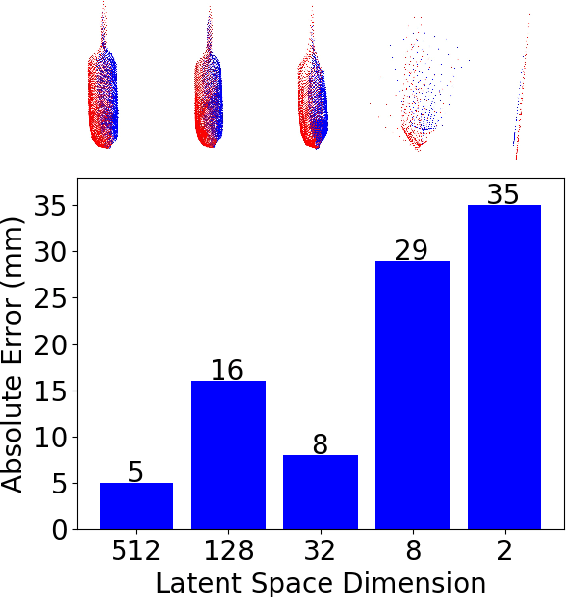

Abstract: Soft robots are welcomed in many robotic applications because of their high flexibility, which also poses a long-standing challenge for their proprioception, i.e., measuring the real-time 3D shape of a soft robot from internal sensors. The challenge lies in both sensor design and robot modeling. In this paper, we propose a framework to measure the real-time, high-resolution 3D shapes of soft robots. The framework is based on an embedded camera that captures the inside/outside patterns of the robot under different loading conditions, and a CNN that produces a latent code representing the robot state; this code is then used to reconstruct the 3D shape with a neural network improved from FoldingNet. We tested the framework on four different soft actuators with various kinds of deformation and achieved real-time computation (<2 ms/frame) for robust, high-precision shape estimation (<5% relative error for 2025 points) at arbitrary resolution. We believe the method could be widely applied to different soft robot designs for proprioception, enabling people to better control them in complicated environments. Our code is available at https://ai4ce.github.io/Deep-Soft-Prorioception/.
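The abstract credits a FoldingNet-derived decoder for reconstruction "at an arbitrary resolution". The idea behind that property is that a folding decoder maps each point of a 2D grid, concatenated with the latent code, to a 3D point, so the number of output points is set by the grid rather than by the network weights. The pure-Python sketch below illustrates only that idea, under loud assumptions: it uses a single folding step with untrained, randomly initialized weights (FoldingNet itself uses two folding steps and learned weights), and `FoldingDecoder`, `grid_2d`, the 8-dimensional code and the layer sizes are hypothetical. It is not the authors' improved network. Since 2025 = 45 × 45, the low-resolution case uses a 45 × 45 grid; whether the paper sampled exactly that grid is an assumption.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def make_layer(n_in: int, n_out: int, rng: random.Random) -> Matrix:
    # Hypothetical dense layer: each row holds n_in weights plus a trailing bias.
    scale = 1.0 / math.sqrt(n_in)
    return [[rng.uniform(-scale, scale) for _ in range(n_in + 1)] for _ in range(n_out)]


def apply_layer(w: Matrix, x: Sequence[float], relu: bool) -> Vector:
    out = []
    for row in w:
        # zip stops at len(x), so the bias in row[-1] is added separately.
        z = row[-1] + sum(wi * xi for wi, xi in zip(row, x))
        out.append(max(0.0, z) if relu else z)
    return out


def grid_2d(n: int) -> List[Vector]:
    # n x n lattice of (u, v) points spanning [-1, 1]^2.
    if n < 2:
        raise ValueError("grid needs at least 2 points per side")
    step = 2.0 / (n - 1)
    return [[-1.0 + i * step, -1.0 + j * step] for i in range(n) for j in range(n)]


class FoldingDecoder:
    """Single folding step: MLP([latent code, u, v]) -> (x, y, z).
    Weights are random here; a real decoder would be trained."""

    def __init__(self, latent_dim: int, hidden: int = 16, seed: int = 0):
        rng = random.Random(seed)
        self.l1 = make_layer(latent_dim + 2, hidden, rng)
        self.l2 = make_layer(hidden, hidden, rng)
        self.l3 = make_layer(hidden, 3, rng)

    def __call__(self, code: Sequence[float], n: int) -> List[Vector]:
        points = []
        for uv in grid_2d(n):
            h = apply_layer(self.l1, list(code) + uv, relu=True)
            h = apply_layer(self.l2, h, relu=True)
            points.append(apply_layer(self.l3, h, relu=False))
        return points


# Usage: the same latent code decoded at two resolutions with the same weights.
decoder = FoldingDecoder(latent_dim=8)
code = [0.1] * 8
coarse = decoder(code, 45)  # 45 * 45 = 2025 points
fine = decoder(code, 64)    # 64 * 64 = 4096 points
print(len(coarse), len(fine))  # 2025 4096
```

Because the grid density is a runtime argument, a denser surface can be requested without retraining, which is what makes the "arbitrary resolution" claim plausible for this family of decoders.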
