Erdong Xiao

A Deep Reinforcement Learning Environment for Particle Robot Navigation and Object Manipulation

Mar 12, 2022

Abstract: Particle robots are novel biologically inspired robotic systems in which locomotion can be achieved collectively and robustly, but not independently. While their control is currently limited to a hand-crafted policy for basic locomotion tasks, such a multi-robot system could potentially be controlled more efficiently via Deep Reinforcement Learning (DRL) across different tasks. However, the particle robot system presents a new set of challenges for DRL that differ from those of existing swarm robotics systems: the low degrees of freedom of each robot and the increased need for coordination between robots. We present a 2D particle robot simulator using the OpenAI Gym interface and Pymunk as the physics engine, and introduce new tasks and challenges to study the underexplored applications of DRL in the particle robot system. Moreover, we use Stable-Baselines3 to provide a set of benchmarks for the tasks. Current baseline DRL algorithms show signs of accomplishing the tasks but are not yet able to match the performance of the hand-crafted policy. Further development of DRL algorithms is necessary to accomplish the proposed tasks.
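To make the Gym-style interaction loop mentioned in the abstract concrete, here is a minimal, dependency-free sketch. It is not the paper's simulator: the class `ToyParticleEnv`, its 1D dynamics, the reward, and all parameter values are assumptions chosen only to illustrate the two properties the abstract highlights, namely one binary degree of freedom per robot and motion that only occurs collectively.

```python
import random


class ToyParticleEnv:
    """Hypothetical Gym-style toy (reset/step), NOT the paper's Pymunk simulator."""

    def __init__(self, n_particles=5, goal=10.0, max_steps=50, seed=0):
        self.n = n_particles
        self.goal = goal            # assumed 1D target position for the swarm centroid
        self.max_steps = max_steps
        self.seed = seed
        self.positions = []
        self.t = 0

    def _centroid(self):
        return sum(self.positions) / self.n

    def reset(self):
        # Re-seed so every episode starts from the same layout.
        rng = random.Random(self.seed)
        self.positions = [rng.uniform(-1.0, 1.0) for _ in range(self.n)]
        self.t = 0
        return list(self.positions)

    def step(self, action):
        # One binary action per particle: 1 = expand, 0 = contract.
        if len(action) != self.n:
            raise ValueError("one binary action per particle is required")
        before = abs(self.goal - self._centroid())
        expanded = sum(1 for a in action if a)
        shift = 0.0
        # Assumed toy rule: a single particle cannot move the group on its own.
        if expanded >= 2:
            direction = 1.0 if self.goal >= self._centroid() else -1.0
            shift = direction * 0.5 * expanded / self.n
        self.positions = [p + shift for p in self.positions]
        self.t += 1
        after = abs(self.goal - self._centroid())
        reward = before - after     # progress of the centroid toward the goal
        done = after < 0.5 or self.t >= self.max_steps
        return list(self.positions), reward, done, {}


env = ToyParticleEnv()
obs = env.reset()
obs, reward, done, info = env.step([1, 1, 1, 1, 1])  # all particles expand together
```

In an actual setup, a class like this would subclass the Gym environment base class and declare observation and action spaces so that Stable-Baselines3 algorithms could train on it; those details are omitted here.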

Real-time Soft Robot 3D Proprioception via Deep Vision-based Sensing

Apr 08, 2019

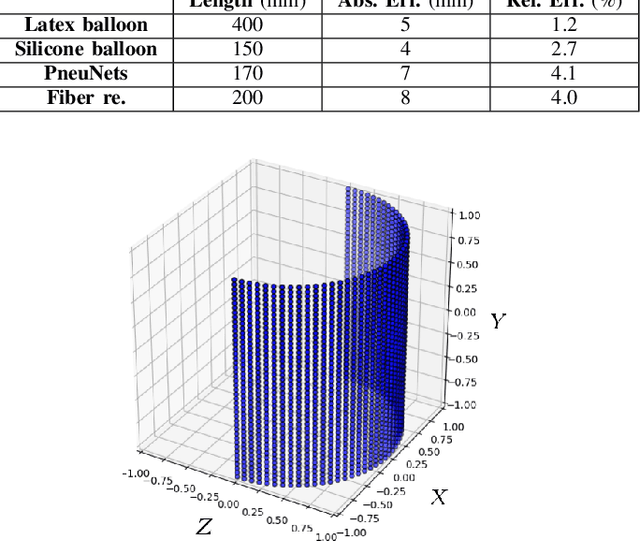

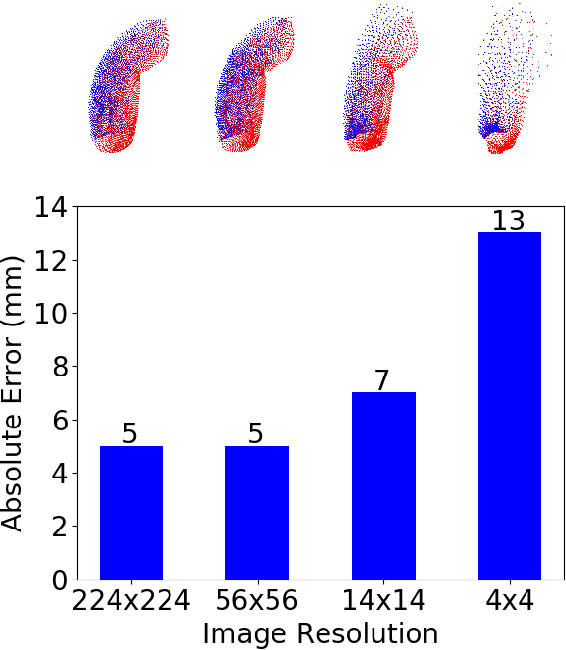

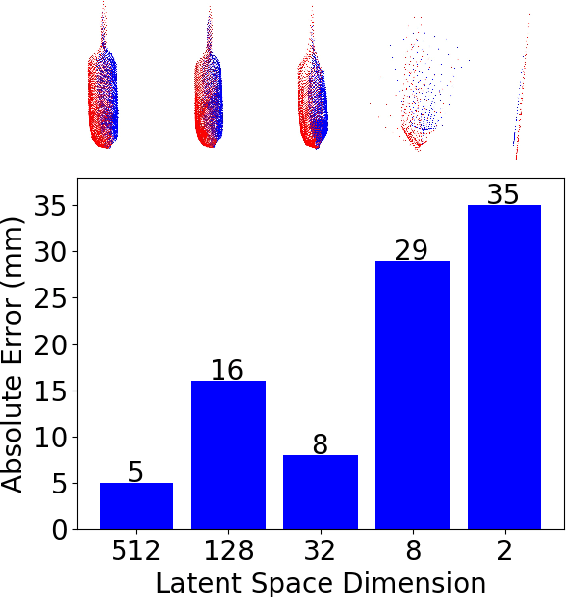

Abstract: Soft robots are welcomed in many robotic applications because of their high flexibility, which also poses a long-standing challenge for their proprioception, that is, measuring the real-time 3D shapes of soft robots from internal sensors. The challenge lies in both sensor design and robot modeling. In this paper, we propose a framework to measure the real-time high-resolution 3D shapes of soft robots. The framework is based on an embedded camera that captures the inside/outside patterns of the robots under different loading conditions, and a CNN that produces a latent code representing the robot state, which can then be used to reconstruct the 3D shape using a neural network improved from FoldingNet. We tested the framework on four different soft actuators with various kinds of deformations, and achieved real-time computation ($<$2 ms/frame) for robust, high-precision shape estimation ($<$5% relative error for 2025 points) at an arbitrary resolution. We believe the method could be widely applied to different soft robot designs for proprioception, enabling people to better control them in complicated environments. Our code is available at https://ai4ce.github.io/Deep-Soft-Prorioception/.
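The "arbitrary resolution" claim follows from how a FoldingNet-style decoder works: it deforms a 2D grid of sample points into 3D conditioned on a latent code, so the grid density can be chosen freely at inference time. The sketch below illustrates only that idea. The function `toy_fold` is a hand-written stand-in for the learned folding network, the scalar `latent` replaces the CNN's latent vector, and all names are hypothetical; a 45 x 45 grid is used by default because it yields the 2025 points quoted in the abstract.

```python
def make_grid(side):
    """Return side*side points evenly spaced on the square [-1, 1] x [-1, 1]."""
    if side < 2:
        raise ValueError("side must be at least 2")
    step = 2.0 / (side - 1)
    return [(-1.0 + i * step, -1.0 + j * step)
            for i in range(side) for j in range(side)]


def toy_fold(point, latent):
    """Assumed stand-in for the learned folding MLP: bend the sheet along u."""
    u, v = point
    return (u, v, latent * u * u)


def decode(latent, side=45):
    """Map a latent value to a 3D point cloud by folding a side x side grid."""
    return [toy_fold(p, latent) for p in make_grid(side)]


cloud = decode(0.3)             # 45 * 45 = 2025 points
coarse = decode(0.3, side=10)   # same latent, lower sampling resolution
```

Because the latent code is independent of the grid, the same encoded robot state can be decoded at any sampling density without re-running the encoder.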
