Filip Majer

Mobile Manipulator for Autonomous Localization, Grasping and Precise Placement of Construction Material in a Semi-structured Environment

Nov 16, 2020

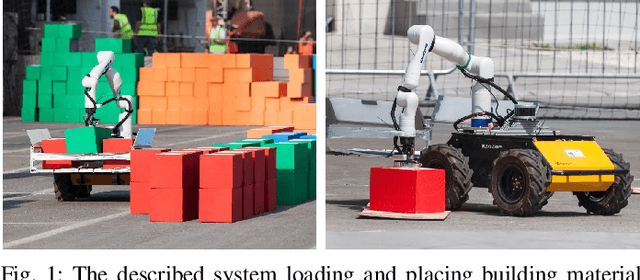

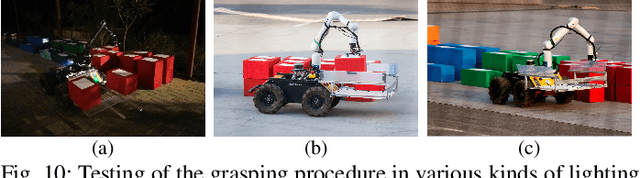

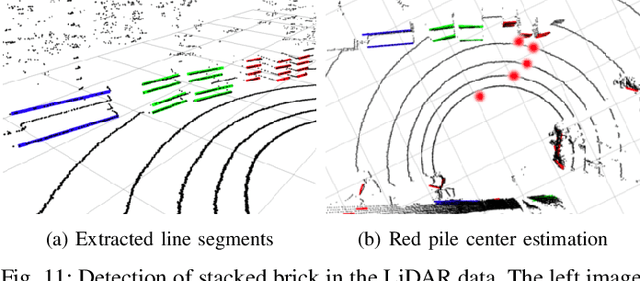

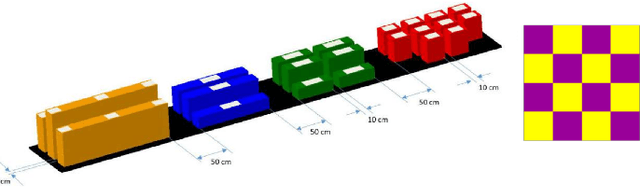

Abstract: Mobile manipulators have the potential to revolutionize modern agriculture, logistics and manufacturing. In this work, we present the design of a ground-based mobile manipulator for automated structure assembly. The proposed system is capable of autonomous localization, grasping, transportation and deployment of construction material in a semi-structured environment. Special effort was put into making the system invariant to lighting changes and not reliant on external positioning systems. Therefore, the presented system is self-contained and capable of operating in outdoor and indoor conditions alike. Finally, we present means to extend the perceptive radius of the vehicle by using it in cooperation with an autonomous drone, which provides aerial reconnaissance. Performance of the proposed system has been evaluated in a series of experiments conducted in real-world conditions.

Navigation without localisation: reliable teach and repeat based on the convergence theorem

Jul 30, 2018

Abstract: We present a novel concept for teach-and-repeat visual navigation. The proposed concept is based on a mathematical model, which indicates that in teach-and-repeat navigation scenarios, mobile robots do not need to perform explicit localisation. Instead, a mobile robot which repeats a previously taught path can simply 'replay' the learned velocities, while using its camera information only to correct its heading relative to the intended path. To support our claim, we establish a position error model of a robot which traverses a taught path by only correcting its heading. Then, we outline a mathematical proof which shows that this position error does not diverge over time. Based on the insights from the model, we present a simple monocular teach-and-repeat navigation method. The method is computationally efficient, does not require camera calibration, and can learn and autonomously traverse arbitrarily-shaped paths. In a series of experiments, we demonstrate that the method can reliably guide mobile robots in realistic indoor and outdoor conditions, and can cope with imperfect odometry, landmark deficiency, illumination variations and naturally-occurring environment changes. Furthermore, we provide the navigation system and the datasets gathered at http://www.github.com/gestom/stroll_bearnav.

* The paper will be presented at IROS 2018 in Madrid
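
The heading-only correction described in the abstract can be illustrated with a toy simulation. This is a minimal sketch under strong assumptions, not the authors' implementation: the taught path is a straight line along the x axis, the camera is reduced to the bearing of a single point landmark, and the function name `repeat_straight_path`, the landmark position, the gain and the time step are all hypothetical values chosen for illustration.

```python
import math

def repeat_straight_path(taught_speeds, lateral_offset, landmark_x=10.0,
                         gain=2.0, dt=0.1):
    """Replay taught forward speeds while correcting only the heading.

    The taught path is assumed to be the x axis, so the lateral error is |y|.
    A single landmark at (landmark_x, 0) stands in for the camera image
    (an assumption of this sketch). Returns the lateral error per step.
    """
    x, y, heading = 0.0, lateral_offset, 0.0
    errors = [abs(y)]
    for v in taught_speeds:
        # Landmark bearing in the robot frame. During teaching the robot
        # faced the landmark, so the taught bearing is 0 and the difference
        # between the two views is just this value.
        bearing = math.atan2(-y, landmark_x - x) - heading
        # Heading correction only: no position estimate is ever computed.
        heading += gain * bearing * dt
        # The forward speed is replayed exactly as it was taught.
        x += v * math.cos(heading) * dt
        y += v * math.sin(heading) * dt
        errors.append(abs(y))
    return errors

# Taught run: 160 steps at 0.5 m/s, i.e. about 8 m toward the landmark.
taught = [0.5] * 160
errors = repeat_straight_path(taught, lateral_offset=1.0)
print(f"initial error {errors[0]:.2f} m, final error {errors[-1]:.2f} m")
```

In this simplified setting the robot starts 1 m off the taught path and never localises itself, yet the lateral error shrinks as it drives, because steering toward the same view it saw during teaching pulls it back toward the path.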
