Tim Fingscheidt

DisContSE: Single-Step Diffusion Speech Enhancement Based on Joint Discrete and Continuous Embeddings

Jan 29, 2026

Abstract:Diffusion speech enhancement methods operating on discrete audio codec features have gained immense attention due to their improved speech component reconstruction capability. However, they usually suffer from high inference computational complexity due to multiple reverse process iterations. Furthermore, they generally achieve promising results on non-intrusive metrics but show poor performance on intrusive metrics, as they may struggle in reconstructing the correct phones. In this paper, we propose DisContSE, an efficient diffusion-based speech enhancement model on joint discrete codec tokens and continuous embeddings. Our contributions are three-fold. First, we formulate both a discrete and a continuous enhancement module operating on discrete audio codec tokens and continuous embeddings, respectively, to achieve improved fidelity and intelligibility simultaneously. Second, a semantic enhancement module is further adopted to achieve optimal phonetic accuracy. Third, we achieve a single-step efficient reverse process in inference with a novel quantization error mask initialization strategy, which, to our knowledge, is the first successful single-step diffusion speech enhancement based on an audio codec. Trained and evaluated on URGENT 2024 Speech Enhancement Challenge data splits, the proposed DisContSE outperforms top-reported time- and frequency-domain diffusion baseline methods in PESQ, POLQA, UTMOS, and in a subjective ITU-T P.808 listening test, clearly achieving an overall top rank.

Noise-Robust AV-ASR Using Visual Features Both in the Whisper Encoder and Decoder

Jan 26, 2026

Abstract:In audiovisual automatic speech recognition (AV-ASR) systems, information fusion of visual features in a pre-trained ASR has proven to be a promising method to improve noise robustness. In this work, based on the prominent Whisper ASR, first, we propose a simple and effective visual fusion method -- use of visual features both in encoder and decoder (dual-use) -- to learn the audiovisual interactions in the encoder and to weigh modalities in the decoder. Second, we compare visual fusion methods in Whisper models of various sizes. Our proposed dual-use method shows consistent noise robustness improvement, e.g., a 35% relative improvement (WER: 4.41% vs. 6.83%) based on Whisper small, and a 57% relative improvement (WER: 4.07% vs. 9.53%) based on Whisper medium, compared to typical reference middle fusion in babble noise with a signal-to-noise ratio (SNR) of 0 dB. Third, we conduct ablation studies examining the impact of various module designs and fusion options. Fine-tuned on 1929 hours of audiovisual data, our dual-use method using Whisper medium achieves 4.08% (MUSAN babble noise) and 4.43% (NoiseX babble noise) average WER across various SNRs, thereby establishing a new state-of-the-art in noisy conditions on the LRS3 AV-ASR benchmark. Our code is at https://github.com/ifnspaml/Dual-Use-AVASR
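
The relative improvements quoted in this abstract can be reproduced from the stated WER pairs. The following is a minimal sketch, assuming the usual definition of relative improvement as the WER reduction divided by the baseline WER; the function name `relative_improvement` is introduced here for illustration and does not come from the paper or its repository.

```python
def relative_improvement(baseline_wer: float, proposed_wer: float) -> float:
    """Relative WER reduction of the proposed system with respect to the baseline."""
    return (baseline_wer - proposed_wer) / baseline_wer


# WER pairs (in percent) quoted in the abstract: middle fusion vs. dual-use,
# babble noise at 0 dB SNR.
small = relative_improvement(baseline_wer=6.83, proposed_wer=4.41)
medium = relative_improvement(baseline_wer=9.53, proposed_wer=4.07)

print(f"Whisper small:  {small:.1%}")   # 35.4%
print(f"Whisper medium: {medium:.1%}")  # 57.3%
```

Rounded to whole percentages, these values match the 35% and 57% stated in the abstract.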

ICASSP 2026 URGENT Speech Enhancement Challenge

Jan 20, 2026

Abstract:The ICASSP 2026 URGENT Challenge advances the series by focusing on universal speech enhancement (SE) systems that handle diverse distortions, domains, and input conditions. This overview paper details the challenge's motivation, task definitions, datasets, baseline systems, evaluation protocols, and results. The challenge is divided into two complementary tracks. Track 1 focuses on universal speech enhancement, while Track 2 introduces speech quality assessment for enhanced speech. The challenge attracted over 80 team registrations, with 29 submitting valid entries, demonstrating significant community interest in robust SE technologies.

Engineering of Hallucination in Generative AI: It's not a Bug, it's a Feature

Jan 11, 2026

Abstract:Generative artificial intelligence (AI) is conquering our lives at lightning speed. Large language models such as ChatGPT answer our questions or write texts for us; large computer vision models such as GAIA-1 generate videos on the basis of text descriptions or continue prompted videos. These neural network models are trained using large amounts of text or video data, strictly according to the real data employed in training. However, there is a surprising observation: When we use these models, they only function satisfactorily when they are allowed a certain degree of fantasy (hallucination). While hallucination usually has a negative connotation in generative AI - after all, ChatGPT is expected to give a fact-based answer! - this article recapitulates some simple means of probability engineering that can be used to encourage generative AI to hallucinate to a limited extent and thus lead to the desired results. We have to ask ourselves: Is hallucination in generative AI probably not a bug, but rather a feature?

OpenViGA: Video Generation for Automotive Driving Scenes by Streamlining and Fine-Tuning Open Source Models with Public Data

Sep 18, 2025

Abstract:Recent successful video generation systems that predict and create realistic automotive driving scenes from short video inputs assign tokenization, future state prediction (world model), and video decoding to dedicated models. These approaches often utilize large models that require significant training resources, offer limited insight into design choices, and lack publicly available code and datasets. In this work, we address these deficiencies and present OpenViGA, an open video generation system for automotive driving scenes. Our contributions are as follows. First, unlike several earlier works for video generation, such as GAIA-1, we provide a deep analysis of the three components of our system by separate quantitative and qualitative evaluation: image tokenizer, world model, and video decoder. Second, we build purely upon powerful pre-trained open source models from various domains, which we fine-tune on publicly available automotive data (BDD100K) on GPU hardware at academic scale. Third, we build a coherent video generation system by streamlining interfaces of our components. Fourth, due to public availability of the underlying models and data, we allow full reproducibility. Finally, we also publish our code and models on GitHub. For an image size of 256x256 at 4 fps, we are able to predict realistic driving scene videos frame-by-frame with only one frame of algorithmic latency.

Interspeech 2025 URGENT Speech Enhancement Challenge

May 29, 2025

Abstract:There has been a growing effort to develop universal speech enhancement (SE) to handle inputs with various speech distortions and recording conditions. The URGENT Challenge series aims to foster such universal SE by embracing a broad range of distortion types, increasing data diversity, and incorporating extensive evaluation metrics. This work introduces the Interspeech 2025 URGENT Challenge, the second edition of the series, to explore several aspects that have received limited attention so far: language dependency, universality for more distortion types, data scalability, and the effectiveness of using noisy training data. We received 32 submissions, where the best system uses a discriminative model, while most other competitive ones are hybrid methods. Analysis reveals some key findings: (i) some generative or hybrid approaches are preferred in subjective evaluations over the top discriminative model, and (ii) purely generative SE models can exhibit language dependency.

Improving Block-Wise LLM Quantization by 4-bit Block-Wise Optimal Float (BOF4): Analysis and Variations

May 10, 2025

Abstract:Large language models (LLMs) demand extensive memory capacity during both fine-tuning and inference. To enable memory-efficient fine-tuning, existing methods apply block-wise quantization techniques, such as NF4 and AF4, to the network weights. We show that these quantization techniques incur suboptimal quantization errors. Therefore, as a first novelty, we propose an optimization approach for block-wise quantization. Using this method, we design a family of quantizers named 4-bit block-wise optimal float (BOF4), which consistently reduces the quantization error compared to both baseline methods. We provide both a theoretical and a data-driven solution for the optimization process and prove their practical equivalence. Secondly, we propose a modification to the employed normalization method based on the signed absolute block maximum (BOF4-S), enabling further reduction of the quantization error and empirically achieving less degradation in language modeling performance. Thirdly, we explore additional variations of block-wise quantization methods applied to LLMs through an experimental study on the importance of accurately representing zero and large-amplitude weights on the one hand, and optimization towards various error metrics on the other hand. Lastly, we introduce a mixed-precision quantization strategy dubbed outlier-preserving quantization (OPQ) to address the distributional mismatch induced by outlier weights in block-wise quantization. By storing outlier weights in 16-bit precision (OPQ) while applying BOF4-S, we achieve top performance among 4-bit block-wise quantization techniques w.r.t. perplexity.
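
To illustrate the block-wise quantization setting this abstract refers to, the following is a minimal sketch, not the authors' implementation. It assumes that each block of weights is normalized by its absolute maximum (or, as one reading of the "signed absolute block maximum", by the signed value of the largest-magnitude weight) and then mapped to the nearest of 16 codebook levels. The codebook here is a uniform placeholder, not the NF4, AF4, or BOF4 levels, and all function names are hypothetical.

```python
from typing import List, Tuple

# Placeholder 4-bit codebook: 16 uniform levels in [-1, 1].
# Assumption for illustration only; these are NOT the NF4, AF4, or BOF4 levels.
CODEBOOK = [-1.0 + 2.0 * i / 15 for i in range(16)]


def quantize_block(block: List[float], signed: bool = False) -> Tuple[List[int], float]:
    """Quantize one block to 4-bit codebook indices plus one scale factor."""
    peak = max(block, key=abs)            # weight with the largest magnitude
    scale = peak if signed else abs(peak)  # signed variant keeps the sign of the peak
    if scale == 0.0:
        scale = 1.0                        # all-zero block: avoid division by zero
    indices = [
        min(range(16), key=lambda k: abs(CODEBOOK[k] - w / scale))
        for w in block
    ]
    return indices, scale


def dequantize_block(indices: List[int], scale: float) -> List[float]:
    """Reconstruct approximate weights from indices and the block scale."""
    return [CODEBOOK[i] * scale for i in indices]


def quantize(weights: List[float], block_size: int, signed: bool = False) -> List[float]:
    """Quantize and immediately dequantize a flat weight list, block by block."""
    out: List[float] = []
    for start in range(0, len(weights), block_size):
        idx, scale = quantize_block(weights[start:start + block_size], signed)
        out.extend(dequantize_block(idx, scale))
    return out


weights = [0.1, -0.4, 0.25, 0.05, -0.02, 0.03, 0.01, -0.08]
for signed in (False, True):
    recon = quantize(weights, block_size=4, signed=signed)
    mse = sum((w - r) ** 2 for w, r in zip(weights, recon)) / len(weights)
    print(f"signed={signed}: MSE={mse:.2e}")
```

With signed normalization, the largest-magnitude weight of every block maps to +1 after normalization, so a codebook designed for that scheme would only need to represent +1 exactly at one end. Replacing the uniform placeholder with optimized levels is where the methods named in the abstract differ.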

A Lightweight Image Super-Resolution Transformer Trained on Low-Resolution Images Only

Mar 30, 2025

Abstract:Transformer architectures prominently lead single-image super-resolution (SISR) benchmarks, reconstructing high-resolution (HR) images from their low-resolution (LR) counterparts. Their strong representative power, however, comes with a higher demand for training data compared to convolutional neural networks (CNNs). For many real-world SR applications, high-quality HR training images are not available, sparking interest in LR-only training methods. The LR-only SISR benchmark mimics this condition by allowing only low-resolution (LR) images for model training. For a 4x super-resolution, this effectively reduces the amount of available training data to 6.25% of the HR image pixels, which puts the employment of a data-hungry transformer model into question. In this work, we are the first to utilize a lightweight vision transformer model with LR-only training methods addressing the unsupervised SISR LR-only benchmark. We adopt and configure a recent LR-only training method from microscopy image super-resolution to macroscopic real-world data, resulting in our multi-scale training method for bicubic degradation (MSTbic). Furthermore, we compare it with reference methods and prove its effectiveness both for a transformer and a CNN model. We evaluate on the classic SR benchmark datasets Set5, Set14, BSD100, Urban100, and Manga109, and show superior performance over state-of-the-art (so far: CNN-based) LR-only SISR methods. The code is available on GitHub: https://github.com/ifnspaml/SuperResolutionMultiscaleTraining.
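
The 6.25% figure in this abstract follows from the scale factor alone: downscaling by a factor s in both width and height leaves 1/s² of the pixels. A minimal sketch of that arithmetic, with a function name chosen here for illustration:

```python
def lr_pixel_fraction(scale: int) -> float:
    """Fraction of HR pixels that remain in an LR image downscaled by `scale` per axis."""
    return 1.0 / (scale * scale)


print(f"{lr_pixel_fraction(4):.2%}")  # 6.25%
```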

Foundation Models for Amodal Video Instance Segmentation in Automated Driving

Sep 21, 2024

Abstract:In this work, we study amodal video instance segmentation for automated driving. Previous works perform amodal video instance segmentation relying on methods trained on entirely labeled video data with techniques borrowed from standard video instance segmentation. Such amodally labeled video data is difficult and expensive to obtain and the resulting methods suffer from a trade-off between instance segmentation and tracking performance. To largely solve this issue, we propose to study the application of foundation models for this task. More precisely, we exploit the extensive knowledge of the Segment Anything Model (SAM), while fine-tuning it to the amodal instance segmentation task. Given an initial video instance segmentation, we sample points from the visible masks to prompt our amodal SAM. We use a point memory to store those points. If a previously observed instance is not predicted in a following frame, we retrieve its most recent points from the point memory and use a point tracking method to follow those points to the current frame, together with the corresponding last amodal instance mask. This way, while basing our method on an amodal instance segmentation, we nevertheless obtain video-level amodal instance segmentation results. Our resulting S-AModal method achieves state-of-the-art results in amodal video instance segmentation while removing the need for amodal video-based labels. Code for S-AModal is available at https://github.com/ifnspaml/S-AModal.

Non-Causal to Causal SSL-Supported Transfer Learning: Towards a High-Performance Low-Latency Speech Vocoder

Aug 07, 2024

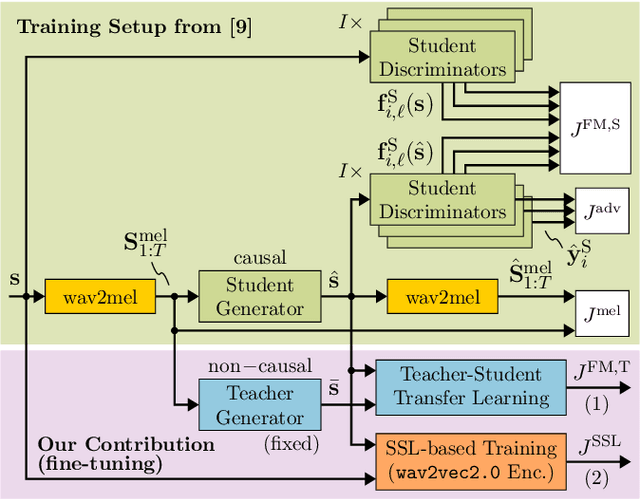

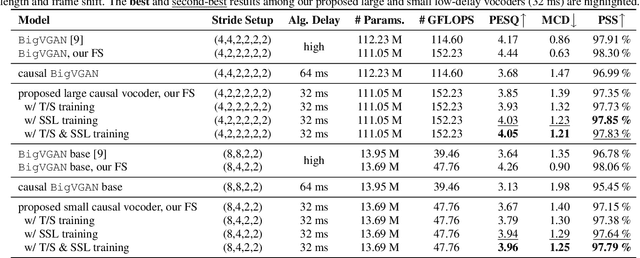

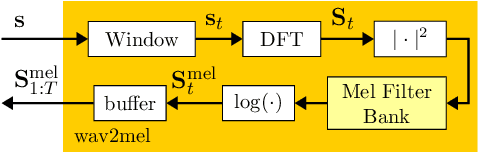

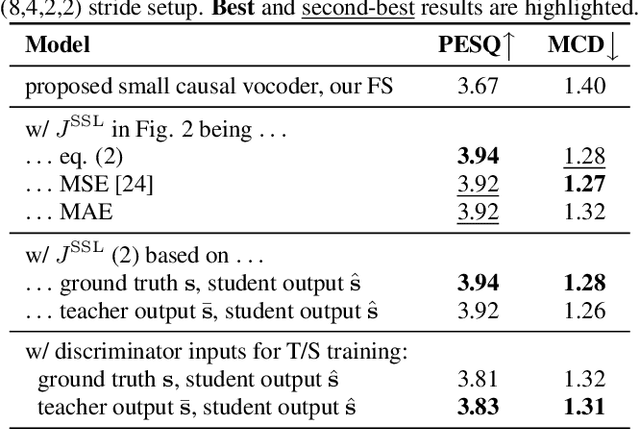

Abstract:Recently, BigVGAN has emerged as a high-performance speech vocoder. Its sequence-to-sequence-based synthesis, however, prohibits usage in low-latency conversational applications. Our work addresses this shortcoming in three steps. First, we introduce low latency into BigVGAN via implementing causal convolutions, yielding decreased performance. Second, to regain performance, we propose a teacher-student transfer learning scheme to distill the high-delay non-causal BigVGAN into our low-latency causal vocoder. Third, taking advantage of a self-supervised learning (SSL) model, in our case wav2vec 2.0, we align its encoder speech representations extracted from our low-latency causal vocoder to the ground truth ones. In speaker-independent settings, both proposed training schemes notably elevate the performance of our low-latency vocoder, closing in on the original high-delay BigVGAN. At only 21% higher complexity, our best small causal vocoder achieves 3.96 PESQ and 1.25 MCD, outperforming even the original small non-causal BigVGAN (3.64 PESQ) by 0.32 PESQ and 0.1 MCD points, respectively.
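
The causal convolutions mentioned in this abstract can be illustrated with a minimal one-dimensional sketch. It assumes the common construction of left-padding the input with zeros so that each output sample depends only on current and past input samples. It is not the BigVGAN code, it ignores dilation and transposed convolutions, and the function name is hypothetical.

```python
from typing import List


def causal_conv1d(x: List[float], kernel: List[float]) -> List[float]:
    """Compute y[t] = sum_j kernel[j] * x[t - j], treating x[t] as 0 for t < 0."""
    k = len(kernel)
    padded = [0.0] * (k - 1) + list(x)  # left padding only: no look-ahead
    return [
        sum(kernel[j] * padded[t + k - 1 - j] for j in range(k))
        for t in range(len(x))
    ]


x = [1.0, 2.0, 3.0, 4.0]
kernel = [0.5, 0.5]
y = causal_conv1d(x, kernel)

# Changing a future sample must not alter earlier outputs.
x_future_changed = [1.0, 2.0, 3.0, 100.0]
y_changed = causal_conv1d(x_future_changed, kernel)
print(y[:3] == y_changed[:3])  # True
```

Because no future samples are needed, such a layer adds no algorithmic look-ahead, which is what makes it suitable for low-latency synthesis. A symmetric (non-causal) padding would instead require samples after time t.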
