Thomas Low

Automating Vascular Shunt Insertion with the dVRK Surgical Robot

Nov 04, 2022

Abstract: Vascular shunt insertion is a fundamental surgical procedure used to temporarily restore blood flow to tissues. It is often performed in the field after major trauma. We formulate a problem of automated vascular shunt insertion and propose a pipeline to perform Automated Vascular Shunt Insertion (AVSI) using a da Vinci Research Kit. The pipeline uses a learned visual model to estimate the locus of the vessel rim, plans a grasp on the rim, and moves to grasp at that point. The first robot gripper then pulls the rim to stretch open the vessel with a dilation motion. The second robot gripper then proceeds to insert a shunt into the vessel phantom (a model of the blood vessel) with a chamfer tilt followed by a screw motion. Results suggest that AVSI achieves a high success rate even with tight tolerances and varying vessel orientations up to 30°. Supplementary material, dataset, videos, and visualizations can be found at https://sites.google.com/berkeley.edu/autolab-avsi.

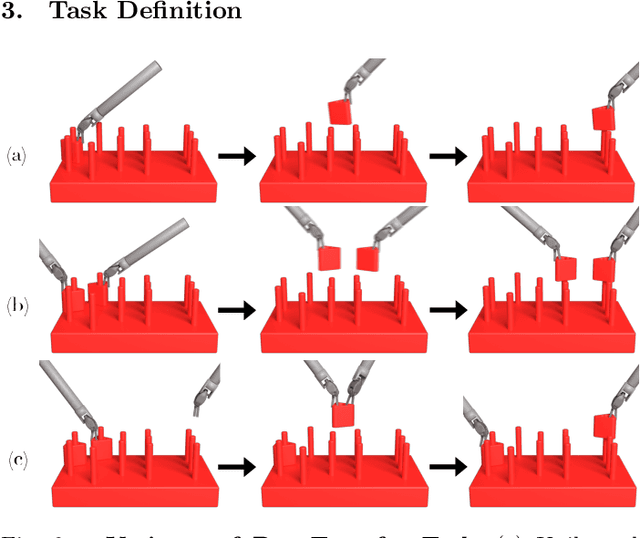

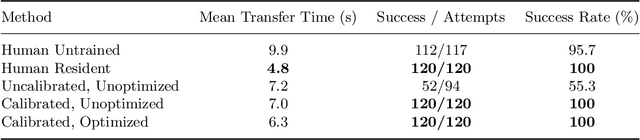

Superhuman Surgical Peg Transfer Using Depth-Sensing and Deep Recurrent Neural Networks

Dec 24, 2020

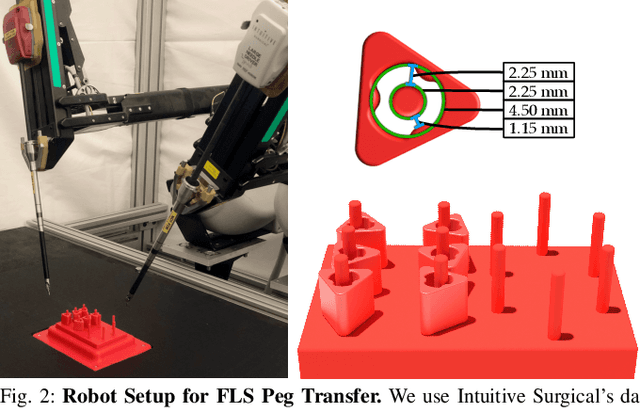

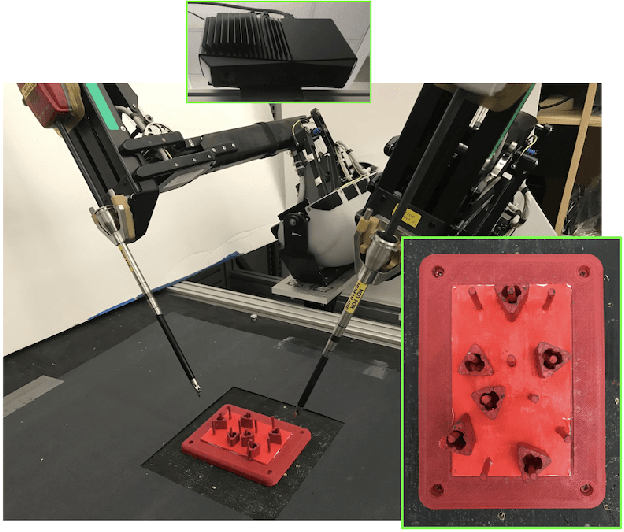

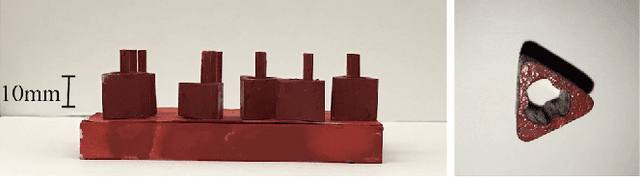

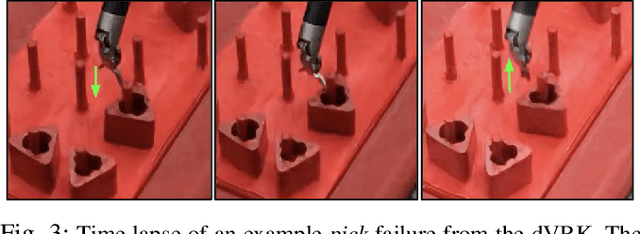

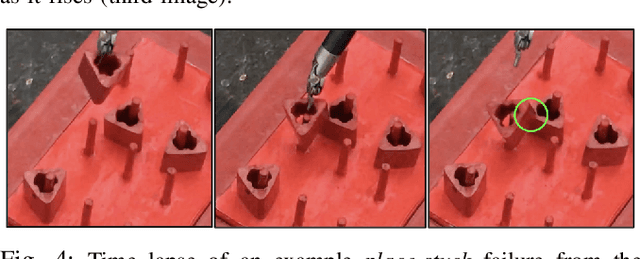

Abstract: We consider the automation of the well-known peg-transfer task from the Fundamentals of Laparoscopic Surgery (FLS). While human surgeons teleoperate robots to perform this task with great dexterity, it remains challenging to automate. We present an approach that leverages emerging innovations in depth sensing, deep learning, and Pieper's method for computing inverse kinematics with time-minimized joint motion. We use the da Vinci Research Kit (dVRK) surgical robot with a Zivid depth sensor, and automate three variants of the peg-transfer task: unilateral, bilateral without handovers, and bilateral with handovers. We use 3D-printed fiducial markers with depth sensing and a deep recurrent neural network to improve the precision of the dVRK to less than 1 mm. We report experimental results for 1800 block transfer trials. Results suggest that the fully automated system can outperform an experienced human surgical resident, who performs far better than untrained humans, in terms of both speed and success rate. For the most difficult variant of peg transfer (with handovers), we compare the performance of the surgical resident with that of the automated system over 120 trials for each. The experienced surgical resident achieves a success rate of 93.2% with a mean transfer time of 8.6 seconds. The automated system achieves a success rate of 94.1% with a mean transfer time of 8.1 seconds. To our knowledge, this is the first fully automated system to achieve "superhuman" performance in both speed and success on peg transfer. Supplementary material is available at https://sites.google.com/view/surgicalpegtransfer.

Intermittent Visual Servoing: Efficiently Learning Policies Robust to Instrument Changes for High-precision Surgical Manipulation

Nov 12, 2020

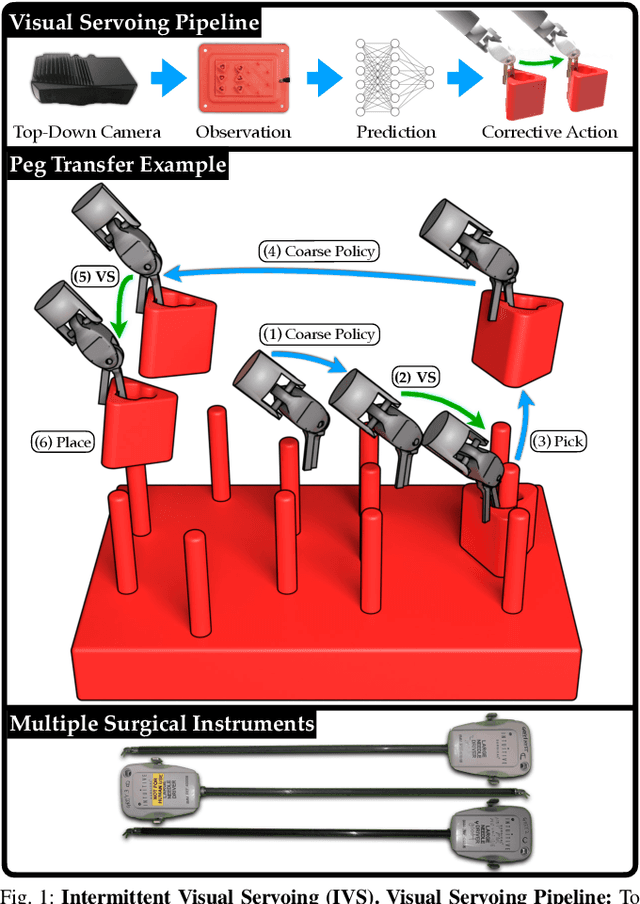

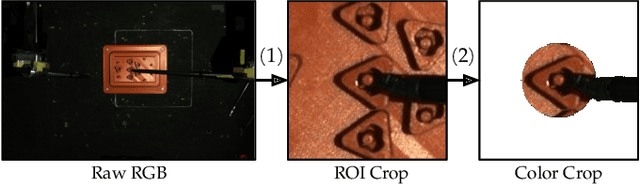

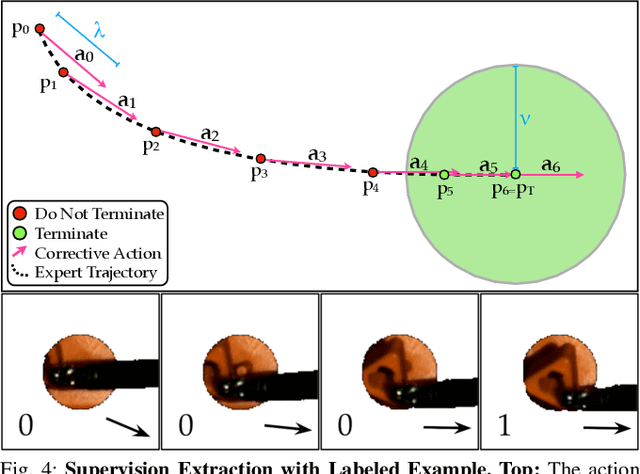

Abstract: Automation of surgical tasks using cable-driven robots is challenging due to backlash, hysteresis, and cable tension, and these issues are exacerbated as surgical instruments must often be changed during an operation. In this work, we propose a framework for automation of high-precision surgical tasks by learning sample efficient, accurate, closed-loop policies that operate directly on visual feedback instead of robot encoder estimates. This framework, which we call intermittent visual servoing (IVS), intermittently switches to a learned visual servo policy for high-precision segments of repetitive surgical tasks while relying on a coarse open-loop policy for the segments where precision is not necessary. To compensate for cable-related effects, we apply imitation learning to rapidly train a policy that maps images of the workspace and instrument from a top-down RGB camera to small corrective motions. We train the policy using only 180 human demonstrations that are roughly 2 seconds each. Results on a da Vinci Research Kit suggest that combining the coarse policy with half a second of corrections from the learned policy during each high-precision segment improves the success rate on the Fundamentals of Laparoscopic Surgery peg transfer task from 72.9% to 99.2%, 31.3% to 99.2%, and 47.2% to 100.0% for 3 instruments with differing cable-related effects. In the contexts we studied, IVS attains the highest published success rates for automated surgical peg transfer and is significantly more reliable than previous techniques when instruments are changed. Supplementary material is available at https://tinyurl.com/ivs-icra.

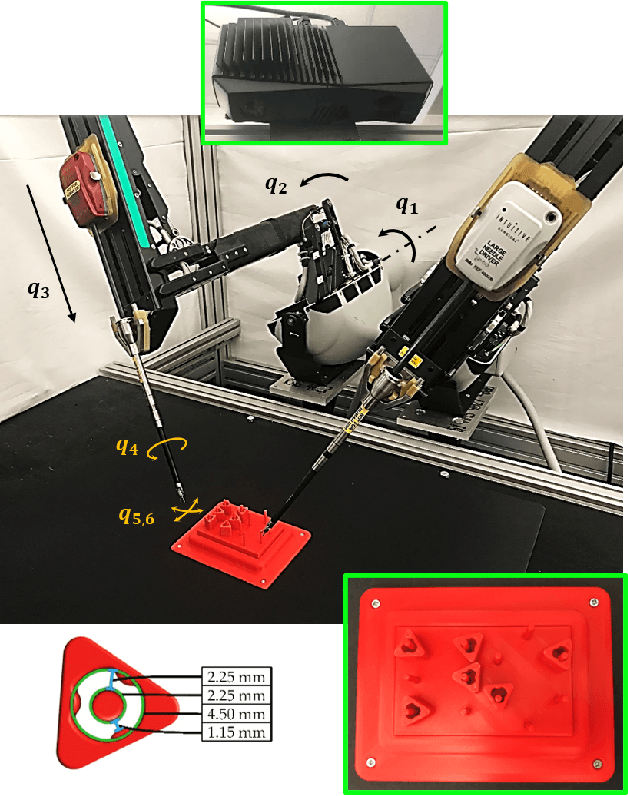

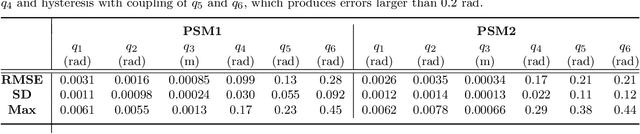

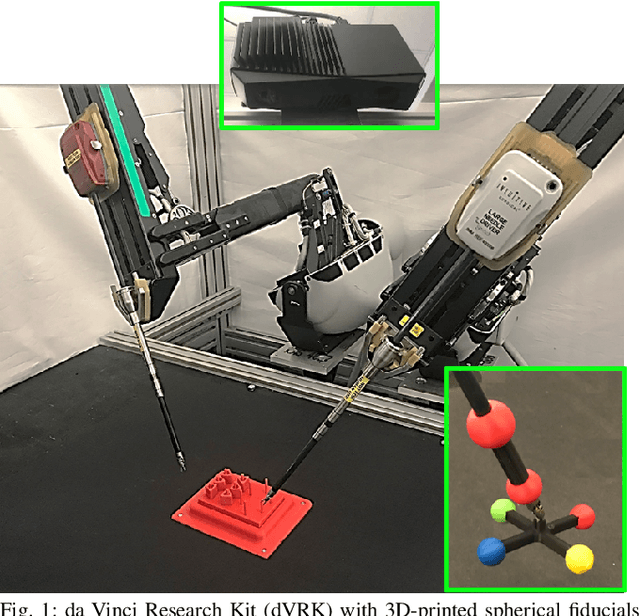

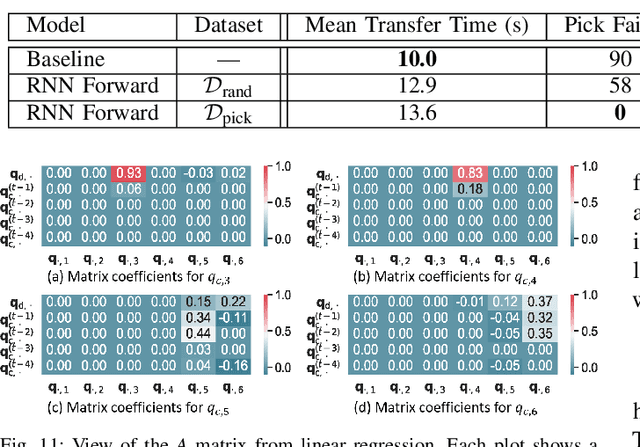

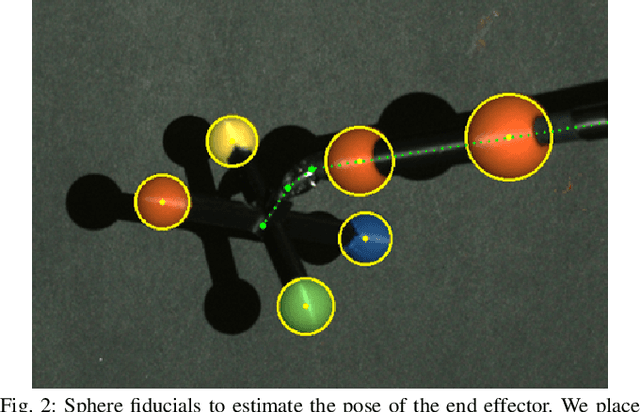

Efficiently Calibrating Cable-Driven Surgical Robots With RGBD Sensing, Temporal Windowing, and Linear and Recurrent Neural Network Compensation

Mar 19, 2020

Abstract: Automation of surgical subtasks using cable-driven robotic surgical assistants (RSAs) such as Intuitive Surgical's da Vinci Research Kit (dVRK) is challenging due to imprecision in control from cable-related effects such as backlash, stretch, and hysteresis. We propose a novel approach to efficiently calibrate a dVRK by placing a 3D-printed fiducial coordinate frame on the arm and end-effector that is tracked using RGBD sensing. To measure the coupling effects between joints and history-dependent effects, we analyze data from sampled trajectories and consider 13 modeling approaches using LSTM recurrent neural networks and linear models with varying temporal window length to provide corrective feedback. With the proposed method, data collection takes 31 minutes to produce 1800 samples and model training takes less than a minute. Results suggest that the resulting model can reduce the mean tracking error of the physical robot from 2.96 mm to 0.65 mm on a test set of reference trajectories. We evaluate the model by executing open-loop trajectories of the FLS peg transfer surgeon training task. Results suggest that the best approach increases the success rate from 39.4% to 96.7%, comparable to the performance of an expert surgical resident. Supplementary material, including 3D-printable models, is available at https://sites.google.com/berkeley.edu/surgical-calibration.

Applying Depth-Sensing to Automated Surgical Manipulation with a da Vinci Robot

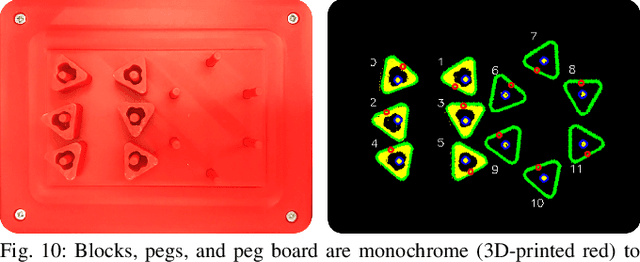

Feb 15, 2020

Abstract: Recent advances in depth-sensing have significantly increased accuracy, resolution, and frame rate, as shown in the 1920x1200 resolution and 13 frames per second Zivid RGBD camera. In this study, we explore the potential of depth sensing for efficient and reliable automation of surgical subtasks. We consider a monochrome (all red) version of the peg transfer task from the Fundamentals of Laparoscopic Surgery training suite implemented with the da Vinci Research Kit (dVRK). We use calibration techniques that allow the imprecise, cable-driven da Vinci to reduce error from 4-5 mm to 1-2 mm in the task space. We report experimental results for a handover-free version of the peg transfer task, performing 20 and 5 physical episodes with single- and bilateral-arm setups, respectively. Results over 236 and 49 total block transfer attempts for the single- and bilateral-arm peg transfer cases suggest that reliability can be attained with 86.9% and 78.0% for each individual block, with respective block transfer speeds of 10.02 and 5.72 seconds. Supplementary material is available at https://sites.google.com/view/peg-transfer.

DESK: A Robotic Activity Dataset for Dexterous Surgical Skills Transfer to Medical Robots

Mar 03, 2019

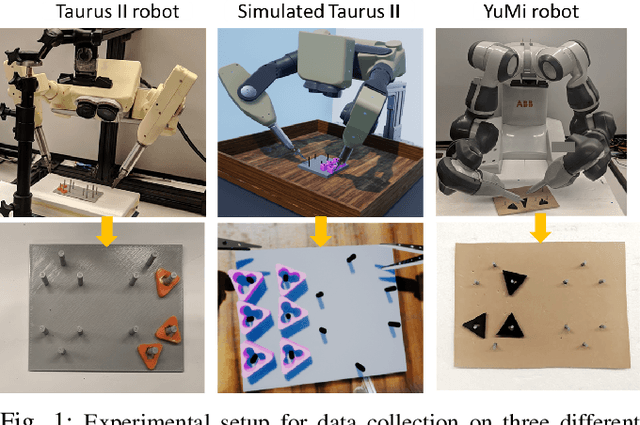

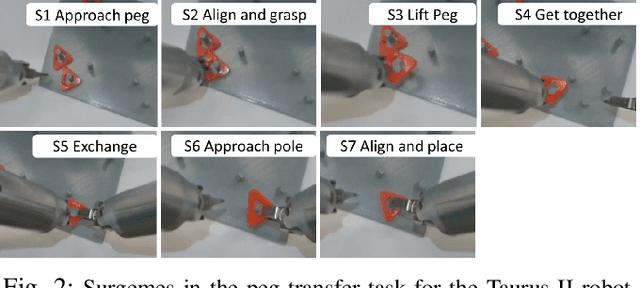

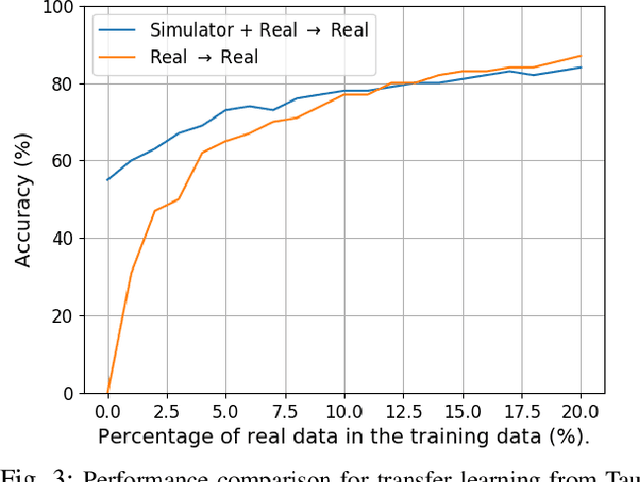

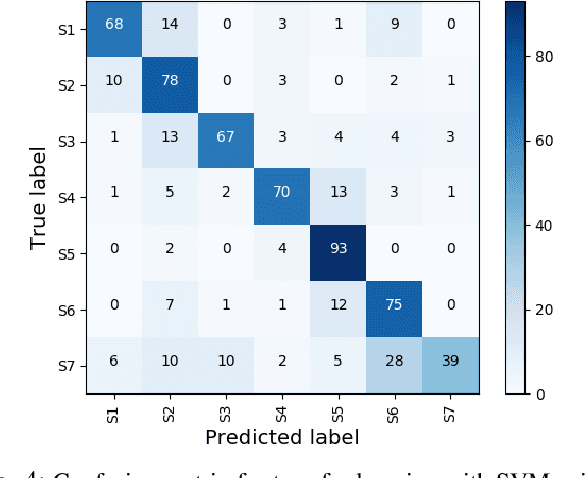

Abstract: Datasets are an essential component for training effective machine learning models. In particular, surgical robotic datasets have been key to many advances in semi-autonomous surgeries, skill assessment, and training. Simulated surgical environments can enhance the data collection process by making it faster, simpler, and cheaper than real systems. In addition, combining data from multiple robotic domains can provide rich and diverse training data for transfer learning algorithms. In this paper, we present the DESK (Dexterous Surgical Skill) dataset. It comprises a set of surgical robotic skills collected during a surgical training task using three robotic platforms: the Taurus II robot, the simulated Taurus II robot, and the YuMi robot. This dataset was used to test the idea of transferring knowledge across different domains (e.g., from the Taurus to the YuMi robot) for a surgical gesture classification task with seven gestures. We explored three different scenarios: 1) no transfer, 2) transfer from the simulated Taurus to the real Taurus, and 3) transfer from the simulated Taurus to the YuMi robot. We conducted extensive experiments with three supervised learning models and provided baselines in each of these scenarios. Results show that using simulation data during training enhances the performance on the real robot where limited real data is available. In particular, we obtained an accuracy of 55% on the real Taurus data using a model that is trained only on the simulator data. Furthermore, we achieved an accuracy improvement of 34% when 3% of the real data is added into the training process.
