Minho Hwang

Vibration-Assisted Hysteresis Mitigation for Achieving High Compensation Efficiency

Mar 04, 2025

Abstract:Tendon-sheath mechanisms (TSMs) are widely used in minimally invasive surgical (MIS) applications, but their inherent hysteresis, caused by friction, backlash, and tendon elongation, leads to significant tracking errors. Conventional modeling and compensation methods struggle with these nonlinearities and require extensive parameter tuning. To address this, we propose a vibration-assisted hysteresis compensation approach, where controlled vibrational motion is applied along the tendon's movement direction to mitigate friction and reduce dead zones. Experimental results demonstrate that the exerted vibration consistently reduces hysteresis across all tested frequencies, decreasing RMSE by up to 23.41% (from 2.2345 mm to 1.7113 mm) and improving correlation, leading to more accurate trajectory tracking. When combined with a Temporal Convolutional Network (TCN)-based compensation model, vibration further enhances performance, achieving an 85.2% reduction in MAE (from 1.334 mm to 0.1969 mm). Without vibration, the TCN-based approach still reduces MAE by 72.3% (from 1.334 mm to 0.370 mm) under the same parameter settings. These findings confirm that vibration effectively mitigates hysteresis, improving trajectory accuracy and enabling more efficient compensation models with fewer trainable parameters. This approach provides a scalable and practical solution for TSM-based robotic applications, particularly in MIS.
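The reported percentages can be cross-checked from the before/after error values quoted in the abstract. The sketch below is only an arithmetic check; the helper name `percent_reduction` is an illustrative choice, not something from the paper.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, expressed in percent."""
    return (before - after) / before * 100.0


# Error values in millimetres, copied from the abstract.
rmse_vibration = percent_reduction(2.2345, 1.7113)     # vibration only (RMSE)
mae_tcn_vibration = percent_reduction(1.334, 0.1969)   # TCN with vibration (MAE)
mae_tcn_only = percent_reduction(1.334, 0.370)         # TCN without vibration (MAE)

print(f"RMSE reduction with vibration:      {rmse_vibration:.2f}%")
print(f"MAE reduction, TCN with vibration:  {mae_tcn_vibration:.1f}%")
print(f"MAE reduction, TCN without:         {mae_tcn_only:.1f}%")
```

All three values agree with the figures stated in the abstract (23.41%, 85.2%, and 72.3%) after rounding.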

A Single Scale Doesn't Fit All: Adaptive Motion Scaling for Efficient and Precise Teleoperation

Mar 03, 2025

Abstract:Teleoperation is increasingly employed in environments where direct human access is difficult, such as hazardous exploration or surgical fields. However, if the motion scale factor (MSF) intended to compensate for workspace-size differences is set inappropriately, repeated clutching operations and reduced precision can significantly raise cognitive load. This paper presents a shared controller that dynamically applies the MSF based on the user's intended motion scale. Inspired by human motor skills, the leader arm trajectory is divided into coarse (fast, large-range movements) and fine (precise, small-range movements) motion, with three features extracted to train a fuzzy C-means (FCM) clustering model that probabilistically classifies the user's motion scale. Scaling the robot's motion accordingly reduces unnecessary repetition for large-scale movements and enables more precise control for fine operations. Incorporating recent trajectory data into model updates and offering user feedback for adjusting the MSF range and response speed allows mutual adaptation between user and system. In peg transfer experiments, compared to using a fixed single scale, the proposed approach demonstrated improved task efficiency (the number of clutching operations and the task completion time decreased by 38.46% and 11.96%, respectively), while NASA-TLX scores confirmed a meaningful reduction (58.01%) in cognitive load. This outcome suggests that a user-intent-based motion scale adjustment can effectively enhance both efficiency and precision in teleoperation.
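To illustrate how a fuzzy C-means model can classify a motion "probabilistically", the sketch below computes the standard FCM membership of one feature vector in two clusters. The abstract does not name the three features, the cluster centers, or how memberships map to an MSF, so the centers, the MSF values, and the membership-weighted blend in `blended_scale` are all assumptions made for illustration.

```python
import numpy as np


def fcm_memberships(x, centers, m=2.0):
    """Standard fuzzy C-means membership of sample `x` in each cluster.

    u_i = d_i^(-2/(m-1)) / sum_k d_k^(-2/(m-1)), where d_i is the Euclidean
    distance from `x` to center i and m > 1 is the fuzzifier.
    """
    d = np.linalg.norm(np.asarray(centers, dtype=float) - np.asarray(x, dtype=float), axis=1)
    if np.any(d == 0.0):
        # The sample coincides with a center: give it full membership there.
        u = (d == 0.0).astype(float)
        return u / u.sum()
    inv = d ** (-2.0 / (m - 1.0))
    return inv / inv.sum()


def blended_scale(memberships, scales):
    """Hypothetical mapping: membership-weighted average of per-cluster MSFs."""
    return float(np.dot(memberships, scales))


# Hypothetical cluster centers in a normalized 3-feature space.
centers = np.array([[0.8, 0.9, 0.7],    # "coarse" motion
                    [0.1, 0.1, 0.2]])   # "fine" motion
scales = np.array([1.0, 0.2])           # assumed MSF for coarse and fine motion

u = fcm_memberships([0.7, 0.8, 0.6], centers)
msf = blended_scale(u, scales)
print("memberships (coarse, fine):", u)
print("blended motion scale factor:", msf)
```

Because the memberships sum to one, the blended scale varies continuously between the fine and coarse values instead of switching abruptly, which is one plausible reason to prefer a fuzzy classifier over a hard one here.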

OFF-CLIP: Improving Normal Detection Confidence in Radiology CLIP with Simple Off-Diagonal Term Auto-Adjustment

Mar 03, 2025

Abstract:Contrastive Language-Image Pre-Training (CLIP) has enabled zero-shot classification in radiology, reducing reliance on manual annotations. However, conventional contrastive learning struggles with normal case detection due to its strict intra-sample alignment, which disrupts normal sample clustering and leads to high false positives (FPs) and false negatives (FNs). To address these issues, we propose OFF-CLIP, a contrastive learning refinement that improves normal detection by introducing an off-diagonal term loss to enhance normal sample clustering and applying sentence-level text filtering to mitigate FNs by removing misaligned normal statements from abnormal reports. OFF-CLIP can be applied to radiology CLIP models without requiring any architectural modifications. Experimental results show that OFF-CLIP significantly improves normal classification, achieving a 0.61 Area under the curve (AUC) increase on VinDr-CXR over CARZero, the state-of-the-art zero-shot classification baseline, while maintaining or improving abnormal classification performance. Additionally, OFF-CLIP enhances zero-shot grounding by improving pointing game accuracy, confirming better anomaly localization. These results demonstrate OFF-CLIP's effectiveness as a robust and efficient enhancement for medical vision-language models.

SAM: Semi-Active Mechanism for Extensible Continuum Manipulator and Real-time Hysteresis Compensation Control Algorithm

Jun 27, 2024

Abstract:Cable-Driven Continuum Manipulators (CDCMs) enable scar-free procedures via natural orifices and improve target lesion accessibility through curved paths. However, CDCMs face limitations in workspace and control accuracy due to non-linear cable effects causing hysteresis. This paper introduces an extensible CDCM with a Semi-active Mechanism (SAM) to expand the workspace via translational motion without additional mechanical elements or actuation. We collect a hysteresis dataset using 8 fiducial markers and RGBD sensing. Based on this dataset, we develop a real-time hysteresis compensation control algorithm using the trained Temporal Convolutional Network (TCN) with a 1 ms time latency, effectively estimating the manipulator's hysteresis behavior. Performance validation through random trajectory tracking tests and box pointing tasks shows the proposed controller significantly reduces hysteresis by up to 69.5% in joint space and approximately 26% in the box pointing task.

Optimizing Base Placement of Surgical Robot: Kinematics Data-Driven Approach by Analyzing Working Pattern

Feb 25, 2024

Abstract:In robot-assisted minimally invasive surgery (RAMIS), optimal placement of the surgical robot's base is crucial for successful surgery. Improper placement can hinder performance due to manipulator limitations and inaccessible workspaces. Traditionally, trained medical staff rely on experience for base placement, but this approach lacks objectivity. This paper proposes a novel method to determine the optimal base pose based on the individual surgeon's working pattern. The proposed method analyzes recorded end-effector poses using a machine-learning-based clustering technique to identify key positions and orientations preferred by the surgeon. To address joint-limit and singularity problems, we introduce two scoring metrics: a joint margin score and a manipulability score. We then train a multi-layer perceptron (MLP) regressor to predict the optimal base pose based on these scores. Evaluation in a simulated environment using the da Vinci Research Kit (dVRK) showed unique base pose-score maps for four volunteers, highlighting the individuality of working patterns. Tests on the base poses identified by the proposed method confirmed that their score, as defined in this work, is approximately 28.2% higher than when the robots were placed randomly. This emphasizes the need for operator-specific optimization in RAMIS base placement.
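The abstract does not define its two scores, so the sketch below only illustrates the general idea with commonly used stand-ins: Yoshikawa's manipulability measure, sqrt(det(J Jᵀ)), evaluated on a planar two-link arm, and a normalized distance to the nearest joint limit. The arm model, link lengths, and both function definitions are assumptions, not the paper's formulation.

```python
import numpy as np


def planar_2r_jacobian(q1, q2, l1=1.0, l2=1.0):
    """Position Jacobian of a planar two-link arm (illustrative robot model)."""
    s1, c1 = np.sin(q1), np.cos(q1)
    s12, c12 = np.sin(q1 + q2), np.cos(q1 + q2)
    return np.array([[-l1 * s1 - l2 * s12, -l2 * s12],
                     [ l1 * c1 + l2 * c12,  l2 * c12]])


def manipulability(J):
    """Yoshikawa measure sqrt(det(J J^T)); approaches 0 near a singularity."""
    det = np.linalg.det(J @ J.T)
    return float(np.sqrt(max(det, 0.0)))  # clamp tiny negative round-off


def joint_margin(q, q_min, q_max):
    """Assumed margin score: 1 at mid-range, 0 at a joint limit (worst joint)."""
    q, q_min, q_max = (np.asarray(a, dtype=float) for a in (q, q_min, q_max))
    half_range = (q_max - q_min) / 2.0
    margin = np.minimum(q - q_min, q_max - q) / half_range
    return float(np.clip(margin.min(), 0.0, 1.0))


# Elbow bent at 90 degrees (well conditioned) versus fully stretched (singular).
w_bent = manipulability(planar_2r_jacobian(0.0, np.pi / 2))
w_straight = manipulability(planar_2r_jacobian(0.0, 0.0))
print("manipulability, bent elbow:", w_bent)
print("manipulability, straight arm:", w_straight)
print("joint margin:", joint_margin([0.0, 1.0], [-2.0, 0.0], [2.0, 2.0]))
```

For this arm the measure reduces to |l1 · l2 · sin(q2)|, so it is largest with the elbow at a right angle and vanishes when the arm is stretched out, which is the behavior a singularity-avoiding base placement would try to exploit.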

Hysteresis Compensation of Flexible Continuum Manipulator using RGBD Sensing and Temporal Convolutional Network

Feb 17, 2024

Abstract:Flexible continuum manipulators are valued for minimally invasive surgery, offering access to confined spaces through nonlinear paths. However, cable-driven manipulators face control difficulties due to hysteresis from cabling effects such as friction, elongation, and coupling. These effects are difficult to model due to nonlinearity, and the difficulties become even more evident when dealing with long and multi-segmented manipulators. This paper proposes a data-driven approach based on deep neural networks (DNNs) to capture these nonlinear and previous-state-dependent characteristics of cable actuation. We design customized fiducial markers to collect physical joint configurations as a dataset. Results of a study comparing the learning performance of four DNN models show that the Temporal Convolutional Network (TCN) demonstrates the highest predictive capability. Leveraging trained TCNs, we build a control algorithm to compensate for hysteresis. Tracking tests in task space using unseen trajectories show that the best controller reduces the mean position and orientation error by 61.39% (from 13.7 mm to 5.29 mm) and 64.04% (from 31.17° to 11.21°), respectively.
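A TCN captures dependence on previous states through causal, dilated 1D convolutions: each output sample depends only on the current and earlier inputs. The sketch below shows that building block in plain NumPy. It is a generic illustration, not the architecture used in the paper, whose kernel sizes, dilations, and layer counts are not given in the abstract.

```python
import numpy as np


def causal_dilated_conv1d(x, w, dilation=1):
    """y[t] = sum_k w[k] * x[t - k * dilation], with x treated as 0 before t = 0."""
    x = np.asarray(x, dtype=float)
    w = np.asarray(w, dtype=float)
    pad = (len(w) - 1) * dilation
    xp = np.concatenate([np.zeros(pad), x])   # left-pad only, so no future leakage
    y = np.zeros_like(x)
    for k, wk in enumerate(w):
        start = pad - k * dilation            # shifts the input back by k * dilation
        y += wk * xp[start:start + len(x)]
    return y


def receptive_field(kernel_size, dilations):
    """Past samples seen by a stack with one causal convolution per dilation."""
    return 1 + (kernel_size - 1) * sum(dilations)


command = np.array([1.0, 2.0, 3.0, 4.0])      # e.g. a short joint-command history
y = causal_dilated_conv1d(command, w=[1.0, 1.0], dilation=2)
print(y)                                       # each y[t] is x[t] + x[t - 2]
print(receptive_field(kernel_size=3, dilations=[1, 2, 4]))
```

Stacking layers with growing dilations widens the window of command history that influences each prediction, which is what lets such a model represent history-dependent effects like hysteresis without recurrence.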

Learning to Localize, Grasp, and Hand Over Unmodified Surgical Needles

Dec 08, 2021

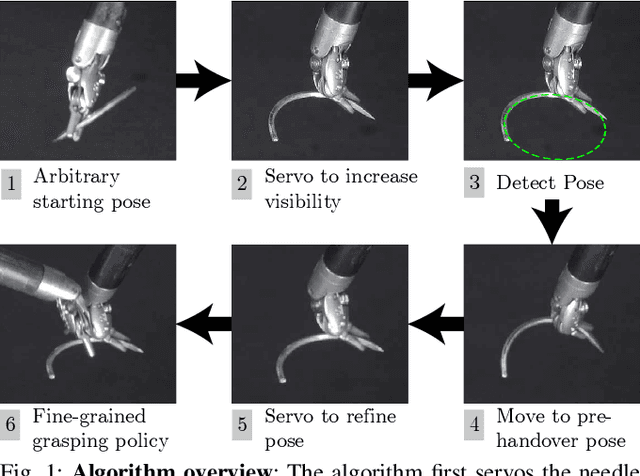

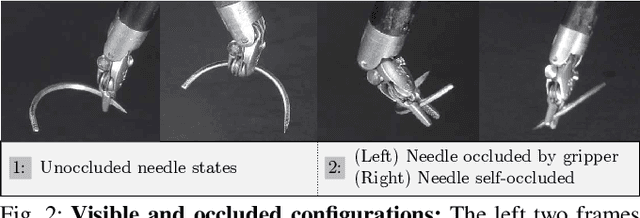

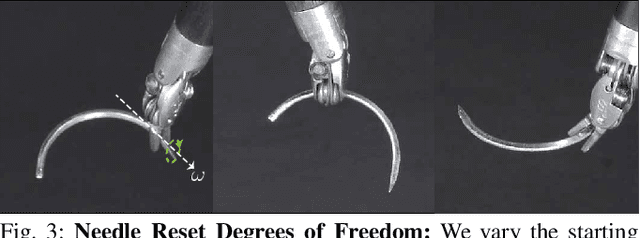

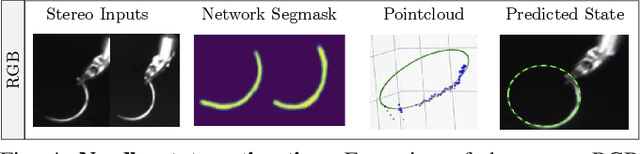

Abstract:Robotic Surgical Assistants (RSAs) are commonly used by expert surgeons to perform minimally invasive surgeries. However, long procedures filled with tedious and repetitive tasks such as suturing can lead to surgeon fatigue, motivating the automation of suturing. As visual tracking of a thin reflective needle is extremely challenging, prior work has modified the needle with nonreflective contrasting paint. As a step towards automation of a suturing subtask without modifying the needle, we propose HOUSTON: Handoff of Unmodified, Surgical, Tool-Obstructed Needles, a problem and algorithm that uses a learned active sensing policy with a stereo camera to localize and align the needle into a visible and accessible pose for the other arm. To compensate for robot positioning and needle perception errors, the algorithm then executes a high-precision grasping motion that uses multiple cameras. In physical experiments using the da Vinci Research Kit (dVRK), HOUSTON successfully passes unmodified surgical needles with a success rate of 96.7% and is able to perform handover sequentially between the arms 32.4 times on average before failure. On needles unseen in training, HOUSTON achieves a success rate of 75% to 92.9%. To our knowledge, this work is the first to study handover of unmodified surgical needles. See https://tinyurl.com/houston-surgery for additional materials.

Untangling Dense Non-Planar Knots by Learning Manipulation Features and Recovery Policies

Jun 29, 2021

Abstract:Robot manipulation for untangling 1D deformable structures such as ropes, cables, and wires is challenging due to their infinite dimensional configuration space, complex dynamics, and tendency to self-occlude. Analytical controllers often fail in the presence of dense configurations, due to the difficulty of grasping between adjacent cable segments. We present two algorithms that enhance robust cable untangling, LOKI and SPiDERMan, which operate alongside HULK, a high-level planner from prior work. LOKI uses a learned model of manipulation features to refine a coarse grasp keypoint prediction to a precise, optimized location and orientation, while SPiDERMan uses a learned model to sense task progress and apply recovery actions. We evaluate these algorithms in physical cable untangling experiments with 336 knots and over 1500 actions on real cables using the da Vinci surgical robot. We find that the combination of HULK, LOKI, and SPiDERMan is able to untangle dense overhand, figure-eight, double-overhand, square, bowline, granny, stevedore, and triple-overhand knots. The composition of these methods successfully untangles a cable from a dense initial configuration in 68.3% of 60 physical experiments and achieves 50% higher success rates than baselines from prior work. Supplementary material, code, and videos can be found at https://tinyurl.com/rssuntangling.

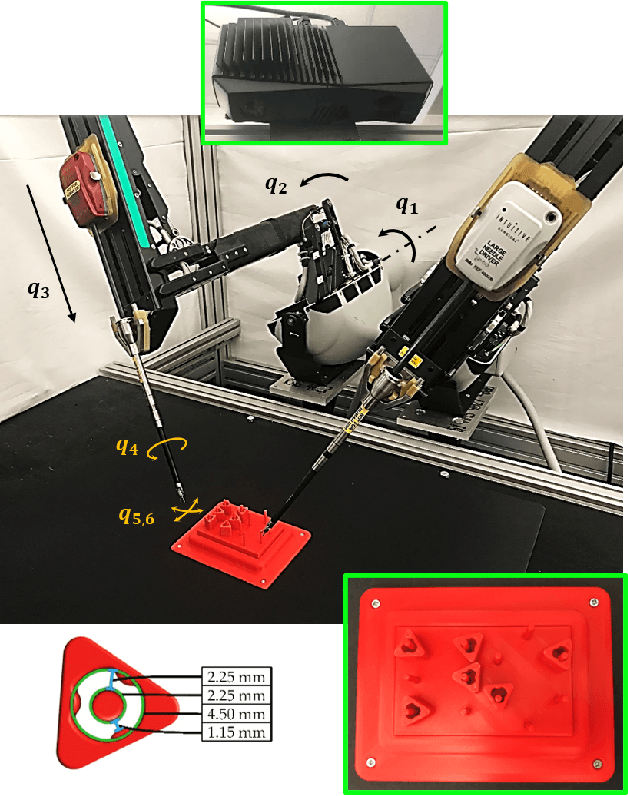

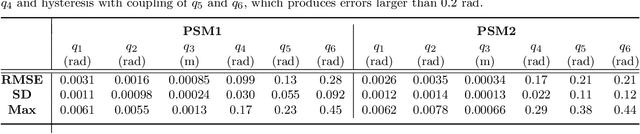

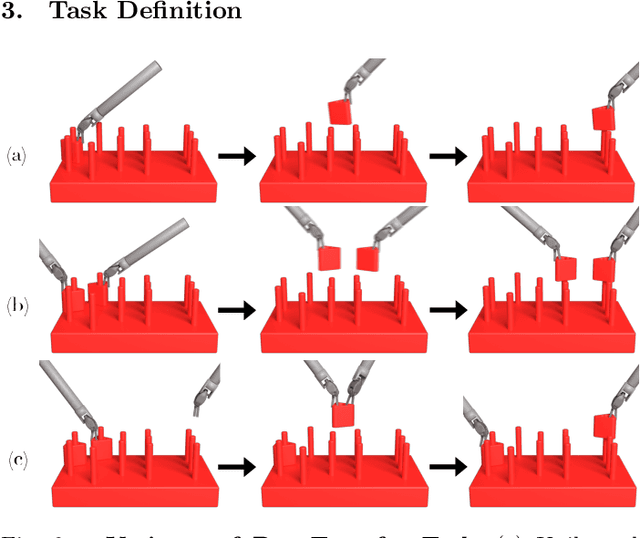

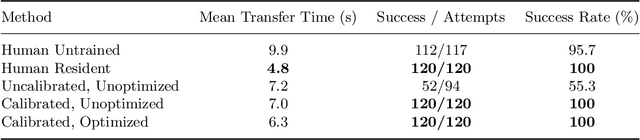

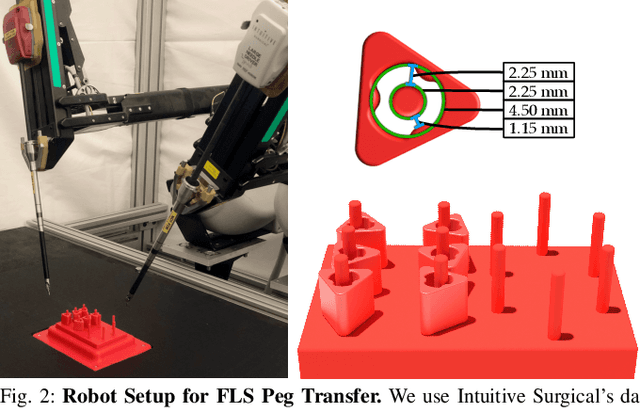

Superhuman Surgical Peg Transfer Using Depth-Sensing and Deep Recurrent Neural Networks

Dec 24, 2020

Abstract:We consider the automation of the well-known peg-transfer task from the Fundamentals of Laparoscopic Surgery (FLS). While human surgeons teleoperate robots to perform this task with great dexterity, it remains challenging to automate. We present an approach that leverages emerging innovations in depth sensing, deep learning, and Pieper's method for computing inverse kinematics with time-minimized joint motion. We use the da Vinci Research Kit (dVRK) surgical robot with a Zivid depth sensor, and automate three variants of the peg-transfer task: unilateral, bilateral without handovers, and bilateral with handovers. We use 3D-printed fiducial markers with depth sensing and a deep recurrent neural network to improve the precision of the dVRK to less than 1 mm. We report experimental results for 1800 block transfer trials. Results suggest that the fully automated system can outperform an experienced human surgical resident, who performs far better than untrained humans, in terms of both speed and success rate. For the most difficult variant of peg transfer (with handovers), we compare the performance of the surgical resident with that of the automated system over 120 trials each. The experienced surgical resident achieves a success rate of 93.2% with a mean transfer time of 8.6 seconds. The automated system achieves a success rate of 94.1% with a mean transfer time of 8.1 seconds. To our knowledge, this is the first fully automated system to achieve "superhuman" performance in both speed and success on peg transfer. Supplementary material is available at https://sites.google.com/view/surgicalpegtransfer.

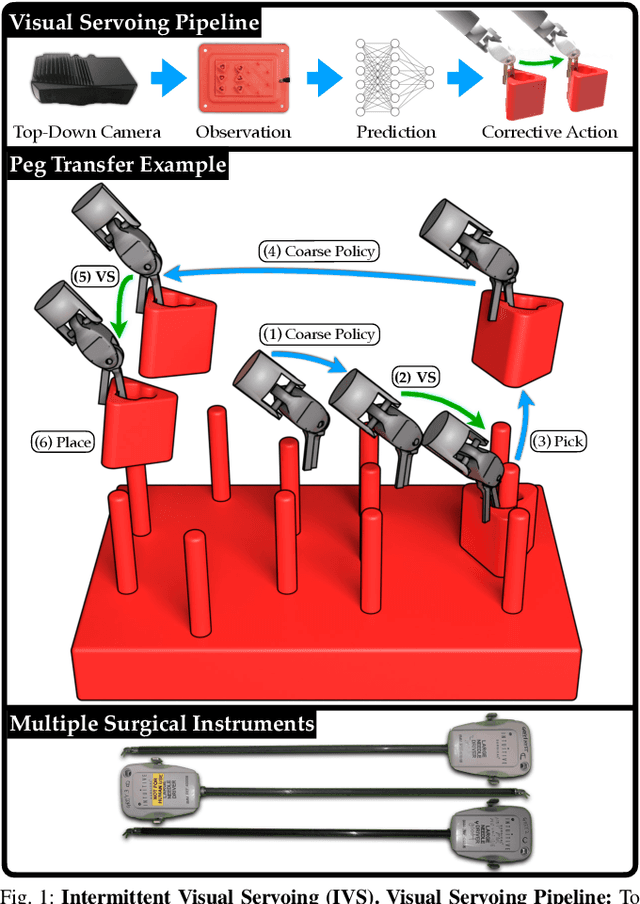

Intermittent Visual Servoing: Efficiently Learning Policies Robust to Instrument Changes for High-precision Surgical Manipulation

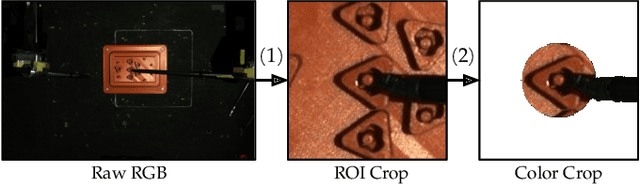

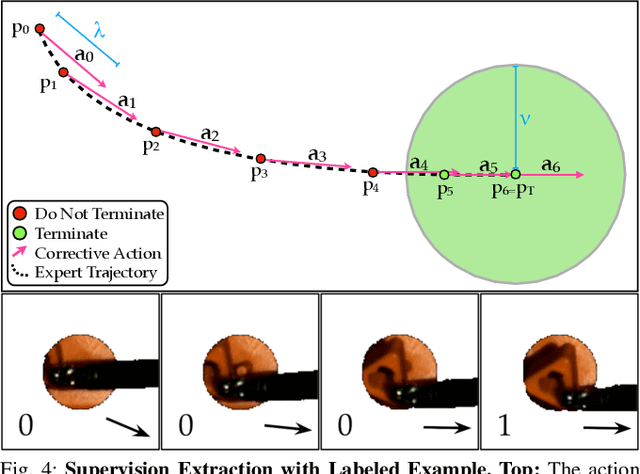

Nov 12, 2020

Abstract:Automation of surgical tasks using cable-driven robots is challenging due to backlash, hysteresis, and cable tension, and these issues are exacerbated as surgical instruments must often be changed during an operation. In this work, we propose a framework for automation of high-precision surgical tasks by learning sample efficient, accurate, closed-loop policies that operate directly on visual feedback instead of robot encoder estimates. This framework, which we call intermittent visual servoing (IVS), intermittently switches to a learned visual servo policy for high-precision segments of repetitive surgical tasks while relying on a coarse open-loop policy for the segments where precision is not necessary. To compensate for cable-related effects, we apply imitation learning to rapidly train a policy that maps images of the workspace and instrument from a top-down RGB camera to small corrective motions. We train the policy using only 180 human demonstrations that are roughly 2 seconds each. Results on a da Vinci Research Kit suggest that combining the coarse policy with half a second of corrections from the learned policy during each high-precision segment improves the success rate on the Fundamentals of Laparoscopic Surgery peg transfer task from 72.9% to 99.2%, 31.3% to 99.2%, and 47.2% to 100.0% for 3 instruments with differing cable-related effects. In the contexts we studied, IVS attains the highest published success rates for automated surgical peg transfer and is significantly more reliable than previous techniques when instruments are changed. Supplementary material is available at https://tinyurl.com/ivs-icra.
