Donghwan Lee

Periodic Regularized Q-Learning

Feb 03, 2026

Abstract: In reinforcement learning (RL), Q-learning is a fundamental algorithm whose convergence is guaranteed in the tabular setting. However, this convergence guarantee does not hold under linear function approximation. To overcome this limitation, a significant line of research has introduced regularization techniques to ensure stable convergence under function approximation. In this work, we propose a new algorithm, periodic regularized Q-learning (PRQ). We first introduce regularization at the level of the projection operator and explicitly construct a regularized projected value iteration (RP-VI), subsequently extending it to a sample-based RL algorithm. By appropriately regularizing the projection operator, the resulting projected value iteration becomes a contraction. By extending this regularized projection into the stochastic setting, we establish the PRQ algorithm and provide a rigorous theoretical analysis that proves finite-time convergence guarantees for PRQ under linear function approximation.

Regularized Gradient Temporal-Difference Learning

Jan 28, 2026

Abstract: Gradient temporal-difference (GTD) learning algorithms are widely used for off-policy policy evaluation with function approximation. However, existing convergence analyses rely on the restrictive assumption that the so-called feature interaction matrix (FIM) is nonsingular. In practice, the FIM can become singular and lead to instability or degraded performance. In this paper, we propose a regularized optimization objective by reformulating the mean-square projected Bellman error (MSPBE) minimization. This formulation naturally yields a regularized GTD algorithm, referred to as R-GTD, which guarantees convergence to a unique solution even when the FIM is singular. We establish theoretical convergence guarantees and explicit error bounds for the proposed method, and validate its effectiveness through empirical experiments.

Analysis of Control Bellman Residual Minimization for Markov Decision Problem

Jan 26, 2026

Abstract: Markov decision problems are most commonly solved via dynamic programming. Another approach is Bellman residual minimization, which directly minimizes the squared Bellman residual objective function. However, compared to dynamic programming, this approach has received relatively little attention, mainly because it is often less efficient in practice and can be more difficult to extend to model-free settings such as reinforcement learning. Nonetheless, Bellman residual minimization has several advantages that make it worth investigating, such as more stable convergence with function approximation for value functions. While Bellman residual methods for policy evaluation have been widely studied, methods for policy optimization (control tasks) have been scarcely explored. In this paper, we establish foundational results for control Bellman residual minimization for policy optimization.

MATANet: A Multi-context Attention and Taxonomy-Aware Network for Fine-Grained Underwater Recognition of Marine Species

Jan 07, 2026

Abstract: Fine-grained classification of marine animals supports ecology, biodiversity and habitat conservation, and evidence-based policy-making. However, existing methods often overlook contextual interactions from the surrounding environment and insufficiently incorporate the hierarchical structure of marine biological taxonomy. To address these challenges, we propose MATANet (Multi-context Attention and Taxonomy-Aware Network), a novel model designed for fine-grained marine species classification. MATANet mimics expert strategies by using taxonomy and environmental context to interpret ambiguous features of underwater animals. It consists of two key components: a Multi-Context Environmental Attention Module (MCEAM), which learns relationships between regions of interest (ROIs) and their surrounding environments, and a Hierarchical Separation-Induced Learning Module (HSLM), which encodes taxonomic hierarchy into the feature space. MATANet combines instance and environmental features with taxonomic structure to enhance fine-grained classification. Experiments on the FathomNet2025, FAIR1M, and LifeCLEF2015-Fish datasets demonstrate state-of-the-art performance. The source code is available at: https://github.com/dhlee-work/fathomnet-cvpr2025-ssl

EraseLoRA: MLLM-Driven Foreground Exclusion and Background Subtype Aggregation for Dataset-Free Object Removal

Dec 25, 2025

Abstract: Object removal differs from common inpainting, since it must prevent the masked target from reappearing and reconstruct the occluded background with structural and contextual fidelity, rather than merely filling a hole plausibly. Recent dataset-free approaches that redirect self-attention inside the mask fail in two ways: non-target foregrounds are often misinterpreted as background, which regenerates unwanted objects, and direct attention manipulation disrupts fine details and hinders coherent integration of background cues. We propose EraseLoRA, a novel dataset-free framework that replaces attention surgery with background-aware reasoning and test-time adaptation. First, Background-aware Foreground Exclusion (BFE) uses a multimodal large language model to separate target foreground, non-target foregrounds, and clean background from a single image-mask pair without paired supervision, producing reliable background cues while excluding distractors. Second, Background-aware Reconstruction with Subtype Aggregation (BRSA) performs test-time optimization that treats inferred background subtypes as complementary pieces and enforces their consistent integration through reconstruction and alignment objectives, preserving local detail and global structure without explicit attention intervention. We validate EraseLoRA as a plug-in to pretrained diffusion models and across benchmarks for object removal, demonstrating consistent improvements over dataset-free baselines and competitive results against dataset-driven methods. The code will be made available upon publication.

Adaptive Policy Backbone via Shared Network

Sep 26, 2025

Abstract: Reinforcement learning (RL) has achieved impressive results across domains, yet learning an optimal policy typically requires extensive interaction data, limiting practical deployment. A common remedy is to leverage priors, such as pre-collected datasets or reference policies, but their utility degrades under task mismatch between training and deployment. While prior work has sought to address this mismatch, it has largely been restricted to in-distribution settings. To address this challenge, we propose Adaptive Policy Backbone (APB), a meta-transfer RL method that inserts lightweight linear layers before and after a shared backbone, thereby enabling parameter-efficient fine-tuning (PEFT) while preserving prior knowledge during adaptation. Our results show that APB improves sample efficiency over standard RL and adapts to out-of-distribution (OOD) tasks where existing meta-RL baselines typically fail.

Pretraining a Shared Q-Network for Data-Efficient Offline Reinforcement Learning

May 09, 2025

Abstract: Offline reinforcement learning (RL) aims to learn a policy from a static dataset without further interactions with the environment. Collecting sufficiently large datasets for offline RL is exhausting, since this data collection requires a colossal number of interactions with environments and becomes tricky when the interaction with the environment is restricted. Hence, how an agent learns the best policy with a minimal static dataset is a crucial issue in offline RL, similar to the sample efficiency problem in online RL. In this paper, we propose a simple yet effective plug-and-play pretraining method to initialize a feature of a $Q$-network to enhance data efficiency in offline RL. Specifically, we introduce a shared $Q$-network structure that outputs predictions of the next state and $Q$-value. We pretrain the shared $Q$-network through a supervised regression task that predicts the next state, and then train the shared $Q$-network using diverse offline RL methods. Through extensive experiments, we empirically demonstrate that our method enhances the performance of existing popular offline RL methods on the D4RL, Robomimic and V-D4RL benchmarks. Furthermore, we show that our method significantly boosts data-efficient offline RL across various data qualities and data distributions through D4RL and ExoRL benchmarks. Notably, our method adapted with only 10% of the dataset outperforms standard algorithms even with full datasets.

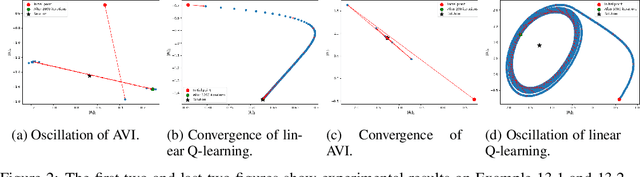

Understanding the theoretical properties of projected Bellman equation, linear Q-learning, and approximate value iteration

Apr 15, 2025

Abstract: In this paper, we study the theoretical properties of the projected Bellman equation (PBE) and two algorithms to solve this equation: linear Q-learning and approximate value iteration (AVI). We consider two sufficient conditions for the existence of a solution to the PBE: the strictly negatively row dominating diagonal (SNRDD) assumption and a condition motivated by the convergence of AVI. The SNRDD assumption also ensures the convergence of linear Q-learning, and its relationship with the convergence of AVI is examined. Lastly, several interesting observations on the solution of the PBE are provided when using an $\epsilon$-greedy policy.
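
For orientation, the projected Bellman equation for Q-functions under linear function approximation is commonly written as below. This is the standard textbook form and not necessarily the paper's exact notation: the symbols $\Phi$ (feature matrix), $D$ (diagonal matrix of a state-action distribution), $\Gamma$ (weighted projection onto the span of $\Phi$), $R$, $P$, and $\gamma$ are assumptions introduced here for illustration.

```latex
\begin{align*}
  \Phi\theta &= \Gamma\, T(\Phi\theta),
  & \Gamma &= \Phi\,(\Phi^{\top} D\, \Phi)^{-1} \Phi^{\top} D, \\
  (TQ)(s,a) &= R(s,a) + \gamma \sum_{s'} P(s' \mid s,a)\, \max_{a'} Q(s',a').
\end{align*}
```

Because $T$ is a contraction in the max norm while $\Gamma$ is non-expansive only in the $D$-weighted norm, the composition $\Gamma T$ need not be a contraction, which is why existence of a solution requires additional conditions.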

Deep Q-Learning with Gradient Target Tracking

Mar 20, 2025

Abstract: This paper introduces Q-learning with gradient target tracking, a novel reinforcement learning framework that provides a learned continuous target update mechanism as an alternative to the conventional hard update paradigm. In the standard deep Q-network (DQN), the target network is a copy of the online network's weights, held fixed for a number of iterations before being periodically replaced via a hard update. While this stabilizes training by providing consistent targets, it introduces a new challenge: the hard update period must be carefully tuned to achieve optimal performance. To address this issue, we propose two gradient-based target update methods: DQN with asymmetric gradient target tracking (AGT2-DQN) and DQN with symmetric gradient target tracking (SGT2-DQN). These methods replace the conventional hard target updates with continuous and structured updates using gradient descent, which effectively eliminates the need for manual tuning. We provide a theoretical analysis proving the convergence of these methods in tabular settings. Additionally, empirical evaluations demonstrate their advantages over standard DQN baselines, suggesting that gradient-based target updates can serve as an effective alternative to conventional target update mechanisms in Q-learning.
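
The contrast between a periodic hard update and a continuous gradient-based target update can be illustrated with a minimal sketch. This is not the paper's AGT2-DQN or SGT2-DQN update rule: the function names, the fixed online weights, and the tracking loss $\tfrac{1}{2}\lVert \theta_{\text{target}} - \theta_{\text{online}} \rVert^2$ are all assumptions chosen only to show the general idea.

```python
def hard_update(target, online, step, period):
    """Conventional DQN-style update: copy the online weights every `period` steps."""
    if step % period == 0:
        return list(online)
    return target


def gradient_tracking_update(target, online, lr):
    """Hypothetical tracking step: one gradient-descent step on
    0.5 * ||target - online||^2 with respect to the target weights."""
    return [t - lr * (t - o) for t, o in zip(target, online)]


# Toy weights; in practice the online weights change during training.
online = [1.0, -2.0, 0.5]
hard_target = [0.0, 0.0, 0.0]
tracked_target = [0.0, 0.0, 0.0]

for step in range(1, 101):
    # The hard target jumps to the online weights only at steps 50 and 100.
    hard_target = hard_update(hard_target, online, step, period=50)
    # The tracked target moves a little toward the online weights every step.
    tracked_target = gradient_tracking_update(tracked_target, online, lr=0.1)
```

In this sketch the hard target depends on the tunable `period`, while the tracked target approaches the online weights smoothly at a rate set by the step size `lr`.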

OFF-CLIP: Improving Normal Detection Confidence in Radiology CLIP with Simple Off-Diagonal Term Auto-Adjustment

Mar 03, 2025

Abstract: Contrastive Language-Image Pre-Training (CLIP) has enabled zero-shot classification in radiology, reducing reliance on manual annotations. However, conventional contrastive learning struggles with normal case detection due to its strict intra-sample alignment, which disrupts normal sample clustering and leads to high false positives (FPs) and false negatives (FNs). To address these issues, we propose OFF-CLIP, a contrastive learning refinement that improves normal detection by introducing an off-diagonal term loss to enhance normal sample clustering and applying sentence-level text filtering to mitigate FNs by removing misaligned normal statements from abnormal reports. OFF-CLIP can be applied to radiology CLIP models without requiring any architectural modifications. Experimental results show that OFF-CLIP significantly improves normal classification, achieving a 0.61 increase in area under the curve (AUC) on VinDr-CXR over CARZero, the state-of-the-art zero-shot classification baseline, while maintaining or improving abnormal classification performance. Additionally, OFF-CLIP enhances zero-shot grounding by improving pointing game accuracy, confirming better anomaly localization. These results demonstrate OFF-CLIP's effectiveness as a robust and efficient enhancement for medical vision-language models.
