Shunli Wang

Center of Ultra-precision Optoelectronic Instrument engineering, Harbin Institute of Technology, Key Lab of Ultra-precision Intelligent Instrumentation, Harbin Institute of Technology

Toward Robust Incomplete Multimodal Sentiment Analysis via Hierarchical Representation Learning

Nov 05, 2024

Abstract: Multimodal Sentiment Analysis (MSA) is an important research area that aims to understand and recognize human sentiment through multiple modalities. The complementary information provided by multimodal fusion promotes better sentiment analysis compared to utilizing only a single modality. Nevertheless, in real-world applications, many unavoidable factors may lead to uncertain missing modalities, thus hindering the effectiveness of multimodal modeling and degrading the model's performance. To this end, we propose a Hierarchical Representation Learning Framework (HRLF) for the MSA task under uncertain missing modalities. Specifically, we propose a fine-grained representation factorization module that sufficiently extracts valuable sentiment information by factorizing each modality into sentiment-relevant and modality-specific representations through crossmodal translation and sentiment semantic reconstruction. Moreover, a hierarchical mutual information maximization mechanism is introduced to incrementally maximize the mutual information between multi-scale representations to align and reconstruct the high-level semantics in the representations. Finally, we propose a hierarchical adversarial learning mechanism that further aligns and adapts the latent distribution of sentiment-relevant representations to produce robust joint multimodal representations. Comprehensive experiments on three datasets demonstrate that HRLF significantly improves MSA performance under uncertain missing-modality cases.

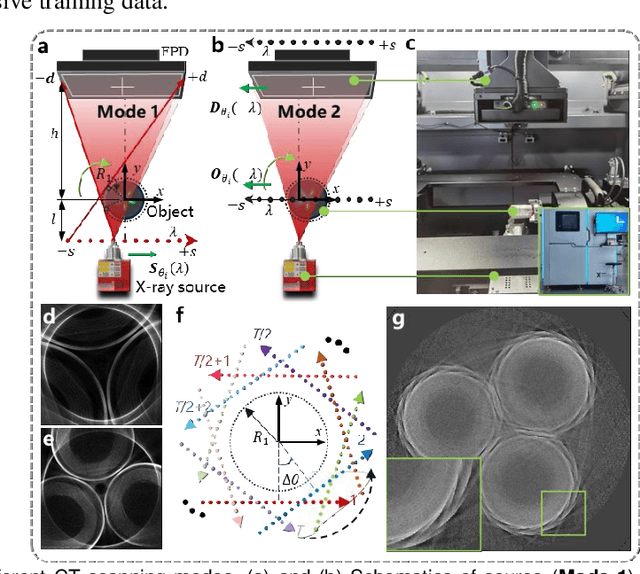

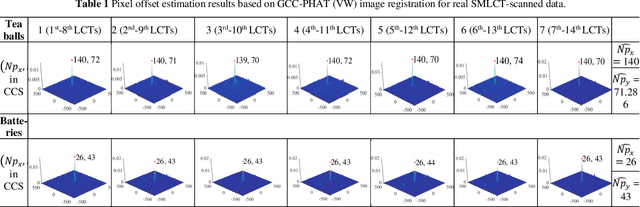

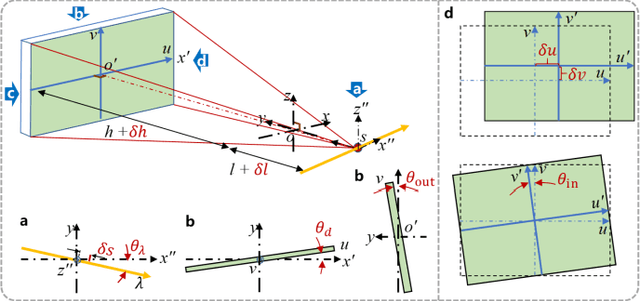

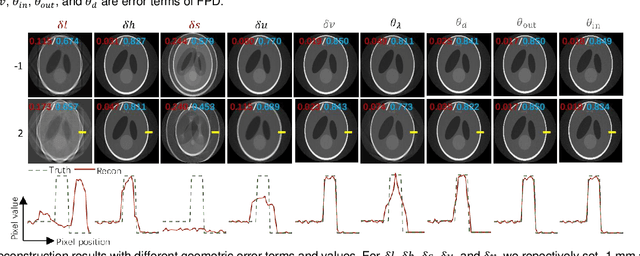

Geometric Artifact Correction for Symmetric Multi-Linear Trajectory CT: Theory, Method, and Generalization

Aug 27, 2024

Abstract: To extend the CT field-of-view for non-destructive testing, Symmetric Multi-Linear trajectory Computed Tomography (SMLCT) has been developed as a successful example of non-standard CT scanning modes. However, inevitable geometric errors can cause severe artifacts in the reconstructed images. The existing calibration method for SMLCT is both crude and inefficient. It involves reconstructing hundreds of images by exhaustively substituting each potential error, and then manually identifying the images with the fewest geometric artifacts to estimate the final geometric errors for calibration. In this paper, we comprehensively and efficiently address the challenging geometric artifacts in SMLCT, and the corresponding work mainly involves theory, method, and generalization. In particular, after identifying sensitive parameters and conducting a theoretical analysis of geometric artifacts, we summarize several key properties relating sensitive geometric parameters to artifact characteristics. Then, we further construct mathematical relationships that relate sensitive geometric errors to the pixel offsets of reconstructed images with artifact characteristics. To accurately extract the pixel offsets, we adapt the Generalized Cross-Correlation with Phase Transform (GCC-PHAT) algorithm, commonly used in sound processing, to our image registration task for each paired symmetric LCT. This adaptation leads to the design of a highly efficient rigid translation registration method. Simulation and physical experiments have validated the excellent performance of this work. Additionally, our results demonstrate significant generalization to common rotated CT and a variant of SMLCT.
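As a rough illustration of the GCC-PHAT idea mentioned in this abstract, the sketch below estimates an integer translation between two one-dimensional intensity profiles by whitening their cross-power spectrum and locating the correlation peak. It is a minimal sketch under stated assumptions, not the authors' registration method: the names `dft` and `gcc_phat_shift` are hypothetical, the shift is assumed to be circular and integer-valued, and a naive transform is used so that the example needs only the standard library.

```python
import cmath


def dft(values, inverse=False):
    """Naive O(n^2) discrete Fourier transform; adequate for short demo signals."""
    n = len(values)
    sign = 1 if inverse else -1
    result = []
    for k in range(n):
        total = sum(v * cmath.exp(sign * 2j * cmath.pi * k * t / n)
                    for t, v in enumerate(values))
        result.append(total / n if inverse else total)
    return result


def gcc_phat_shift(reference, moved, eps=1e-12):
    """Estimate the integer d such that moved[i] == reference[(i - d) % n].

    Hypothetical helper: assumes a circular, integer shift between the profiles.
    """
    f_ref = dft(reference)
    f_mov = dft(moved)
    # Cross-power spectrum of the two signals.
    cross = [m * r.conjugate() for m, r in zip(f_mov, f_ref)]
    # PHAT weighting: discard magnitude and keep only the phase.
    whitened = [c / (abs(c) + eps) for c in cross]
    # Back in the spatial domain the result is close to a delta at the shift.
    corr = [v.real for v in dft(whitened, inverse=True)]
    n = len(corr)
    peak = max(range(n), key=corr.__getitem__)
    # Map peaks in the upper half of the range to negative shifts.
    return peak if peak <= n // 2 else peak - n
```

For two-dimensional images the same weighting would be applied to a 2D transform (for example `numpy.fft.fft2` and `numpy.fft.ifft2`), with the peak location giving the row and column offsets.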

MaskBEV: Towards A Unified Framework for BEV Detection and Map Segmentation

Aug 17, 2024

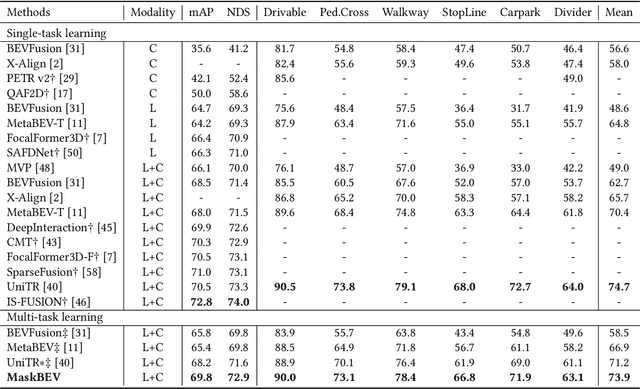

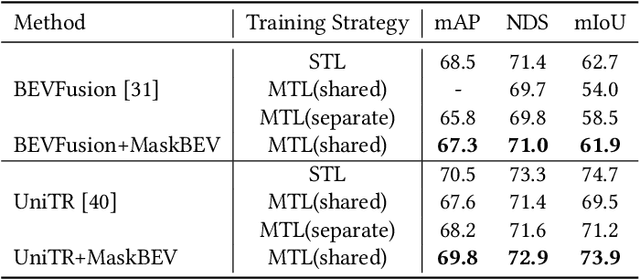

Abstract: Accurate and robust multimodal multi-task perception is crucial for modern autonomous driving systems. However, current multimodal perception research follows independent paradigms designed for specific perception tasks, leading to a lack of complementary learning among tasks and decreased performance in multi-task learning (MTL) due to joint training. In this paper, we propose MaskBEV, a masked attention-based MTL paradigm that unifies 3D object detection and bird's eye view (BEV) map segmentation. MaskBEV introduces a task-agnostic Transformer decoder to process these diverse tasks, enabling MTL to be completed in a unified decoder without requiring additional design of specific task heads. To fully exploit the complementary information between BEV map segmentation and 3D object detection tasks in BEV space, we propose spatial modulation and scene-level context aggregation strategies. These strategies consider the inherent dependencies between BEV segmentation and 3D detection, naturally boosting MTL performance. Extensive experiments on the nuScenes dataset show that, compared with previous state-of-the-art MTL methods, MaskBEV achieves a 1.3 NDS improvement in 3D object detection and a 2.7 mIoU improvement in BEV map segmentation, while also offering slightly faster inference.

Faster Diffusion Action Segmentation

Aug 04, 2024

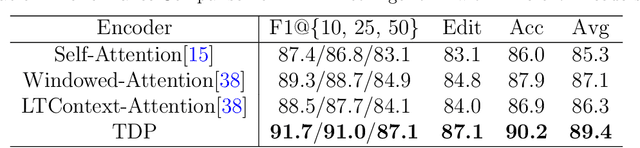

Abstract:Temporal Action Segmentation (TAS) is an essential task in video analysis, aiming to segment and classify continuous frames into distinct action segments. However, the ambiguous boundaries between actions pose a significant challenge for high-precision segmentation. Recent advances in diffusion models have demonstrated substantial success in TAS tasks due to their stable training process and high-quality generation capabilities. However, the heavy sampling steps required by diffusion models pose a substantial computational burden, limiting their practicality in real-time applications. Additionally, most related works utilize Transformer-based encoder architectures. Although these architectures excel at capturing long-range dependencies, they incur high computational costs and face feature-smoothing issues when processing long video sequences. To address these challenges, we propose EffiDiffAct, an efficient and high-performance TAS algorithm. Specifically, we develop a lightweight temporal feature encoder that reduces computational overhead and mitigates the rank collapse phenomenon associated with traditional self-attention mechanisms. Furthermore, we introduce an adaptive skip strategy that allows for dynamic adjustment of timestep lengths based on computed similarity metrics during inference, thereby further enhancing computational efficiency. Comprehensive experiments on the 50Salads, Breakfast, and GTEA datasets demonstrated the effectiveness of the proposed algorithm.
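The adaptive skip strategy described in this abstract can be pictured with a toy loop that lengthens the timestep stride whenever consecutive denoised outputs are nearly identical. This is only a hedged sketch: the abstract does not specify the similarity metric or the skip rule, so the cosine similarity, the doubling rule, and the names `adaptive_sampling`, `threshold`, and `max_stride` are all assumptions made for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def adaptive_sampling(total_steps, denoise, x, threshold=0.99, max_stride=4):
    """Run a reverse process from t = total_steps down to 1, skipping timesteps
    while successive outputs stay similar.

    Assumed rule (not from the paper): double the stride, capped at max_stride,
    when similarity >= threshold; otherwise fall back to a stride of 1.
    """
    t = total_steps
    stride = 1
    previous = None
    visited = []
    while t > 0:
        visited.append(t)
        x = denoise(x, t)  # hypothetical single denoising step
        if previous is not None and cosine_similarity(previous, x) >= threshold:
            stride = min(stride * 2, max_stride)
        else:
            stride = 1
        previous = x
        t -= stride
    return x, visited
```

With a denoiser whose output has already converged, the loop visits far fewer timesteps than `total_steps`, which is the kind of saving such a strategy aims for.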

MedThink: Inducing Medical Large-scale Visual Language Models to Hallucinate Less by Thinking More

Jun 18, 2024

Abstract: When Large Vision Language Models (LVLMs) are applied to multimodal medical generative tasks, they suffer from significant model hallucination issues. This severely impairs the model's generative accuracy, making it challenging for LVLMs to be implemented in real-world medical scenarios to assist doctors in diagnosis. Enhancing the training data for downstream medical generative tasks is an effective way to address model hallucination. However, the limited availability of training data in the medical field and privacy concerns greatly hinder the model's accuracy and generalization capabilities. In this paper, we introduce a method that mimics human cognitive processes to construct fine-grained instruction pairs and apply the concept of chain-of-thought (CoT) from inference scenarios to training scenarios, thereby proposing a method called MedThink. Our experiments on various LVLMs demonstrate that our novel data construction method tailored for the medical domain significantly improves the model's performance in medical image report generation tasks and substantially mitigates hallucinations. All resources of this work will be released soon.

Detecting and Evaluating Medical Hallucinations in Large Vision Language Models

Jun 14, 2024

Abstract: Large Vision Language Models (LVLMs) are increasingly integral to healthcare applications, including medical visual question answering and imaging report generation. While these models inherit the robust capabilities of foundational Large Language Models (LLMs), they also inherit susceptibility to hallucinations, a significant concern in high-stakes medical contexts where the margin for error is minimal. However, there are currently no dedicated methods or benchmarks for hallucination detection and evaluation in the medical field. To bridge this gap, we introduce Med-HallMark, the first benchmark specifically designed for hallucination detection and evaluation within the medical multimodal domain. This benchmark provides multi-tasking hallucination support, multifaceted hallucination data, and hierarchical hallucination categorization. Furthermore, we propose the MediHall Score, a new medical evaluative metric designed to assess LVLMs' hallucinations through a hierarchical scoring system that considers the severity and type of hallucination, thereby enabling a granular assessment of potential clinical impacts. We also present MediHallDetector, a novel Medical LVLM engineered for precise hallucination detection, which employs multitask training for hallucination detection. Through extensive experimental evaluations, we establish baselines for popular LVLMs using our benchmark. The findings indicate that MediHall Score provides a more nuanced understanding of hallucination impacts compared to traditional metrics and demonstrate the enhanced performance of MediHallDetector. We hope this work can significantly improve the reliability of LVLMs in medical applications. All resources of this work will be released soon.

PediatricsGPT: Large Language Models as Chinese Medical Assistants for Pediatric Applications

May 29, 2024

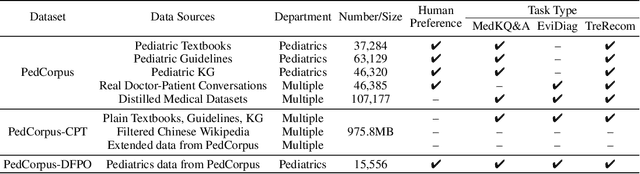

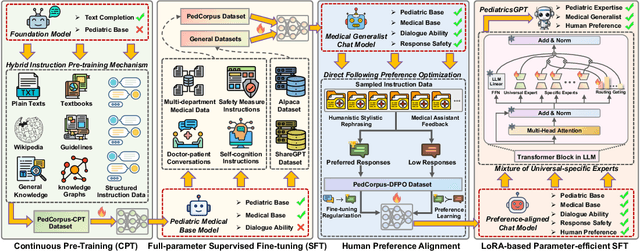

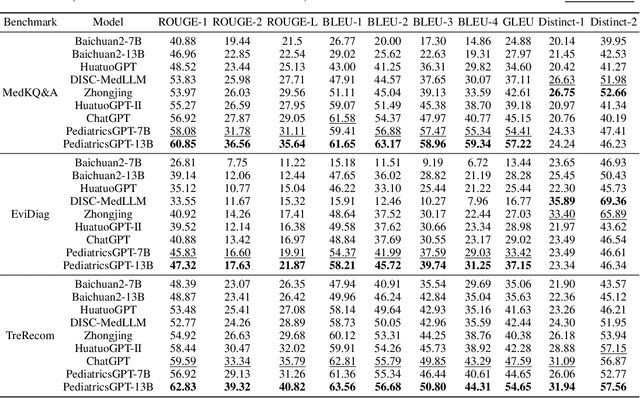

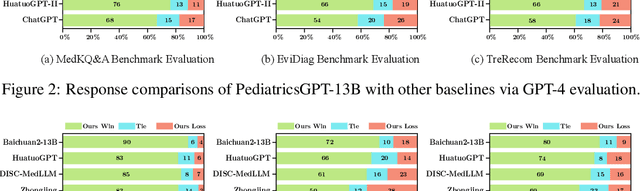

Abstract:Developing intelligent pediatric consultation systems offers promising prospects for improving diagnostic efficiency, especially in China, where healthcare resources are scarce. Despite recent advances in Large Language Models (LLMs) for Chinese medicine, their performance is sub-optimal in pediatric applications due to inadequate instruction data and vulnerable training procedures. To address the above issues, this paper builds PedCorpus, a high-quality dataset of over 300,000 multi-task instructions from pediatric textbooks, guidelines, and knowledge graph resources to fulfil diverse diagnostic demands. Upon well-designed PedCorpus, we propose PediatricsGPT, the first Chinese pediatric LLM assistant built on a systematic and robust training pipeline. In the continuous pre-training phase, we introduce a hybrid instruction pre-training mechanism to mitigate the internal-injected knowledge inconsistency of LLMs for medical domain adaptation. Immediately, the full-parameter Supervised Fine-Tuning (SFT) is utilized to incorporate the general medical knowledge schema into the models. After that, we devise a direct following preference optimization to enhance the generation of pediatrician-like humanistic responses. In the parameter-efficient secondary SFT phase, a mixture of universal-specific experts strategy is presented to resolve the competency conflict between medical generalist and pediatric expertise mastery. Extensive results based on the metrics, GPT-4, and doctor evaluations on distinct doctor downstream tasks show that PediatricsGPT consistently outperforms previous Chinese medical LLMs. Our model and dataset will be open-source for community development.

SMCD: High Realism Motion Style Transfer via Mamba-based Diffusion

May 05, 2024

Abstract: Motion style transfer is a significant research direction in multimedia applications. It enables the rapid switching of different styles of the same motion for virtual digital humans, thus vastly increasing the diversity and realism of movements. It is widely applied in multimedia scenarios such as movies, games, and the Metaverse. However, most of the current work in this field adopts the GAN, which may lead to instability and convergence issues, making the final generated motion sequence somewhat chaotic and unable to reflect a highly realistic and natural style. To address these problems, we consider style motion as a condition and propose the Style Motion Conditioned Diffusion (SMCD) framework for the first time, which can more comprehensively learn the style features of motion. Moreover, we apply the Mamba model for the first time in the motion style transfer field, introducing the Motion Style Mamba (MSM) module to handle longer motion sequences. Furthermore, for the SMCD framework, we propose Diffusion-based Content Consistency Loss and Content Consistency Loss to assist the overall framework's training. Finally, we conduct extensive experiments. The results reveal that our method surpasses state-of-the-art methods in both qualitative and quantitative comparisons and is capable of generating more realistic motion sequences.

Robust Emotion Recognition in Context Debiasing

Mar 09, 2024

Abstract: Context-aware emotion recognition (CAER) has recently boosted the practical applications of affective computing techniques in unconstrained environments. Mainstream CAER methods invariably extract ensemble representations from diverse contexts and subject-centred characteristics to perceive the target person's emotional state. Despite these advancements, the biggest remaining challenge is context bias interference. The harmful bias forces the models to rely on spurious correlations between background contexts and emotion labels in likelihood estimation, causing severe performance bottlenecks and confounding valuable context priors. In this paper, we propose a counterfactual emotion inference (CLEF) framework to address the above issue. Specifically, we first formulate a generalized causal graph to decouple the causal relationships among the variables in CAER. Following the causal graph, CLEF introduces a non-invasive context branch to capture the adverse direct effect caused by the context bias. During inference, we eliminate the direct context effect from the total causal effect by comparing factual and counterfactual outcomes, resulting in bias mitigation and robust prediction. As a model-agnostic framework, CLEF can be readily integrated into existing methods, bringing consistent performance gains.
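The inference step described in this abstract, removing the direct context effect from the total effect, can be sketched as a subtraction of per-class scores. This is a hedged illustration only: the abstract does not give the functional form, so the plain logit subtraction and the names `debiased_prediction`, `fused_logits`, and `context_only_logits` are assumptions, and the numbers in the usage example are made up.

```python
def debiased_prediction(fused_logits, context_only_logits, labels):
    """Return the label with the highest score after removing the context-only
    contribution, together with the adjusted scores.

    fused_logits:        scores from the full model (factual outcome).
    context_only_logits: scores from a context-only branch, standing in for the
                         counterfactual outcome (an assumption of this sketch).
    """
    if not (len(fused_logits) == len(context_only_logits) == len(labels)):
        raise ValueError("all inputs must have the same length")
    # Total effect minus direct context effect, class by class.
    adjusted = [f - c for f, c in zip(fused_logits, context_only_logits)]
    best = max(range(len(adjusted)), key=adjusted.__getitem__)
    return labels[best], adjusted


# Illustrative values: the raw scores favour "happy" mostly because of the
# background, while the adjusted scores favour "sad".
label, scores = debiased_prediction(
    fused_logits=[2.0, 1.5, 0.2],
    context_only_logits=[1.8, 0.3, 0.1],
    labels=["happy", "sad", "neutral"],
)
```

Because the adjustment only needs the two sets of scores, a step of this kind could sit on top of an existing model, which is consistent with the model-agnostic claim in the abstract.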

HandGCAT: Occlusion-Robust 3D Hand Mesh Reconstruction from Monocular Images

Feb 27, 2024

Abstract: We propose a robust and accurate method for reconstructing 3D hand meshes from monocular images. This is a very challenging problem, as hands are often severely occluded by objects. Previous works have often disregarded 2D hand pose information, which contains hand prior knowledge that is strongly correlated with occluded regions. Thus, in this work, we propose a novel 3D hand mesh reconstruction network, HandGCAT, that can fully exploit the hand prior as compensation information to enhance occluded region features. Specifically, we design the Knowledge-Guided Graph Convolution (KGC) module and the Cross-Attention Transformer (CAT) module. KGC extracts hand prior information from the 2D hand pose by graph convolution. CAT fuses the hand prior into occluded regions by considering their high correlation. Extensive experiments on popular datasets with challenging hand-object occlusions, such as HO3D v2, HO3D v3, and DexYCB, demonstrate that HandGCAT reaches state-of-the-art performance. The code is available at https://github.com/heartStrive/HandGCAT.

* 6 pages, 4 figures, ICME-2023 conference paper
