Shuangchi He

Hierarchical Agent-based Reinforcement Learning Framework for Automated Quality Assessment of Fetal Ultrasound Video

Apr 14, 2023

Abstract: Ultrasound is the primary modality for examining fetal growth during pregnancy, but image quality can be affected by various factors. Quality assessment is essential for controlling the quality of ultrasound images and guaranteeing both their perceptual and diagnostic value. Existing automated approaches often require heavy structural annotations, and their predictions are not necessarily consistent with the assessments of human experts. Furthermore, the overall quality of a scan and the correlation between the quality of frames should not be overlooked. In this work, we propose a reinforcement learning framework powered by two hierarchical agents that collaboratively learn to perform both frame-level and video-level quality assessment. It is equipped with a specially designed reward mechanism that considers the temporal dependency among frame qualities and requires only sparse binary annotations for training. Experimental results on a challenging fetal brain dataset verify that the proposed framework can perform dual-level quality assessment and that its predictions correlate well with subjective assessment results.

Sketch guided and progressive growing GAN for realistic and editable ultrasound image synthesis

Apr 19, 2022

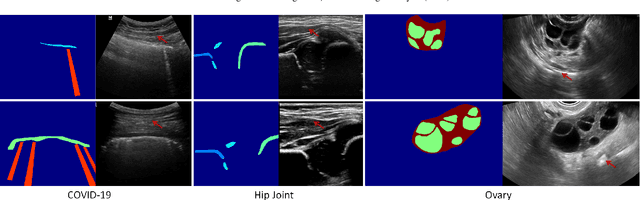

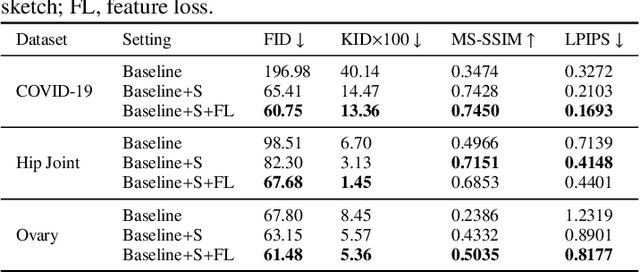

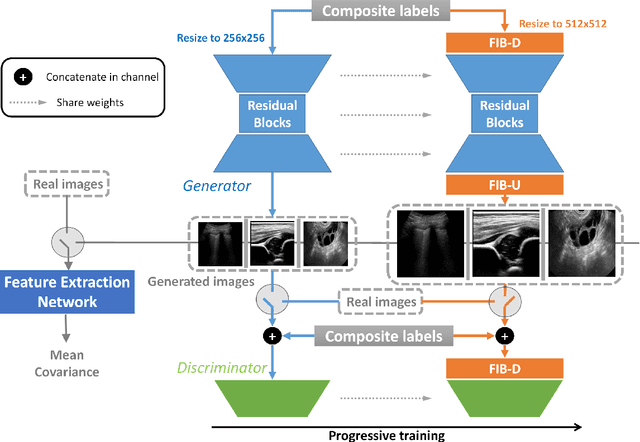

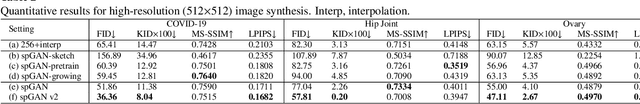

Abstract: Ultrasound (US) imaging is widely used for anatomical structure inspection in clinical diagnosis. Training new sonographers and deep learning based algorithms for US image analysis usually requires a large amount of data. However, obtaining and labeling large-scale US imaging data is not easy, especially for diseases with low incidence. Realistic US image synthesis can alleviate this problem to a great extent. In this paper, we propose a generative adversarial network (GAN) based image synthesis framework. Our main contributions include: 1) we present the first work that can synthesize realistic B-mode US images with high resolution and customized texture editing features; 2) to enhance the structural details of generated images, we propose to introduce auxiliary sketch guidance into a conditional GAN. We superpose the edge sketch onto the object mask and use the composite mask as the network input; 3) to generate high-resolution US images, we adopt a progressive training strategy that gradually generates high-resolution images from low-resolution images. In addition, a feature loss is proposed to minimize the difference in high-level features between the generated and real images, which further improves the quality of generated images; 4) the proposed US image synthesis method is quite universal and can also be generalized to US images of anatomical structures other than the three tested in our study (lung, hip joint, and ovary); 5) extensive experiments on three large US image datasets are conducted to validate our method. Ablation studies, customized texture editing, user studies, and segmentation tests demonstrate promising results of our method in synthesizing realistic US images.
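The abstract does not say how the edge sketch and object mask are encoded, so the following is only a minimal sketch of one way the composite mask in contribution 2 could be built. The function name `composite_mask`, the binary encoding, and the choice of label `2` for edge pixels are all assumptions made for illustration, not details from the paper.

```python
def composite_mask(object_mask, edge_sketch, edge_value=2):
    """Superpose a binary edge sketch onto an object mask.

    Both inputs are 2D lists of the same shape. Wherever the sketch is
    non-zero, the output takes `edge_value` (a hypothetical label);
    elsewhere it keeps the object mask value.
    """
    if len(object_mask) != len(edge_sketch) or any(
        len(m_row) != len(e_row) for m_row, e_row in zip(object_mask, edge_sketch)
    ):
        raise ValueError("object_mask and edge_sketch must have the same shape")
    return [
        [edge_value if e else m for m, e in zip(m_row, e_row)]
        for m_row, e_row in zip(object_mask, edge_sketch)
    ]


# Toy 4x4 example: a 2x2 object with two edge pixels drawn on it.
object_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
edge_sketch = [
    [0, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 0],
]
composite = composite_mask(object_mask, edge_sketch)
```

In this toy encoding the composite keeps the object region as `1` and marks sketch pixels as `2`, so a conditional generator would receive both the shape and the structural edges in a single input map.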

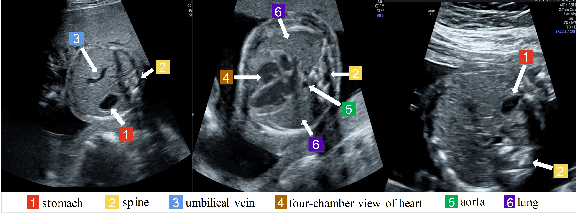

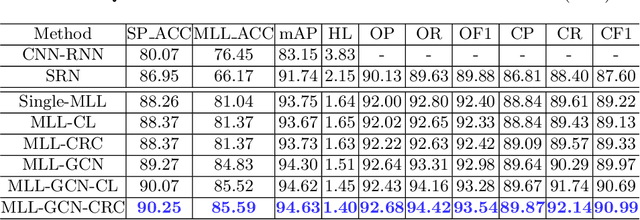

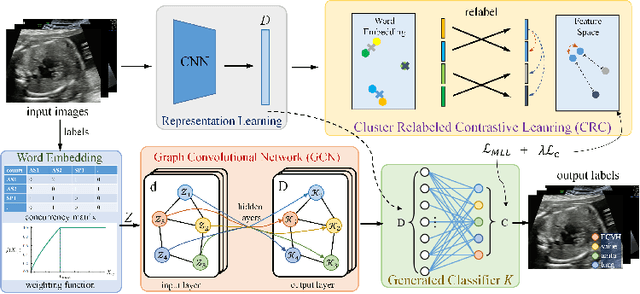

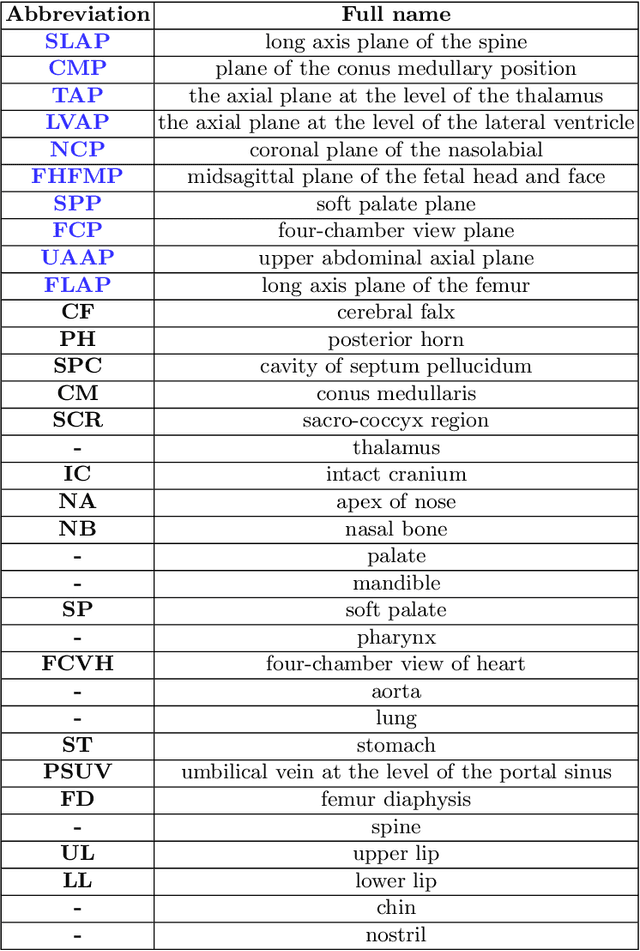

Statistical Dependency Guided Contrastive Learning for Multiple Labeling in Prenatal Ultrasound

Aug 11, 2021

Abstract: Standard plane recognition plays an important role in prenatal ultrasound (US) screening. Automatically recognizing the standard plane along with the corresponding anatomical structures in US images can not only facilitate US image interpretation but also improve diagnostic efficiency. In this study, we build a novel multi-label learning (MLL) scheme to identify multiple standard planes and the corresponding anatomical structures of the fetus simultaneously. Our contribution is three-fold. First, we represent the class correlation by word embeddings to capture the fine-grained semantic and latent statistical concurrency. Second, we equip the MLL with a graph convolutional network to explore the inner and outer relationships among categories. Third, we propose a novel cluster relabel-based contrastive learning algorithm to encourage divergence among ambiguous classes. Extensive validation was performed on our large in-house dataset. Our approach reports the highest accuracy, 90.25% for standard plane labeling and 85.59% for plane and structure labeling, with an mAP of 94.63%. The proposed MLL scheme provides a novel perspective on standard plane recognition and can be easily extended to other medical image classification tasks.

Remove Appearance Shift for Ultrasound Image Segmentation via Fast and Universal Style Transfer

Feb 14, 2020

Abstract: Deep Neural Networks (DNNs) suffer from performance degradation when image appearance shift occurs, especially in ultrasound (US) image segmentation. In this paper, we propose a novel and intuitive framework to remove the appearance shift and hence improve the generalization ability of DNNs. Our work has three highlights. First, we follow the spirit of universal style transfer to remove appearance shifts, which has not been explored before for US images. It enables arbitrary style-content transfer without sacrificing image structure details. Second, accelerated with an Adaptive Instance Normalization block, our framework achieves the real-time speed required in clinical US scanning. Third, an efficient and effective style image selection strategy is proposed to ensure that the target-style US image and the testing content US image properly match each other. Experiments on two large US datasets demonstrate that our methods are superior to state-of-the-art methods in making DNNs robust against various appearance shifts.
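The abstract names an Adaptive Instance Normalization (AdaIN) block but gives no implementation details. As a rough illustration, the standard AdaIN operation shifts and rescales each channel of the content features so that its mean and standard deviation match those of the style features. The sketch below uses plain Python lists in place of real feature maps; the function names, the flattened-channel layout, and the `eps` handling are assumptions for illustration and may differ from the authors' block.

```python
from statistics import fmean, pstdev


def adain_channel(content, style, eps=1e-5):
    """Align one flattened content channel to the style channel's statistics."""
    c_mean, c_std = fmean(content), pstdev(content)
    s_mean, s_std = fmean(style), pstdev(style)
    # Normalize the content channel, then rescale and shift with style stats.
    # eps guards against division by zero for constant channels.
    return [s_std * (x - c_mean) / (c_std + eps) + s_mean for x in content]


def adain(content_feats, style_feats, eps=1e-5):
    """Apply AdaIN channel by channel.

    Each argument is a list of channels, and each channel is a flat list
    of activations (hypothetical layout chosen to keep the sketch small).
    """
    if len(content_feats) != len(style_feats):
        raise ValueError("content and style must have the same number of channels")
    return [adain_channel(c, s, eps) for c, s in zip(content_feats, style_feats)]


# Toy example with two channels of four activations each.
content = [[0.0, 1.0, 2.0, 3.0], [10.0, 10.5, 11.0, 11.5]]
style = [[5.0, 7.0, 9.0, 11.0], [-1.0, 0.0, 1.0, 2.0]]
stylized = adain(content, style)
```

After the call, each channel of `stylized` has approximately the mean and standard deviation of the matching style channel while preserving the relative ordering of the content activations. Because the operation only needs per-channel statistics rather than an iterative optimization, it is cheap to compute, which is consistent with the speed claim in the abstract.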
