Shengyong Chen

LIDAR: Lightweight Adaptive Cue-Aware Fusion Vision Mamba for Multimodal Segmentation of Structural Cracks

Jul 30, 2025

Abstract: Achieving pixel-level segmentation with low computational cost using multimodal data remains a key challenge in crack segmentation tasks. Existing methods lack the capability for adaptive perception and efficient interactive fusion of cross-modal features. To address these challenges, we propose a Lightweight Adaptive Cue-Aware Vision Mamba network (LIDAR), which efficiently perceives and integrates morphological and textural cues from different modalities under multimodal crack scenarios, generating clear pixel-level crack segmentation maps. Specifically, LIDAR is composed of a Lightweight Adaptive Cue-Aware Visual State Space module (LacaVSS) and a Lightweight Dual Domain Dynamic Collaborative Fusion module (LD3CF). LacaVSS adaptively models crack cues through the proposed mask-guided Efficient Dynamic Guided Scanning Strategy (EDG-SS), while LD3CF leverages an Adaptive Frequency Domain Perceptron (AFDP) and a dual-pooling fusion strategy to effectively capture spatial and frequency-domain cues across modalities. Moreover, we design a Lightweight Dynamically Modulated Multi-Kernel convolution (LDMK) to perceive complex morphological structures with minimal computational overhead, replacing most convolutional operations in LIDAR. Experiments on three datasets demonstrate that our method outperforms other state-of-the-art (SOTA) methods. On the light-field depth dataset, our method achieves 0.8204 in F1 and 0.8465 in mIoU with only 5.35M parameters. Code and datasets are available at https://github.com/Karl1109/LIDAR-Mamba.
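
The F1 and mIoU figures quoted above are standard segmentation metrics. As a rough illustration only, and not the authors' evaluation code, the sketch below computes both for a pair of binary masks. It assumes that mIoU is averaged over the crack and background classes; the function names and the toy masks are hypothetical.

```python
def confusion_counts(pred, target):
    """Count TP, FP, FN, TN over two equally sized binary masks (nested lists of 0/1)."""
    tp = fp = fn = tn = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif not p and t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def f1_score(pred, target):
    """F1 for the crack class: 2*TP / (2*TP + FP + FN)."""
    tp, fp, fn, _ = confusion_counts(pred, target)
    denom = 2 * tp + fp + fn
    # Convention assumed here: two empty masks count as a perfect match.
    return 2 * tp / denom if denom else 1.0


def mean_iou(pred, target):
    """Mean of the crack IoU and the background IoU (assumed two-class averaging)."""
    tp, fp, fn, tn = confusion_counts(pred, target)
    ious = []
    for intersection, union in ((tp, tp + fp + fn), (tn, tn + fp + fn)):
        if union:  # skip a class that appears in neither mask
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 1.0


# Toy 2x3 masks: one true positive, one false positive, one false negative.
pred = [[1, 1, 0],
        [0, 0, 0]]
target = [[1, 0, 0],
          [0, 1, 0]]

print(f1_score(pred, target))   # 2*1 / (2*1 + 1 + 1) = 0.5
print(mean_iou(pred, target))   # mean of 1/3 (crack) and 3/5 (background)
```

Published crack-segmentation benchmarks may average these metrics differently (for example over thresholds or over images), so the numbers in the abstract should not be expected to follow from this exact recipe.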

Dynamic Graph-Like Learning with Contrastive Clustering on Temporally-Factored Ship Motion Data for Imbalanced Sea State Estimation in Autonomous Vessel

Apr 21, 2025

Abstract: Accurate sea state estimation is crucial for the real-time control and future state prediction of autonomous vessels. However, traditional methods struggle with challenges such as data imbalance and feature redundancy in ship motion data, limiting their effectiveness. To address these challenges, we propose the Temporal-Graph Contrastive Clustering Sea State Estimator (TGC-SSE), a novel deep learning model that combines three key components: a time dimension factorization module to reduce data redundancy, a dynamic graph-like learning module to capture complex variable interactions, and a contrastive clustering loss function to effectively manage class imbalance. Our experiments demonstrate that TGC-SSE significantly outperforms existing methods across 14 public datasets, achieving the highest accuracy on 9 of them, with a 20.79% improvement over EDI. Furthermore, in the field of sea state estimation, TGC-SSE surpasses five benchmark methods and seven deep learning models. Ablation studies confirm the effectiveness of each module, demonstrating their respective roles in enhancing overall model performance. Overall, TGC-SSE not only improves the accuracy of sea state estimation but also exhibits strong generalization capabilities, providing reliable support for autonomous vessel operations.

Prototype-based Heterogeneous Federated Learning for Blade Icing Detection in Wind Turbines with Class Imbalanced Data

Mar 11, 2025

Abstract: Wind farms, typically in high-latitude regions, face a high risk of blade icing. Traditional centralized training methods raise serious privacy concerns. To enhance data privacy in detecting wind turbine blade icing, traditional federated learning (FL) is employed. However, data heterogeneity, resulting from collections across wind farms in varying environmental conditions, impacts the model's optimization capabilities. Moreover, imbalances in wind turbine data lead to models that tend to favor recognizing majority classes, thus neglecting critical icing anomalies. To tackle these challenges, we propose a federated prototype learning model for class-imbalanced data in heterogeneous environments to detect wind turbine blade icing. We also propose a contrastive supervised loss function to address the class imbalance problem. Experiments on real data from 20 turbines across two wind farms show our method outperforms five FL models and five class imbalance methods, with an average improvement of 19.64% in mF_β and 5.73% in mBA compared to the second-best method, BiFL.

SCSegamba: Lightweight Structure-Aware Vision Mamba for Crack Segmentation in Structures

Mar 03, 2025

Abstract: Pixel-level segmentation of structural cracks across various scenarios remains a considerable challenge. Current methods struggle to model crack morphology and texture effectively and to balance segmentation quality against low computational resource usage. To overcome these limitations, we propose a lightweight Structure-Aware Vision Mamba Network (SCSegamba), capable of generating high-quality pixel-level segmentation maps by leveraging both the morphological information and texture cues of crack pixels with minimal computational cost. Specifically, we develop a Structure-Aware Visual State Space module (SAVSS), which incorporates a lightweight Gated Bottleneck Convolution (GBC) and a Structure-Aware Scanning Strategy (SASS). The key insight of GBC lies in its effectiveness in modeling the morphological information of cracks, while the SASS enhances the perception of crack topology and texture by strengthening the continuity of semantic information between crack pixels. Experiments on crack benchmark datasets demonstrate that our method outperforms other state-of-the-art (SOTA) methods, achieving the highest performance with only 2.8M parameters. On the multi-scenario dataset, our method reached 0.8390 in F1 score and 0.8479 in mIoU. The code is available at https://github.com/Karl1109/SCSegamba.

Can Students Beyond The Teacher? Distilling Knowledge from Teacher's Bias

Dec 13, 2024

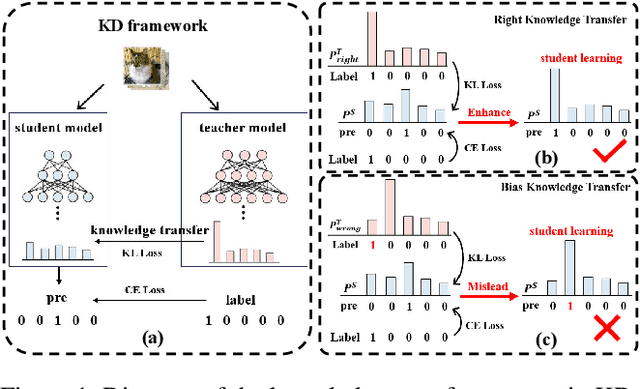

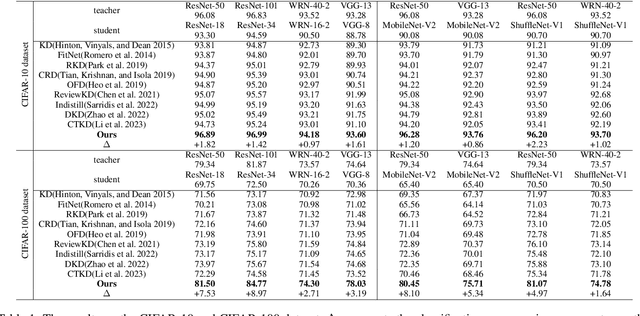

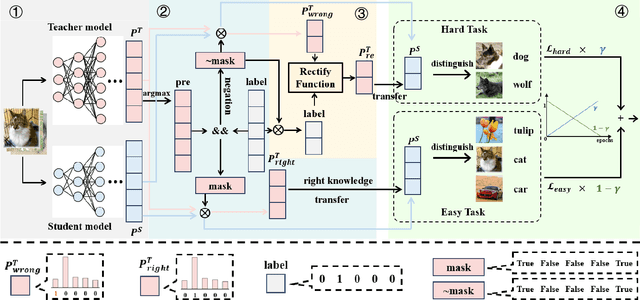

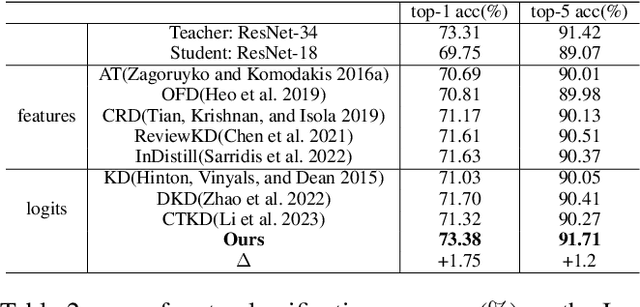

Abstract: Knowledge distillation (KD) is a model compression technique that transfers knowledge from a large teacher model to a smaller student model to enhance its performance. Existing methods often assume that the student model is inherently inferior to the teacher model. However, we identify that the fundamental issue affecting student performance is the bias transferred by the teacher. Current KD frameworks transmit both right and wrong knowledge, introducing bias that misleads the student model. To address this issue, we propose a novel strategy to rectify bias and greatly improve the student model's performance. Our strategy involves three steps. First, we differentiate knowledge and design a bias elimination method to filter out biases, retaining only the right knowledge for the student model to learn. Next, we propose a bias rectification method to rectify the teacher model's wrong predictions, fundamentally addressing bias interference. The student model learns from both the right knowledge and the rectified biases, greatly improving its prediction accuracy. Finally, we introduce a dynamic learning approach with a loss function that updates weights dynamically, allowing the student model to first learn quickly from easy tasks based on right knowledge and later tackle the hard tasks corresponding to biases, greatly enhancing the student model's learning efficiency. To the best of our knowledge, this is the first strategy enabling the student model to surpass the teacher model. Experiments demonstrate that our strategy, as a plug-and-play module, is versatile across various mainstream KD frameworks. We will release our code after the paper is accepted.
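
The abstract does not spell out how knowledge is differentiated or how wrong teacher predictions are rectified. Purely as an illustration, the sketch below assumes that "right knowledge" means samples where the teacher's top class matches the ground-truth label, and it uses a simple probability swap as a stand-in for rectification. Both function names and the swap rule are hypothetical and should not be read as the paper's method.

```python
def split_by_teacher_correctness(teacher_probs, labels):
    """Return (right, wrong) sample indices, judged by the teacher's top-1 class."""
    right, wrong = [], []
    for index, (probs, label) in enumerate(zip(teacher_probs, labels)):
        predicted = max(range(len(probs)), key=probs.__getitem__)
        (right if predicted == label else wrong).append(index)
    return right, wrong


def rectify_by_swap(probs, label):
    """Hypothetical rectification: swap the mass of the wrongly predicted
    class with that of the true class, leaving other classes untouched."""
    predicted = max(range(len(probs)), key=probs.__getitem__)
    fixed = list(probs)
    fixed[predicted], fixed[label] = fixed[label], fixed[predicted]
    return fixed


# Toy teacher outputs over three classes for two samples.
teacher_probs = [[0.7, 0.2, 0.1],   # teacher predicts class 0, label is 0
                 [0.1, 0.6, 0.3]]   # teacher predicts class 1, label is 2
labels = [0, 2]

right, wrong = split_by_teacher_correctness(teacher_probs, labels)
print(right, wrong)                                    # [0] [1]
print(rectify_by_swap(teacher_probs[1], labels[1]))    # [0.1, 0.3, 0.6]
```

Under these assumptions, the student would distill from the unmodified soft labels of the "right" samples and from the rectified soft labels of the "wrong" ones; how the two groups are weighted over training is a separate choice that this sketch does not cover.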

MFCLIP: Multi-modal Fine-grained CLIP for Generalizable Diffusion Face Forgery Detection

Sep 15, 2024

Abstract: The rapid development of photo-realistic face generation methods has raised significant concerns in society and academia, highlighting the urgent need for robust and generalizable face forgery detection (FFD) techniques. Although existing approaches mainly capture face forgery patterns using the image modality, other modalities such as fine-grained noise and text are not fully explored, which limits the generalization capability of the model. In addition, most FFD methods tend to identify facial images generated by GANs, but struggle to detect unseen diffusion-synthesized ones. To address these limitations, we aim to leverage the cutting-edge foundation model, contrastive language-image pre-training (CLIP), to achieve generalizable diffusion face forgery detection (DFFD). In this paper, we propose a novel multi-modal fine-grained CLIP (MFCLIP) model, which mines comprehensive and fine-grained forgery traces across image-noise modalities via language-guided face forgery representation learning, to facilitate the advancement of DFFD. Specifically, we devise a fine-grained language encoder (FLE) that extracts fine global language features from hierarchical text prompts. We design a multi-modal vision encoder (MVE) to capture global image forgery embeddings as well as fine-grained noise forgery patterns extracted from the richest patch, and integrate them to mine general visual forgery traces. Moreover, we build an innovative plug-and-play sample pair attention (SPA) method to emphasize relevant negative pairs and suppress irrelevant ones, allowing cross-modality sample pairs to conduct more flexible alignment. Extensive experiments and visualizations show that our model outperforms state-of-the-art methods in different settings such as cross-generator, cross-forgery, and cross-dataset evaluations.

Staircase Cascaded Fusion of Lightweight Local Pattern Recognition and Long-Range Dependencies for Structural Crack Segmentation

Aug 23, 2024

Abstract: Detecting cracks with pixel-level precision for key structures is a significant challenge, as existing methods struggle to effectively integrate local textures and pixel dependencies of cracks. Furthermore, these methods often possess numerous parameters and substantial computational requirements, complicating deployment on edge devices. In this paper, we propose a staircase cascaded fusion crack segmentation network (CrackSCF) that generates high-quality crack segmentation maps using minimal computational resources. We construct a staircase cascaded fusion module that effectively captures local patterns of cracks and long-range dependencies of pixels while suppressing background noise. To reduce the computational resources required by the model, we introduce a lightweight convolution block, which replaces all convolution operations in the network, significantly reducing the required computation and parameters without affecting the network's performance. To evaluate our method, we created a challenging benchmark dataset called TUT and conducted experiments on this dataset and five other public datasets. The experimental results indicate that our method offers significant advantages over existing methods, especially in handling background noise interference and detailed crack segmentation. The F1 and mIoU scores on the TUT dataset are 0.8382 and 0.8473, respectively, achieving state-of-the-art (SOTA) performance while requiring the least computational resources. The code and dataset are available at https://github.com/Karl1109/CrackSCF.

An End-to-End Model for Time Series Classification In the Presence of Missing Values

Aug 11, 2024

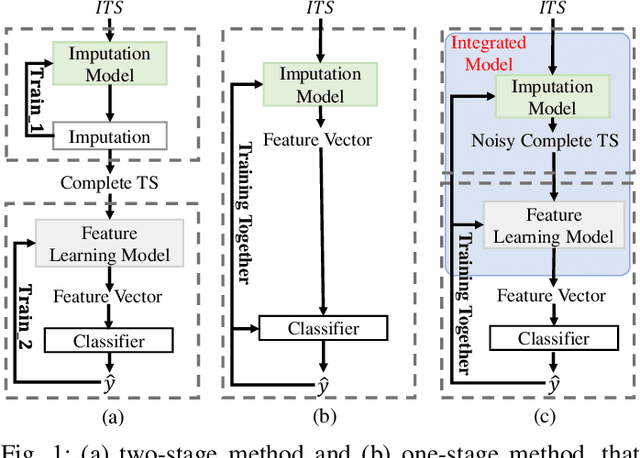

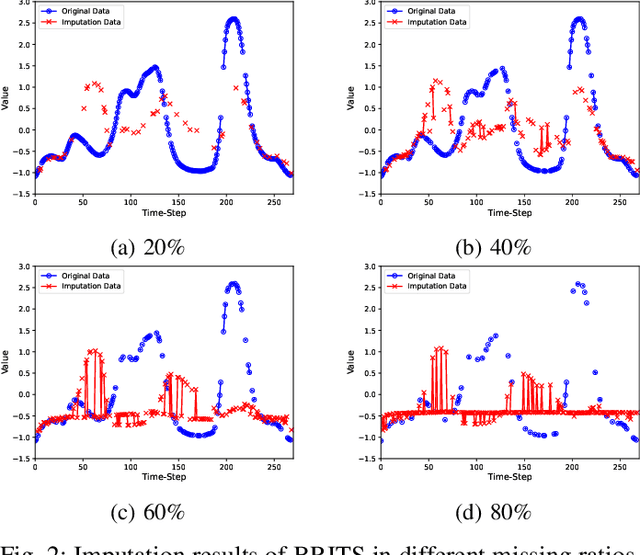

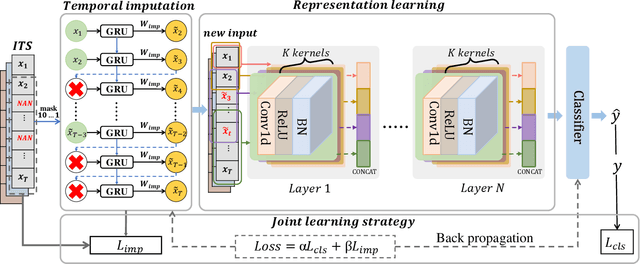

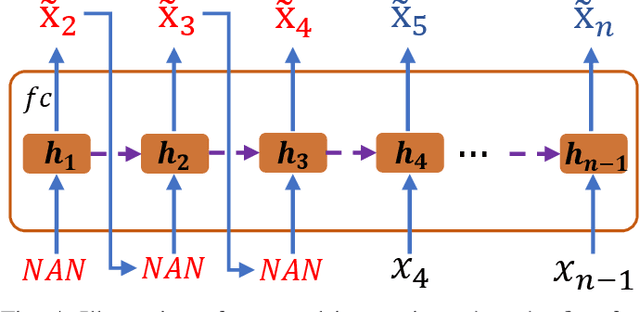

Abstract: Time series classification with missing data is a prevalent issue in time series analysis, as temporal data often contain missing values in practical applications. The traditional two-stage approach, which handles imputation and classification separately, can result in sub-optimal performance because label information is not utilized in the imputation process. On the other hand, a one-stage approach can learn features under missing information, but feature representation is limited because imputation errors are propagated into the classification process. To overcome these challenges, this study proposes an end-to-end neural network that unifies data imputation and representation learning within a single framework, allowing the imputation process to take advantage of label information. Unlike previous methods, our approach places less emphasis on the accuracy of the imputed data and instead prioritizes classification performance. A specifically designed multi-scale feature learning module is implemented to extract useful information from the noise-imputation data. The proposed model is evaluated on 68 univariate time series datasets from the UCR archive, as well as a multivariate time series dataset with various missing data ratios and 4 real-world datasets with missing information. The results indicate that the proposed model outperforms state-of-the-art approaches for incomplete time series classification, particularly in scenarios with high levels of missing data.

PIR: Remote Sensing Image-Text Retrieval with Prior Instruction Representation Learning

May 16, 2024

Abstract: Remote sensing image-text retrieval constitutes a foundational aspect of remote sensing interpretation tasks, facilitating the alignment of vision and language representations. This paper introduces a prior instruction representation (PIR) learning paradigm that draws on prior knowledge to instruct adaptive learning of vision and text representations. Based on PIR, a domain-adapted remote sensing image-text retrieval framework PIR-ITR is designed to address semantic noise issues in vision-language understanding tasks. However, with massive additional data for pre-training the vision-language foundation model, remote sensing image-text retrieval is further developed into an open-domain retrieval task. Building on this, we propose PIR-CLIP, a domain-specific CLIP-based framework for remote sensing image-text retrieval, to address semantic noise in remote sensing vision-language representations and further improve open-domain retrieval performance. In vision representation, Vision Instruction Representation (VIR) based on Spatial-PAE utilizes the prior-guided knowledge of the remote sensing scene recognition by building a belief matrix to select key features for reducing the impact of semantic noise. In text representation, Language Cycle Attention (LCA) based on Temporal-PAE uses the previous time step to cyclically activate the current time step to enhance text representation capability. A cluster-wise Affiliation Loss (AL) is proposed to constrain the inter-classes and to reduce the semantic confusion zones in the common subspace. Comprehensive experiments demonstrate that PIR could enhance vision and text representations and outperform the state-of-the-art methods of closed-domain and open-domain retrieval on two benchmark datasets, RSICD and RSITMD.

Conditional Diffusion Feature Refinement for Continuous Sign Language Recognition

May 05, 2023

Abstract: In this work, we are dedicated to leveraging the denoising diffusion models' success and formulating feature refinement as the autoencoder-formed diffusion process. The state-of-the-art continuous sign language recognition (CSLR) framework consists of a spatial module, a visual module, a sequence module, and a sequence learning function. However, this framework has faced sequence module overfitting caused by the objective function and small-scale available benchmarks, resulting in insufficient model training. To overcome the overfitting problem, some CSLR studies enforce the sequence module to learn more visual temporal information or be guided by more informative supervision to refine its representations. In this work, we propose a novel autoencoder-formed conditional diffusion feature refinement (ACDR) to refine the sequence representations and equip them with desired properties by learning the encoding-decoding optimization process in an end-to-end way. Specifically, for the ACDR, a noising Encoder is proposed to progressively add noise equipped with semantic conditions to the sequence representations, and a denoising Decoder is proposed to progressively denoise the noisy sequence representations with semantic conditions. Therefore, the sequence representations can be imbued with the semantics of the provided semantic conditions. Further, a semantic constraint is employed to prevent the denoised sequence representations from semantic corruption. Extensive experiments are conducted to validate the effectiveness of our ACDR, benefiting state-of-the-art methods and achieving a notable gain on three benchmarks.
