Cong Bai

PMPGuard: Catching Pseudo-Matched Pairs in Remote Sensing Image-Text Retrieval

Dec 21, 2025

Abstract:Remote sensing (RS) image-text retrieval faces significant challenges in real-world datasets due to the presence of Pseudo-Matched Pairs (PMPs), semantically mismatched or weakly aligned image-text pairs, which hinder the learning of reliable cross-modal alignments. To address this issue, we propose a novel retrieval framework that leverages Cross-Modal Gated Attention and a Positive-Negative Awareness Attention mechanism to mitigate the impact of such noisy associations. The gated module dynamically regulates cross-modal information flow, while the awareness mechanism explicitly distinguishes informative (positive) cues from misleading (negative) ones during alignment learning. Extensive experiments on three benchmark RS datasets, i.e., RSICD, RSITMD, and RS5M, demonstrate that our method consistently achieves state-of-the-art performance, highlighting its robustness and effectiveness in handling real-world mismatches and PMPs in RS image-text retrieval tasks.

IDOL: Meeting Diverse Distribution Shifts with Prior Physics for Tropical Cyclone Multi-Task Estimation

Nov 13, 2025

Abstract:Tropical Cyclone (TC) estimation aims to accurately estimate various TC attributes in real time. However, distribution shifts arising from the complex and dynamic nature of TC environmental fields, such as varying geographical conditions and seasonal changes, present significant challenges to reliable estimation. Most existing methods rely on multi-modal fusion for feature extraction but overlook the intrinsic distribution of feature representations, leading to poor generalization under out-of-distribution (OOD) scenarios. To address this, we propose an effective Identity Distribution-Oriented Physical Invariant Learning framework (IDOL), which imposes identity-oriented constraints to regulate the feature space under the guidance of prior physical knowledge, thereby addressing distribution variability through physical invariance. Specifically, the proposed IDOL employs the wind field model and dark correlation knowledge of TC to model task-shared and task-specific identity tokens. These tokens capture task dependencies and intrinsic physical invariances of TC, enabling robust estimation of TC wind speed, pressure, inner-core, and outer-core size under distribution shifts. Extensive experiments conducted on multiple datasets and tasks demonstrate the superior performance of the proposed IDOL, verifying that imposing identity-oriented constraints based on prior physical knowledge can effectively mitigate diverse distribution shifts in TC estimation. Code is available at https://github.com/Zjut-MultimediaPlus/IDOL.

From Swath to Full-Disc: Advancing Precipitation Retrieval with Multimodal Knowledge Expansion

Jun 08, 2025

Abstract:Accurate near-real-time precipitation retrieval has been enhanced by satellite-based technologies. However, infrared-based algorithms have low accuracy due to weak relations with surface precipitation, whereas passive microwave and radar-based methods are more accurate but limited in range. This challenge motivates the Precipitation Retrieval Expansion (PRE) task, which aims to enable accurate, infrared-based full-disc precipitation retrievals beyond the scanning swath. We introduce Multimodal Knowledge Expansion, a two-stage pipeline with the proposed PRE-Net model. In the Swath-Distilling stage, PRE-Net transfers knowledge from a multimodal data integration model to an infrared-based model within the scanning swath via Coordinated Masking and Wavelet Enhancement (CoMWE). In the Full-Disc Adaptation stage, Self-MaskTune refines predictions across the full disc by balancing multimodal and full-disc infrared knowledge. Experiments on the introduced PRE benchmark demonstrate that PRE-Net significantly advances precipitation retrieval performance, outperforming leading products like PERSIANN-CCS, PDIR, and IMERG. The code will be available at https://github.com/Zjut-MultimediaPlus/PRE-Net.

NeighborRetr: Balancing Hub Centrality in Cross-Modal Retrieval

Mar 13, 2025

Abstract:Cross-modal retrieval aims to bridge the semantic gap between different modalities, such as visual and textual data, enabling accurate retrieval across them. Despite significant advancements with models like CLIP that align cross-modal representations, a persistent challenge remains: the hubness problem, where a small subset of samples (hubs) dominate as nearest neighbors, leading to biased representations and degraded retrieval accuracy. Existing methods often mitigate hubness through post-hoc normalization techniques, relying on prior data distributions that may not be practical in real-world scenarios. In this paper, we directly mitigate hubness during training and introduce NeighborRetr, a novel method that effectively balances the learning of hubs and adaptively adjusts the relations of various kinds of neighbors. Our approach not only mitigates the hubness problem but also enhances retrieval performance, achieving state-of-the-art results on multiple cross-modal retrieval benchmarks. Furthermore, NeighborRetr demonstrates robust generalization to new domains with substantial distribution shifts, highlighting its effectiveness in real-world applications. We make our code publicly available at: https://github.com/zzezze/NeighborRetr.
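
The hubness problem described in this abstract can be made concrete with a k-occurrence count: how many queries include a given gallery item among their top-k neighbors. The sketch below only illustrates that diagnostic and is not the NeighborRetr training method; the function name `k_occurrence` and the toy similarity values are hypothetical.

```python
from collections import Counter


def k_occurrence(similarity, k):
    """Count how often each gallery item appears in a query's top-k list.

    similarity[q][g] is the similarity between query q and gallery item g.
    """
    counts = Counter()
    for row in similarity:
        # Indices of the k most similar gallery items for this query.
        top_k = sorted(range(len(row)), key=lambda g: row[g], reverse=True)[:k]
        counts.update(top_k)
    # Return a dense list so that never-retrieved items show up as 0.
    n_gallery = len(similarity[0])
    return [counts[g] for g in range(n_gallery)]


# Toy similarity matrix (hypothetical values): 4 queries x 4 gallery items.
sim = [
    [0.9, 0.8, 0.1, 0.2],
    [0.7, 0.3, 0.6, 0.1],
    [0.8, 0.2, 0.3, 0.7],
    [0.6, 0.1, 0.2, 0.5],
]
occ = k_occurrence(sim, k=2)
print(occ)  # item 0 is in every query's top-2, so it acts as a hub
```

A strongly skewed count list, where a few items collect most of the top-k slots, is the symptom the abstract refers to.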

TCP-Diffusion: A Multi-modal Diffusion Model for Global Tropical Cyclone Precipitation Forecasting with Change Awareness

Oct 17, 2024

Abstract:Precipitation from tropical cyclones (TCs) can cause disasters such as flooding, mudslides, and landslides. Predicting such precipitation in advance is crucial, giving people time to prepare and defend against these precipitation-induced disasters. Developing deep learning (DL) rainfall prediction methods offers a new way to predict potential disasters. However, most existing methods suffer from cumulative errors and lack physical consistency. In addition, these methods overlook the importance of meteorological factors in TC rainfall and their integration with the numerical weather prediction (NWP) model. Therefore, we propose Tropical Cyclone Precipitation Diffusion (TCP-Diffusion), a multi-modal model for global tropical cyclone precipitation forecasting. It forecasts TC rainfall around the TC center for the next 12 hours at 3-hourly resolution based on past rainfall observations and multi-modal environmental variables. Adjacent residual prediction (ARP) changes the training target from the absolute rainfall value to the rainfall trend and makes our model aware of rainfall changes, reducing cumulative errors and ensuring physical consistency. Considering the influence of TC-related meteorological factors and the useful information from NWP model forecasts, we propose a multi-model framework with specialized encoders to extract richer information from environmental variables and results provided by NWP models. The results of extensive experiments show that our method outperforms other DL methods and the NWP method from the European Centre for Medium-Range Weather Forecasts (ECMWF).
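
The idea of switching the training target from absolute rainfall to the change between adjacent time steps can be sketched as follows. This is a minimal illustration under simplifying assumptions, not the TCP-Diffusion implementation: each time step is reduced to a single scalar instead of a rainfall map, and the helper names `to_residuals` and `from_residuals` are hypothetical.

```python
def to_residuals(frames):
    """Turn absolute values into differences between adjacent time steps."""
    return [curr - prev for prev, curr in zip(frames, frames[1:])]


def from_residuals(last_observed, residuals):
    """Rebuild absolute values by accumulating the predicted changes."""
    rebuilt = []
    value = last_observed
    for change in residuals:
        value = value + change
        rebuilt.append(value)
    return rebuilt


# Hypothetical rainfall series (one scalar per 3-hourly step).
rain = [2.0, 3.5, 3.0, 5.0, 4.0]
residuals = to_residuals(rain)                # training targets: the trend
rebuilt = from_residuals(rain[0], residuals)  # back to absolute values
print(residuals, rebuilt)
```

A model trained on `residuals` predicts how rainfall changes; absolute forecasts are then recovered by adding those changes to the last observation.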

PIR: Remote Sensing Image-Text Retrieval with Prior Instruction Representation Learning

May 16, 2024

Abstract:Remote sensing image-text retrieval constitutes a foundational aspect of remote sensing interpretation tasks, facilitating the alignment of vision and language representations. This paper introduces a prior instruction representation (PIR) learning paradigm that draws on prior knowledge to instruct adaptive learning of vision and text representations. Based on PIR, a domain-adapted remote sensing image-text retrieval framework PIR-ITR is designed to address semantic noise issues in vision-language understanding tasks. However, with massive additional data for pre-training the vision-language foundation model, remote sensing image-text retrieval is further developed into an open-domain retrieval task. Building on this, we propose PIR-CLIP, a domain-specific CLIP-based framework for remote sensing image-text retrieval, to address semantic noise in remote sensing vision-language representations and further improve open-domain retrieval performance. In vision representation, Vision Instruction Representation (VIR) based on Spatial-PAE utilizes the prior-guided knowledge of the remote sensing scene recognition by building a belief matrix to select key features for reducing the impact of semantic noise. In text representation, Language Cycle Attention (LCA) based on Temporal-PAE uses the previous time step to cyclically activate the current time step to enhance text representation capability. A cluster-wise Affiliation Loss (AL) is proposed to constrain inter-class relations and to reduce the semantic confusion zones in the common subspace. Comprehensive experiments demonstrate that PIR could enhance vision and text representations and outperform the state-of-the-art methods of closed-domain and open-domain retrieval on two benchmark datasets, RSICD and RSITMD.

Little Strokes Fell Great Oaks: Boosting the Hierarchical Features for Multi-exposure Image Fusion

Apr 10, 2024

Abstract:In recent years, deep learning networks have made remarkable strides in the domain of multi-exposure image fusion. Nonetheless, prevailing approaches often involve directly feeding over-exposed and under-exposed images into the network, which leads to the under-utilization of inherent information present in the source images. Additionally, unsupervised techniques predominantly employ rudimentary weighted summation for color channel processing, culminating in an overall desaturated final image tone. To partially mitigate these issues, this study proposes a gamma correction module specifically designed to fully leverage latent information embedded within source images. Furthermore, a modified transformer block incorporating self-attention mechanisms is introduced to optimize the fusion process. Ultimately, a novel color enhancement algorithm is presented to augment image saturation while preserving intricate details. The source code is available at https://github.com/ZhiyingDu/BHFMEF.
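
For readers unfamiliar with gamma correction, the following sketch shows the standard power-law form applied to intensities normalized to [0, 1]. The abstract does not specify how the proposed module is built, so this is only the textbook operation; the function name `gamma_correct` and the sample values are hypothetical.

```python
def gamma_correct(pixels, gamma):
    """Apply out = in ** gamma to intensities normalized to [0, 1]."""
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    return [p ** gamma for p in pixels]


# Hypothetical under-exposed intensities; gamma < 1 lifts dark regions,
# while gamma > 1 would darken an over-exposed image instead.
under_exposed = [0.0, 0.04, 0.25, 1.0]
brightened = gamma_correct(under_exposed, 0.5)
print(brightened)
```

Black (0) and white (1) stay fixed under the power law, so only the mid-tones are redistributed.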

Direction-Oriented Visual-semantic Embedding Model for Remote Sensing Image-text Retrieval

Oct 12, 2023

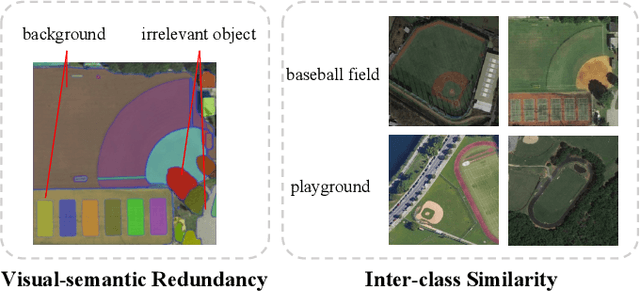

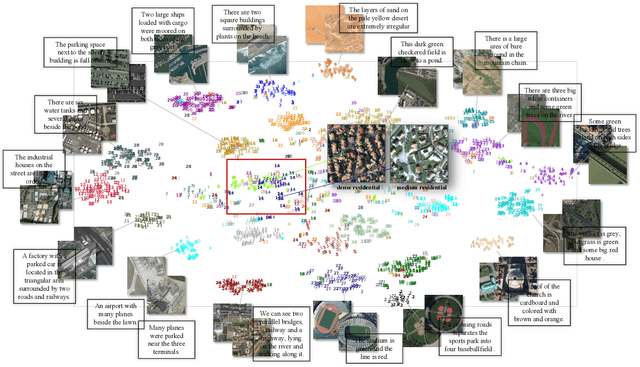

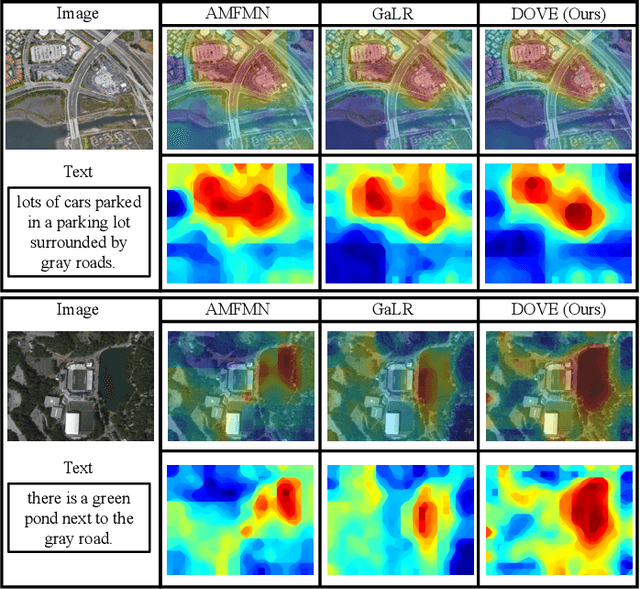

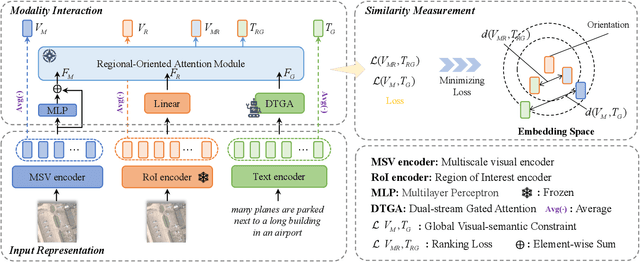

Abstract:Image-text retrieval has developed rapidly in recent years. However, it is still a challenge in remote sensing due to visual-semantic imbalance, which leads to incorrect matching of non-semantic visual and textual features. To solve this problem, we propose a novel Direction-Oriented Visual-semantic Embedding Model (DOVE) to mine the relationship between vision and language. Concretely, a Regional-Oriented Attention Module (ROAM) adaptively adjusts the distance between the final visual and textual embeddings in the latent semantic space, oriented by regional visual features. Meanwhile, a lightweight Digging Text Genome Assistant (DTGA) is designed to expand the range of tractable textual representation and enhance global word-level semantic connections using fewer attention operations. Ultimately, we exploit a global visual-semantic constraint to reduce single visual dependency and serve as an external constraint for the final visual and textual representations. The effectiveness and superiority of our method are verified by extensive experiments including parameter evaluation, quantitative comparison, ablation studies and visual analysis, on two benchmark datasets, RSICD and RSITMD.

A Generalized Physical-knowledge-guided Dynamic Model for Underwater Image Enhancement

Aug 10, 2023

Abstract:Underwater images often suffer from color distortion and low contrast resulting in various image types, due to the scattering and absorption of light by water. Moreover, it is difficult to obtain high-quality paired training samples for training a generalized model. To tackle these challenges, we design a Generalized Underwater image enhancement method via a Physical-knowledge-guided Dynamic Model (short for GUPDM), consisting of three parts: Atmosphere-based Dynamic Structure (ADS), Transmission-guided Dynamic Structure (TDS), and Prior-based Multi-scale Structure (PMS). In particular, to cover complex underwater scenes, this study changes the global atmosphere light and the transmission to simulate various underwater image types (e.g., the underwater image color ranging from yellow to blue) through the formation model. We then design ADS and TDS that use dynamic convolutions to adaptively extract prior information from underwater images and generate parameters for PMS. These two modules enable the network to select appropriate parameters for various water types adaptively. Besides, the multi-scale feature extraction module in PMS uses convolution blocks with different kernel sizes, obtains weights for each feature map via a channel attention block, and fuses them to boost the receptive field of the network. The source code will be available at https://github.com/shiningZZ/GUPDM.
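
Simulating different water types by varying the global atmosphere light and the transmission can be sketched with the widely used simplified formation model, I = J * t + A * (1 - t) per color channel. The abstract does not state the exact formula, so treating it as this model is an assumption; the function name `degrade` and all numeric values are hypothetical.

```python
def degrade(clean_rgb, transmission, ambient_rgb):
    """Simplified formation model, per channel: I = J * t + A * (1 - t).

    clean_rgb:    scene radiance J for one pixel, values in [0, 1]
    transmission: per-channel transmission t, values in [0, 1]
    ambient_rgb:  global atmosphere (background) light A, values in [0, 1]
    """
    return tuple(
        j * t + a * (1.0 - t)
        for j, t, a in zip(clean_rgb, transmission, ambient_rgb)
    )


clean = (0.8, 0.6, 0.4)

# Hypothetical bluish water: low red transmission, blue-dominated ambient light.
bluish = degrade(clean, (0.2, 0.7, 0.9), (0.1, 0.5, 0.8))

# Hypothetical yellowish water: low blue transmission, yellow-dominated light.
yellowish = degrade(clean, (0.8, 0.7, 0.2), (0.7, 0.6, 0.1))

print(bluish, yellowish)
```

Sweeping the transmission and ambient-light values over a range of settings is one way to produce a variety of degraded image types from the same clean image.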

Towards General and Fast Video Derain via Knowledge Distillation

Aug 10, 2023

Abstract:As a common natural weather condition, rain can obscure video frames and thus affect the performance of the visual system, so video derain receives a lot of attention. In natural environments, rain has a wide variety of streak types, which increases the difficulty of the rain removal task. In this paper, we propose a Rain Review-based General video derain Network via knowledge distillation (named RRGNet) that handles different rain streak types with a single set of pre-trained weights. Specifically, we design a frame grouping-based encoder-decoder network that makes full use of the temporal information of the video. Further, we use the old task model to guide the current model in learning new rain streak types while avoiding forgetting. To consolidate the network's ability to derain, we design a rain review module to play back data from old tasks for the current model. The experimental results show that our general method achieves the best results in terms of running speed and derain effect.
