Sanjit Seshia

Locally Pareto-Optimal Interpretations for Black-Box Machine Learning Models

Aug 21, 2025Abstract:Creating meaningful interpretations for black-box machine learning models involves balancing two often conflicting objectives: accuracy and explainability. Exploring the trade-off between these objectives is essential for developing trustworthy interpretations. While many techniques for multi-objective interpretation synthesis have been developed, they typically lack formal guarantees on the Pareto-optimality of the results. Methods that do provide such guarantees, on the other hand, often face severe scalability limitations when exploring the Pareto-optimal space. To address this, we develop a framework based on local optimality guarantees that enables more scalable synthesis of interpretations. Specifically, we consider the problem of synthesizing a set of Pareto-optimal interpretations with local optimality guarantees, within the immediate neighborhood of each solution. Our approach begins with a multi-objective learning or search technique, such as Multi-Objective Monte Carlo Tree Search, to generate a best-effort set of Pareto-optimal candidates with respect to accuracy and explainability. We then verify local optimality for each candidate as a Boolean satisfiability problem, which we solve using a SAT solver. We demonstrate the efficacy of our approach on a set of benchmarks, comparing it against previous methods for exploring the Pareto-optimal front of interpretations. In particular, we show that our approach yields interpretations that closely match those synthesized by methods offering global guarantees.

Towards Guaranteed Safe AI: A Framework for Ensuring Robust and Reliable AI Systems

May 10, 2024

Abstract:Ensuring that AI systems reliably and robustly avoid harmful or dangerous behaviours is a crucial challenge, especially for AI systems with a high degree of autonomy and general intelligence, or systems used in safety-critical contexts. In this paper, we will introduce and define a family of approaches to AI safety, which we will refer to as guaranteed safe (GS) AI. The core feature of these approaches is that they aim to produce AI systems which are equipped with high-assurance quantitative safety guarantees. This is achieved by the interplay of three core components: a world model (which provides a mathematical description of how the AI system affects the outside world), a safety specification (which is a mathematical description of what effects are acceptable), and a verifier (which provides an auditable proof certificate that the AI satisfies the safety specification relative to the world model). We outline a number of approaches for creating each of these three core components, describe the main technical challenges, and suggest a number of potential solutions to them. We also argue for the necessity of this approach to AI safety, and for the inadequacy of the main alternative approaches.

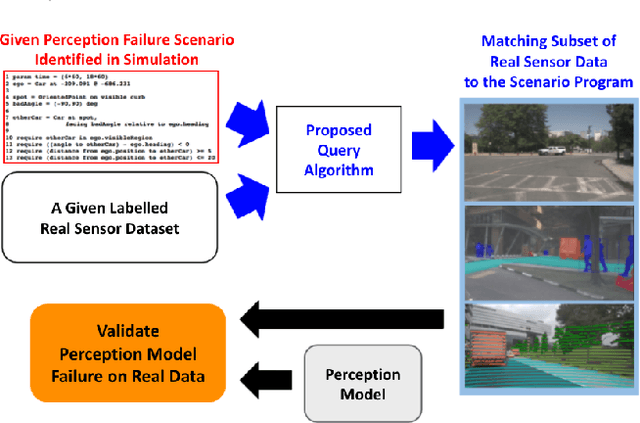

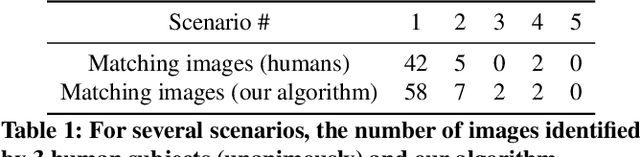

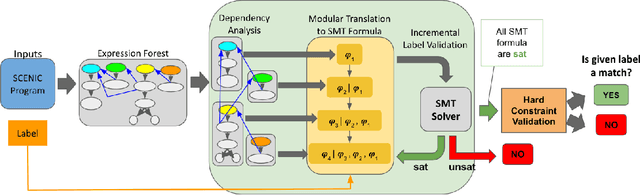

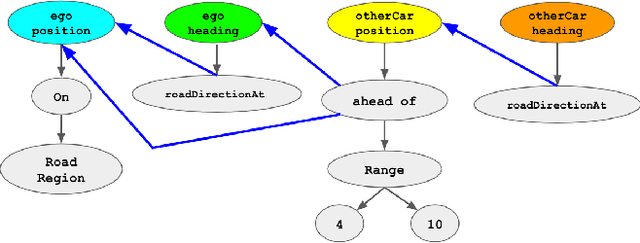

Querying Labelled Data with Scenario Programs for Sim-to-Real Validation

Dec 01, 2021

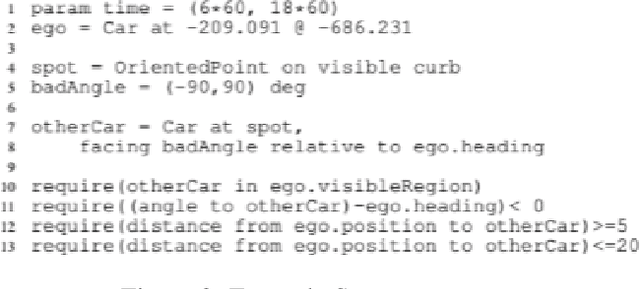

Abstract:Simulation-based testing of autonomous vehicles (AVs) has become an essential complement to road testing to ensure safety. Consequently, substantial research has focused on searching for failure scenarios in simulation. However, a fundamental question remains: are AV failure scenarios identified in simulation meaningful in reality, i.e., are they reproducible on the real system? Due to the sim-to-real gap arising from discrepancies between simulated and real sensor data, a failure scenario identified in simulation can be either a spurious artifact of the synthetic sensor data or an actual failure that persists with real sensor data. An approach to validate simulated failure scenarios is to identify instances of the scenario in a corpus of real data, and check if the failure persists on the real data. To this end, we propose a formal definition of what it means for a labelled data item to match an abstract scenario, encoded as a scenario program using the SCENIC probabilistic programming language. Using this definition, we develop a querying algorithm which, given a scenario program and a labelled dataset, finds the subset of data matching the scenario. Experiments demonstrate that our algorithm is accurate and efficient on a variety of realistic traffic scenarios, and scales to a reasonable number of agents.

Entropy-Guided Control Improvisation

Mar 09, 2021

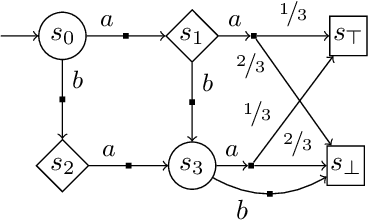

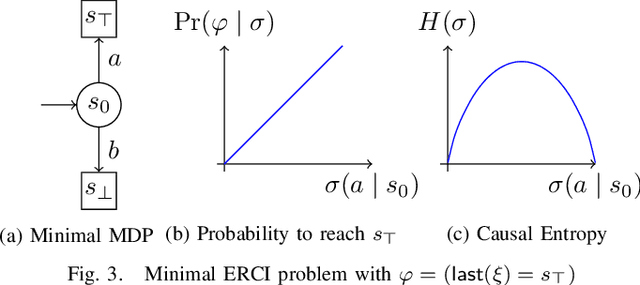

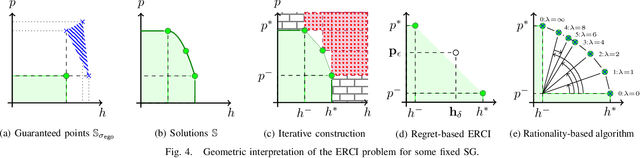

Abstract:High level declarative constraints provide a powerful (and popular) way to define and construct control policies; however, most synthesis algorithms do not support specifying the degree of randomness (unpredictability) of the resulting controller. In many contexts, e.g., patrolling, testing, behavior prediction, and planning on idealized models, predictable or biased controllers are undesirable. To address these concerns, we introduce the \emph{Entropic Reactive Control Improvisation} (ERCI) framework and algorithm that supports synthesizing control policies for stochastic games that are declaratively specified by (i) a \emph{hard constraint} specifying what must occur (ii) a \emph{soft constraint} specifying what typically occurs, and (iii) a \emph{randomization constraint} specifying the unpredictability and variety of the controller, as quantified using causal entropy. This framework, which extends the state-of-the-art by supporting arbitrary combinations of adversarial and probabilistic uncertainty in the environment, enables a flexible modeling formalism which we argue, theoretically and empirically, remains tractable.

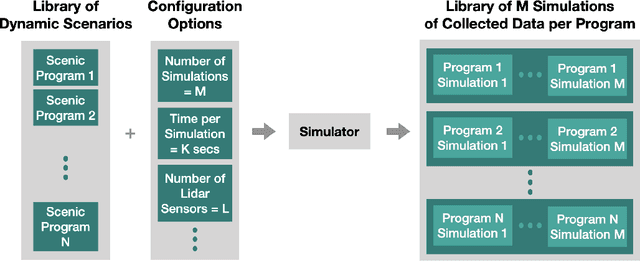

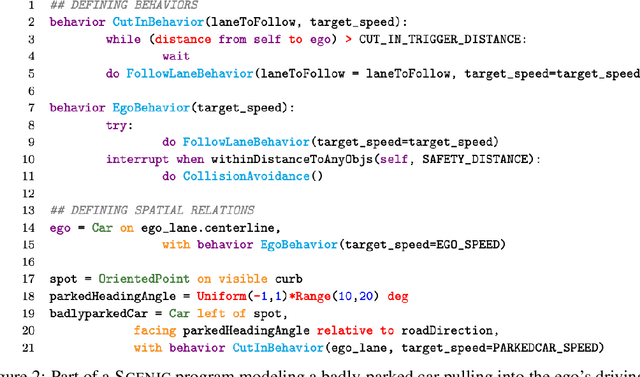

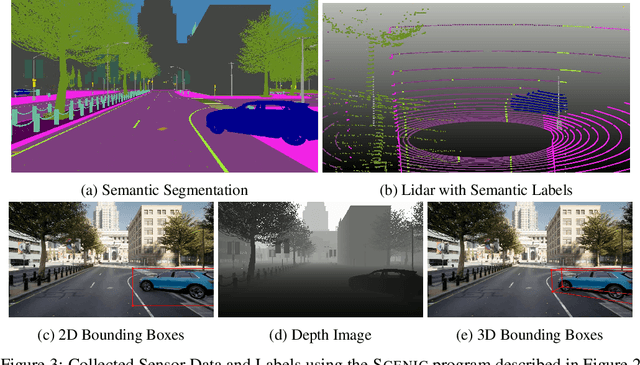

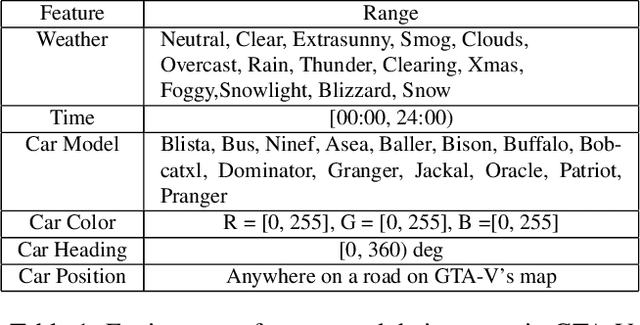

A Customizable Dynamic Scenario Modeling and Data Generation Platform for Autonomous Driving

Nov 30, 2020

Abstract:Safely interacting with humans is a significant challenge for autonomous driving. The performance of this interaction depends on machine learning-based modules of an autopilot, such as perception, behavior prediction, and planning. These modules require training datasets with high-quality labels and a diverse range of realistic dynamic behaviors. Consequently, training such modules to handle rare scenarios is difficult because they are, by definition, rarely represented in real-world datasets. Hence, there is a practical need to augment datasets with synthetic data covering these rare scenarios. In this paper, we present a platform to model dynamic and interactive scenarios, generate the scenarios in simulation with different modalities of labeled sensor data, and collect this information for data augmentation. To our knowledge, this is the first integrated platform for these tasks specialized to the autonomous driving domain.

A Programmatic and Semantic Approach to Explaining and DebuggingNeural Network Based Object Detectors

Dec 01, 2019

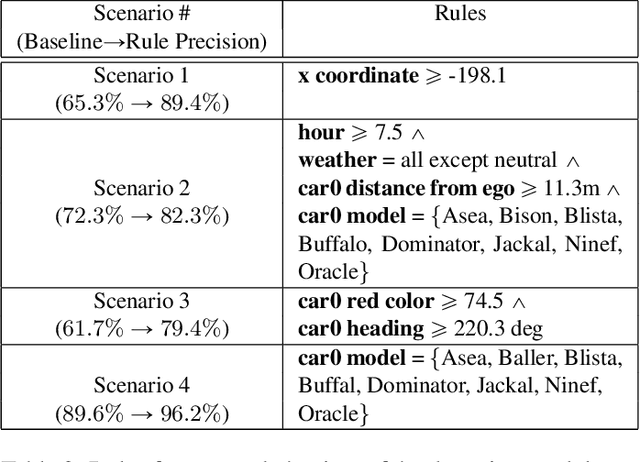

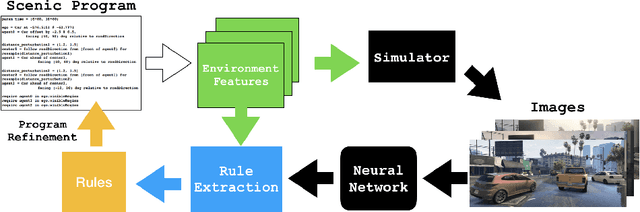

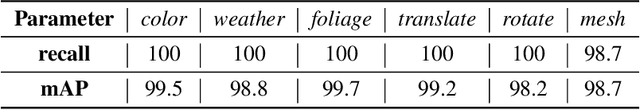

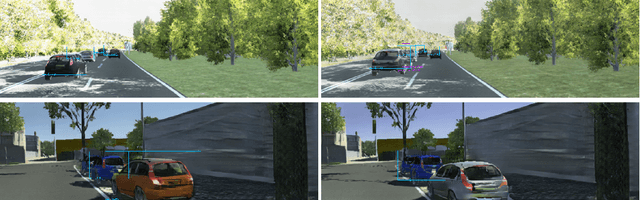

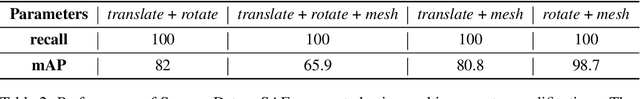

Abstract:Even as deep neural networks have become very effective for tasks in vision and perception, it remains difficult to explain and debug their behavior. In this paper, we present a programmatic and semantic approach to explaining, understanding, and debugging the correct and incorrect behaviors of a neural network based perception system. Our approach is semantic in that it employs a high-level representation of the distribution of environment scenarios that the detector is intended to work on. It is programmatic in that the representation is a program in a domain-specific probabilistic programming language using which synthetic data can be generated to train and test the neural network. We present a framework that assesses the performance of the neural network to identify correct and incorrect detections, extracts rules from those results that semantically characterizes the correct and incorrect scenarios, and then specializes the probabilistic program with those rules in order to more precisely characterize the scenarios in which the neural network operates correctly or not, without human intervention to identify important features. We demonstrate our results using the SCENIC probabilistic programming language and a neural network-based object detector. Our experiments show that it is possible to automatically generate compact rules that significantly increase the correct detection rate (or conversely the incorrect detection rate) of the network and can thus help with debugging and understanding its behavior.

Generating Semantic Adversarial Examples with Differentiable Rendering

Oct 02, 2019

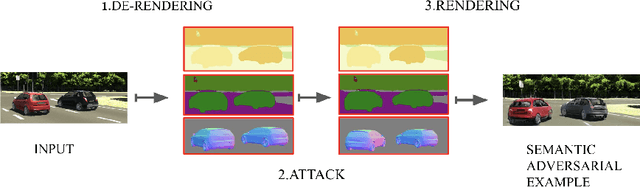

Abstract:Machine learning (ML) algorithms, especially deep neural networks, have demonstrated success in several domains. However, several types of attacks have raised concerns about deploying ML in safety-critical domains, such as autonomous driving and security. An attacker perturbs a data point slightly in the concrete feature space (e.g., pixel space) and causes the ML algorithm to produce incorrect output (e.g. a perturbed stop sign is classified as a yield sign). These perturbed data points are called adversarial examples, and there are numerous algorithms in the literature for constructing adversarial examples and defending against them. In this paper we explore semantic adversarial examples (SAEs) where an attacker creates perturbations in the semantic space representing the environment that produces input for the ML model. For example, an attacker can change the background of the image to be cloudier to cause misclassification. We present an algorithm for constructing SAEs that uses recent advances in differential rendering and inverse graphics.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge