Ruitao Xie

A Weakly Supervised and Globally Explainable Learning Framework for Brain Tumor Segmentation

Aug 02, 2024

Abstract: Machine-based brain tumor segmentation can help doctors make better diagnoses. However, the complex structure of brain tumors and the cost of pixel-level annotations present challenges for automatic tumor segmentation. In this paper, we propose a counterfactual generation framework that not only achieves exceptional brain tumor segmentation performance without the need for pixel-level annotations, but also provides explainability. Our framework effectively separates class-related features from class-unrelated features of the samples, and generates new samples that preserve identity features while altering class attributes by embedding different class-related features. We perform topological data analysis on the extracted class-related features and obtain a globally explainable manifold. For each abnormal sample to be segmented, a meaningful normal sample can be generated under the guidance of rule-based paths designed within the manifold, and comparing the two identifies the tumor regions. We evaluate our proposed method on two datasets, where it demonstrates superior brain tumor segmentation performance. The code is available at https://github.com/xrt11/tumor-segmentation.
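
The comparison step described in this abstract can be illustrated with a minimal sketch. It assumes the generated normal counterpart is already available as an array, and it uses a simple normalized absolute difference with a fixed threshold. The function name `difference_mask` and the `threshold` parameter are hypothetical and are not taken from the authors' repository, whose actual comparison procedure may differ.

```python
import numpy as np

def difference_mask(abnormal, generated_normal, threshold=0.5):
    """Return a boolean mask of pixels where the two images differ strongly.

    Assumption: both inputs are arrays of the same shape. The threshold is
    applied to the absolute difference after scaling it to the range [0, 1].
    """
    diff = np.abs(abnormal.astype(np.float64) - generated_normal.astype(np.float64))
    peak = diff.max()
    if peak == 0:
        # Identical images: no candidate region at all.
        return np.zeros(diff.shape, dtype=bool)
    return (diff / peak) >= threshold

# Toy example: a bright 2x2 patch stands in for the abnormal region.
abnormal = np.zeros((4, 4))
abnormal[1:3, 1:3] = 1.0
normal = np.zeros((4, 4))
mask = difference_mask(abnormal, normal)
```

In this toy case the mask covers exactly the 2x2 patch. On real scans a fixed threshold is a crude choice, so the value would need tuning per dataset.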

Accurate Explanation Model for Image Classifiers using Class Association Embedding

Jun 12, 2024

Abstract: Image classification is a primary task in data analysis, where explainable models are in high demand across various applications. Although many methods have been proposed to obtain explainable knowledge from black-box classifiers, these approaches are inefficient at extracting global knowledge about the classification task, and are thus vulnerable to local traps and often lead to poor accuracy. In this study, we propose a generative explanation model that combines the advantages of global and local knowledge for explaining image classifiers. We develop a representation learning method called class association embedding (CAE), which encodes each sample into a pair of separated class-associated and individual codes. Recombining the individual code of a given sample with an altered class-associated code leads to a synthetic, real-looking sample with preserved individual characteristics but modified class-associated features and possibly a flipped class assignment. A building-block coherency feature extraction algorithm is proposed that efficiently separates class-associated features from individual ones. The extracted feature space forms a low-dimensional manifold that visualizes the classification decision patterns. An explanation for each individual sample can then be obtained in a counterfactual generation manner, which continuously modifies the sample in one direction by shifting its class-associated code along a guided path until its classification outcome changes. We compare our method with state-of-the-art ones on explaining image classification tasks in the form of saliency maps, demonstrating that our method achieves higher accuracy. The code is available at https://github.com/xrt11/XAI-CODE.
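
The idea of shifting a class-associated code until the classification outcome changes can be sketched as follows. This is a simplified illustration, not the authors' method: it replaces the guided path on the learned manifold with straight-line interpolation toward a target code, and it uses a toy threshold classifier in place of a trained model. The names `shift_until_flip`, `classify`, and `num_steps` are hypothetical.

```python
import numpy as np

def shift_until_flip(class_code, target_code, classify, num_steps=20):
    """Move class_code toward target_code until the predicted label changes.

    Assumption: the path is a straight line between the two codes. Returns
    the first code whose label differs from the starting label together with
    the step index, or (None, num_steps) if no flip occurs.
    """
    start_label = classify(class_code)
    for step in range(1, num_steps + 1):
        t = step / num_steps
        candidate = (1 - t) * class_code + t * target_code
        if classify(candidate) != start_label:
            return candidate, step
    return None, num_steps

# Toy classifier: the label depends only on the sign of the first coordinate.
toy_classify = lambda code: int(code[0] > 0)
start = np.array([-1.0, 0.0])
target = np.array([1.0, 0.0])
flipped_code, flip_step = shift_until_flip(start, target, toy_classify, num_steps=10)
```

In the full framework, the shifted code would be recombined with the sample's individual code and decoded into an image at each step. That decoding stage is omitted here.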

Finding Beautiful and Happy Images for Mental Health and Well-being Applications

Apr 28, 2024

Abstract: This paper explores how artificial intelligence (AI) technology can contribute to progress on good health and well-being, one of the United Nations' 17 Sustainable Development Goals. It is estimated that one in ten people globally lives with a mental disorder. Inspired by studies showing that engaging with and viewing beautiful natural images can make people feel happier and less stressed, lead to higher emotional well-being, and even have therapeutic value, we explore how AI can help to promote mental health by developing automatic algorithms for finding beautiful and happy images. We first construct a large image database consisting of nearly 20K very high resolution colour photographs of natural scenes, where each image is labelled with beautifulness and happiness scores by about 10 observers. Statistics of the database show that there is a good correlation between the beautifulness and happiness scores, which provides anecdotal evidence to corroborate that engaging with beautiful natural images can potentially benefit mental well-being. Building on this unique database, the very first of its kind, we have developed a deep learning based model for automatically predicting the beautifulness and happiness scores of natural images. Experimental results are presented to show that it is possible to develop AI algorithms to automatically assess an image's beautifulness and happiness values, which can in turn be used to develop applications for promoting mental health and well-being.

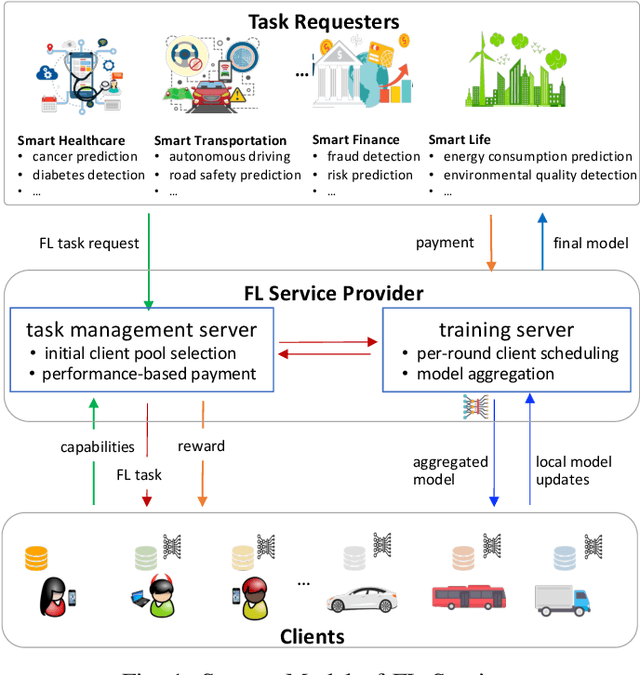

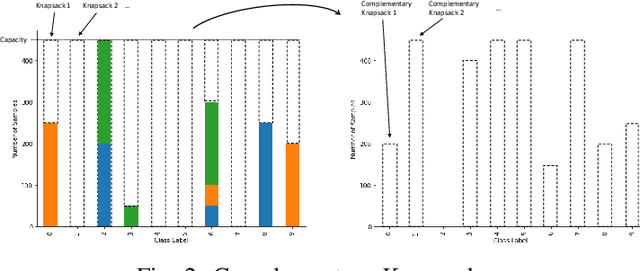

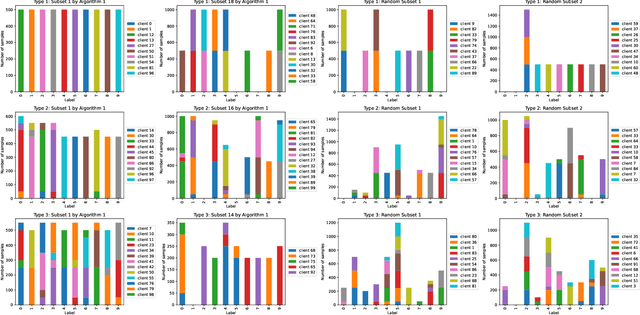

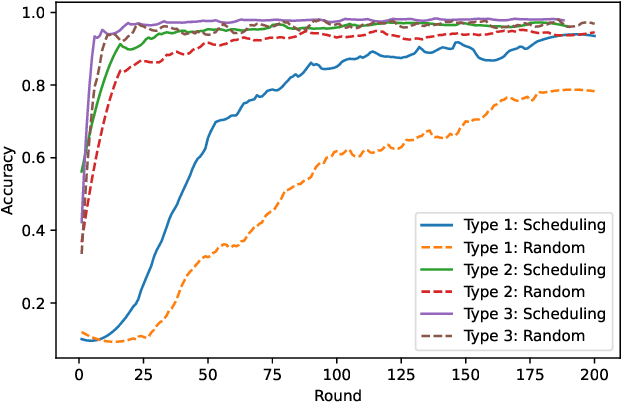

Multi-Criteria Client Selection and Scheduling with Fairness Guarantee for Federated Learning Service

Dec 05, 2023

Abstract: Federated Learning (FL) enables multiple clients to train machine learning models collaboratively without sharing the raw training data. However, for a given FL task, how to select a group of appropriate clients fairly becomes a challenging problem due to budget restrictions and client heterogeneity. In this paper, we propose a multi-criteria client selection and scheduling scheme with a fairness guarantee, comprising two stages: 1) preliminary client pool selection, and 2) per-round client scheduling. Specifically, we first define a client selection metric informed by several criteria, such as client resources, data quality, and client behaviors. Then, we formulate the initial client pool selection problem as an optimization problem that aims to maximize the overall scores of selected clients within a given budget, and propose a greedy algorithm to solve it. To guarantee fairness, we further formulate the per-round client scheduling problem and propose a heuristic algorithm to divide the client pool into several subsets such that every client is selected at least once, while guaranteeing that the 'integrated' dataset in a subset is close to independent and identically distributed (iid). Our experimental results show that our scheme can improve the model quality, especially when data are non-iid.
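
The first stage, choosing a client pool that maximizes total score within a budget, resembles a knapsack problem. The sketch below shows one common greedy heuristic for such problems: rank by score per unit cost, then take every client that still fits. The abstract does not specify the authors' greedy rule, so the ratio ordering, the `(name, score, cost)` tuple layout, and the function name `greedy_select` are assumptions made for illustration.

```python
def greedy_select(clients, budget):
    """Pick clients greedily by score-to-cost ratio within a budget.

    Assumption: each client is a (name, score, cost) tuple with cost > 0.
    Returns the names of the selected clients in selection order.
    """
    ranked = sorted(clients, key=lambda c: c[1] / c[2], reverse=True)
    chosen = []
    remaining = budget
    for name, score, cost in ranked:
        if cost <= remaining:
            chosen.append(name)
            remaining -= cost
    return chosen

# Toy pool: ratios are a = 2.0, b = 3.0, c = 1.0.
pool = [("a", 10, 5), ("b", 6, 2), ("c", 4, 4)]
selected = greedy_select(pool, budget=7)
```

With a budget of 7, client b is taken first, then a, and c no longer fits. A ratio-based greedy pass is fast but does not guarantee an optimal pool in general.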

Autism Spectrum Disorder Classification in Children based on Structural MRI Features Extracted using Contrastive Variational Autoencoder

Jul 03, 2023

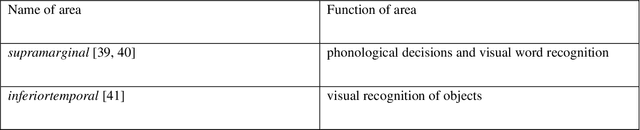

Abstract: Autism spectrum disorder (ASD) is a highly disabling mental disease that significantly impairs patients' social interaction ability, making early screening and intervention critical. With the development of machine learning and neuroimaging technology, extensive research has been conducted on machine classification of ASD based on structural MRI (s-MRI). However, most studies involve datasets in which participants are older than 5 years. A few studies classify ASD in participants younger than 5 years, but with mediocre predictive accuracy. In this paper, we push the boundary of predictive accuracy (above 0.97) of machine classification of ASD in children (age range: 0.92-4.83 years), based on s-MRI features extracted using a contrastive variational autoencoder (CVAE). A total of 78 s-MRI scans, collected from Shenzhen Children's Hospital, are used for training the CVAE, which consists of both an ASD-specific feature channel and a common shared feature channel. The ASD participants represented by ASD-specific features can be easily discriminated from TC participants represented by the common shared features, leading to high classification accuracy. To address degraded predictive accuracy when the data size is extremely small, a transfer learning strategy is proposed as a potential solution. Finally, we conduct neuroanatomical interpretation based on the correlation between s-MRI features extracted from the CVAE and the surface area of different cortical regions, which discloses potential biomarkers that could help target treatments of ASD in the future.

Active Globally Explainable Learning for Medical Images via Class Association Embedding and Cyclic Adversarial Generation

Jun 12, 2023

Abstract: Explainability poses a major challenge to artificial intelligence (AI) techniques. Current studies on explainable AI (XAI) are inefficient at extracting global knowledge about the learning task, and thus suffer from deficiencies such as imprecise saliency, lack of context awareness, and vague meaning. In this paper, we propose the class association embedding (CAE) approach to address these issues. We employ an encoder-decoder architecture to embed sample features and separate them into class-related and individual-related style vectors simultaneously. Recombining the individual-style code of a given sample with the class-style code of another leads to a synthetic sample with preserved individual characteristics but a changed class assignment, following a cyclic adversarial learning strategy. Class association embedding distills the global class-related features of all instances into a unified domain with good separation between classes. The transition rules between different classes can then be extracted and applied to individual instances. We then propose an active XAI framework which manipulates the class-style vector of a given sample along guided paths towards the counter-classes, resulting in a series of counter-example synthetic samples with identical individual characteristics. Comparing these counterfactual samples with the original ones provides a global, intuitive illustration of the nature of the classification tasks. We apply the framework to medical image classification tasks; the results show that more precise saliency maps with powerful context-aware representation can be achieved compared with existing methods. Moreover, the disease pathology can be directly visualized by traversing the paths in the class-style space.

AGE Challenge: Angle Closure Glaucoma Evaluation in Anterior Segment Optical Coherence Tomography

May 05, 2020

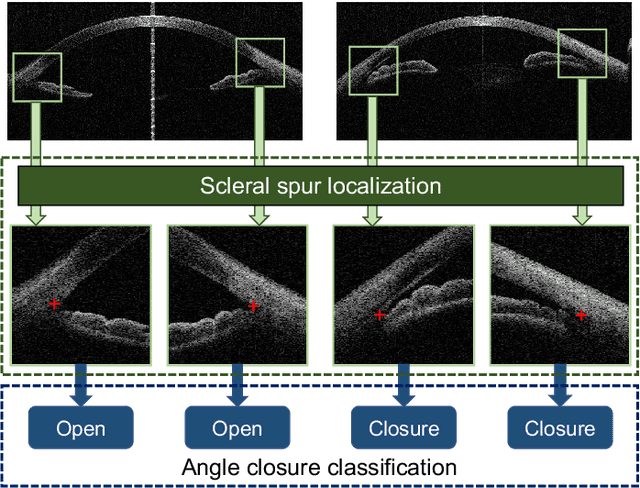

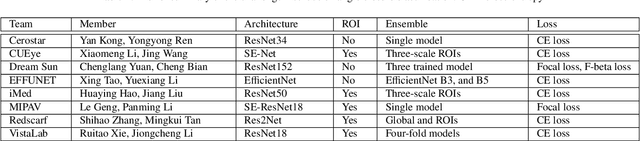

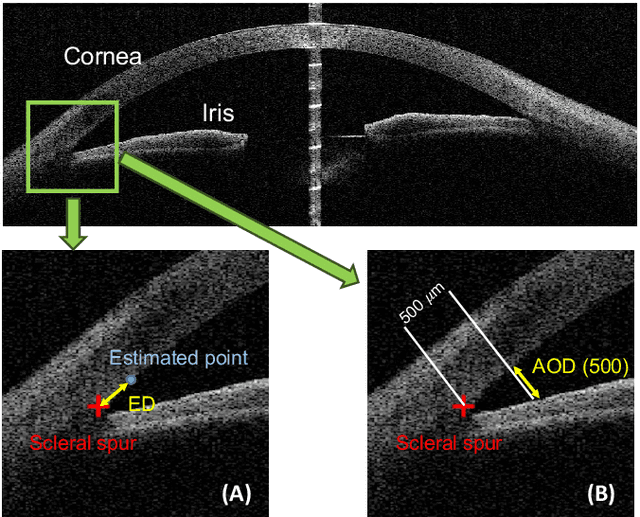

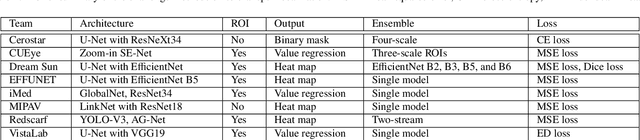

Abstract: Angle closure glaucoma (ACG) is a more aggressive disease than open-angle glaucoma, where the abnormal anatomical structures of the anterior chamber angle (ACA) may cause an elevated intraocular pressure and gradually lead to glaucomatous optic neuropathy and eventually to visual impairment and blindness. Anterior Segment Optical Coherence Tomography (AS-OCT) imaging provides a fast and contactless way to discriminate angle closure from open angle. Although many medical image analysis algorithms have been developed for glaucoma diagnosis, only a few studies have focused on AS-OCT imaging. In particular, there is no public AS-OCT dataset available for evaluating the existing methods in a uniform way, which limits progress in the development of automated techniques for angle closure detection and assessment. To address this, we organized the Angle closure Glaucoma Evaluation challenge (AGE), held in conjunction with MICCAI 2019. The AGE challenge consisted of two tasks: scleral spur localization and angle closure classification. For this challenge, we released a large dataset of 4800 annotated AS-OCT images from 199 patients, and also proposed an evaluation framework to benchmark and compare different models. During the AGE challenge, over 200 teams registered online, and more than 1100 results were submitted for online evaluation. Finally, eight teams participated in the onsite challenge. In this paper, we summarize these eight onsite challenge methods and analyze their corresponding results in the two tasks. We further discuss limitations and future directions. In the AGE challenge, the top-performing approach had an average Euclidean distance of 10 pixels in scleral spur localization, while in the task of angle closure classification, all the algorithms achieved satisfactory performance, with the top two reaching a 100% accuracy rate.
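
The localization metric mentioned in this abstract, the average Euclidean distance in pixels, can be computed as in the short sketch below. It assumes predictions and reference annotations are given as matching lists of `(x, y)` pixel coordinates. The function name `mean_euclidean_distance` is hypothetical, and the challenge's official evaluation code may differ in detail.

```python
import math

def mean_euclidean_distance(predicted, reference):
    """Average Euclidean distance between paired 2D points, in pixels.

    Assumption: both arguments are non-empty sequences of (x, y) pairs of
    equal length, where item i of each sequence refers to the same landmark.
    """
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(math.dist(p, r) for p, r in zip(predicted, reference))
    return total / len(predicted)

# Toy example: one point is off by a 3-4-5 triangle, the other is exact.
pred_points = [(0, 0), (3, 4)]
ref_points = [(3, 4), (3, 4)]
avg_distance = mean_euclidean_distance(pred_points, ref_points)
```

Here the two distances are 5 and 0 pixels, so the average is 2.5 pixels.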
