Quoc Dang Vu

StainFuser: Controlling Diffusion for Faster Neural Style Transfer in Multi-Gigapixel Histology Images

Mar 14, 2024

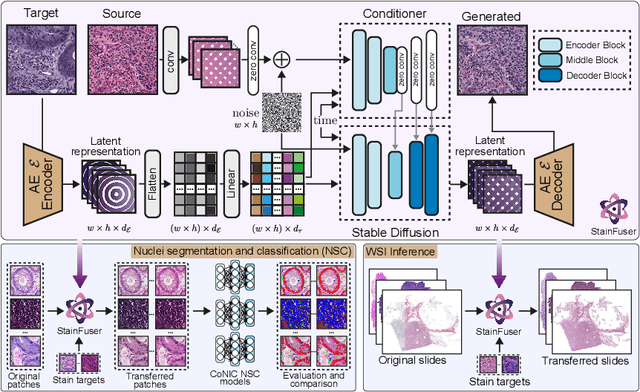

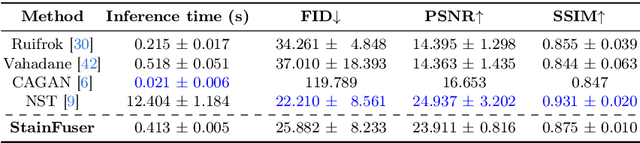

Abstract:Stain normalization algorithms aim to transform the color and intensity characteristics of a source multi-gigapixel histology image to match those of a target image, mitigating inconsistencies in the appearance of stains used to highlight cellular components in the images. We propose a new approach, StainFuser, which treats this problem as a style transfer task using a novel Conditional Latent Diffusion architecture, eliminating the need for handcrafted color components. With this method, we curate SPI-2M, the largest stain normalization dataset to date, comprising over 2 million histology images with neural style transfer for high-quality transformations. Trained on this data, StainFuser outperforms current state-of-the-art GAN and handcrafted methods in terms of the quality of normalized images. Additionally, compared to existing approaches, it improves the performance of nuclei instance segmentation and classification models when used as a test-time augmentation method on the challenging CoNIC dataset. Finally, we apply StainFuser on multi-gigapixel Whole Slide Images (WSIs) and demonstrate improved performance in terms of computational efficiency, image quality and consistency across tiles over current methods.

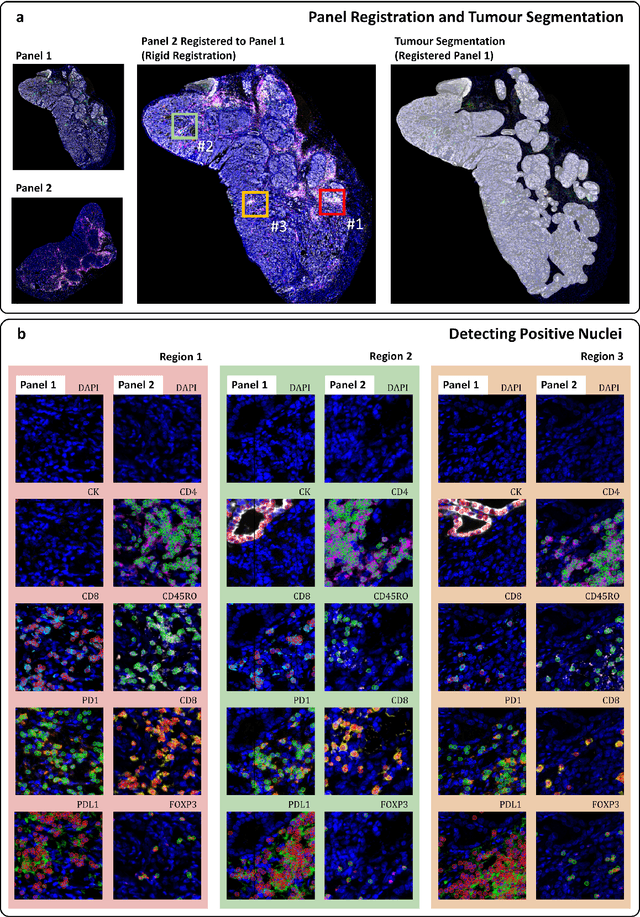

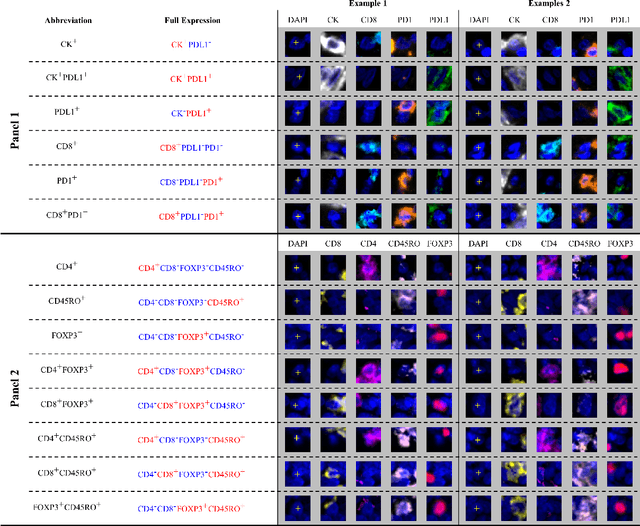

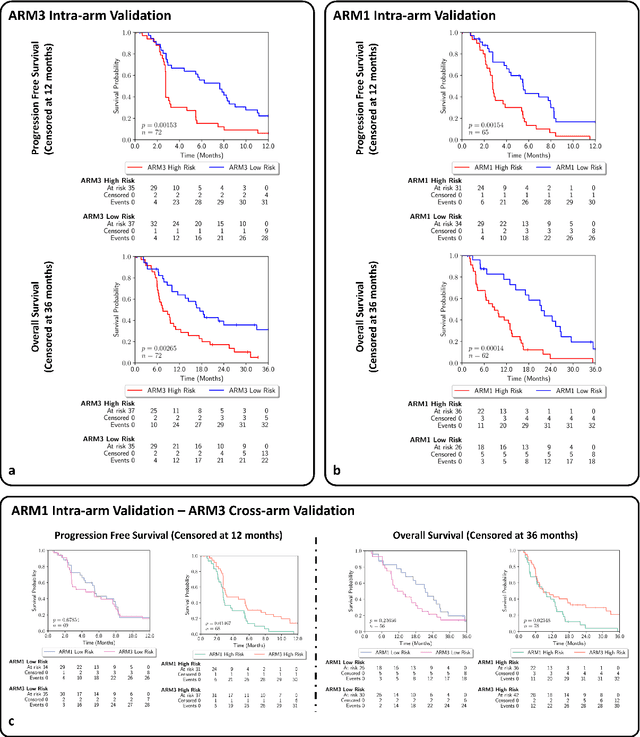

An AI based Digital Score of Tumour-Immune Microenvironment Predicts Benefit to Maintenance Immunotherapy in Advanced Oesophagogastric Adenocarcinoma

Feb 29, 2024

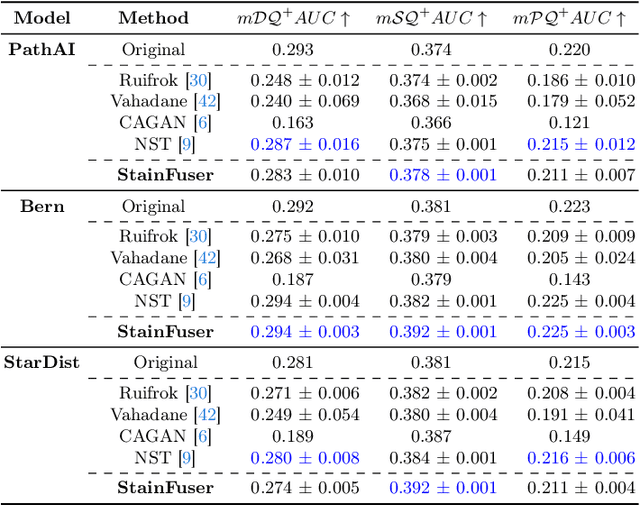

Abstract:Gastric and oesophageal (OG) cancers are the leading causes of cancer mortality worldwide. In OG cancers, recent studies have shown that PDL1 immune checkpoint inhibitors (ICI) in combination with chemotherapy improve patient survival. However, our understanding of the tumour immune microenvironment in OG cancers remains limited. In this study, we interrogate multiplex immunofluorescence (mIF) images taken from patients with advanced Oesophagogastric Adenocarcinoma (OGA) who received first-line fluoropyrimidine and platinum-based chemotherapy in the PLATFORM trial (NCT02678182) to predict the efficacy of the treatment and to explore the biological basis of patients responding to maintenance durvalumab (PDL1 inhibitor). Our proposed Artificial Intelligence (AI) based marker successfully distinguished responders from non-responders (p < 0.05) as well as those who could potentially benefit from ICI with statistical significance (p < 0.05) for both progression-free and overall survival. Our findings suggest that T cells that express FOXP3 seem to heavily influence the patient treatment response and survival outcome. We also observed that higher levels of CD8+PD1+ cells are consistently linked to poor prognosis for both OS and PFS, regardless of ICI.

CoNIC Challenge: Pushing the Frontiers of Nuclear Detection, Segmentation, Classification and Counting

Mar 14, 2023

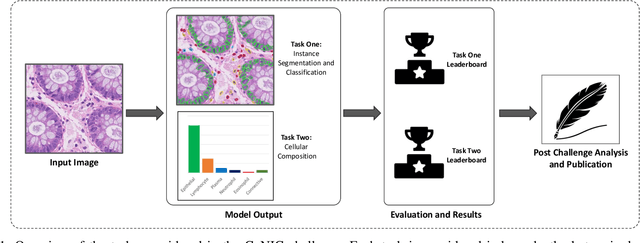

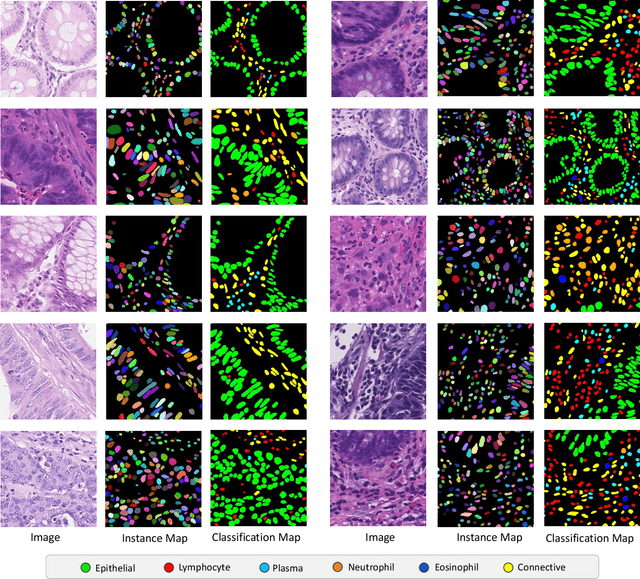

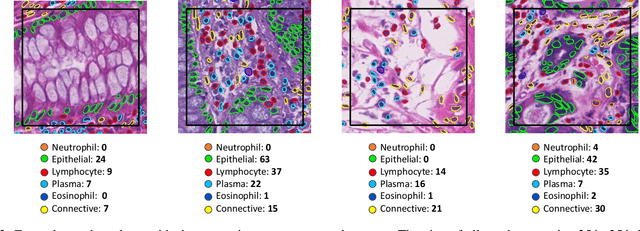

Abstract:Nuclear detection, segmentation and morphometric profiling are essential in helping us further understand the relationship between histology and patient outcome. To drive innovation in this area, we set up a community-wide challenge using the largest available dataset of its kind to assess nuclear segmentation and cellular composition. Our challenge, named CoNIC, stimulated the development of reproducible algorithms for cellular recognition with real-time result inspection on public leaderboards. We conducted an extensive post-challenge analysis based on the top-performing models using 1,658 whole-slide images of colon tissue. With around 700 million detected nuclei per model, associated features were used for dysplasia grading and survival analysis, where we demonstrated that the challenge's improvement over the previous state-of-the-art led to significant boosts in downstream performance. Our findings also suggest that eosinophils and neutrophils play an important role in the tumour microenvironment. We release challenge models and WSI-level results to foster the development of further methods for biomarker discovery.

Nuclear Segmentation and Classification: On Color & Compression Generalization

Jan 09, 2023

Abstract:Since the introduction of digital and computational pathology as a field, one of the major problems in the clinical application of algorithms has been the struggle to generalize well to examples outside the distribution of the training data. Existing work to address this in both pathology and natural images has focused almost exclusively on classification tasks. We explore and evaluate the robustness of the 7 best performing nuclear segmentation and classification models from the largest computational pathology challenge for this problem to date, the CoNIC challenge. We demonstrate that existing state-of-the-art (SoTA) models are robust towards compression artifacts but suffer substantial performance reduction when subjected to shifts in the color domain. We find that using stain normalization to address the domain shift problem can be detrimental to the model performance. On the other hand, neural style transfer is more consistent in improving test performance when presented with large color variations in the wild.

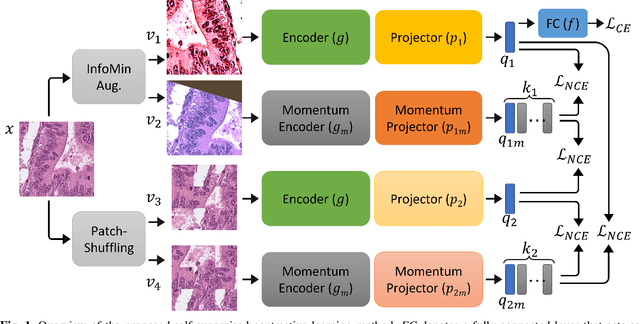

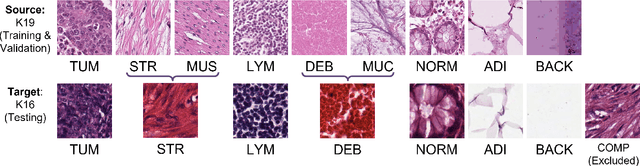

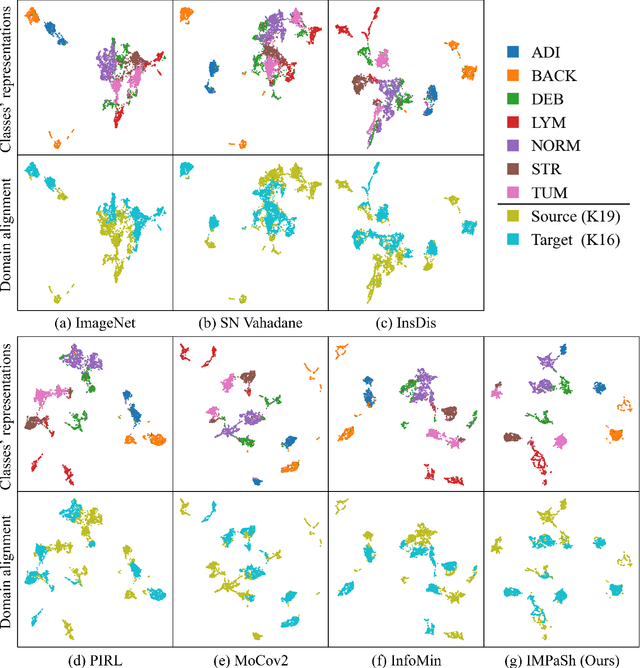

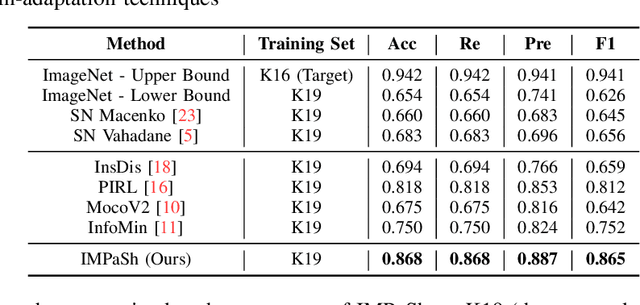

IMPaSh: A Novel Domain-shift Resistant Representation for Colorectal Cancer Tissue Classification

Aug 23, 2022

Abstract:The appearance of histopathology images depends on tissue type, staining and digitization procedure. These vary from source to source and are potential causes of domain-shift problems. Owing to this problem, despite the great success of deep learning models in computational pathology, a model trained on a specific domain may still perform sub-optimally when applied to another domain. To overcome this, we propose a new augmentation called PatchShuffling and a novel self-supervised contrastive learning framework named IMPaSh for pre-training deep learning models. Using these, we obtained a ResNet50 encoder that can extract image representations resistant to domain-shift. We compared our derived representation against those acquired based on other domain-generalization techniques by using them for the cross-domain classification of colorectal tissue images. We show that the proposed method outperforms other traditional histology domain-adaptation and state-of-the-art self-supervised learning methods. Code is available at: https://github.com/trinhvg/IMPash .

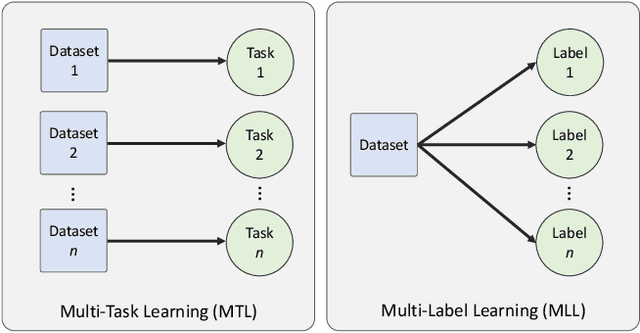

One Model is All You Need: Multi-Task Learning Enables Simultaneous Histology Image Segmentation and Classification

Feb 28, 2022

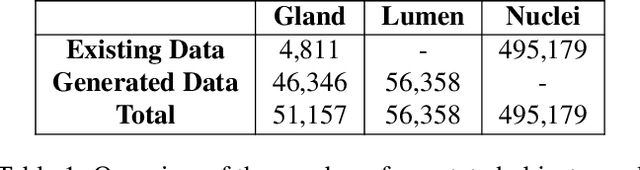

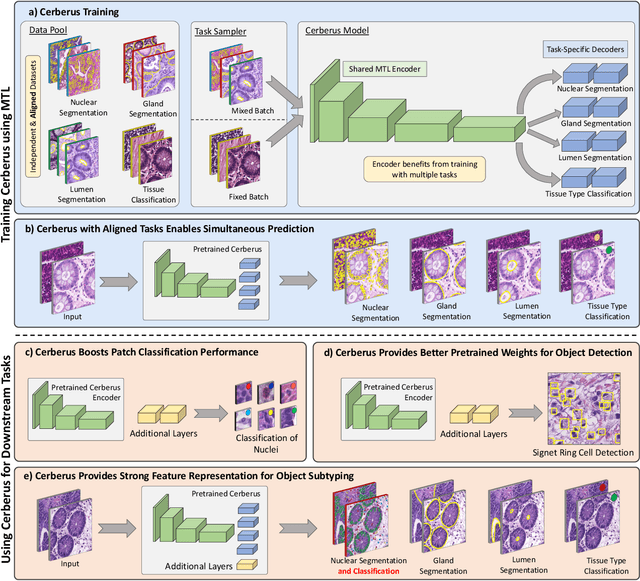

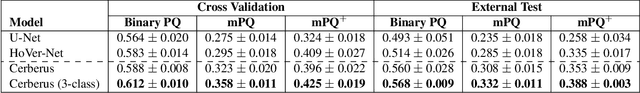

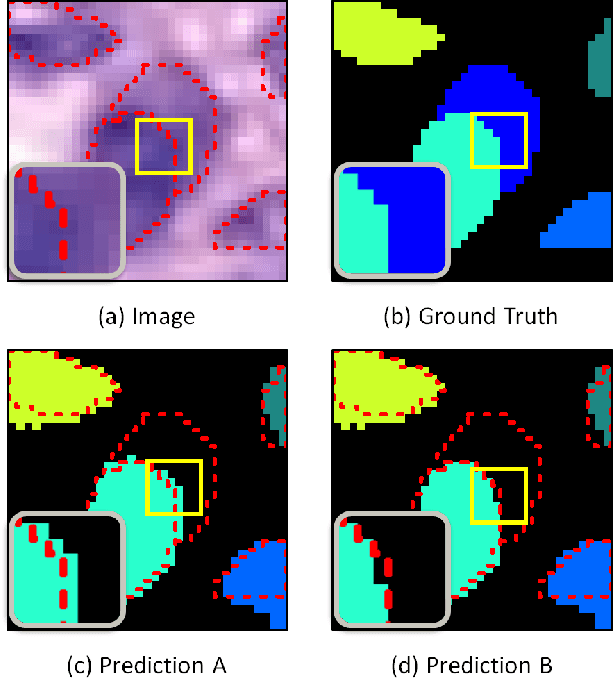

Abstract:The recent surge in performance for image analysis of digitised pathology slides can largely be attributed to the advance of deep learning. Deep models can be used to initially localise various structures in the tissue and hence facilitate the extraction of interpretable features for biomarker discovery. However, these models are typically trained for a single task and therefore scale poorly as we wish to adapt the model for an increasing number of different tasks. Also, supervised deep learning models are very data hungry and therefore rely on large amounts of training data to perform well. In this paper we present a multi-task learning approach for segmentation and classification of nuclei, glands, lumen and different tissue regions that leverages data from multiple independent data sources. While ensuring that our tasks are aligned by the same tissue type and resolution, we enable simultaneous prediction with a single network. As a result of feature sharing, we also show that the learned representation can be used to improve downstream tasks, including nuclear classification and signet ring cell detection. As part of this work, we use a large dataset consisting of over 600K objects for segmentation and 440K patches for classification and make the data publicly available. We use our approach to process the colorectal subset of TCGA, consisting of 599 whole-slide images, to localise 377 million, 900K and 2.1 million nuclei, glands and lumen respectively. We make this resource available to remove a major barrier in the development of explainable models for computational pathology.

Handcrafted Histological Transformer (H2T): Unsupervised Representation of Whole Slide Images

Feb 14, 2022

Abstract:Diagnostic, prognostic and therapeutic decision-making of cancer in pathology clinics can now be carried out based on analysis of multi-gigapixel tissue images, also known as whole-slide images (WSIs). Recently, deep convolutional neural networks (CNNs) have been proposed to derive unsupervised WSI representations; these are attractive as they rely less on expert annotation, which is cumbersome. However, a major trade-off is that higher predictive power generally comes at the cost of interpretability, posing a challenge to their clinical use where transparency in decision-making is generally expected. To address this challenge, we present a handcrafted framework based on deep CNN for constructing holistic WSI-level representations. Building on recent findings about the internal working of the Transformer in the domain of natural language processing, we break down its processes and handcraft them into a more transparent framework that we term the Handcrafted Histological Transformer or H2T. Based on our experiments involving various datasets consisting of a total of 5,306 WSIs, the results demonstrate that H2T-based holistic WSI-level representations offer competitive performance compared to recent state-of-the-art methods and can be readily utilized for various downstream analysis tasks. Finally, our results demonstrate that the H2T framework can be up to 14 times faster than the Transformer models.

CoNIC: Colon Nuclei Identification and Counting Challenge 2022

Nov 29, 2021

Abstract:Nuclear segmentation, classification and quantification within Haematoxylin & Eosin stained histology images enable the extraction of interpretable cell-based features that can be used in downstream explainable models in computational pathology (CPath). However, automatic recognition of different nuclei is faced with a major challenge in that there are several different types of nuclei, some of them exhibiting large intra-class variability. To help drive forward research and innovation for automatic nuclei recognition in CPath, we organise the Colon Nuclei Identification and Counting (CoNIC) Challenge. The challenge encourages researchers to develop algorithms that perform segmentation, classification and counting of nuclei within the current largest known publicly available nuclei-level dataset in CPath, containing around half a million labelled nuclei. Therefore, the CoNIC challenge utilises over 10 times the number of nuclei as the previous largest challenge dataset for nuclei recognition. It is important for algorithms to be robust to input variation if we wish to deploy them in a clinical setting. Therefore, as part of this challenge we will also test the sensitivity of each submitted algorithm to certain input variations.

XY Network for Nuclear Segmentation in Multi-Tissue Histology Images

Dec 16, 2018

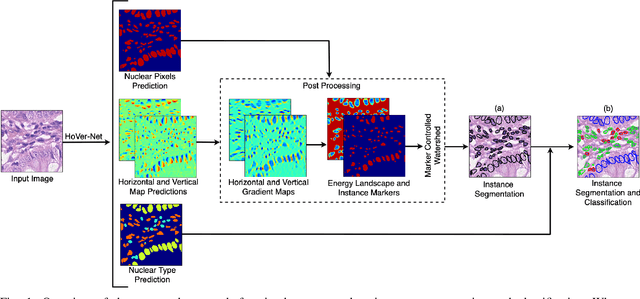

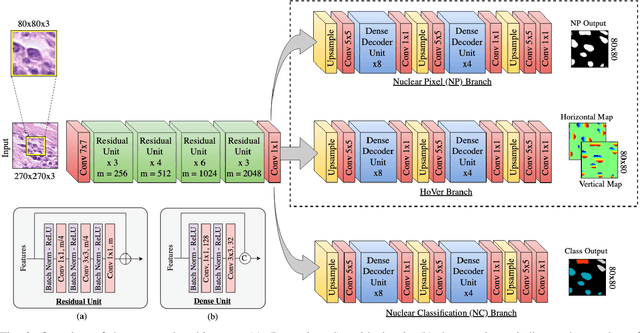

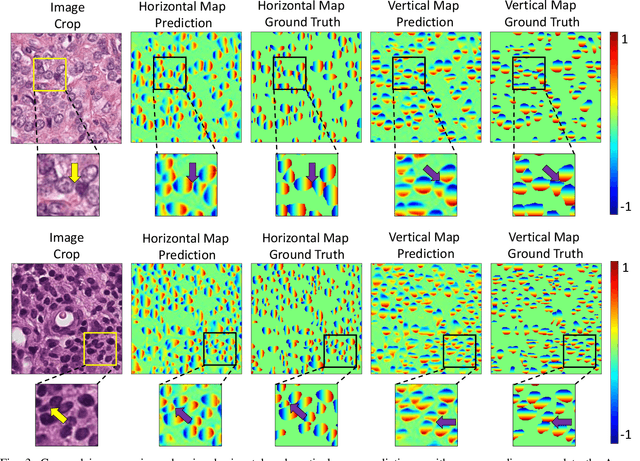

Abstract:Nuclear segmentation within Haematoxylin & Eosin stained histology images is a fundamental prerequisite in the digital pathology workflow, because nuclear features can act as key diagnostic markers. The development of automated methods for nuclear segmentation enables the quantitative analysis of tens of thousands of nuclei within a whole-slide pathology image, opening up possibilities of further analysis of large-scale nuclear morphometry. However, automated nuclear segmentation is faced with a major challenge in that there are several different types of nuclei, some of them exhibiting large intra-class variability such as the tumour cells. Additionally, some of the nuclei are often clustered together. To address these challenges, we present a novel convolutional neural network for automated nuclear segmentation that leverages the instance-rich information encoded within the vertical and horizontal distances of nuclear pixels to their centres of mass. These distances are then utilised to separate clustered nuclei, resulting in an accurate segmentation, particularly in areas with overlapping instances. We demonstrate state-of-the-art performance compared to other methods on four independent multi-tissue histology image datasets. Furthermore, we propose an interpretable and reliable evaluation framework that effectively quantifies nuclear segmentation performance and overcomes the limitations of existing performance measures.
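The horizontal and vertical distance idea in the abstract above can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `hv_distance_maps`, the labelled instance map used as input, and the per-nucleus scaling to [-1, 1] are all assumptions made for illustration. It only shows how such distance maps could be derived from labelled nuclei; the network that predicts them and the step that separates clustered nuclei are not shown.

```python
import numpy as np


def hv_distance_maps(inst_map):
    """Build horizontal and vertical distance maps from a labelled instance map.

    inst_map: 2D integer array, 0 = background, k > 0 = pixels of nucleus k.
    Returns (h_map, v_map): each nuclear pixel holds its signed horizontal or
    vertical offset from the centre of mass of its own nucleus.
    Assumption: offsets are scaled to [-1, 1] per nucleus.
    """
    inst_map = np.asarray(inst_map)
    h_map = np.zeros(inst_map.shape, dtype=np.float32)
    v_map = np.zeros(inst_map.shape, dtype=np.float32)

    for inst_id in np.unique(inst_map):
        if inst_id == 0:  # skip background
            continue
        rows, cols = np.nonzero(inst_map == inst_id)
        # Signed offsets of every pixel from the nucleus centre of mass.
        dx = cols - cols.mean()
        dy = rows - rows.mean()
        # Scale each nucleus independently (guarding against zero extent).
        max_dx = np.abs(dx).max()
        max_dy = np.abs(dy).max()
        if max_dx > 0:
            dx = dx / max_dx
        if max_dy > 0:
            dy = dy / max_dy
        h_map[rows, cols] = dx
        v_map[rows, cols] = dy

    return h_map, v_map


# Two touching nuclei: label 1 occupies columns 0-2, label 2 columns 3-4.
example = np.array([[1, 1, 1, 2, 2],
                    [1, 1, 1, 2, 2]])
h_map, v_map = hv_distance_maps(example)
print(h_map)
print(v_map)
```

In this toy example the horizontal map changes sign abruptly where the two touching nuclei meet, which is the kind of cue that can be used to split clustered instances.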

Methods for Segmentation and Classification of Digital Microscopy Tissue Images

Oct 31, 2018

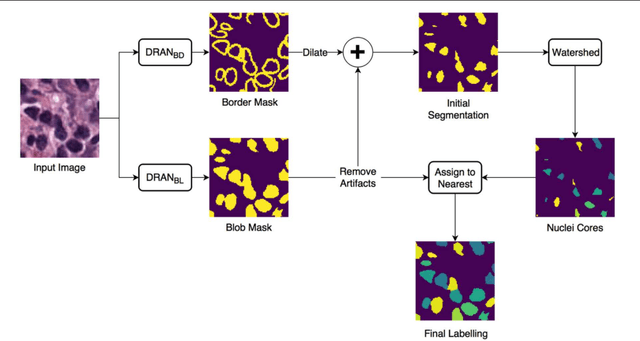

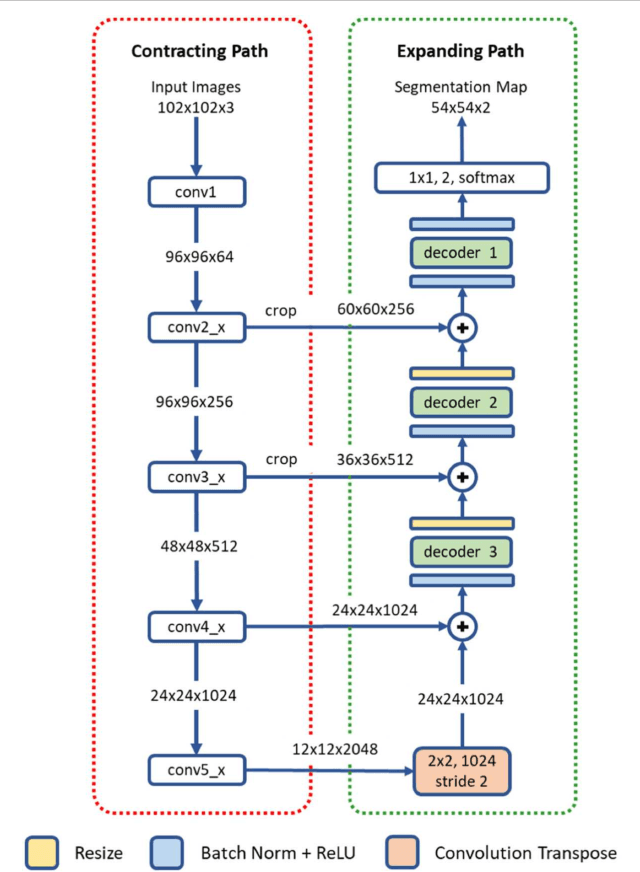

Abstract:High-resolution microscopy images of tissue specimens provide detailed information about the morphology of normal and diseased tissue. Image analysis of tissue morphology can help cancer researchers develop a better understanding of cancer biology. Segmentation of nuclei and classification of tissue images are two common tasks in tissue image analysis. Development of accurate and efficient algorithms for these tasks is a challenging problem because of the complexity of tissue morphology and tumor heterogeneity. In this paper we present two computer algorithms: one designed for segmentation of nuclei and the other for classification of whole slide tissue images. The segmentation algorithm implements a multiscale deep residual aggregation network to accurately segment nuclear material and then separate clumped nuclei into individual nuclei. The classification algorithm initially carries out patch-level classification via a deep learning method, then patch-level statistical and morphological features are used as input to a random forest regression model for whole slide image classification. The segmentation and classification algorithms were evaluated in the MICCAI 2017 Digital Pathology challenge. The segmentation algorithm achieved an accuracy score of 0.78. The classification algorithm achieved an accuracy score of 0.81.
