Qitong Wang

A Lightweight Sparse Interaction Network for Time Series Forecasting

Feb 02, 2026

Abstract:Recent work shows that linear models can outperform several transformer models in long-term time-series forecasting (TSF). However, instead of explicitly performing temporal interaction through self-attention, linear models perform it implicitly through stacked MLP structures, which may be insufficient for capturing complex temporal dependencies, so their performance still has room for improvement. To this end, we propose a Lightweight Sparse Interaction Network (LSINet) for the TSF task. Inspired by the sparsity of self-attention, we propose a Multihead Sparse Interaction Mechanism (MSIM). Different from self-attention, MSIM learns the important connections between time steps through a sparsity-induced Bernoulli distribution to capture temporal dependencies for TSF. The sparsity is ensured by the proposed self-adaptive regularization loss. Moreover, we observe the shareability of temporal interactions and propose to perform Shared Interaction Learning (SIL) for MSIM to further enhance efficiency and improve convergence. LSINet is a linear model comprising only MLP structures with low overhead and equipped with explicit temporal interaction mechanisms. Extensive experiments on public datasets show that LSINet achieves both higher accuracy and better efficiency than advanced linear models and transformer models in TSF tasks. The code is available at https://github.com/Meteor-Stars/LSINet.
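The idea of learning connections between time steps through a Bernoulli distribution can be illustrated with a minimal NumPy sketch. This is not the LSINet implementation: the function name `sparse_interaction`, the sigmoid parameterization of the connection probabilities, the row normalization, and the plain mean-probability penalty (standing in for the paper's self-adaptive regularization loss) are all assumptions made for illustration.

```python
import numpy as np

def sparse_interaction(x, logits, rng):
    """Mix time steps through a sampled binary connection mask (illustrative only).

    x:      (num_steps, dim) features, one row per time step.
    logits: (num_steps, num_steps) learnable scores; sigmoid(logits[i, j]) is
            taken here as the probability that step i connects to step j.
    """
    probs = 1.0 / (1.0 + np.exp(-logits))
    # Sample one Bernoulli variable per pair of time steps.
    mask = (rng.random(probs.shape) < probs).astype(float)
    # Row-normalise so each step averages over the steps it is connected to;
    # rows with no connections stay all-zero.
    row_sums = mask.sum(axis=1, keepdims=True)
    weights = np.divide(mask, row_sums, out=np.zeros_like(mask), where=row_sums > 0)
    mixed = weights @ x
    # Assumed stand-in for a sparsity regulariser: the mean connection probability.
    sparsity_penalty = probs.mean()
    return mixed, mask, sparsity_penalty

rng = np.random.default_rng(0)
x = np.arange(8, dtype=float).reshape(4, 2)
# Strongly favour self-connections only, so the sampled mask is the identity.
logits = np.full((4, 4), -50.0) + 100.0 * np.eye(4)
mixed, mask, penalty = sparse_interaction(x, logits, rng)
```

In a trainable model the sampling step would need a differentiable relaxation or a gradient estimator; the sketch only shows the forward computation.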

Multi-period Learning for Financial Time Series Forecasting

Nov 07, 2025

Abstract:Time series forecasting is important in the finance domain. Financial time series (TS) patterns are influenced by both short-term public opinions and medium-/long-term policy and market trends. Hence, processing multi-period inputs becomes crucial for accurate financial time series forecasting (TSF). However, current TSF models either use only single-period input or lack customized designs for addressing multi-period characteristics. In this paper, we propose a Multi-period Learning Framework (MLF) to enhance financial TSF performance. MLF considers both the accuracy and the efficiency requirements of TSF. Specifically, we design three new modules to better integrate the multi-period inputs for improving accuracy: (i) Inter-period Redundancy Filtering (IRF), which removes the information redundancy between periods for accurate self-attention modeling, (ii) Learnable Weighted-average Integration (LWI), which effectively integrates multi-period forecasts, and (iii) Multi-period self-Adaptive Patching (MAP), which mitigates the bias towards certain periods by setting the same number of patches across all periods. Furthermore, we propose a Patch Squeeze module to reduce the number of patches in self-attention modeling for maximized efficiency. MLF incorporates multiple inputs with varying lengths (periods) to achieve better accuracy and reduces the cost of selecting input lengths during training. The code and datasets are available at https://github.com/Meteor-Stars/MLF.
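As a rough illustration of integrating multi-period forecasts by a learnable weighted average, the sketch below combines one forecast per input period using softmax-normalized weights. The softmax parameterization and the name `weighted_average_forecast` are assumptions for illustration; the abstract does not specify how LWI parameterizes its weights.

```python
import numpy as np

def weighted_average_forecast(forecasts, logits):
    """Combine per-period forecasts with softmax-normalised weights.

    forecasts: (num_periods, horizon) array, one forecast per input period.
    logits:    (num_periods,) learnable scores (assumed parameterisation).
    """
    forecasts = np.asarray(forecasts, dtype=float)
    logits = np.asarray(logits, dtype=float)
    # Numerically stable softmax so the weights are positive and sum to one.
    weights = np.exp(logits - logits.max())
    weights /= weights.sum()
    return weights @ forecasts

# Three hypothetical forecasts (short, medium, long input period), horizon 2.
forecasts = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
combined = weighted_average_forecast(forecasts, logits=[0.0, 0.0, 0.0])
```

With equal logits the result is the plain mean of the forecasts; training would move the logits so that more reliable periods receive larger weights.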

Multi-Sense Embeddings for Language Models and Knowledge Distillation

Apr 08, 2025

Abstract:Transformer-based large language models (LLMs) rely on contextual embeddings, which generate different (continuous) representations for the same token depending on its surrounding context. Nonetheless, words and tokens typically have a limited number of senses (or meanings). We propose multi-sense embeddings as a drop-in replacement for each token in order to capture the range of its uses in a language. To construct a sense embedding dictionary, we apply a clustering algorithm to embeddings generated by an LLM and consider the cluster centers as representative sense embeddings. In addition, we propose a novel knowledge distillation method that leverages the sense dictionary to learn a smaller student model that mimics the senses from the much larger base LLM, offering significant space and inference time savings while maintaining competitive performance. Via thorough experiments on various benchmarks, we showcase the effectiveness of our sense embeddings and knowledge distillation approach. We share our code at https://github.com/Qitong-Wang/SenseDict
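The sense-dictionary construction described above (cluster a token's contextual embeddings, keep the cluster centers) can be sketched as follows. The abstract does not name the clustering algorithm, so plain k-means is assumed here, and the function names `build_sense_dictionary` and `nearest_sense` are hypothetical.

```python
import numpy as np

def build_sense_dictionary(embeddings, num_senses, num_iters=20, seed=0):
    """Cluster one token's contextual embeddings and return the cluster centers.

    Uses plain k-means (Lloyd's algorithm) as an assumed clustering method.
    """
    rng = np.random.default_rng(seed)
    embeddings = np.asarray(embeddings, dtype=float)
    # Initialise the centers from distinct observed embeddings.
    idx = rng.choice(len(embeddings), size=num_senses, replace=False)
    centers = embeddings[idx].copy()
    for _ in range(num_iters):
        # Assign every embedding to its closest center.
        dists = np.linalg.norm(embeddings[:, None, :] - centers[None, :, :], axis=-1)
        labels = dists.argmin(axis=1)
        # Move each non-empty center to the mean of its members.
        for k in range(num_senses):
            members = embeddings[labels == k]
            if len(members) > 0:
                centers[k] = members.mean(axis=0)
    return centers

def nearest_sense(embedding, centers):
    """Return the index of the sense embedding closest to a contextual embedding."""
    return int(np.linalg.norm(centers - np.asarray(embedding, dtype=float), axis=1).argmin())

# Toy contextual embeddings of one token used in two clearly different senses.
sense_a = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1]]
sense_b = [[10.0, 10.0], [10.1, 10.0], [10.0, 10.1]]
centers = build_sense_dictionary(sense_a + sense_b, num_senses=2)
```

At lookup time, a contextual embedding would be replaced by `centers[nearest_sense(embedding, centers)]`, which is what makes the dictionary a drop-in substitute in this sketch.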

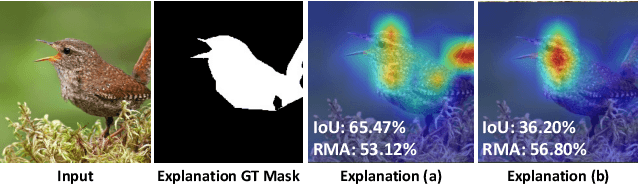

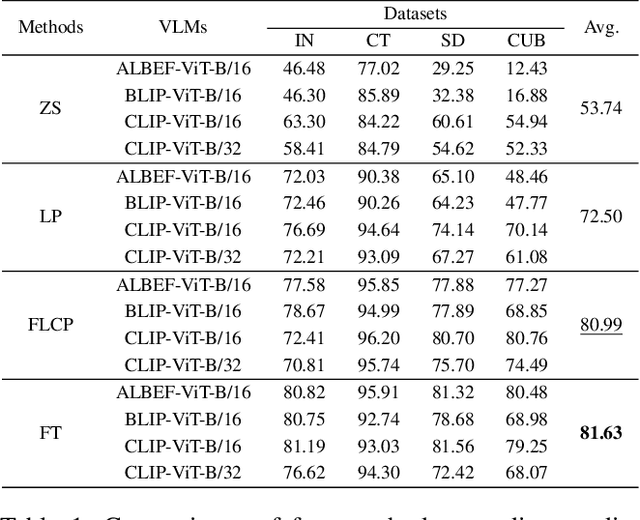

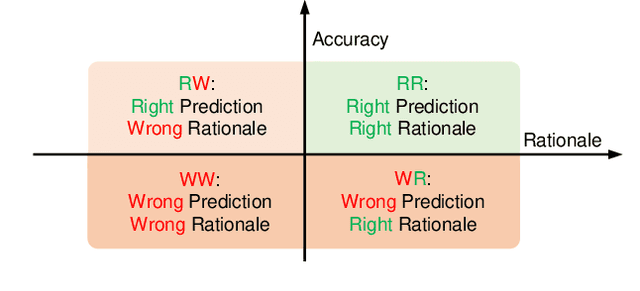

Beyond Accuracy: On the Effects of Fine-tuning Towards Vision-Language Model's Prediction Rationality

Dec 17, 2024

Abstract:Vision-Language Models (VLMs), such as CLIP, have already seen widespread applications. Researchers actively engage in further fine-tuning VLMs in safety-critical domains. In these domains, prediction rationality is crucial: the prediction should be correct and based on valid evidence. Yet, for VLMs, the impact of fine-tuning on prediction rationality is seldom investigated. To study this problem, we proposed two new metrics called Prediction Trustworthiness and Inference Reliability. We conducted extensive experiments on various settings and observed some interesting phenomena. On the one hand, we found that the well-adopted fine-tuning methods led to more correct predictions based on invalid evidence. This potentially undermines the trustworthiness of correct predictions from fine-tuned VLMs. On the other hand, having identified valid evidence of target objects, fine-tuned VLMs were more likely to make correct predictions. Moreover, the findings are also consistent under distributional shifts and across various experimental settings. We hope our research offers fresh insights into VLM fine-tuning.

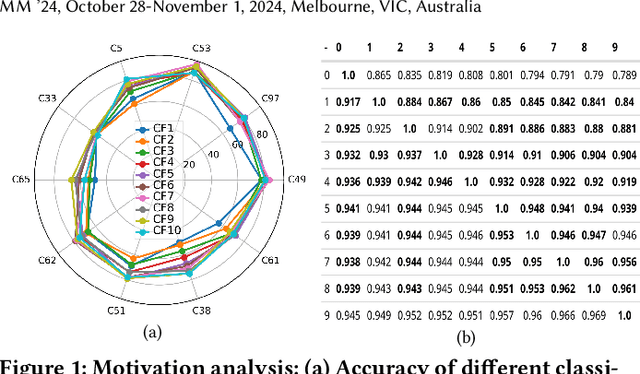

Enhancing Adaptive Deep Networks for Image Classification via Uncertainty-aware Decision Fusion

Aug 25, 2024

Abstract:Handling varying computational resources is a critical issue in modern AI applications. Adaptive deep networks, featuring the dynamic employment of multiple classifier heads among different layers, have been proposed to address classification tasks under varying computing resources. Existing approaches typically utilize the last classifier supported by the available resources for inference, as they assume that the last classifier always performs better across all classes. However, our findings indicate that earlier classifier heads can outperform the last head for certain classes. Based on this observation, we introduce the Collaborative Decision Making (CDM) module, which fuses the multiple classifier heads to enhance the inference performance of adaptive deep networks. CDM incorporates an uncertainty-aware fusion method based on evidential deep learning (EDL), which utilizes the reliability (uncertainty values) from the first c-1 classifiers to improve the c-th classifier's accuracy. We also design a balance term that reduces fusion saturation and unfairness issues caused by EDL constraints to improve the fusion quality of CDM. Finally, a regularized training strategy that uses the last classifier to guide the learning process of early classifiers is proposed to further enhance the CDM module's effect, called the Guided Collaborative Decision Making (GCDM) framework. The experimental evaluation demonstrates the effectiveness of our approaches. Results on ImageNet datasets show that CDM and GCDM obtain 0.4% to 2.8% accuracy improvement (under varying computing resources) on popular adaptive networks. The code is available at https://github.com/Meteor-Stars/GCDM_AdaptiveNet.
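For readers unfamiliar with the uncertainty values mentioned above, the sketch below shows how a single classifier head's uncertainty is commonly computed in evidential deep learning: non-negative evidence is turned into Dirichlet parameters, and the uncertainty mass shrinks as total evidence grows. This covers only the standard per-head quantities; it is not the CDM fusion rule or balance term, and the function name `edl_uncertainty` is hypothetical.

```python
import numpy as np

def edl_uncertainty(evidence):
    """Belief masses and uncertainty for one classifier head under standard EDL.

    evidence: (num_classes,) non-negative evidence values for one sample.
    """
    evidence = np.asarray(evidence, dtype=float)
    alpha = evidence + 1.0                   # Dirichlet parameters
    strength = alpha.sum()                   # Dirichlet strength S
    belief = evidence / strength             # per-class belief mass
    uncertainty = len(evidence) / strength   # u = K / S
    return belief, uncertainty

# A head with no evidence is maximally uncertain; strong evidence lowers u.
_, u_none = edl_uncertainty([0.0, 0.0, 0.0])
belief, u_strong = edl_uncertainty([9.0, 0.0, 0.0])
```

The belief masses and the uncertainty always sum to one, which is what lets the uncertainty act as a reliability score for each head.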

Learning from Semantic Alignment between Unpaired Multiviews for Egocentric Video Recognition

Aug 23, 2023

Abstract:We are concerned with a challenging scenario in unpaired multiview video learning. In this case, the model aims to learn comprehensive multiview representations while the cross-view semantic information exhibits variations. We propose Semantics-based Unpaired Multiview Learning (SUM-L) to tackle this unpaired multiview learning problem. The key idea is to build cross-view pseudo-pairs and perform view-invariant alignment by leveraging the semantic information of videos. To improve the data efficiency of multiview learning, we further perform video-text alignment for first-person and third-person videos, to fully leverage the semantic knowledge to improve video representations. Extensive experiments on multiple benchmark datasets verify the effectiveness of our framework. Our method also outperforms multiple existing view-alignment methods under a more challenging scenario than typical paired or unpaired multimodal or multiview learning. Our code is available at https://github.com/wqtwjt1996/SUM-L.

A Hierarchical Transformer Encoder to Improve Entire Neoplasm Segmentation on Whole Slide Image of Hepatocellular Carcinoma

Jul 11, 2023

Abstract:In digital histopathology, entire neoplasm segmentation on Whole Slide Images (WSI) of Hepatocellular Carcinoma (HCC) plays an important role, especially as a preprocessing filter to automatically exclude healthy tissue, in histological molecular correlation mining and other downstream histopathological tasks. The segmentation task remains challenging due to HCC's inherently high heterogeneity and the lack of dependency learning over a large field of view. In this article, we propose a novel deep learning architecture with a hierarchical Transformer encoder, HiTrans, to learn the global dependencies within expanded 4096$\times$4096 WSI patches. HiTrans is designed to encode and decode the patches with larger receptive fields and the learned global dependencies, compared to state-of-the-art Fully Convolutional Neural Networks (FCNN). Empirical evaluations verified that HiTrans leads to better segmentation performance by taking into account regional and global dependency information.

Learning Representational Invariances for Data-Efficient Action Recognition

Mar 30, 2021

Abstract:Data augmentation is a ubiquitous technique for improving image classification when labeled data is scarce. Constraining the model predictions to be invariant to diverse data augmentations effectively injects the desired representational invariances to the model (e.g., invariance to photometric variations), leading to improved accuracy. Compared to image data, the appearance variations in videos are far more complex due to the additional temporal dimension. Yet, data augmentation methods for videos remain under-explored. In this paper, we investigate various data augmentation strategies that capture different video invariances, including photometric, geometric, temporal, and actor/scene augmentations. When integrated with existing consistency-based semi-supervised learning frameworks, we show that our data augmentation strategy leads to promising performance on the Kinetics-100, UCF-101, and HMDB-51 datasets in the low-label regime. We also validate our data augmentation strategy in the fully supervised setting and demonstrate improved performance.

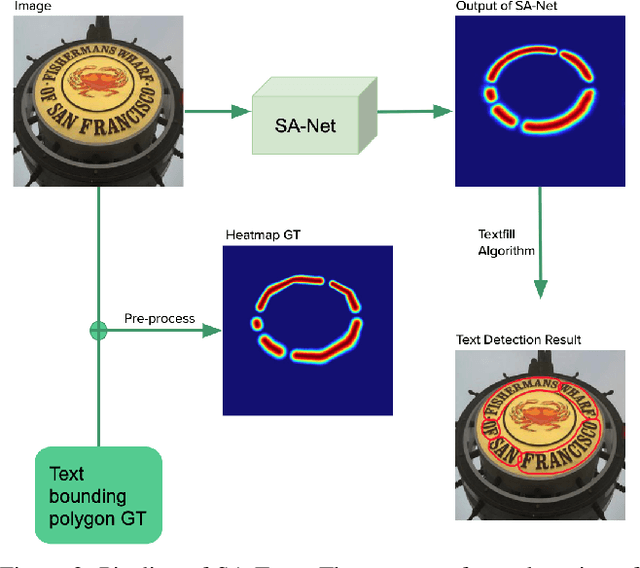

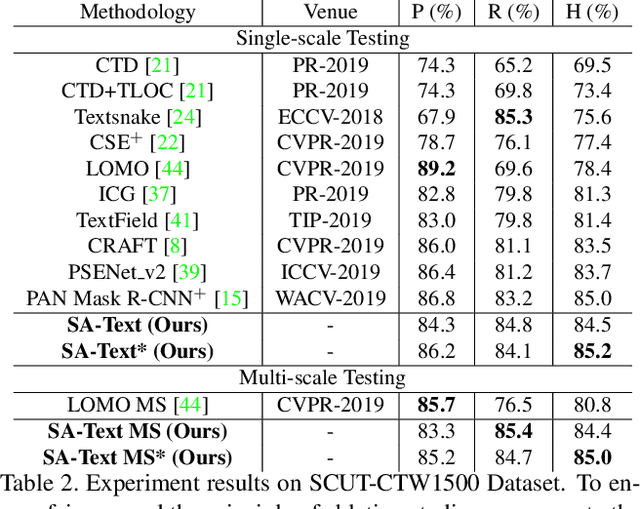

SA-Text: Simple but Accurate Detector for Text of Arbitrary Shapes

Dec 03, 2019

Abstract:We introduce a new framework for text detection named SA-Text, meaning "Simple but Accurate," which utilizes heatmaps to detect text regions in natural scene images effectively. SA-Text detects text that occurs in various fonts, shapes, and orientations in natural scene images with complicated backgrounds. Experiments on three challenging public scene-text-detection datasets, Total-Text, SCUT-CTW1500, and MSRA-TD500, show the effectiveness and generalization ability of SA-Text in detecting not only oriented straight text but also curved text in scripts of multiple languages. To further show the practicality of SA-Text, we combine it with a powerful state-of-the-art text recognition model and thus propose a pipeline-based text spotting system called SAA ("text spotting" is used as the technical term for "detection and recognition of text"). Our experimental results on the Total-Text dataset show that SAA outperforms four state-of-the-art text spotting frameworks by at least 9 percentage points in the F-measure, which means that SA-Text can be used as part of a complete text detection and recognition system in real applications.

Deep Neural Network for Semantic-based Text Recognition in Images

Aug 15, 2019

Abstract:State-of-the-art text spotting systems typically aim to detect isolated words or word-by-word text in images of natural scenes and ignore the semantic coherence within a region of text. However, when interpreted together, seemingly isolated words may be easier to recognize. On this basis, we propose a novel "semantic-based text recognition" (STR) deep learning model that reads text in images with the help of understanding context. STR consists of several modules. We introduce the Text Grouping and Arranging (TGA) algorithm to connect and order isolated text regions. A text-recognition network interprets isolated words. Benefiting from semantic information, a sequence-to-sequence network model efficiently corrects inaccurate and uncertain phrases produced earlier in the STR pipeline. We present experiments on two new distinct datasets that contain scanned catalog images of interior designs and photographs of protesters with hand-written signs, respectively. Our results show that our STR model outperforms a baseline method that uses state-of-the-art single-word-recognition techniques on both datasets. STR yields a high accuracy rate of 90% on the catalog images and 71% on the more difficult protest images, suggesting its generality in recognizing text.
