Vasileios Kalantzis

Multi-Sense Embeddings for Language Models and Knowledge Distillation

Apr 08, 2025Abstract:Transformer-based large language models (LLMs) rely on contextual embeddings which generate different (continuous) representations for the same token depending on its surrounding context. Nonetheless, words and tokens typically have a limited number of senses (or meanings). We propose multi-sense embeddings as a drop-in replacement for each token in order to capture the range of their uses in a language. To construct a sense embedding dictionary, we apply a clustering algorithm to embeddings generated by an LLM and consider the cluster centers as representative sense embeddings. In addition, we propose a novel knowledge distillation method that leverages the sense dictionary to learn a smaller student model that mimics the senses from the much larger base LLM model, offering significant space and inference time savings, while maintaining competitive performance. Via thorough experiments on various benchmarks, we showcase the effectiveness of our sense embeddings and knowledge distillation approach. We share our code at https://github.com/Qitong-Wang/SenseDict

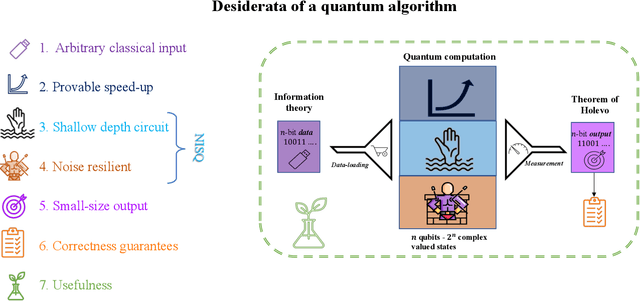

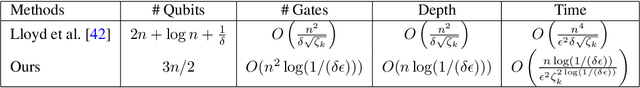

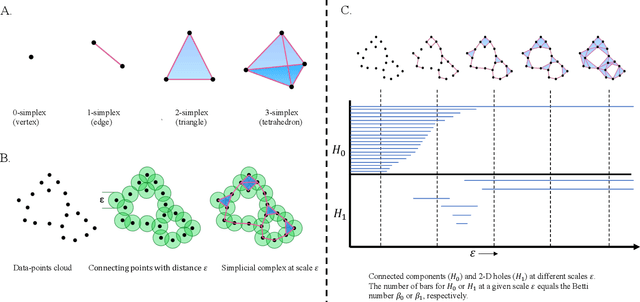

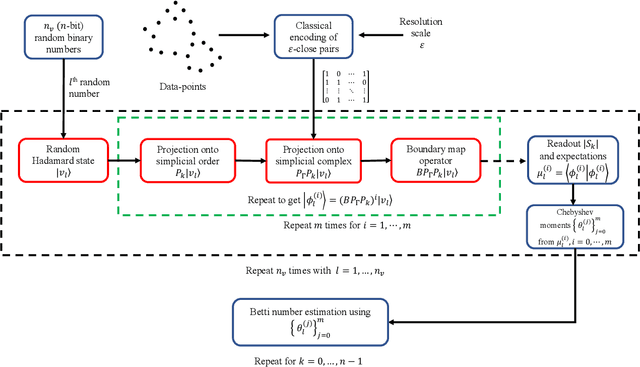

Towards Quantum Advantage on Noisy Quantum Computers

Sep 27, 2022

Abstract:Topological data analysis (TDA) is a powerful technique for extracting complex and valuable shape-related summaries of high-dimensional data. However, the computational demands of classical TDA algorithms are exorbitant, and quickly become impractical for high-order characteristics. Quantum computing promises exponential speedup for certain problems. Yet, many existing quantum algorithms with notable asymptotic speedups require a degree of fault tolerance that is currently unavailable. In this paper, we present NISQ-TDA, the first fully implemented end-to-end quantum machine learning algorithm needing only a linear circuit-depth, that is applicable to non-handcrafted high-dimensional classical data, with potential speedup under stringent conditions. The algorithm neither suffers from the data-loading problem nor does it need to store the input data on the quantum computer explicitly. Our approach includes three key innovations: (a) an efficient realization of the full boundary operator as a sum of Pauli operators; (b) a quantum rejection sampling and projection approach to restrict a uniform superposition to the simplices of the desired order in the complex; and (c) a stochastic rank estimation method to estimate the topological features in the form of approximate Betti numbers. We present theoretical results that establish additive error guarantees for NISQ-TDA, and the circuit and computational time and depth complexities for exponentially scaled output estimates, up to the error tolerance. The algorithm was successfully executed on quantum computing devices, as well as on noisy quantum simulators, applied to small datasets. Preliminary empirical results suggest that the algorithm is robust to noise.

Directed Graph Auto-Encoders

Feb 25, 2022

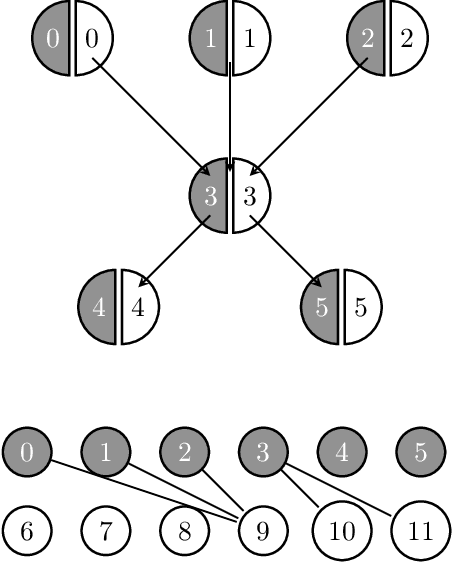

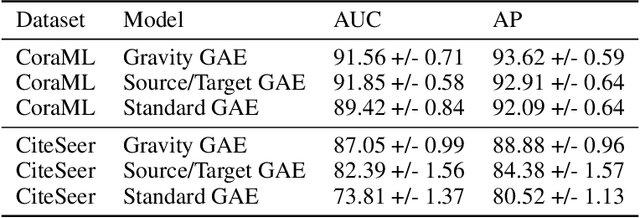

Abstract:We introduce a new class of auto-encoders for directed graphs, motivated by a direct extension of the Weisfeiler-Leman algorithm to pairs of node labels. The proposed model learns pairs of interpretable latent representations for the nodes of directed graphs, and uses parameterized graph convolutional network (GCN) layers for its encoder and an asymmetric inner product decoder. Parameters in the encoder control the weighting of representations exchanged between neighboring nodes. We demonstrate the ability of the proposed model to learn meaningful latent embeddings and achieve superior performance on the directed link prediction task on several popular network datasets.

PCENet: High Dimensional Surrogate Modeling for Learning Uncertainty

Feb 11, 2022

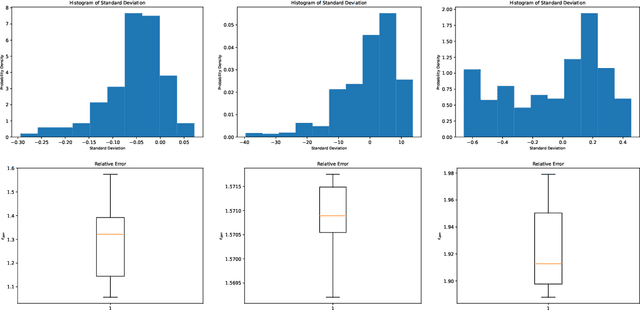

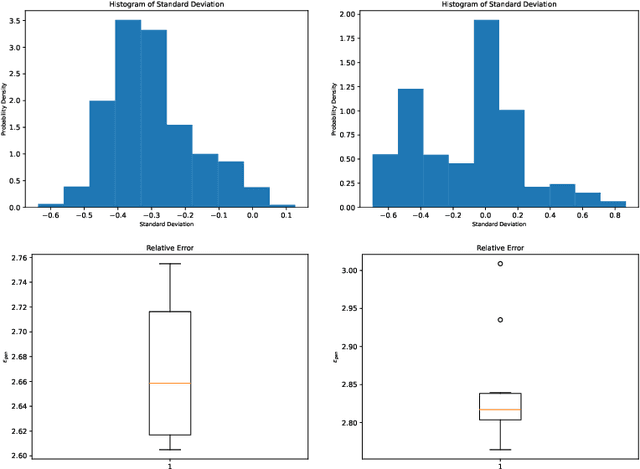

Abstract:Learning data representations under uncertainty is an important task that emerges in numerous machine learning applications. However, uncertainty quantification (UQ) techniques are computationally intensive and become prohibitively expensive for high-dimensional data. In this paper, we present a novel surrogate model for representation learning and uncertainty quantification, which aims to deal with data of moderate to high dimensions. The proposed model combines a neural network approach for dimensionality reduction of the (potentially high-dimensional) data, with a surrogate model method for learning the data distribution. We first employ a variational autoencoder (VAE) to learn a low-dimensional representation of the data distribution. We then propose to harness polynomial chaos expansion (PCE) formulation to map this distribution to the output target. The coefficients of PCE are learned from the distribution representation of the training data using a maximum mean discrepancy (MMD) approach. Our model enables us to (a) learn a representation of the data, (b) estimate uncertainty in the high-dimensional data system, and (c) match high order moments of the output distribution; without any prior statistical assumptions on the data. Numerical experimental results are presented to illustrate the performance of the proposed method.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge