Qingsong Yang

Flattery in Motion: Benchmarking and Analyzing Sycophancy in Video-LLMs

Jun 08, 2025

Abstract:As video large language models (Video-LLMs) become increasingly integrated into real-world applications that demand grounded multimodal reasoning, ensuring their factual consistency and reliability is of critical importance. However, sycophancy, the tendency of these models to align with user input even when it contradicts the visual evidence, undermines their trustworthiness in such contexts. Current sycophancy research has largely overlooked its specific manifestations in the video-language domain, resulting in a notable absence of systematic benchmarks and targeted evaluations to understand how Video-LLMs respond under misleading user input. To fill this gap, we propose VISE (Video-LLM Sycophancy Benchmarking and Evaluation), the first dedicated benchmark designed to evaluate sycophantic behavior in state-of-the-art Video-LLMs across diverse question formats, prompt biases, and visual reasoning tasks. Specifically, VISE is the first to bring linguistic perspectives on sycophancy into the visual domain, enabling fine-grained analysis across multiple sycophancy types and interaction patterns. In addition, we explore key-frame selection as an interpretable, training-free mitigation strategy, which reveals potential paths for reducing sycophantic bias by strengthening visual grounding.

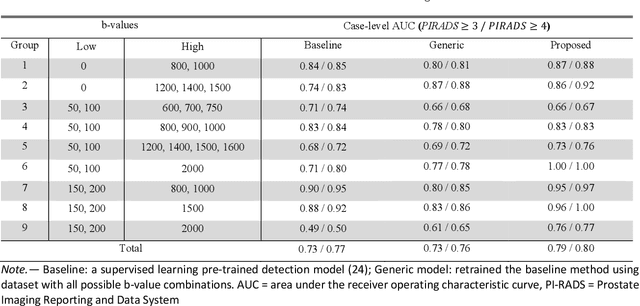

Deep Learning-based Unsupervised Domain Adaptation via a Unified Model for Prostate Lesion Detection Using Multisite Bi-parametric MRI Datasets

Aug 08, 2024

Abstract:Our hypothesis is that UDA using diffusion-weighted images, generated with a unified model, offers a promising and reliable strategy for enhancing the performance of supervised learning models in multi-site prostate lesion detection, especially when various b-values are present. This retrospective study included data from 5,150 patients (14,191 samples) collected across nine different imaging centers. A novel UDA method using a unified generative model was developed for multi-site PCa detection. This method translates diffusion-weighted imaging (DWI) acquisitions, including apparent diffusion coefficient (ADC) and individual DW images acquired using various b-values, to align with the style of images acquired using b-values recommended by Prostate Imaging Reporting and Data System (PI-RADS) guidelines. The generated ADC and DW images replace the original images for PCa detection. An independent set of 1,692 test cases (2,393 samples) was used for evaluation. The area under the receiver operating characteristic curve (AUC) was used as the primary metric, and statistical analysis was performed via bootstrapping. For all test cases, the AUC values for baseline SL and UDA methods were 0.73 and 0.79 (p<.001), respectively, for PI-RADS>=3, and 0.77 and 0.80 (p<.001) for PI-RADS>=4 PCa lesions. In the 361 test cases under the most unfavorable image acquisition setting, the AUC values for baseline SL and UDA were 0.49 and 0.76 (p<.001) for PI-RADS>=3, and 0.50 and 0.77 (p<.001) for PI-RADS>=4 PCa lesions. The results indicate the proposed UDA with generated images improved the performance of SL methods in multi-site PCa lesion detection across datasets with various b values, especially for images acquired with significant deviations from the PI-RADS recommended DWI protocol (e.g. with an extremely high b-value).
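The evaluation described above (AUC as the primary metric, with statistical analysis via bootstrapping) can be illustrated with a minimal sketch. The abstract does not specify the bootstrap procedure, so the paired case-level resampling below is an assumption, and the function names `auc` and `bootstrap_auc_difference` are hypothetical. The toy labels and scores are illustrative only, not data from the study.

```python
import random


def auc(labels, scores):
    """AUC as the probability that a random positive case outscores a
    random negative case, counting ties as one half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bootstrap_auc_difference(labels, scores_a, scores_b, n_boot=2000, seed=0):
    """Percentile interval for AUC(b) - AUC(a) from a paired bootstrap.

    Assumption: cases are resampled with replacement and both methods are
    scored on the same resample. The paper may use a different scheme.
    """
    rng = random.Random(seed)
    n = len(labels)
    diffs = []
    while len(diffs) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        y = [labels[i] for i in idx]
        if len(set(y)) < 2:
            continue  # skip resamples that lack one of the two classes
        a = auc(y, [scores_a[i] for i in idx])
        b = auc(y, [scores_b[i] for i in idx])
        diffs.append(b - a)
    diffs.sort()
    low = diffs[int(0.025 * n_boot)]
    high = diffs[int(0.975 * n_boot) - 1]
    return low, high


# Toy example with made-up scores for a baseline and an adapted model.
toy_labels = [0, 0, 0, 0, 1, 1, 1, 1]
toy_baseline = [0.2, 0.6, 0.5, 0.7, 0.4, 0.3, 0.8, 0.55]
toy_adapted = [0.1, 0.3, 0.2, 0.6, 0.5, 0.7, 0.9, 0.65]
interval = bootstrap_auc_difference(toy_labels, toy_baseline, toy_adapted)
```

If the resulting interval excludes zero, the difference between the two methods would be considered significant at roughly the 5% level under this scheme.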

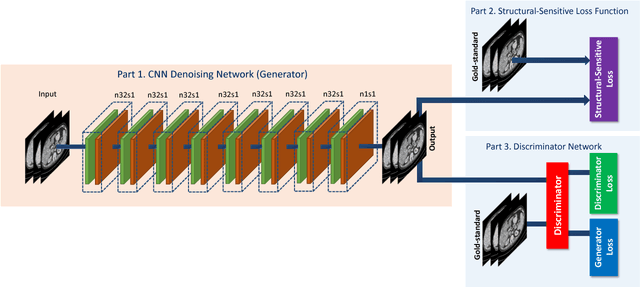

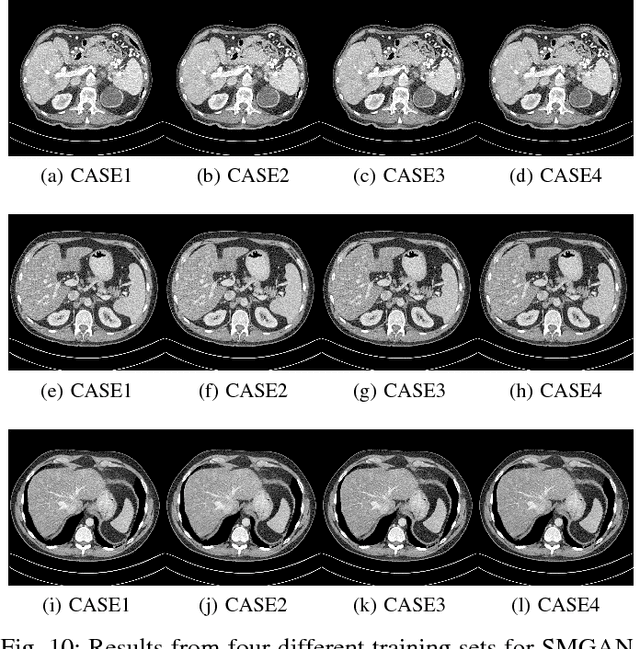

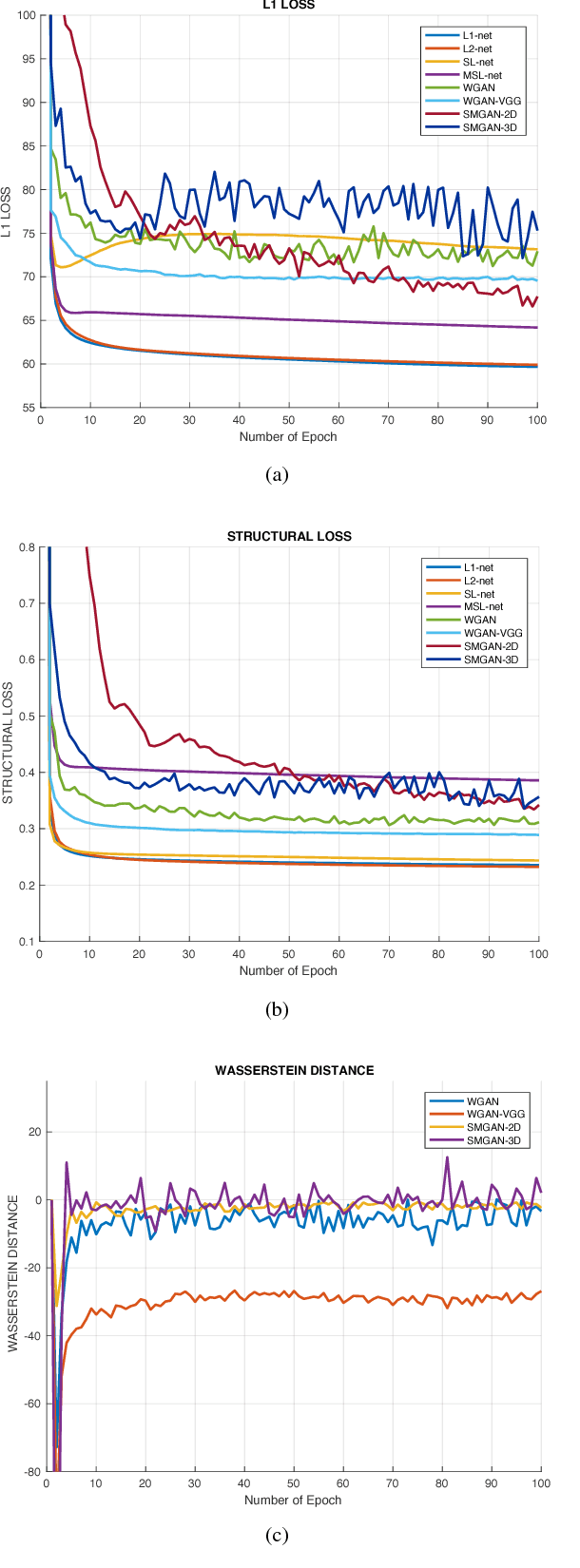

Structure-sensitive Multi-scale Deep Neural Network for Low-Dose CT Denoising

Aug 10, 2018

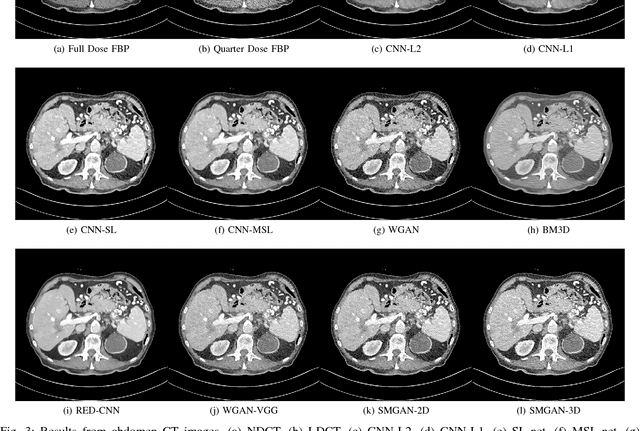

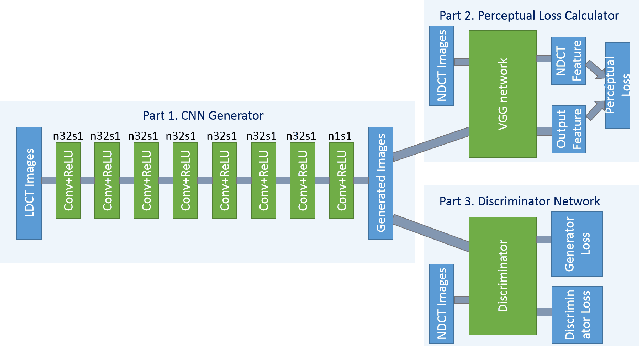

Abstract:Computed tomography (CT) is a popular medical imaging modality in clinical applications. At the same time, the x-ray radiation dose associated with CT scans raises public concerns due to its potential risks to the patients. Over the past years, major efforts have been dedicated to the development of Low-Dose CT (LDCT) methods. However, the radiation dose reduction compromises the signal-to-noise ratio (SNR), leading to strong noise and artifacts that degrade CT image quality. In this paper, we propose a novel 3D noise reduction method, called Structure-sensitive Multi-scale Generative Adversarial Net (SMGAN), to improve the LDCT image quality. Specifically, we incorporate three-dimensional (3D) volumetric information to improve the image quality. Also, different loss functions for training denoising models are investigated. Experiments show that the proposed method can effectively preserve structural and texture information from normal-dose CT (NDCT) images, and significantly suppress noise and artifacts. Qualitative visual assessments by three experienced radiologists demonstrate that the proposed method retrieves more detailed information, and outperforms competing methods.

3D Convolutional Encoder-Decoder Network for Low-Dose CT via Transfer Learning from a 2D Trained Network

Apr 29, 2018

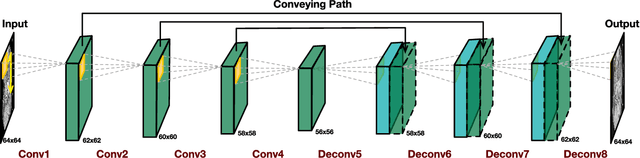

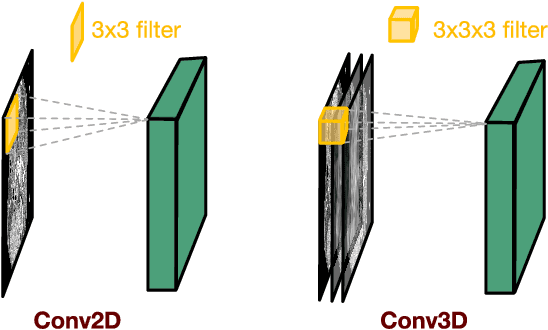

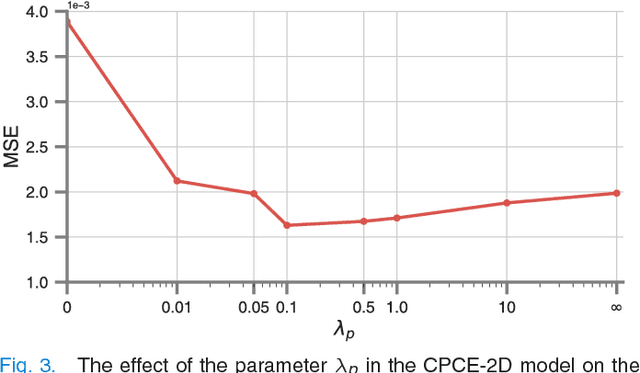

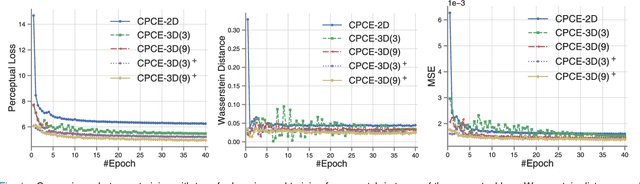

Abstract:Low-dose computed tomography (CT) has attracted major attention in the medical imaging field, since CT-associated x-ray radiation carries health risks for patients. The reduction of CT radiation dose, however, compromises the signal-to-noise ratio, and may compromise the image quality and the diagnostic performance. Recently, deep-learning-based algorithms have achieved promising results in low-dose CT denoising, especially those based on convolutional neural networks (CNNs) and generative adversarial networks (GANs). This article introduces a Contracting Path-based Convolutional Encoder-decoder (CPCE) network in 2D and 3D configurations within the GAN framework for low-dose CT denoising. A novel feature of our approach is that an initial 3D CPCE denoising model can be directly obtained by extending a trained 2D CNN and then fine-tuned to incorporate 3D spatial information from adjacent slices. Based on the transfer learning from 2D to 3D, the 3D network converges faster and achieves better denoising performance than one trained from scratch. By comparing the CPCE with recently published methods on the simulated Mayo dataset and the real MGH dataset, we demonstrate that the 3D CPCE denoising model performs better, suppressing image noise and preserving subtle structures.
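One way a trained 2D filter could be extended to 3D is sketched below. The abstract only states that the 3D model is obtained "by extending a trained 2D CNN", so the specific scheme here (trained weights in the central depth slice, zeros in the others) is an assumption, and the helper names `correlate2d`, `inflate_kernel`, and `correlate3d_single_slice` are hypothetical. Under this scheme the 3D filter initially reproduces the 2D filter's response on the middle slice, and fine-tuning can then learn nonzero weights for the adjacent slices.

```python
def correlate2d(image, kernel):
    """'Valid' 2D cross-correlation on nested lists (no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def inflate_kernel(kernel2d, depth=3):
    """Extend a 2D kernel to 3D: the 2D weights go in the central depth
    slice and every other slice is zero (assumed scheme, see lead-in)."""
    if depth % 2 == 0:
        raise ValueError("depth must be odd so that a central slice exists")
    kh, kw = len(kernel2d), len(kernel2d[0])
    zeros = [[0.0] * kw for _ in range(kh)]
    mid = depth // 2
    return [[row[:] for row in (kernel2d if d == mid else zeros)]
            for d in range(depth)]


def correlate3d_single_slice(volume, kernel3d):
    """3D cross-correlation where the volume depth equals the kernel
    depth, so exactly one output slice is produced."""
    if len(volume) != len(kernel3d):
        raise ValueError("volume depth must equal kernel depth")
    per_slice = [correlate2d(v, k) for v, k in zip(volume, kernel3d)]
    out_h, out_w = len(per_slice[0]), len(per_slice[0][0])
    return [[sum(s[i][j] for s in per_slice) for j in range(out_w)]
            for i in range(out_h)]


# Three adjacent 4x4 slices and a 3x3 "trained" 2D kernel (toy values).
volume = [[[float(d + i * j) for j in range(4)] for i in range(4)]
          for d in range(3)]
kernel2d = [[1.0, 0.0, -1.0],
            [2.0, 0.0, -2.0],
            [1.0, 0.0, -1.0]]

kernel3d = inflate_kernel(kernel2d, depth=3)
out_3d = correlate3d_single_slice(volume, kernel3d)
out_2d = correlate2d(volume[1], kernel2d)  # 2D filter on the middle slice
```

Here `out_3d` and `out_2d` coincide, which is the sense in which the inflated 3D filter starts from the behavior of the 2D one.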

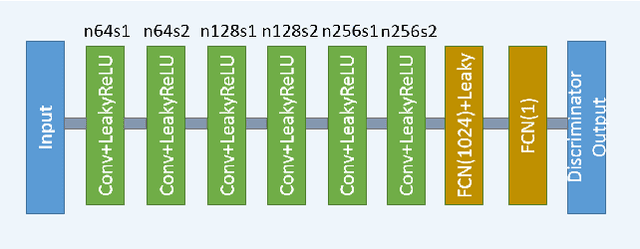

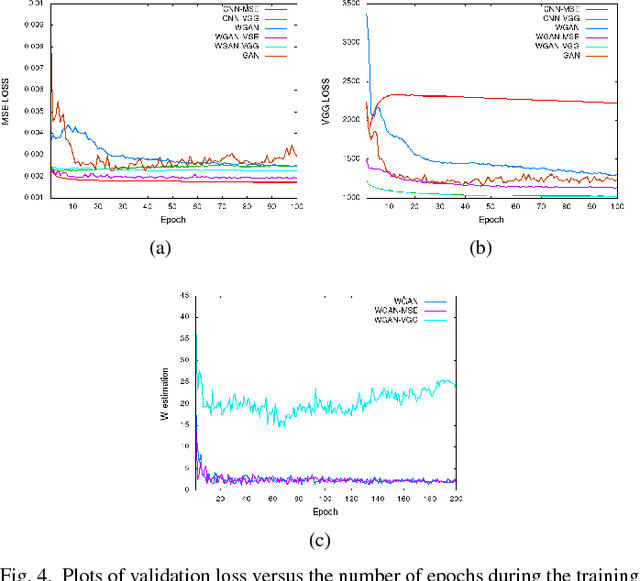

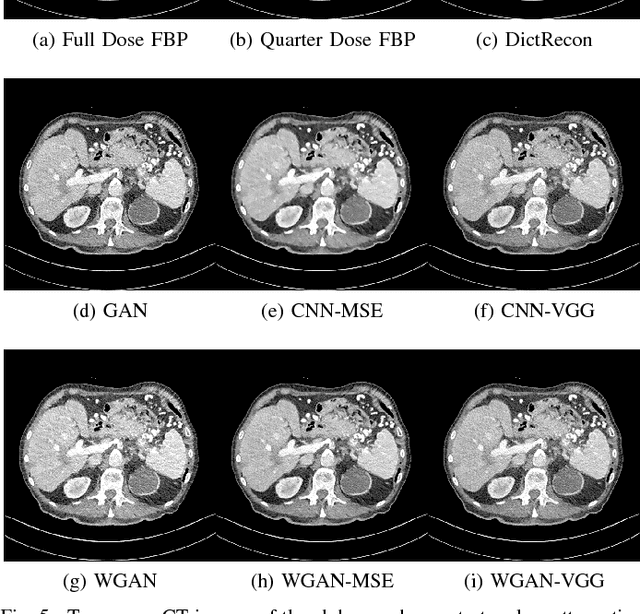

Low Dose CT Image Denoising Using a Generative Adversarial Network with Wasserstein Distance and Perceptual Loss

Apr 24, 2018

Abstract:In this paper, we introduce a new CT image denoising method based on the generative adversarial network (GAN) with Wasserstein distance and perceptual similarity. The Wasserstein distance is a key concept of optimal transport theory, and promises to improve the performance of the GAN. The perceptual loss compares the perceptual features of a denoised output against those of the ground truth in an established feature space, while the GAN helps migrate the data noise distribution from strong to weak. Therefore, our proposed method transfers our knowledge of visual perception to the image denoising task, and is capable of not only reducing the image noise level but also keeping the critical information at the same time. Promising results have been obtained in our experiments with clinical CT images.
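For intuition about the Wasserstein distance, the sketch below computes the Wasserstein-1 distance between two equal-size one-dimensional samples, where it reduces to the mean absolute difference between sorted values. This is only an illustration of the concept: a Wasserstein GAN estimates the distance between high-dimensional distributions with a learned critic, not with this closed form, and the function name `wasserstein1_1d` is hypothetical.

```python
def wasserstein1_1d(xs, ys):
    """Wasserstein-1 distance between two equal-size 1D empirical samples.

    In one dimension the optimal transport plan matches the i-th smallest
    value of one sample to the i-th smallest value of the other.
    """
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal sample sizes")
    if not xs:
        raise ValueError("samples must be non-empty")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


# Shifting every point by 3 moves the whole distribution by 3.
shifted = wasserstein1_1d([0.0, 1.0, 2.0], [3.0, 4.0, 5.0])
# The order of the samples does not matter, only their distribution.
same = wasserstein1_1d([2.0, 0.0, 1.0], [1.0, 2.0, 0.0])
```

Unlike divergences that saturate when two distributions do not overlap, this distance keeps growing with the size of the shift, which is one reason it is attractive as a GAN training signal.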

CT Image Denoising with Perceptive Deep Neural Networks

Feb 22, 2017

Abstract:Increasing use of CT in modern medical practice has raised concerns over associated radiation dose. Reduction of radiation dose associated with CT can increase noise and artifacts, which can adversely affect diagnostic confidence. Denoising low-dose CT images, on the other hand, can help improve diagnostic confidence, but it is a challenging problem because of its ill-posed nature: one noisy image patch may correspond to many different output patches. In the past decade, machine-learning-based approaches have made impressive progress in this direction. However, most of those methods, including the recently popularized deep learning techniques, aim to minimize the mean-squared error (MSE) between a denoised CT image and the ground truth, which loses important structural details through over-smoothing even when the PSNR-based performance measure looks favorable. In this work, we introduce a new perceptual similarity measure as the objective function for a deep convolutional neural network to facilitate CT image denoising. Instead of directly computing MSE for pixel-to-pixel intensity loss, we compare the perceptual features of a denoised output against those of the ground truth in a feature space. Therefore, our proposed method is capable of not only reducing the image noise levels, but also keeping the critical structural information at the same time. Promising results have been obtained in our experiments with a large number of CT images.
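The contrast between pixel-wise MSE and a feature-space loss can be sketched with a toy example. The abstract does not name the feature extractor, so `toy_features` below (rectified horizontal and vertical differences) is a hypothetical stand-in for a learned network, chosen only because it responds to edges. The images are made-up 4x4 arrays, not CT data.

```python
def mse(a, b):
    """Mean squared error between two equal-size 2D nested lists."""
    n = len(a) * len(a[0])
    return sum((x - y) ** 2
               for row_a, row_b in zip(a, b)
               for x, y in zip(row_a, row_b)) / n


def toy_features(image):
    """Hypothetical stand-in for a learned feature extractor: two feature
    maps holding rectified horizontal and vertical intensity differences."""
    h, w = len(image), len(image[0])
    horiz = [[max(0.0, image[i][j + 1] - image[i][j]) for j in range(w - 1)]
             for i in range(h - 1)]
    vert = [[max(0.0, image[i + 1][j] - image[i][j]) for j in range(w - 1)]
            for i in range(h - 1)]
    return [horiz, vert]


def perceptual_loss(denoised, reference, features=toy_features):
    """MSE computed between feature maps instead of between pixels."""
    maps_d, maps_r = features(denoised), features(reference)
    return sum(mse(d, r) for d, r in zip(maps_d, maps_r)) / len(maps_d)


# Reference image with a sharp vertical edge.
reference = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
# Candidate A: the edge is smeared out (over-smoothing).
smoothed = [[0.0, 0.25, 0.75, 1.0] for _ in range(4)]
# Candidate B: the edge is intact but every pixel is offset by 0.25.
offset = [[0.25, 0.25, 1.25, 1.25] for _ in range(4)]

pixel_a, pixel_b = mse(smoothed, reference), mse(offset, reference)
feat_a = perceptual_loss(smoothed, reference)
feat_b = perceptual_loss(offset, reference)
```

In this toy setup pixel-wise MSE prefers the smoothed candidate, while the feature-space loss prefers the candidate that keeps the edge, which mirrors the over-smoothing concern raised in the abstract.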
