Qiang Zhu

ERNIE 5.0 Technical Report

Feb 04, 2026

Abstract:In this report, we introduce ERNIE 5.0, a natively autoregressive foundation model designed for unified multimodal understanding and generation across text, image, video, and audio. All modalities are trained from scratch under a unified next-group-of-tokens prediction objective, based on an ultra-sparse mixture-of-experts (MoE) architecture with modality-agnostic expert routing. To address practical challenges in large-scale deployment under diverse resource constraints, ERNIE 5.0 adopts a novel elastic training paradigm. Within a single pre-training run, the model learns a family of sub-models with varying depths, expert capacities, and routing sparsity, enabling flexible trade-offs among performance, model size, and inference latency in memory- or time-constrained scenarios. Moreover, we systematically address the challenges of scaling reinforcement learning to unified foundation models, thereby guaranteeing efficient and stable post-training under ultra-sparse MoE architectures and diverse multimodal settings. Extensive experiments demonstrate that ERNIE 5.0 achieves strong and balanced performance across multiple modalities. To the best of our knowledge, among publicly disclosed models, ERNIE 5.0 represents the first production-scale realization of a trillion-parameter unified autoregressive model that supports both multimodal understanding and generation. To facilitate further research, we present detailed visualizations of modality-agnostic expert routing in the unified model, alongside comprehensive empirical analysis of elastic training, aiming to offer profound insights to the community.
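As a rough illustration of what sparse expert routing means, the sketch below implements generic top-k gating: a router scores every expert for a token, only the k best experts are kept, and their scores are renormalized with a softmax. This is a textbook formulation and not ERNIE 5.0's actual router; the function name `top_k_route`, the logits, and the choice of k are all assumptions made for the example. Varying k is one simple way to picture a change in routing sparsity.

```python
import math


def top_k_route(logits, k):
    """Return {expert_index: weight} for the k highest-scoring experts.

    logits: one router score per expert for a single token (hypothetical values).
    k: number of experts to activate; smaller k means sparser routing.
    """
    if not 1 <= k <= len(logits):
        raise ValueError("k must be between 1 and the number of experts")
    # Indices of the k largest logits; ties keep the lower index first.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax restricted to the selected experts (shifted for numerical stability).
    shift = max(logits[i] for i in top)
    exps = {i: math.exp(logits[i] - shift) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


# Example: four experts, two of them activated for this token.
weights = top_k_route([0.1, 2.0, -1.0, 2.0], k=2)
```

The returned weights always sum to 1 over the selected experts, so the token's output can be formed as a weighted sum of just those experts' outputs.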

LingLanMiDian: Systematic Evaluation of LLMs on TCM Knowledge and Clinical Reasoning

Feb 02, 2026

Abstract:Large language models (LLMs) are advancing rapidly in medical NLP, yet Traditional Chinese Medicine (TCM), with its distinctive ontology, terminology, and reasoning patterns, requires domain-faithful evaluation. Existing TCM benchmarks are fragmented in coverage and scale and rely on non-unified or generation-heavy scoring that hinders fair comparison. We present the LingLanMiDian (LingLan) benchmark, a large-scale, expert-curated, multi-task suite that unifies evaluation across knowledge recall, multi-hop reasoning, information extraction, and real-world clinical decision-making. LingLan introduces a consistent metric design, a synonym-tolerant protocol for clinical labels, a per-dataset 400-item Hard subset, and a reframing of diagnosis and treatment recommendation into single-choice decision recognition. We conduct comprehensive, zero-shot evaluations on 14 leading open-source and proprietary LLMs, providing a unified perspective on their strengths and limitations in TCM commonsense knowledge understanding, reasoning, and clinical decision support; critically, the evaluation on the Hard subset reveals a substantial gap between current models and human experts in TCM-specialized reasoning. By bridging fundamental knowledge and applied reasoning through standardized evaluation, LingLan establishes a unified, quantitative, and extensible foundation for advancing TCM LLMs and domain-specific medical AI research. All evaluation data and code are available at https://github.com/TCMAI-BJTU/LingLan and http://tcmnlp.com.
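The abstract does not spell out how the synonym-tolerant protocol works, so the following is only a minimal sketch of one plausible reading: map each label to a canonical form through a synonym table before comparing prediction and reference. The function names, the table, and the placeholder labels are hypothetical and are not taken from the LingLan code base.

```python
def canonicalize(label, synonyms):
    """Map a label to its canonical form (case-insensitive, whitespace-trimmed)."""
    key = label.strip().lower()
    return synonyms.get(key, key)


def synonym_tolerant_accuracy(predictions, references, synonyms):
    """Fraction of predictions that match the reference after canonicalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    hits = sum(
        canonicalize(p, synonyms) == canonicalize(r, synonyms)
        for p, r in zip(predictions, references)
    )
    return hits / len(references)


# Placeholder labels only; a real table would be curated by domain experts.
synonyms = {"variant a": "label a"}
score = synonym_tolerant_accuracy(
    ["Variant A", "label b"], ["label a", "label c"], synonyms
)
```

Under exact string matching the first prediction would count as wrong; with the synonym table it counts as correct, which is the kind of difference such a protocol is meant to remove.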

UCPO: Uncertainty-Aware Policy Optimization

Jan 30, 2026

Abstract:The key to building trustworthy Large Language Models (LLMs) lies in endowing them with inherent uncertainty expression capabilities to mitigate the hallucinations that restrict their high-stakes applications. However, existing RL paradigms such as GRPO often suffer from Advantage Bias due to binary decision spaces and static uncertainty rewards, inducing either excessive conservatism or overconfidence. To tackle this challenge, this paper unveils the root causes of reward hacking and overconfidence in current RL paradigms incorporating uncertainty-based rewards, based on which we propose the UnCertainty-Aware Policy Optimization (UCPO) framework. UCPO employs Ternary Advantage Decoupling to separate and independently normalize deterministic and uncertain rollouts, thereby eliminating advantage bias. Furthermore, a Dynamic Uncertainty Reward Adjustment mechanism is introduced to calibrate uncertainty weights in real-time according to model evolution and instance difficulty. Experimental results in mathematical reasoning and general tasks demonstrate that UCPO effectively resolves the reward imbalance, significantly improving the reliability and calibration of the model beyond its knowledge boundaries.
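To make the idea of separating and independently normalizing deterministic and uncertain rollouts concrete, here is a minimal sketch. It standardizes rewards within each of the two subsets of a rollout group instead of across the whole group. The abstract does not give the exact formulation, so this is an assumption-laden illustration and not UCPO itself; the function names, the `eps` constant, and the reward values are hypothetical.

```python
from statistics import mean, pstdev


def normalize(values, eps=1e-6):
    """Standardize rewards to zero mean and (approximately) unit variance."""
    if not values:
        return []
    mu = mean(values)
    sigma = pstdev(values)
    return [(v - mu) / (sigma + eps) for v in values]


def decoupled_advantages(rewards, is_uncertain):
    """Compute advantages separately for deterministic and uncertain rollouts.

    rewards: scalar reward per rollout for one prompt (hypothetical values).
    is_uncertain: True where the rollout expressed uncertainty.
    """
    advantages = [0.0] * len(rewards)
    for flag in (False, True):
        idx = [i for i, u in enumerate(is_uncertain) if u == flag]
        for i, adv in zip(idx, normalize([rewards[i] for i in idx])):
            advantages[i] = adv
    return advantages


# Two deterministic rollouts (right, wrong) and two uncertain ones.
adv = decoupled_advantages([1.0, 0.0, 0.5, 0.5], [False, False, True, True])
```

In this toy group the uncertain rollouts receive zero advantage because they share the same reward, while the deterministic rollouts are compared only against each other, so a fixed uncertainty reward cannot shift their baseline.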

Crystal Generation using the Fully Differentiable Pipeline and Latent Space Optimization

Jan 08, 2026

Abstract:We present a materials generation framework that couples a symmetry-conditioned variational autoencoder (CVAE) with a differentiable SO(3) power spectrum objective to steer candidates toward a specified local environment under crystallographic constraints. In particular, we implement a fully differentiable pipeline that performs batch-wise optimization on both direct and latent crystallographic representations. Using GPU acceleration, the implementation achieves about a fivefold speedup compared to our previous CPU workflow, while yielding comparable outcomes. In addition, we introduce an optimization strategy that alternately performs optimization on the direct and latent crystal representations. This dual-level relaxation approach can effectively overcome local barriers defined by different objective gradients, thus increasing the success rate of generating complex structures satisfying the target local environments. This framework can be extended to systems consisting of multi-components and multi-environments, providing a scalable route to generate material structures with the target local environment.

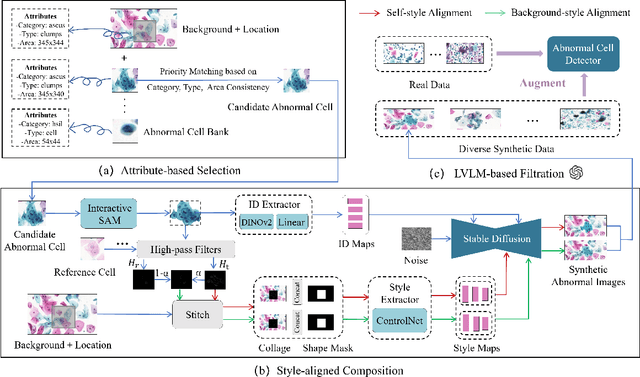

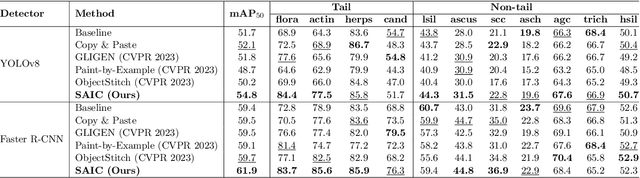

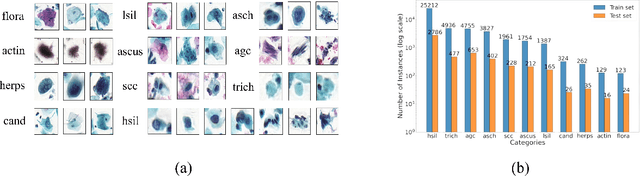

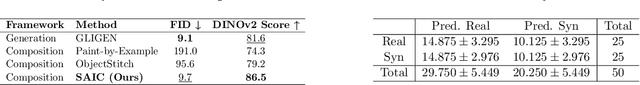

Style-Aligned Image Composition for Robust Detection of Abnormal Cells in Cytopathology

Jun 26, 2025

Abstract:Challenges such as the lack of high-quality annotations, long-tailed data distributions, and inconsistent staining styles pose significant obstacles to training neural networks to detect abnormal cells in cytopathology robustly. This paper proposes a style-aligned image composition (SAIC) method that composes high-fidelity and style-preserved pathological images to enhance the effectiveness and robustness of detection models. Without additional training, SAIC first selects an appropriate candidate from the abnormal cell bank based on attribute guidance. Then, it employs a high-frequency feature reconstruction to achieve a style-aligned and high-fidelity composition of abnormal cells and pathological backgrounds. Finally, it introduces a large vision-language model to filter high-quality synthesis images. Experimental results demonstrate that incorporating SAIC-synthesized images effectively enhances the performance and robustness of abnormal cell detection for tail categories and styles, thereby improving overall detection performance. The comprehensive quality evaluation further confirms the generalizability and practicality of SAIC in clinical application scenarios. Our code will be released at https://github.com/Joey-Qi/SAIC.

Compressed Video Super-Resolution based on Hierarchical Encoding

Jun 17, 2025

Abstract:This paper presents a general-purpose video super-resolution (VSR) method, dubbed VSR-HE, specifically designed to enhance the perceptual quality of compressed content. Targeting scenarios characterized by heavy compression, the method upscales low-resolution videos by a ratio of four, from 180p to 720p or from 270p to 1080p. VSR-HE adopts hierarchical encoding transformer blocks and has been optimized to eliminate a wide range of compression artifacts commonly introduced by H.265/HEVC encoding across various quantization parameter (QP) levels. To ensure robustness and generalization, the model is trained and evaluated under diverse compression settings, allowing it to effectively restore fine-grained details and preserve visual fidelity. The proposed VSR-HE has been officially submitted to the ICME 2025 Grand Challenge on VSR for Video Conferencing (Team BVI-VSR), under both Track 1 (General-Purpose Real-World Video Content) and Track 2 (Talking Head Videos).

AI-Assisted Rapid Crystal Structure Generation Towards a Target Local Environment

Jun 09, 2025

Abstract:In the field of material design, traditional crystal structure prediction approaches require extensive structural sampling through computationally expensive energy minimization methods using either force fields or quantum mechanical simulations. While emerging artificial intelligence (AI) generative models have shown great promise in generating realistic crystal structures more rapidly, most existing models fail to account for the unique symmetries and periodicity of crystalline materials, and they are limited to handling structures with only a few tens of atoms per unit cell. Here, we present a symmetry-informed AI generative approach called Local Environment Geometry-Oriented Crystal Generator (LEGO-xtal) that overcomes these limitations. Our method generates initial structures using AI models trained on an augmented small dataset, and then optimizes them using machine learning structure descriptors rather than traditional energy-based optimization. We demonstrate the effectiveness of LEGO-xtal by expanding from 25 known low-energy sp2 carbon allotropes to over 1,700, all within 0.5 eV/atom of the ground-state energy of graphite. This framework offers a generalizable strategy for the targeted design of materials with modular building blocks, such as metal-organic frameworks and next-generation battery materials.

Image-to-Image Translation with Diffusion Transformers and CLIP-Based Image Conditioning

May 21, 2025

Abstract:Image-to-image translation aims to learn a mapping between a source and a target domain, enabling tasks such as style transfer, appearance transformation, and domain adaptation. In this work, we explore a diffusion-based framework for image-to-image translation by adapting Diffusion Transformers (DiT), which combine the denoising capabilities of diffusion models with the global modeling power of transformers. To guide the translation process, we condition the model on image embeddings extracted from a pre-trained CLIP encoder, allowing for fine-grained and structurally consistent translations without relying on text or class labels. We incorporate both a CLIP similarity loss to enforce semantic consistency and an LPIPS perceptual loss to enhance visual fidelity during training. We validate our approach on two benchmark datasets: face2comics, which translates real human faces to comic-style illustrations, and edges2shoes, which translates edge maps to realistic shoe images. Experimental results demonstrate that DiT, combined with CLIP-based conditioning and perceptual similarity objectives, achieves high-quality, semantically faithful translations, offering a promising alternative to GAN-based models for paired image-to-image translation tasks.
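A similarity loss on embeddings is commonly written as one minus the cosine similarity between the embedding of the generated image and that of the target. The sketch below shows that generic form on plain Python lists; whether the paper uses exactly this expression is not stated in the abstract, and the function names and vectors are assumptions. In practice the vectors would come from a CLIP image encoder, which is omitted here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("vectors must be non-zero")
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


def similarity_loss(generated_embedding, target_embedding):
    """0 when the embeddings point the same way, up to 2 when they are opposite."""
    return 1.0 - cosine_similarity(generated_embedding, target_embedding)


# Stand-in embeddings; real ones would be high-dimensional encoder outputs.
loss = similarity_loss([0.2, 0.9, 0.1], [0.25, 0.85, 0.05])
```

Because the loss depends only on direction and not on magnitude, it penalizes semantic drift in embedding space without constraining pixel values, which is why it is usually paired with a perceptual or pixel-level term.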

NTIRE 2025 Challenge on Image Super-Resolution (×4): Methods and Results

Apr 20, 2025

Abstract:This paper presents the NTIRE 2025 image super-resolution (×4) challenge, one of the associated competitions of the 10th NTIRE Workshop at CVPR 2025. The challenge aims to recover high-resolution (HR) images from low-resolution (LR) counterparts generated through bicubic downsampling with a ×4 scaling factor. The objective is to develop effective network designs or solutions that achieve state-of-the-art SR performance. To reflect the dual objectives of image SR research, the challenge includes two sub-tracks: (1) a restoration track, which emphasizes pixel-wise accuracy and ranks submissions based on PSNR; (2) a perceptual track, which focuses on visual realism and ranks results by a perceptual score. A total of 286 participants registered for the competition, with 25 teams submitting valid entries. This report summarizes the challenge design, datasets, evaluation protocol, the main results, and methods of each team. The challenge serves as a benchmark to advance the state of the art and foster progress in image SR.
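For reference, PSNR is defined as 10·log10(MAX² / MSE), where MSE is the mean squared error between the reference and the restored image and MAX is the largest possible pixel value. The sketch below computes it over flat lists of pixel values. It illustrates the standard definition only; the challenge's own evaluation script may add steps such as border cropping or a particular color space, and the function name and sample values here are assumptions.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(reference) != len(restored) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - s) ** 2 for r, s in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Every pixel off by one gray level on an 8-bit scale (MSE = 1).
value = psnr([10, 20, 30, 40], [11, 21, 31, 41])
```

Higher is better: the logarithmic scale means that halving the MSE raises PSNR by about 3 dB.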

The Tenth NTIRE 2025 Efficient Super-Resolution Challenge Report

Apr 14, 2025

Abstract:This paper presents a comprehensive review of the NTIRE 2025 Challenge on Single-Image Efficient Super-Resolution (ESR). The challenge aimed to advance the development of deep models that optimize key computational metrics, i.e., runtime, parameters, and FLOPs, while achieving a PSNR of at least 26.90 dB on the DIV2K_LSDIR_valid dataset and 26.99 dB on the DIV2K_LSDIR_test dataset. The challenge drew 244 registered entrants, with 43 teams submitting valid entries. This report analyzes these methods and results, emphasizing advances in state-of-the-art single-image ESR techniques. The analysis highlights innovative approaches and establishes benchmarks for future research in the field.
