Pierre-Marc Jodoin

MYRIAD

Clinical-ComBAT: a diffusion-weighted MRI harmonization method for clinical applications

Nov 06, 2025

Abstract: Diffusion-weighted magnetic resonance imaging (DW-MRI) derived scalar maps are effective for assessing neurodegenerative diseases and microstructural properties of white matter in a large number of brain conditions. However, DW-MRI data from multiple acquisition sites cannot be combined without harmonization to mitigate scanner-specific biases. While the widely used ComBAT method reduces site effects in research, its reliance on linear covariate relationships, homogeneous populations, fixed site numbers, and well-populated sites constrains its clinical use. To overcome these limitations, we propose Clinical-ComBAT, a method designed for real-world clinical scenarios. Clinical-ComBAT harmonizes each site independently, enabling flexibility as new data and clinics are introduced. It incorporates a non-linear polynomial data model, site-specific harmonization referenced to a normative site, and variance priors adaptable to small cohorts. It further includes hyperparameter tuning and a goodness-of-fit metric for harmonization assessment. We demonstrate its effectiveness on simulated and real data, showing improved alignment of diffusion metrics and enhanced applicability for normative modeling.

Reinforcement Learning for Unsupervised Domain Adaptation in Spatio-Temporal Echocardiography Segmentation

Oct 16, 2025

Abstract: Domain adaptation methods aim to bridge the gap between datasets by enabling knowledge transfer across domains, reducing the need for additional expert annotations. However, many approaches struggle with reliability in the target domain, an issue particularly critical in medical image segmentation, where accuracy and anatomical validity are essential. This challenge is further exacerbated in spatio-temporal data, where the lack of temporal consistency can significantly degrade segmentation quality, and particularly in echocardiography, where the presence of artifacts and noise can further hinder segmentation performance. To address these issues, we present RL4Seg3D, an unsupervised domain adaptation framework for 2D + time echocardiography segmentation. RL4Seg3D integrates novel reward functions and a fusion scheme to enhance key landmark precision in its segmentations while processing full-sized input videos. By leveraging reinforcement learning for image segmentation, our approach improves accuracy, anatomical validity, and temporal consistency while also providing, as a beneficial side effect, a robust uncertainty estimator, which can be used at test time to further enhance segmentation performance. We demonstrate the effectiveness of our framework on over 30,000 echocardiographic videos, showing that it outperforms standard domain adaptation techniques without the need for any labels on the target domain. Code is available at https://github.com/arnaudjudge/RL4Seg3D.

Generation of realistic cardiac ultrasound sequences with ground truth motion and speckle decorrelation

Sep 05, 2025

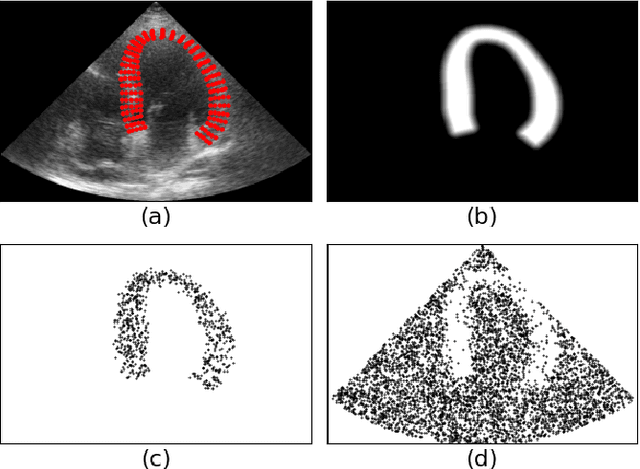

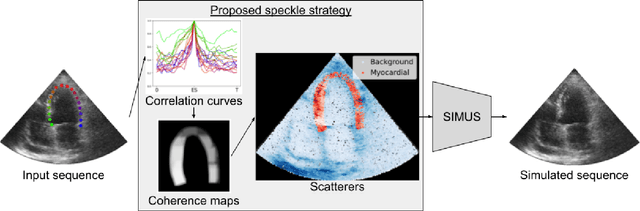

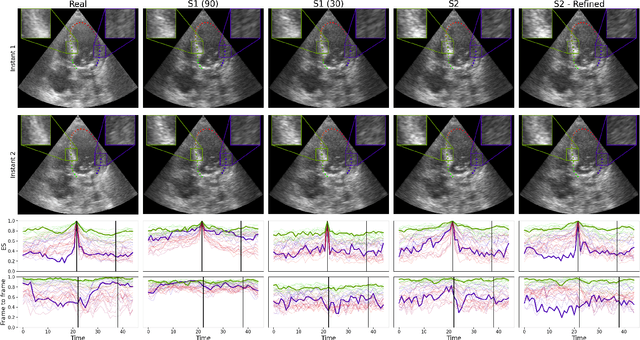

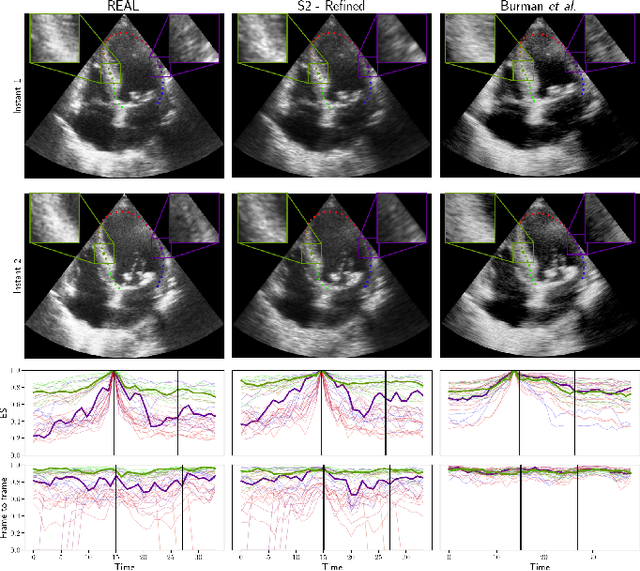

Abstract: Simulated ultrasound image sequences are key for training and validating machine learning algorithms for left ventricular strain estimation. Several simulation pipelines have been proposed to generate sequences with corresponding ground truth motion, but they suffer from limited realism as they do not consider speckle decorrelation. In this work, we address this limitation by proposing an improved simulation framework that explicitly accounts for speckle decorrelation. Our method builds on an existing ultrasound simulation pipeline by incorporating a dynamic model of speckle variation. Starting from real ultrasound sequences and myocardial segmentations, we generate meshes that guide image formation. Instead of applying a fixed ratio of myocardial and background scatterers, we introduce a coherence map that adapts locally over time. This map is derived from correlation values measured directly from the real ultrasound data, ensuring that simulated sequences capture the characteristic temporal changes observed in practice. We evaluated the realism of our approach using ultrasound data from 98 patients in the CAMUS database. Performance was assessed by comparing correlation curves from real and simulated images. The proposed method achieved a lower mean absolute error than the baseline pipeline, indicating that it more faithfully reproduces the decorrelation behavior seen in clinical data.
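
As a rough illustration of the evaluation idea (comparing correlation curves from real and simulated images), the sketch below computes a correlation curve against the first frame and the mean absolute error between two such curves. It is not the authors' implementation: the function names, the use of Pearson correlation against the first frame, and the representation of frames as flat lists of intensities are all assumptions made for this example.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation between two equally sized intensity lists.

    Assumes neither list is constant (otherwise the denominator is zero).
    """
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def correlation_curve(frames):
    """Correlation of every frame with the first frame (hypothetical choice)."""
    reference = frames[0]
    return [pearson(reference, frame) for frame in frames]


def mean_absolute_error(curve_a, curve_b):
    """Mean absolute difference between two curves of equal length."""
    return sum(abs(p - q) for p, q in zip(curve_a, curve_b)) / len(curve_a)
```

Under these assumptions, a simulated sequence whose curve stays closer to the real curve yields a smaller `mean_absolute_error`, which is the kind of comparison the abstract reports.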

Exploring the robustness of TractOracle methods in RL-based tractography

Jul 15, 2025

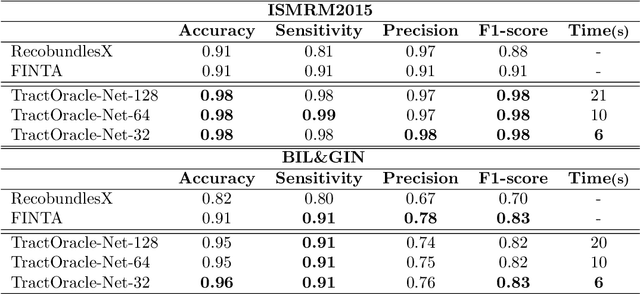

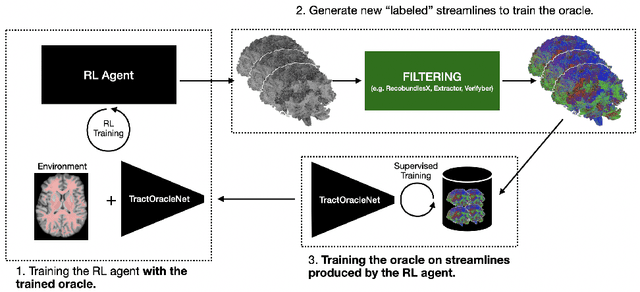

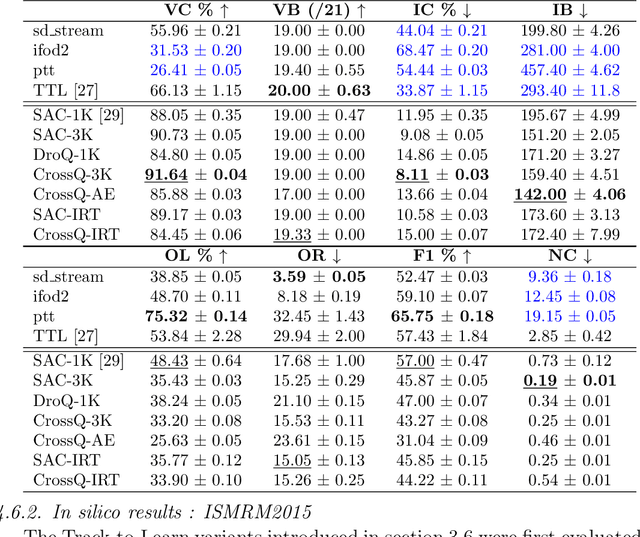

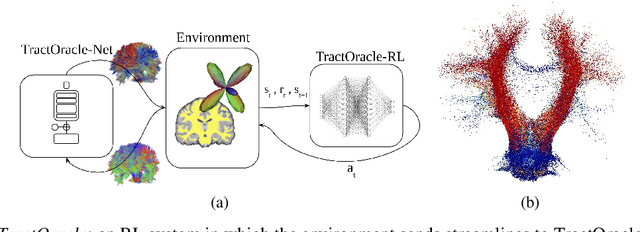

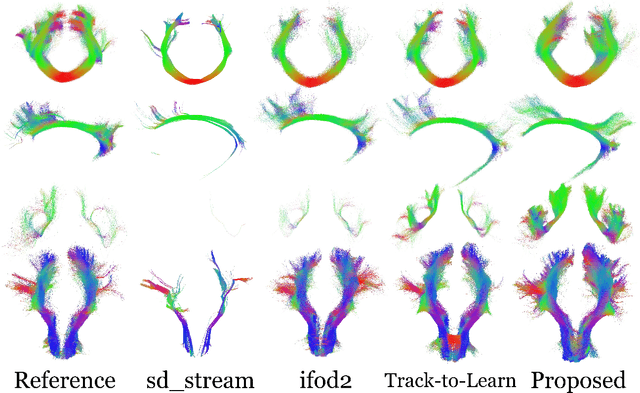

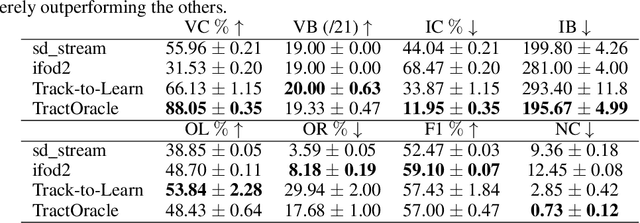

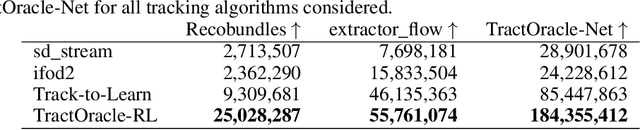

Abstract: Tractography algorithms leverage diffusion MRI to reconstruct the fibrous architecture of the brain's white matter. Among machine learning approaches, reinforcement learning (RL) has emerged as a promising framework for tractography, outperforming traditional methods in several key aspects. TractOracle-RL, a recent RL-based approach, reduces false positives by incorporating anatomical priors into the training process via a reward-based mechanism. In this paper, we investigate four extensions of the original TractOracle-RL framework by integrating recent advances in RL, and we evaluate their performance across five diverse diffusion MRI datasets. Results demonstrate that combining an oracle with the RL framework consistently leads to robust and reliable tractography, regardless of the specific method or dataset used. We also introduce a novel RL training scheme called Iterative Reward Training (IRT), inspired by the Reinforcement Learning from Human Feedback (RLHF) paradigm. Instead of relying on human input, IRT leverages bundle filtering methods to iteratively refine the oracle's guidance throughout training. Experimental results show that RL methods trained with oracle feedback significantly outperform widely used tractography techniques in terms of accuracy and anatomical validity.

ComBAT Harmonization for diffusion MRI: Challenges and Best Practices

May 19, 2025

Abstract: Over the years, ComBAT has become the standard method for harmonizing MRI-derived measurements, with its ability to compensate for site-related additive and multiplicative biases while preserving biological variability. However, ComBAT relies on a set of assumptions that, when violated, can result in flawed harmonization. In this paper, we thoroughly review ComBAT's mathematical foundation, outline these assumptions, and explore their implications for the demographic composition necessary for optimal results. Through a series of experiments involving a slightly modified version of ComBAT called Pairwise-ComBAT, tailored for normative modeling applications, we assess the impact of various population characteristics, including population size, age distribution, the absence of certain covariates, and the magnitude of additive and multiplicative factors. Based on these experiments, we present five essential recommendations that should be carefully considered to enhance consistency and support reproducibility, two essential factors for open science, collaborative research, and real-life clinical deployment.
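
To make the notion of additive and multiplicative site biases concrete, here is a minimal location-scale sketch that aligns a moving site to a reference site while keeping a linear age trend. It is a deliberately simplified assumption-laden example, not ComBAT or Pairwise-ComBAT as published: it handles a single feature and a single covariate, fits the covariate trend on the reference site only, and omits the empirical Bayes step. All function and variable names are hypothetical.

```python
from statistics import mean, pstdev


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    return slope, my - slope * mx


def harmonize_to_reference(age_ref, y_ref, age_mov, y_mov):
    """Map moving-site values onto the reference site (simplified sketch)."""
    # Covariate (age) trend estimated on the reference site: an assumption.
    slope, intercept = fit_line(age_ref, y_ref)
    res_ref = [y - (intercept + slope * a) for a, y in zip(age_ref, y_ref)]
    res_mov = [y - (intercept + slope * a) for a, y in zip(age_mov, y_mov)]
    # Additive bias: mean shift of the moving-site residuals.
    gamma = mean(res_mov)
    # Multiplicative bias: ratio of residual spreads between the two sites.
    delta = pstdev(res_mov) / pstdev(res_ref)
    # Remove both biases, then add the preserved age trend back.
    return [
        intercept + slope * a + (r - gamma) / delta
        for a, r in zip(age_mov, res_mov)
    ]
```

After this mapping, the moving-site residuals around the reference trend have zero mean and the same spread as the reference residuals, while the age effect is left in place. With small or demographically unbalanced sites these plain estimates become unreliable, which is the kind of assumption violation the abstract warns about.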

Uncertainty Propagation for Echocardiography Clinical Metric Estimation via Contour Sampling

Feb 18, 2025

Abstract: Echocardiography plays a fundamental role in the extraction of important clinical parameters (e.g. left ventricular volume and ejection fraction) required to determine the presence and severity of heart-related conditions. When deploying automated techniques for computing these parameters, uncertainty estimation is crucial for assessing their utility. Since clinical parameters are usually derived from segmentation maps, there is no clear path for converting pixel-wise uncertainty values into uncertainty estimates in the downstream clinical metric calculation. In this work, we propose a novel uncertainty estimation method based on contouring rather than segmentation. Our method explicitly predicts contour location uncertainty from which contour samples can be drawn. Finally, the sampled contours can be used to propagate uncertainty to clinical metrics. Our proposed method provides accurate uncertainty estimates not only for the task of contouring but also for the downstream clinical metrics on two cardiac ultrasound datasets. Code is available at: https://github.com/ThierryJudge/contouring-uncertainty.
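
The propagation step can be pictured with a small Monte Carlo sketch: draw contours from a per-point location uncertainty, compute a metric on each sample, and summarize the resulting distribution. This is only an illustration under stated assumptions, not the code from the linked repository: it assumes independent isotropic Gaussian noise per contour point and uses the enclosed area as a stand-in for a clinical metric. The function names are hypothetical.

```python
import random
from statistics import mean, stdev


def polygon_area(points):
    """Area of a closed polygon via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % n]
        total += x0 * y1 - x1 * y0
    return abs(total) / 2


def sample_area_distribution(mean_contour, sigmas, n_samples=500, seed=0):
    """Propagate contour uncertainty to the area by sampling contours.

    mean_contour: list of (x, y) predicted contour points.
    sigmas: one standard deviation per point (assumed isotropic, independent).
    Returns the mean and standard deviation of the sampled areas.
    """
    rng = random.Random(seed)
    areas = []
    for _ in range(n_samples):
        contour = [
            (rng.gauss(x, s), rng.gauss(y, s))
            for (x, y), s in zip(mean_contour, sigmas)
        ]
        areas.append(polygon_area(contour))
    return mean(areas), stdev(areas)
```

The returned standard deviation is an uncertainty estimate expressed directly in the units of the metric, which is what a pixel-wise uncertainty map does not readily provide.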

Domain Adaptation of Echocardiography Segmentation Via Reinforcement Learning

Jun 25, 2024

Abstract: The transferability of deep learning segmentation models across medical imaging domains remains a significant challenge, particularly when adapting these models to a target domain with insufficient annotated data for effective fine-tuning. While existing domain adaptation (DA) methods propose strategies to alleviate this problem, these methods do not explicitly incorporate human-verified segmentation priors, compromising the potential of a model to produce anatomically plausible segmentations. We introduce RL4Seg, an innovative reinforcement learning framework that reduces the need for large expertly annotated datasets in the target domain and eliminates the need for lengthy manual human review. Using a target dataset of 10,000 unannotated 2D echocardiographic images, RL4Seg not only outperforms existing state-of-the-art DA methods in accuracy but also achieves 99% anatomical validity on a subset of 220 expert-validated subjects from the target domain. Furthermore, our framework's reward network offers uncertainty estimates comparable with dedicated state-of-the-art uncertainty methods, demonstrating the utility and effectiveness of RL4Seg in overcoming domain adaptation challenges in medical image segmentation.

TractOracle: towards an anatomically-informed reward function for RL-based tractography

Mar 26, 2024

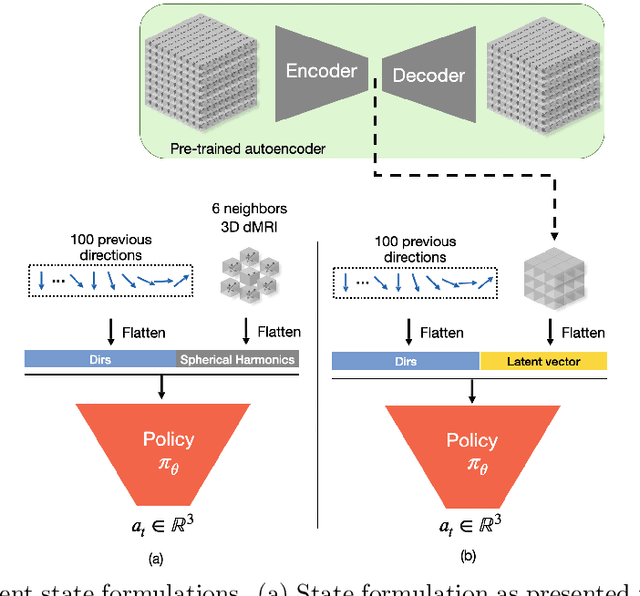

Abstract: Reinforcement learning (RL)-based tractography is a competitive alternative to machine learning and classical tractography algorithms due to its high anatomical accuracy obtained without the need for any annotated data. However, the reward functions used so far to train RL agents do not encapsulate anatomical knowledge, which causes agents to generate spurious false positive tracts. In this paper, we propose a new RL tractography system, TractOracle, which relies on a reward network trained for streamline classification. This network is used both as a reward function during training and as a means of stopping the tracking process early, thus reducing the number of false positive streamlines. This makes our system a unique method that evaluates and reconstructs WM streamlines at the same time. We report an improvement of true positive ratios by almost 20% and a 3x reduction of false positive ratios on one dataset, and an increase between 2x and 7x in the number of true positive streamlines on another dataset.

Fusing Echocardiography Images and Medical Records for Continuous Patient Stratification

Jan 15, 2024

Abstract: Deep learning now enables automatic and robust extraction of cardiac function descriptors from echocardiographic sequences, such as ejection fraction or strain. These descriptors provide fine-grained information that physicians consider, in conjunction with more global variables from the clinical record, to assess patients' condition. Drawing on novel transformer models applied to tabular data (e.g., variables from electronic health records), we propose a method that considers all descriptors extracted from medical records and echocardiograms to learn the representation of a difficult-to-characterize cardiovascular pathology, namely hypertension. Our method first projects each variable into its own representation space using modality-specific approaches. These standardized representations of multimodal data are then fed to a transformer encoder, which learns to merge them into a comprehensive representation of the patient through a pretext task of predicting a clinical rating. This pretext task is formulated as an ordinal classification to enforce a pathological continuum in the representation space. We observe the major trends along this continuum for a cohort of 239 hypertensive patients to describe, with unprecedented gradation, the effect of hypertension on a number of cardiac function descriptors. Our analysis shows that i) pretrained weights from a foundation model make it possible to reach good performance (83% accuracy) even with limited data (fewer than 200 training samples), ii) trends across the population are reproducible between trainings, and iii) for descriptors whose interactions with hypertension are well documented, patterns are consistent with prior physiological knowledge.
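
For readers unfamiliar with ordinal classification, one common way to encode ordered grades is as cumulative binary targets, so that neighbouring grades share most of their target bits. The sketch below shows that encoding and its decoding. The abstract does not specify which ordinal formulation is used, so this particular scheme, the function names, and the 0.5 threshold are assumptions for illustration only.

```python
def encode_ordinal(grade, n_grades):
    """Encode grade in {0, ..., n_grades - 1} as n_grades - 1 cumulative bits.

    Bit k answers the question "is the grade greater than k?".
    """
    return [1 if grade > k else 0 for k in range(n_grades - 1)]


def decode_ordinal(probabilities, threshold=0.5):
    """Decode per-threshold probabilities back to a grade by counting passes."""
    return sum(1 for p in probabilities if p > threshold)
```

With four grades, grade 2 becomes `[1, 1, 0]`, which differs from grade 3 (`[1, 1, 1]`) in a single bit. Predicting an adjacent grade is therefore penalized less than predicting a distant one, which is how an ordering can be encouraged in the learned representation.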

Merging multiple input descriptors and supervisors in a deep neural network for tractogram filtering

Jul 11, 2023

Abstract: One of the main issues of current tractography methods is their high false-positive rate. Tractogram filtering is an option to remove false-positive streamlines from tractography data in a post-processing step. In this paper, we train a deep neural network for filtering tractography data in which every streamline of a tractogram is classified as *plausible*, *implausible*, or *inconclusive*. For this, we use four different tractogram filtering strategies as supervisors: TractQuerier, RecobundlesX, TractSeg, and an anatomy-inspired filter. Their outputs are combined to obtain the classification labels for the streamlines. We assessed the importance of different types of information along the streamlines for performing this classification task, including the coordinates of the streamlines, diffusion data, landmarks, T1-weighted information, and a brain parcellation. We found that the streamline coordinates are the most relevant, followed by the diffusion data, in this particular classification task.
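
The abstract does not state how the supervisors' outputs are combined, so the following is only one plausible rule, shown to make the three-class labelling concrete. It assumes each supervisor returns `True` (keep), `False` (reject), or `None` (no verdict) for a streamline, and labels the streamline by unanimity among the supervisors that gave a verdict. The function name and the rule itself are hypothetical.

```python
def combine_votes(votes):
    """Combine supervisor verdicts into one of three labels.

    votes: list of True (keep), False (reject), or None (no verdict).
    Assumed rule: unanimous verdicts decide, anything else is inconclusive.
    """
    decided = [v for v in votes if v is not None]
    if decided and all(decided):
        return "plausible"
    if decided and not any(decided):
        return "implausible"
    return "inconclusive"
```

For example, `combine_votes([True, True, None, True])` yields `"plausible"`, while any disagreement among supervisors falls into `"inconclusive"`.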
