Nathan Painchaud

DAFTED: Decoupled Asymmetric Fusion of Tabular and Echocardiographic Data for Cardiac Hypertension Diagnosis

Sep 19, 2025

Abstract:Multimodal data fusion is a key approach for enhancing diagnosis in medical applications. We propose an asymmetric fusion strategy starting from a primary modality and integrating secondary modalities by disentangling shared and modality-specific information. Validated on a dataset of 239 patients with echocardiographic time series and tabular records, our model outperforms existing methods, achieving an AUC over 90%. This improvement marks a crucial benchmark for clinical use.

Fusing Echocardiography Images and Medical Records for Continuous Patient Stratification

Jan 15, 2024

Abstract:Deep learning now enables automatic and robust extraction of cardiac function descriptors from echocardiographic sequences, such as ejection fraction or strain. These descriptors provide fine-grained information that physicians consider, in conjunction with more global variables from the clinical record, to assess patients' condition. Drawing on novel transformer models applied to tabular data (e.g., variables from electronic health records), we propose a method that considers all descriptors extracted from medical records and echocardiograms to learn the representation of a difficult-to-characterize cardiovascular pathology, namely hypertension. Our method first projects each variable into its own representation space using modality-specific approaches. These standardized representations of multimodal data are then fed to a transformer encoder, which learns to merge them into a comprehensive representation of the patient through a pretext task of predicting a clinical rating. This pretext task is formulated as an ordinal classification to enforce a pathological continuum in the representation space. We observe the major trends along this continuum for a cohort of 239 hypertensive patients to describe, with unprecedented gradation, the effect of hypertension on a number of cardiac function descriptors. Our analysis shows that i) pretrained weights from a foundation model make it possible to reach good performance (83% accuracy) even with limited data (fewer than 200 training samples), ii) trends across the population are reproducible between trainings, and iii) for descriptors whose interactions with hypertension are well documented, patterns are consistent with prior physiological knowledge.
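The abstract does not say how the ordinal classification is formulated. As a minimal sketch of one common option (an assumption, not the authors' method), an ordered label among K classes can be encoded as K-1 cumulative binary targets, so that neighbouring ratings share most of their targets. The function names `encode_ordinal` and `decode_ordinal` are hypothetical.

```python
def encode_ordinal(label, num_classes):
    """Encode an ordered label as cumulative binary targets.

    Target t answers the question "is the label greater than t?",
    for t = 0 .. num_classes - 2. (Assumed formulation, for illustration.)
    """
    if not 0 <= label < num_classes:
        raise ValueError("label must be in [0, num_classes)")
    return [1 if label > t else 0 for t in range(num_classes - 1)]


def decode_ordinal(probs, threshold=0.5):
    """Recover the predicted label by counting thresholds judged exceeded."""
    return sum(1 for p in probs if p > threshold)


# Four ordered ratings (0..3): rating 2 exceeds thresholds 0 and 1 only.
targets = encode_ordinal(2, 4)
predicted = decode_ordinal([0.9, 0.7, 0.2])
```

With this encoding, mistaking a rating for an adjacent one flips a single target, whereas a distant mistake flips several, which is one way a loss can reflect the ordering of the classes.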

Extraction of volumetric indices from echocardiography: which deep learning solution for clinical use?

May 08, 2023

Abstract:Deep learning-based methods have spearheaded the automatic analysis of echocardiographic images, taking advantage of the publication of multiple open-access datasets annotated by experts (CAMUS being one of the largest public databases). However, these models are still considered unreliable by clinicians due to unresolved issues concerning i) the temporal consistency of their predictions, and ii) their ability to generalize across datasets. In this context, we propose a comprehensive comparison between the current best performing methods in medical/echocardiographic image segmentation, with a particular focus on temporal consistency and cross-dataset aspects. We introduce a new private dataset, named CARDINAL, of apical two-chamber and apical four-chamber sequences, with reference segmentation over the full cardiac cycle. We show that the proposed 3D nnU-Net outperforms alternative 2D and recurrent segmentation methods. We also report that the best models trained on CARDINAL, when tested on CAMUS without any fine-tuning, still manage to perform competitively with respect to prior methods. Overall, the experimental results suggest that with sufficient training data, 3D nnU-Net could become the first automated tool to finally meet the standards of an everyday clinical device.

Echocardiography Segmentation with Enforced Temporal Consistency

Dec 03, 2021

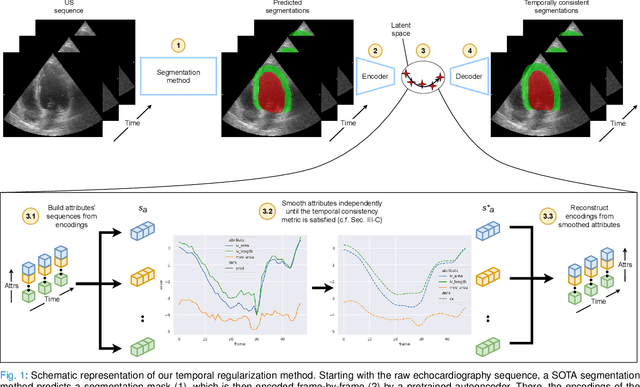

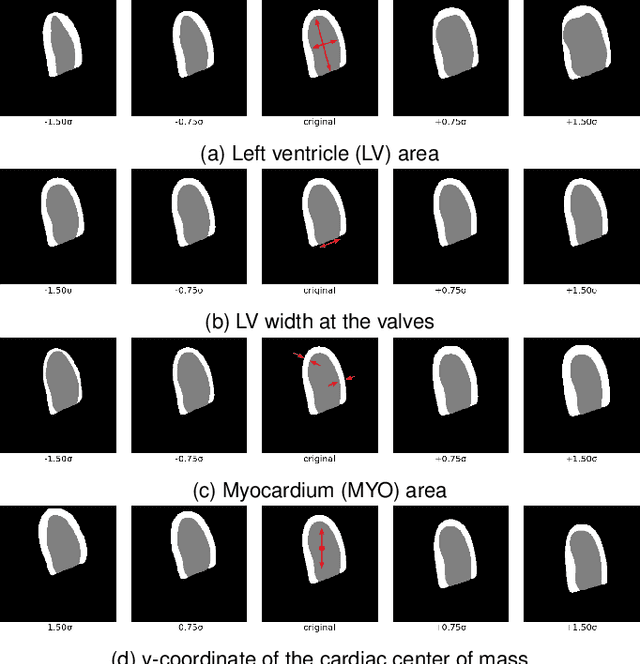

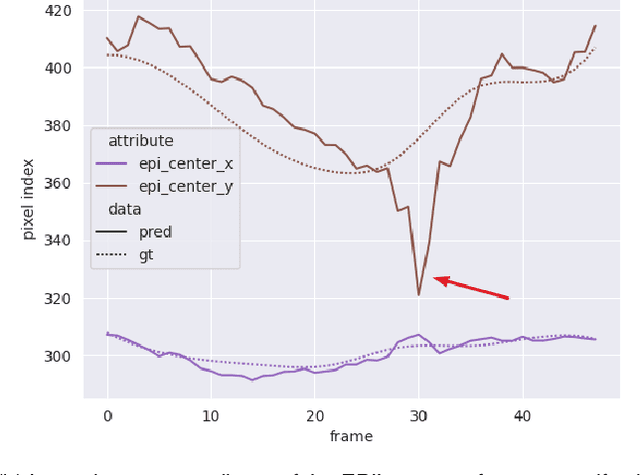

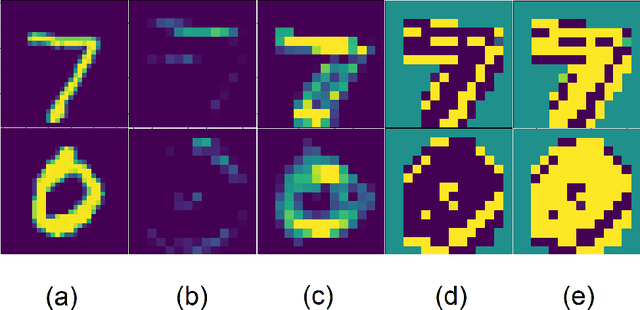

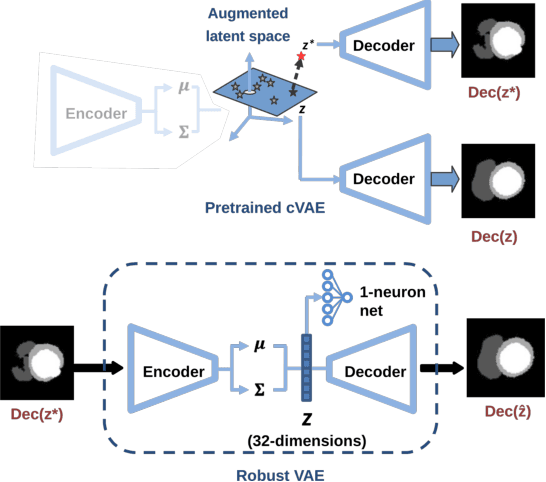

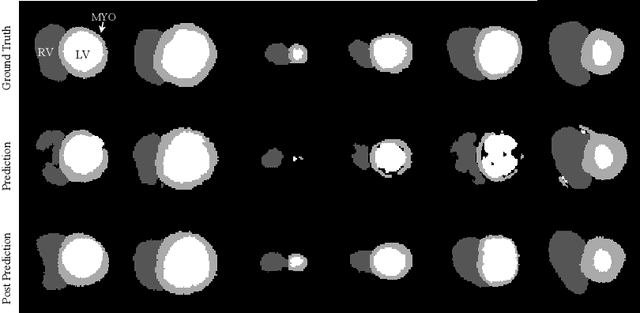

Abstract:Convolutional neural networks (CNN) have demonstrated their ability to segment 2D cardiac ultrasound images. However, despite recent successes according to which the intra-observer variability on end-diastole and end-systole images has been reached, CNNs still struggle to leverage temporal information to provide accurate and temporally consistent segmentation maps across the whole cycle. Such consistency is required to accurately describe the cardiac function, a necessary step in diagnosing many cardiovascular diseases. In this paper, we propose a framework to learn the 2D+time long-axis cardiac shape such that the segmented sequences can benefit from temporal and anatomical consistency constraints. Our method is a post-processing step that takes as input segmented echocardiographic sequences produced by any state-of-the-art method and processes them in two steps to (i) identify spatio-temporal inconsistencies according to the overall dynamics of the cardiac sequence and (ii) correct the inconsistencies. The identification and correction of cardiac inconsistencies rely on a constrained autoencoder trained to learn a physiologically interpretable embedding of cardiac shapes, where we can both detect and fix anomalies. We tested our framework on 98 full-cycle sequences from the CAMUS dataset, which will be made public alongside this paper. Our temporal regularization method not only improves the accuracy of the segmentation across the whole sequences, but also enforces temporal and anatomical consistency.

Neural Teleportation

Dec 02, 2020

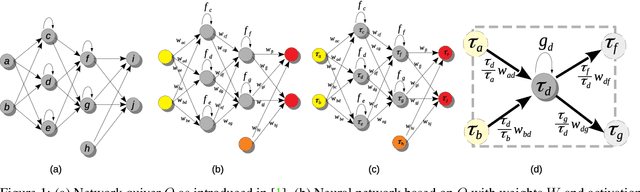

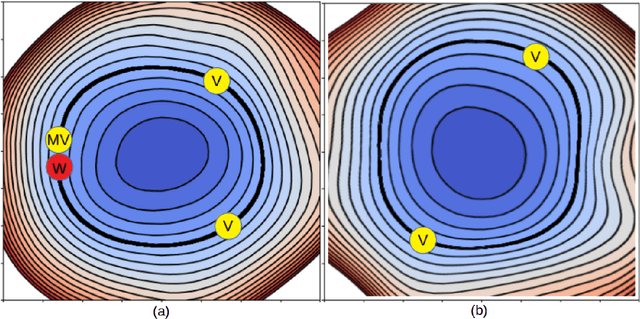

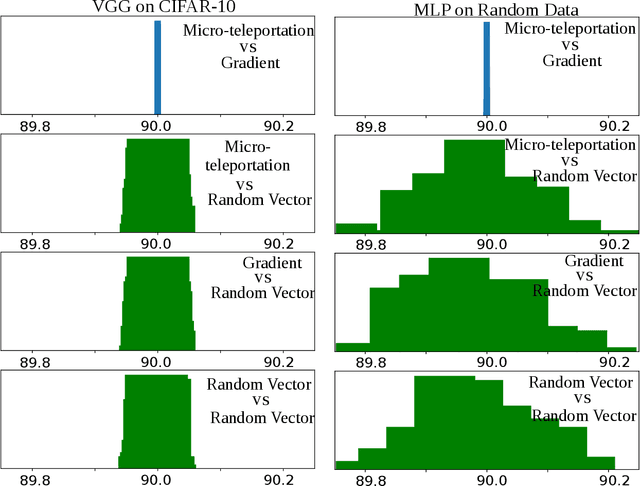

Abstract:In this paper, we explore a process called neural teleportation, a mathematical consequence of applying quiver representation theory to neural networks. Neural teleportation "teleports" a network to a new position in the weight space, while leaving its function unchanged. This concept generalizes the notion of positive scale invariance of ReLU networks to any network with any activation function and any architecture. In this paper, we shed light on surprising and counter-intuitive consequences neural teleportation has on the loss landscape. In particular, we show that teleportation can be used to explore loss level curves, that it changes the loss landscape, sharpens global minima and boosts back-propagated gradients. From these observations, we demonstrate that teleportation accelerates training when used during initialization regardless of the model, its activation function, the loss function, and the training data. Our results can be reproduced with the code available here: https://github.com/vitalab/neuralteleportation.
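The positive scale invariance that the abstract says teleportation generalizes can be checked numerically. The sketch below is only that special case for a small ReLU network, not the quiver-based teleportation itself and not the code in the linked repository; the network sizes, the scaling factors, and the helper names `forward` and `rescale` are illustrative assumptions. Because relu(c·z) = c·relu(z) for any c > 0, multiplying a hidden unit's incoming weights and bias by c and dividing its outgoing weight by c moves the network in weight space without changing its output.

```python
import random


def relu(z):
    return z if z > 0.0 else 0.0


def forward(x, w_in, b_hidden, w_out):
    """One-hidden-layer ReLU network with a scalar output."""
    hidden = [
        relu(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(w_in, b_hidden)
    ]
    return sum(w * h for w, h in zip(w_out, hidden))


def rescale(w_in, b_hidden, w_out, scales):
    """Scale each hidden unit's incoming weights and bias by c > 0
    and divide its outgoing weight by the same c."""
    new_w_in = [[c * w for w in row] for row, c in zip(w_in, scales)]
    new_b = [c * b for b, c in zip(b_hidden, scales)]
    new_w_out = [w / c for w, c in zip(w_out, scales)]
    return new_w_in, new_b, new_w_out


rng = random.Random(0)  # fixed seed so the run is repeatable
w_in = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(4)]
b_hidden = [rng.uniform(-1, 1) for _ in range(4)]
w_out = [rng.uniform(-1, 1) for _ in range(4)]

scales = [0.5, 2.0, 10.0, 0.1]  # one positive factor per hidden unit
x = [0.3, -1.2, 0.7]

y_before = forward(x, w_in, b_hidden, w_out)
y_after = forward(x, *rescale(w_in, b_hidden, w_out, scales))
# The weights differ, but the two outputs agree up to floating-point rounding.
```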

Cardiac Segmentation with Strong Anatomical Guarantees

Jun 15, 2020

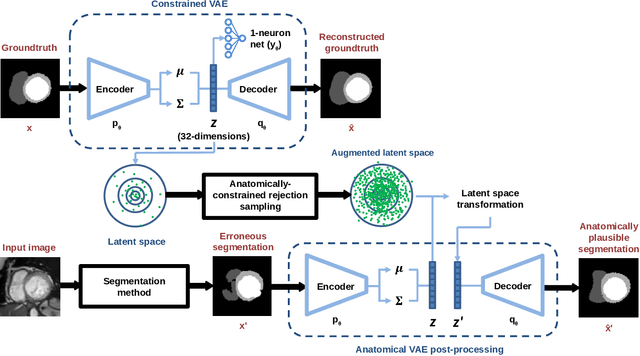

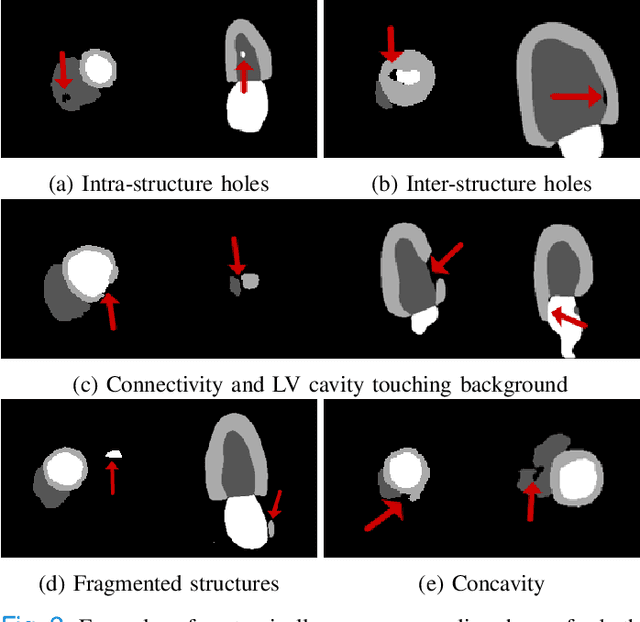

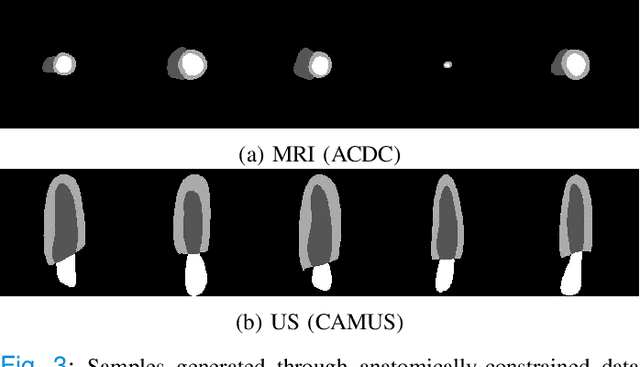

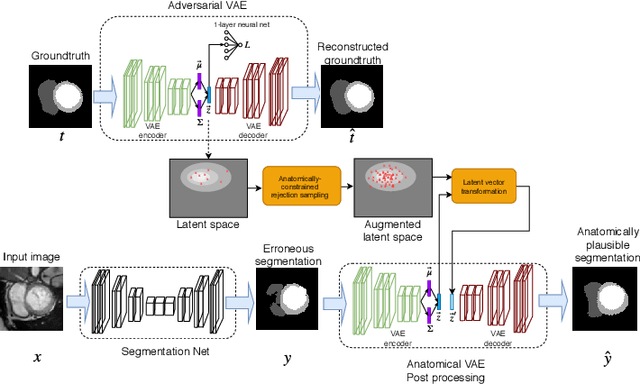

Abstract:Convolutional neural networks (CNN) have had unprecedented success in medical imaging and, in particular, in medical image segmentation. However, despite the fact that segmentation results are closer than ever to the inter-expert variability, CNNs are not immune to producing anatomically inaccurate segmentations, even when built upon a shape prior. In this paper, we present a framework for producing cardiac image segmentation maps that are guaranteed to respect pre-defined anatomical criteria, while remaining within the inter-expert variability. The idea behind our method is to use a well-trained CNN, have it process cardiac images, identify the anatomically implausible results and warp these results toward the closest anatomically valid cardiac shape. This warping procedure is carried out with a constrained variational autoencoder (cVAE) trained to learn a representation of valid cardiac shapes through a smooth, yet constrained, latent space. With this cVAE, we can project any implausible shape into the cardiac latent space and steer it toward the closest correct shape. We tested our framework on short-axis MRI as well as apical two- and four-chamber view ultrasound images, two modalities for which cardiac shapes are drastically different. With our method, CNNs can now produce results that are both within the inter-expert variability and always anatomically plausible without having to rely on a shape prior.

On the effectiveness of GAN generated cardiac MRIs for segmentation

May 22, 2020

Abstract:In this work, we propose a Variational Autoencoder (VAE) - Generative Adversarial Networks (GAN) model that can produce highly realistic MRI together with its pixel-accurate ground truth for the application of cine-MR image cardiac segmentation. On one side of our model is a Variational Autoencoder (VAE) trained to learn the latent representations of cardiac shapes. On the other side is a GAN that uses "SPatially-Adaptive (DE)Normalization" (SPADE) modules to generate realistic MR images tailored to a given anatomical map. At test time, sampling the VAE latent space makes it possible to generate an arbitrarily large number of cardiac shapes, which are fed to the GAN that subsequently generates MR images whose cardiac structure fits that of the cardiac shapes. In other words, our system can generate a large volume of realistic yet labeled cardiac MR images. We show that segmentation with CNNs trained with our synthetic annotated images gets competitive results compared to traditional techniques. We also show that combining data augmentation with our GAN-generated images leads to an improvement in the Dice score of up to 12 percent while allowing for better generalization capabilities on other datasets.
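For readers unfamiliar with the Dice score mentioned above, a minimal sketch of the standard definition, Dice = 2|A ∩ B| / (|A| + |B|), on two binary masks follows. The flat-list mask format, the toy masks, and the convention of returning 1.0 when both masks are empty are assumptions made for illustration; this is not the evaluation code used in the paper.

```python
def dice_score(pred, target):
    """Dice overlap between two binary masks given as flat lists of 0/1."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


pred = [0, 1, 1, 1, 0, 0]    # 3 foreground pixels
target = [0, 0, 1, 1, 1, 0]  # 3 foreground pixels, 2 shared with pred
score = dice_score(pred, target)  # 2 * 2 / (3 + 3) = 2/3
```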

Cardiac MRI Segmentation with Strong Anatomical Guarantees

Jul 05, 2019

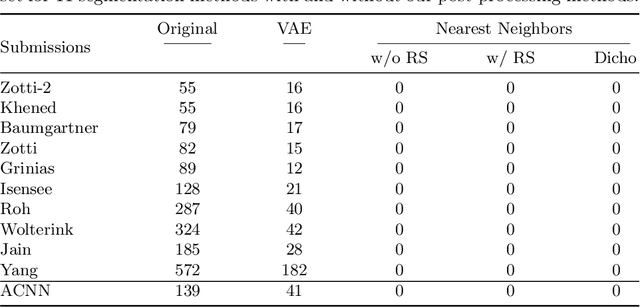

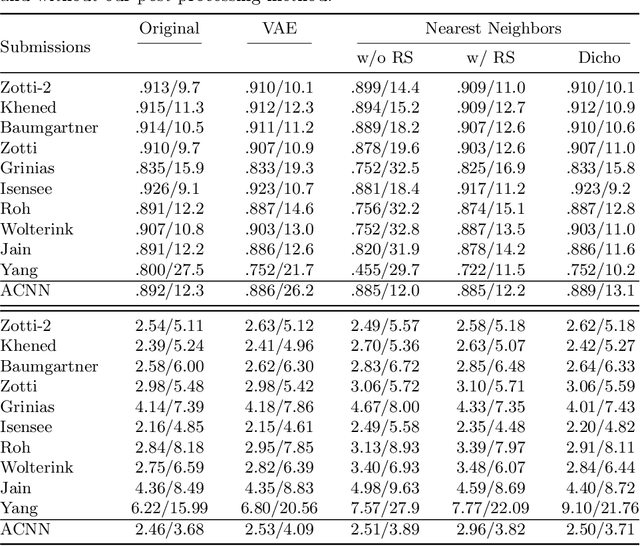

Abstract:Recent publications have shown that the segmentation accuracy of modern-day convolutional neural networks (CNN) applied to cardiac MRI can reach the inter-expert variability, a great achievement in this area of research. However, despite these successes, CNNs still produce anatomically inaccurate segmentations as they provide no guarantee on the anatomical plausibility of their outcome, even when using a shape prior. In this paper, we propose a cardiac MRI segmentation method which always produces anatomically plausible results. At the core of the method is an adversarial variational autoencoder (aVAE) whose latent space encodes a smooth manifold on which lies a large spectrum of valid cardiac shapes. This aVAE is used to automatically warp anatomically inaccurate cardiac shapes towards a close but correct shape. Our method can accommodate any cardiac segmentation method and convert its anatomically implausible results to plausible ones without affecting its overall geometric and clinical metrics. With our method, CNNs can now produce results that are both within the inter-expert variability and always anatomically plausible.
