Mohamed Daoudi

Localized Latent Editing for Dose-Response Modeling in Botulinum Toxin Injection Planning

Jan 27, 2026

Abstract: Botulinum toxin (Botox) injections are the gold standard for managing facial asymmetry and aesthetic rejuvenation, yet determining the optimal dosage remains largely intuitive, often leading to suboptimal outcomes. We propose a localized latent editing framework that simulates botulinum toxin injection effects for injection planning through dose-response modeling. Our key contribution is a Region-Specific Latent Axis Discovery method that learns localized muscle relaxation trajectories in StyleGAN2's latent space, enabling precise control over specific facial regions without global side effects. By correlating these localized latent trajectories with injected toxin units, we learn a predictive dose-response model. We rigorously compare two approaches: direct metric regression versus image-based generative simulation on a clinical dataset of N=360 images from 46 patients. On a hold-out test set, our framework demonstrates moderate-to-strong structural correlations for geometric asymmetry metrics, confirming that the generative model correctly captures the direction of morphological changes. While biological variability limits absolute precision, we introduce a hybrid "Human-in-the-Loop" workflow where clinicians interactively refine simulations, bridging the gap between pathological reconstruction and cosmetic planning.

A Non-Invasive 3D Gait Analysis Framework for Quantifying Psychomotor Retardation in Major Depressive Disorder

Jan 27, 2026

Abstract: Predicting the status of Major Depressive Disorder (MDD) with objective, non-invasive methods is an active research field. Yet automatically extracting objective, interpretable features for a detailed analysis of the patient's state remains largely unexplored. Among MDD's symptoms, psychomotor retardation (PMR) is a core item, yet its clinical assessment remains largely subjective. While 3D motion capture offers an objective alternative, its reliance on specialized hardware often precludes routine clinical use. In this paper, we propose a non-invasive computational framework that transforms monocular RGB video into clinically relevant 3D gait kinematics. Our pipeline uses Gravity-View Coordinates along with a novel trajectory-correction algorithm that leverages the closed-loop topology of our adapted Timed Up and Go (TUG) protocol to mitigate monocular depth errors. This pipeline enables the extraction of 297 explicit gait biomechanical biomarkers from a single camera capture. To address the challenges of small clinical datasets, we introduce a stability-based machine learning framework that identifies robust motor signatures while preventing overfitting. Validated on the CALYPSO dataset, our method achieves 83.3% accuracy in detecting PMR and explains 64% of the variance in overall depression severity (R^2=0.64). Notably, our study reveals that reduced ankle propulsion and restricted pelvic mobility are strongly linked to the depressive motor phenotype. These results demonstrate that physical movement serves as a robust proxy for the cognitive state, offering a transparent and scalable tool for the objective monitoring of depression in standard clinical environments.

REACT 2025: the Third Multiple Appropriate Facial Reaction Generation Challenge

May 22, 2025

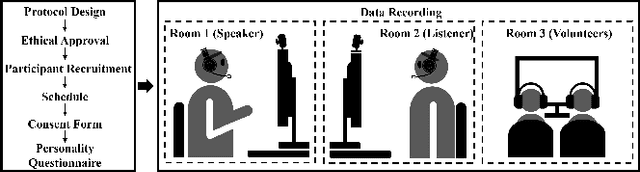

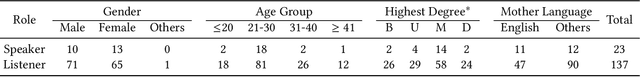

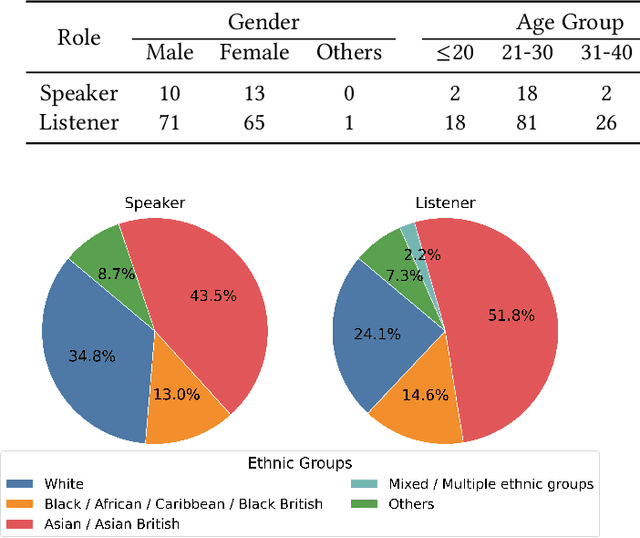

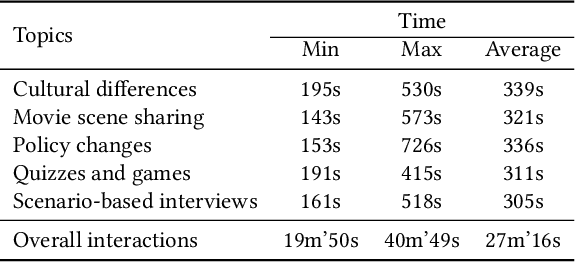

Abstract: In dyadic interactions, a broad spectrum of human facial reactions might be appropriate for responding to each human speaker behaviour. Following the successful organisation of the REACT 2023 and REACT 2024 challenges, we propose the REACT 2025 challenge, encouraging the development and benchmarking of Machine Learning (ML) models that can be used to generate multiple appropriate, diverse, realistic and synchronised human-style facial reactions expressed by human listeners in response to an input stimulus (i.e., audio-visual behaviours expressed by their corresponding speakers). As a key part of the challenge, we provide participants with the first natural and large-scale multi-modal Multiple Appropriate Facial Reaction Generation (MAFRG) dataset (called MARS), recording 137 human-human dyadic interactions containing a total of 2856 interaction sessions covering five different topics. In addition, this paper presents the challenge guidelines and the performance of our baselines on the two proposed sub-challenges: Offline MAFRG and Online MAFRG. The challenge baseline code is publicly available at https://github.com/reactmultimodalchallenge/baseline_react2025

Wearable-Derived Behavioral and Physiological Biomarkers for Classifying Unipolar and Bipolar Depression Severity

Apr 17, 2025

Abstract: Depression is a complex mental disorder characterized by a diverse range of observable and measurable indicators that go beyond traditional subjective assessments. Recent research has increasingly focused on objective, passive, and continuous monitoring using wearable devices to gain more precise insights into the physiological and behavioral aspects of depression. However, most existing studies primarily distinguish between healthy and depressed individuals, adopting a binary classification that fails to capture the heterogeneity of depressive disorders. In this study, we leverage wearable devices to predict depression subtypes, specifically unipolar and bipolar depression, aiming to identify distinctive biomarkers that could enhance diagnostic precision and support personalized treatment strategies. To this end, we introduce the CALYPSO dataset, designed for non-invasive detection of depression subtypes and symptomatology through physiological and behavioral signals, including blood volume pulse, electrodermal activity, body temperature, and three-axis acceleration. Additionally, we establish a benchmark on the dataset using well-known features and standard machine learning methods. Preliminary results indicate that features related to physical activity, extracted from accelerometer data, are the most effective in distinguishing between unipolar and bipolar depression, achieving an accuracy of 96.77%. Temperature-based features also showed high discriminative power, reaching an accuracy of 93.55%. These findings highlight the potential of physiological and behavioral monitoring for improving the classification of depressive subtypes, paving the way for more tailored clinical interventions.

Measuring Anxiety Levels with Head Motion Patterns in Severe Depression Population

Feb 12, 2025

Abstract: Depression and anxiety are prevalent mental health disorders that frequently co-occur, with anxiety significantly influencing both the manifestation and treatment of depression. An accurate assessment of anxiety levels in individuals with depression is crucial to develop effective and personalized treatment plans. This study proposes a new noninvasive method for quantifying anxiety severity by analyzing head movements (specifically speed, acceleration, and angular displacement) during video-recorded interviews with patients suffering from severe depression. Using data from a new CALYPSO Depression Dataset, we extracted head motion characteristics and applied regression analysis to predict clinically evaluated anxiety levels. Our results demonstrate a high level of precision, achieving a mean absolute error (MAE) of 0.35 in predicting the severity of psychological anxiety based on head movement patterns. This indicates that our approach can enhance the understanding of anxiety's role in depression and assist psychiatrists in refining treatment strategies for individuals.
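To make the quantities named in the abstract above concrete, here is a minimal sketch of how speed, acceleration, and angular displacement could be derived from a head-angle time series, and how a mean absolute error is computed. The input layout (one head angle per frame, in degrees), the frame rate, and the function names are assumptions for illustration; the abstract does not describe the actual feature-extraction pipeline.

```python
def finite_difference(values, dt):
    """Forward differences of a sequence sampled every dt seconds."""
    return [(b - a) / dt for a, b in zip(values, values[1:])]


def head_motion_features(angles_deg, fps=25.0):
    """Summarise one head angle (e.g. yaw) sampled at a fixed frame rate.

    Hypothetical feature set: mean angular speed, mean absolute angular
    acceleration, and total angular displacement.
    """
    if len(angles_deg) < 3:
        raise ValueError("need at least three frames")
    dt = 1.0 / fps
    velocity = finite_difference(angles_deg, dt)      # degrees per second
    acceleration = finite_difference(velocity, dt)    # degrees per second squared
    steps = [abs(b - a) for a, b in zip(angles_deg, angles_deg[1:])]
    return {
        "mean_speed": sum(abs(v) for v in velocity) / len(velocity),
        "mean_abs_acceleration": sum(abs(a) for a in acceleration) / len(acceleration),
        "angular_displacement": sum(steps),
    }


def mean_absolute_error(y_true, y_pred):
    """Average absolute gap between clinical scores and predictions."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
```

In such a setup, the feature dictionary for each interview would feed a regression model, and `mean_absolute_error` would compare its outputs with the clinically evaluated anxiety scores.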

Partial Non-rigid Deformations and interpolations of Human Body Surfaces

Dec 03, 2024

Abstract: Non-rigid shape deformations pose significant challenges, and most existing methods struggle to handle partial deformations effectively. We present Partial Non-rigid Deformations and interpolations of the human body Surfaces (PaNDAS), a new method to learn local and global deformations of 3D surface meshes by building on recent deep models. Unlike previous approaches, our method enables restricting deformations to specific parts of the shape in a versatile way and allows for mixing and combining various poses from the database, all while not requiring any optimization at inference time. We demonstrate that the proposed framework can be used to generate new shapes, interpolate between parts of shapes, and perform other shape manipulation tasks with state-of-the-art accuracy and greater locality across various types of human surface data. Code and data will be made available soon.

Beyond Fixed Topologies: Unregistered Training and Comprehensive Evaluation Metrics for 3D Talking Heads

Oct 14, 2024

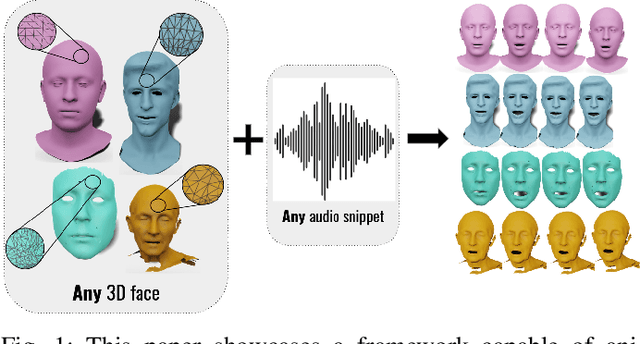

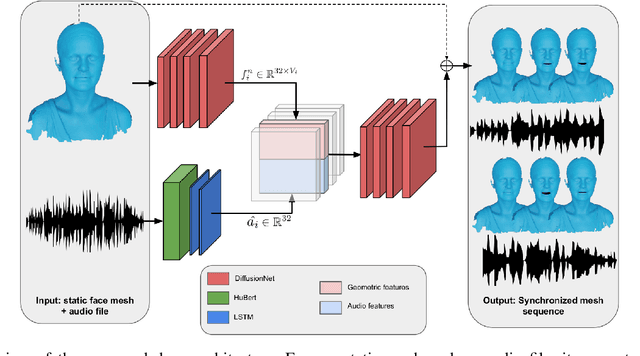

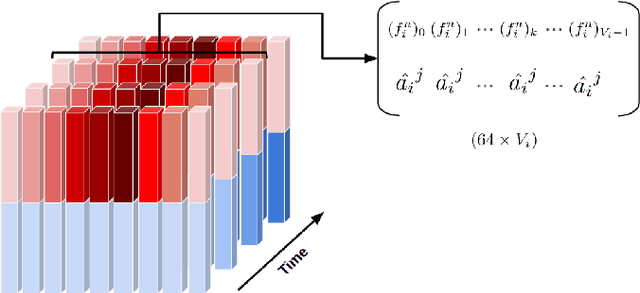

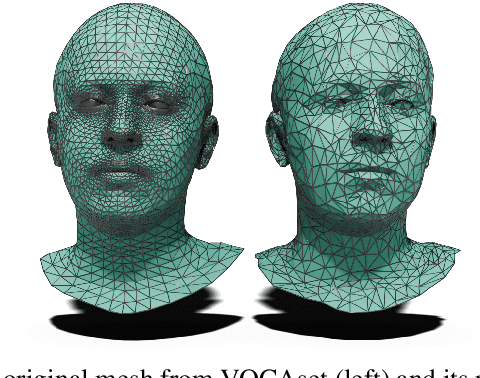

Abstract: Generating speech-driven 3D talking heads presents numerous challenges; among them is handling varying mesh topologies. Existing methods require a registered setting, where all meshes share a common topology: a point-wise correspondence across all meshes the model can animate. While this simplifies the problem, it limits applicability, as unseen meshes must adhere to the training topology. This work presents a framework capable of animating 3D faces in arbitrary topologies, including real scanned data. Our approach relies on a model leveraging heat diffusion over meshes to overcome the fixed topology constraint. We explore two training settings: a supervised one, in which training sequences share a fixed topology within a sequence but any mesh can be animated at test time, and an unsupervised one, which allows effective training with varying mesh structures. Additionally, we highlight the limitations of current evaluation metrics and propose new metrics for better lip-syncing evaluation between speech and facial movements. Our extensive evaluation shows our approach performs favorably compared to fixed topology techniques, setting a new benchmark by offering a versatile and high-fidelity solution for 3D talking head generation.

Geometric Generative Models based on Morphological Equivariant PDEs and GANs

Mar 25, 2024

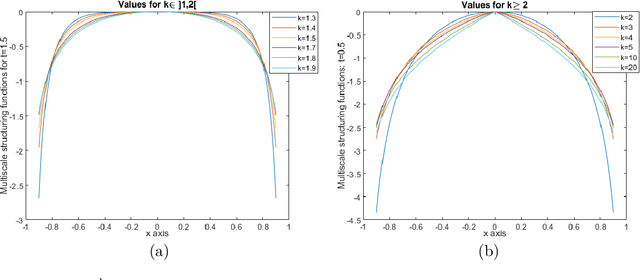

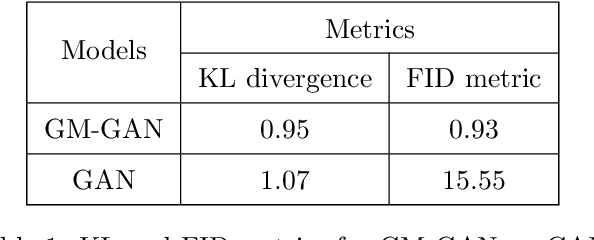

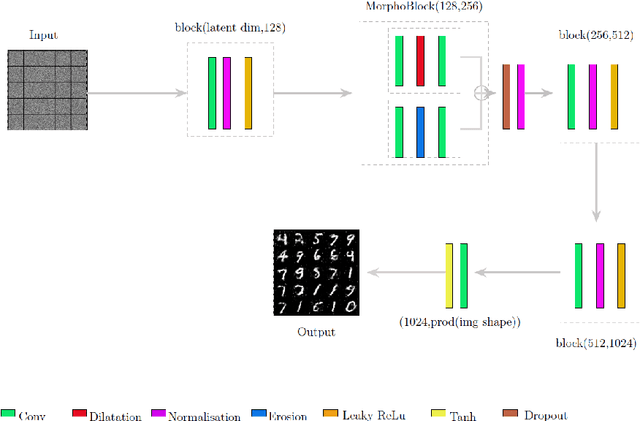

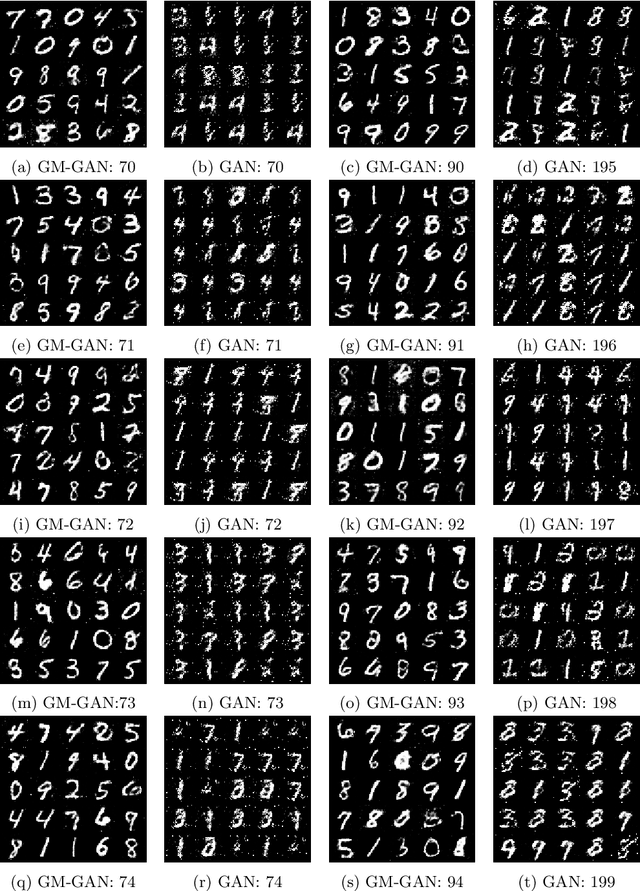

Abstract: Content and image generation consists of creating data from noisy information by extracting specific features such as texture, edges, and other thin image structures. We are interested here in generative models, and two main problems are addressed: first, improving the extraction of specific features while accounting for intrinsic geometric features at multiple scales; and second, making the network equivariant to reduce its complexity and provide geometric interpretability. To this end, we propose a geometric generative model based on an equivariant partial differential equation (PDE) for group convolution neural networks (G-CNNs), so-called PDE-G-CNNs, built on morphology operators and generative adversarial networks (GANs). Equivariant morphological PDE layers are composed of multiscale dilations and erosions formulated in Riemannian manifolds, while group symmetries are defined on a Lie group. We take advantage of the Lie group structure to properly integrate the equivariance in layers, and are able to use the Riemannian metric to solve the multiscale morphological operations. Each point of the Lie group is associated with a unique point in the manifold, which helps us derive a metric on the Riemannian manifold from a tensor field invariant under the Lie group so that the induced metric has the same symmetries. The proposed geometric morphological GAN (GM-GAN) is obtained by using the proposed morphological equivariant convolutions in PDE-G-CNNs to bring nonlinearity into classical CNNs. GM-GAN is evaluated on MNIST data and compared with GANs. Preliminary results show that the GM-GAN model outperforms a classical GAN.
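For readers unfamiliar with the morphological operations mentioned in the abstract above, the following minimal sketch shows plain dilation and erosion of a 1D signal at several scales. It is a deliberately simplified, Euclidean illustration with a flat window, not the Riemannian, Lie-group-equivariant PDE formulation the abstract describes; the function names and the choice of window radii are assumptions.

```python
def dilate(signal, radius):
    """Flat dilation: each sample becomes the max over a window of +/- radius."""
    n = len(signal)
    return [max(signal[max(0, i - radius):min(n, i + radius + 1)]) for i in range(n)]


def erode(signal, radius):
    """Flat erosion: each sample becomes the min over a window of +/- radius."""
    n = len(signal)
    return [min(signal[max(0, i - radius):min(n, i + radius + 1)]) for i in range(n)]


def multiscale_morphology(signal, radii=(1, 2)):
    """Apply dilation and erosion at each radius (hypothetical scale set)."""
    return {r: {"dilation": dilate(signal, r), "erosion": erode(signal, r)} for r in radii}


if __name__ == "__main__":
    result = multiscale_morphology([0, 1, 0, 0, 3, 0])
    for radius, outputs in result.items():
        print(radius, outputs)
```

Larger radii spread bright structures further under dilation and suppress them more aggressively under erosion, which is the sense in which the operations act at multiple scales.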

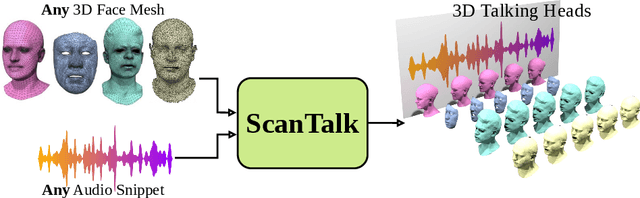

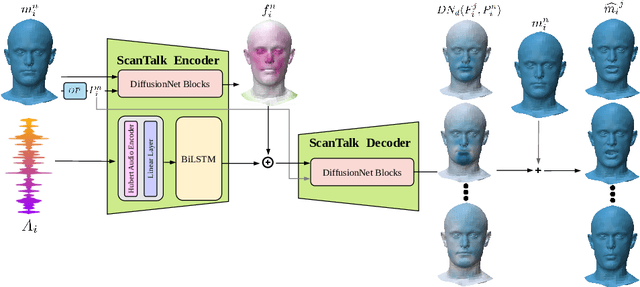

ScanTalk: 3D Talking Heads from Unregistered Scans

Mar 19, 2024

Abstract: Speech-driven 3D talking head generation has emerged as a significant area of interest among researchers, presenting numerous challenges. Existing methods are constrained to animating faces with fixed topologies, wherein point-wise correspondence is established, and the number and order of points remain consistent across all identities the model can animate. In this work, we present ScanTalk, a novel framework capable of animating 3D faces in arbitrary topologies, including scanned data. Our approach relies on the DiffusionNet architecture to overcome the fixed topology constraint, offering promising avenues for more flexible and realistic 3D animations. By leveraging the power of DiffusionNet, ScanTalk not only adapts to diverse facial structures but also maintains fidelity when dealing with scanned data, thereby enhancing the authenticity and versatility of generated 3D talking heads. Through comprehensive comparisons with state-of-the-art methods, we validate the efficacy of our approach, demonstrating its capacity to generate realistic talking heads comparable to existing techniques. While our primary objective is to develop a generic method free from topological constraints, all state-of-the-art methodologies are bound by such limitations. Code for reproducing our results and the pre-trained model will be made available.

Basis restricted elastic shape analysis on the space of unregistered surfaces

Nov 07, 2023

Abstract: This paper introduces a new mathematical and numerical framework for surface analysis derived from the general setting of elastic Riemannian metrics on shape spaces. Traditionally, those metrics are defined over the infinite dimensional manifold of immersed surfaces and satisfy specific invariance properties enabling the comparison of surfaces modulo shape preserving transformations such as reparametrizations. The specificity of the approach we develop is to restrict the space of allowable transformations to predefined finite dimensional bases of deformation fields. These are estimated in a data-driven way so as to emulate specific types of surface transformations observed in a training set. The use of such bases makes it possible to simplify the representation of the corresponding shape space to a finite dimensional latent space. However, in sharp contrast with methods involving e.g. mesh autoencoders, the latent space is here equipped with a non-Euclidean Riemannian metric precisely inherited from the family of aforementioned elastic metrics. We demonstrate how this basis restricted model can then be effectively implemented to perform a variety of tasks on surface meshes and, importantly, does not assume these to be pre-registered (i.e. with given point correspondences) or to even have a consistent mesh structure. We specifically validate our approach on human body shape and pose data as well as human face scans, and show how it generally outperforms state-of-the-art methods on problems such as shape registration, interpolation, motion transfer or random pose generation.
