Sylvain Arguillere

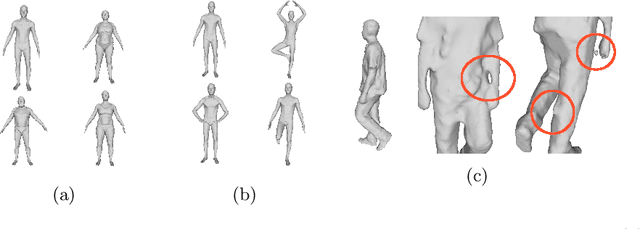

Partial Non-rigid Deformations and interpolations of Human Body Surfaces

Dec 03, 2024

Abstract: Non-rigid shape deformations pose significant challenges, and most existing methods struggle to handle partial deformations effectively. We present Partial Non-rigid Deformations and interpolations of the human body Surfaces (PaNDAS), a new method to learn local and global deformations of 3D surface meshes by building on recent deep models. Unlike previous approaches, our method can restrict deformations to specific parts of the shape in a versatile way and can mix and combine various poses from the database, all without requiring any optimization at inference time. We demonstrate that the proposed framework can be used to generate new shapes, interpolate between parts of shapes, and perform other shape manipulation tasks with state-of-the-art accuracy and greater locality across various types of human surface data. Code and data will be made available soon.

Beyond Fixed Topologies: Unregistered Training and Comprehensive Evaluation Metrics for 3D Talking Heads

Oct 14, 2024

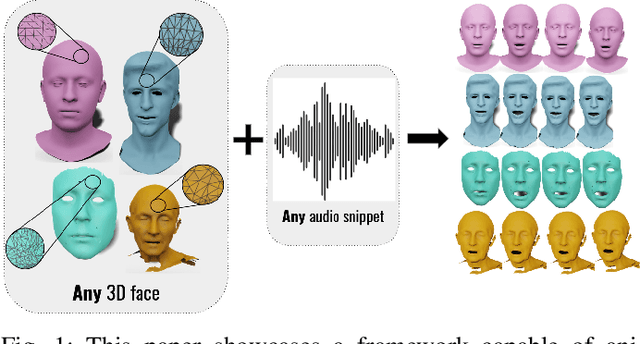

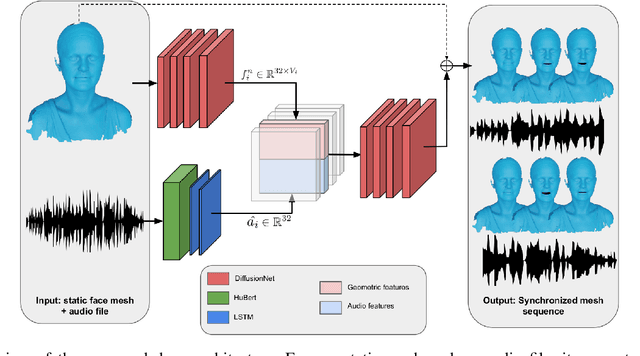

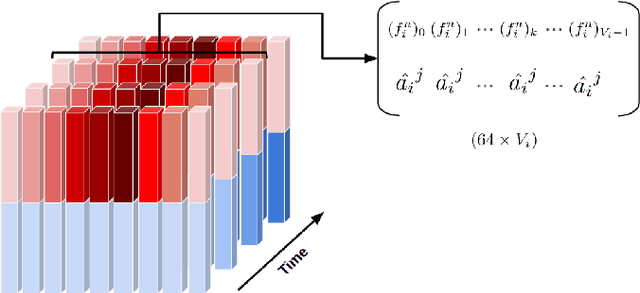

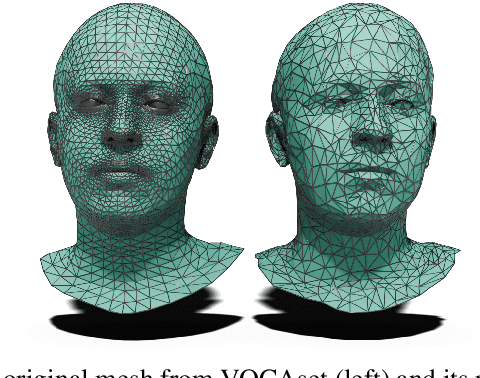

Abstract: Generating speech-driven 3D talking heads presents numerous challenges; among them is handling varying mesh topologies. Existing methods require a registered setting, where all meshes share a common topology: a point-wise correspondence across all meshes the model can animate. While this simplifies the problem, it limits applicability, since unseen meshes must adhere to the training topology. This work presents a framework capable of animating 3D faces in arbitrary topologies, including real scanned data. Our approach relies on a model leveraging heat diffusion over meshes to overcome the fixed topology constraint. We explore two training settings: a supervised one, in which training sequences share a fixed topology within a sequence but any mesh can be animated at test time, and an unsupervised one, which allows effective training with varying mesh structures. Additionally, we highlight the limitations of current evaluation metrics and propose new metrics for better evaluation of lip-syncing between speech and facial movements. Our extensive evaluation shows that our approach performs favorably compared to fixed topology techniques, setting a new benchmark by offering a versatile and high-fidelity solution for 3D talking head generation.

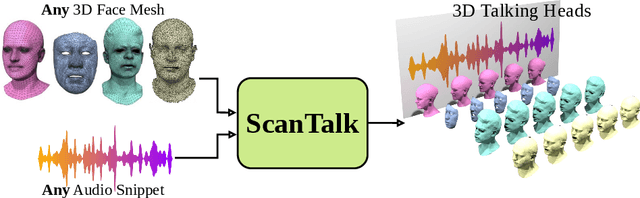

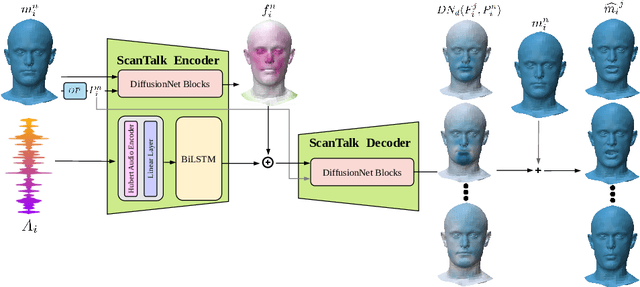

ScanTalk: 3D Talking Heads from Unregistered Scans

Mar 19, 2024

Abstract: Speech-driven 3D talking head generation has emerged as a significant area of interest among researchers, presenting numerous challenges. Existing methods are constrained to animating faces with fixed topologies, wherein point-wise correspondence is established and the number and order of points remain consistent across all identities the model can animate. In this work, we present ScanTalk, a novel framework capable of animating 3D faces in arbitrary topologies, including scanned data. Our approach relies on the DiffusionNet architecture to overcome the fixed topology constraint, offering promising avenues for more flexible and realistic 3D animations. By leveraging the power of DiffusionNet, ScanTalk not only adapts to diverse facial structures but also maintains fidelity when dealing with scanned data, thereby enhancing the authenticity and versatility of generated 3D talking heads. Through comprehensive comparisons with state-of-the-art methods, we validate the efficacy of our approach, demonstrating its capacity to generate realistic talking heads comparable to existing techniques. While our primary objective is to develop a generic method free from topological constraints, all state-of-the-art methodologies are bound by such limitations. Code for reproducing our results and the pre-trained model will be made available.

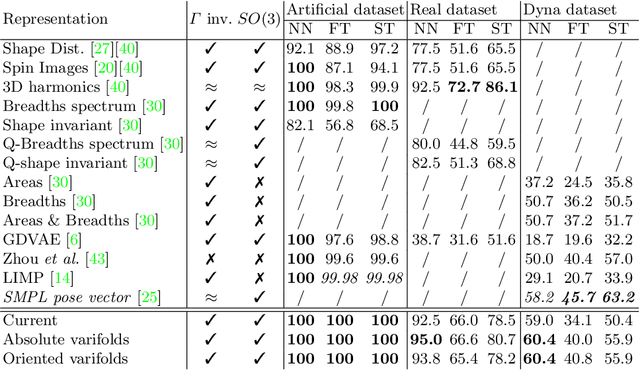

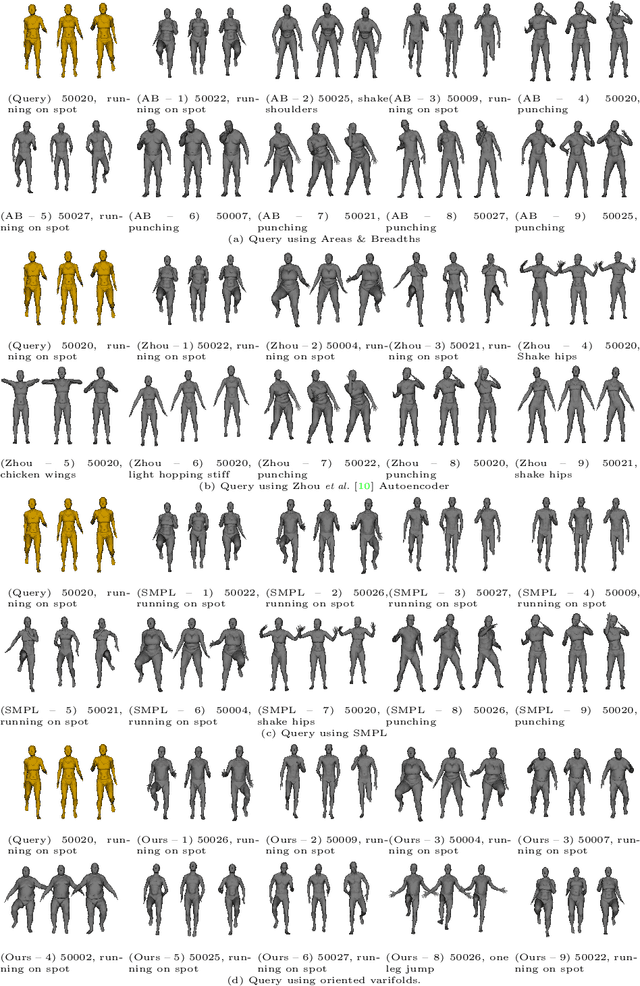

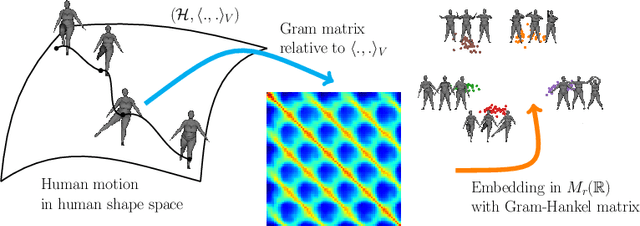

3D Shape Sequence of Human Comparison and Classification using Current and Varifolds

Jul 25, 2022

Abstract: In this paper we address the task of comparing and classifying 3D shape sequences of humans. The non-linear dynamics of human motion and the changing surface parametrization over time make this task very challenging. To tackle this issue, we propose to embed the 3D shape sequences in an infinite dimensional space, the space of varifolds, endowed with an inner product that comes from a given positive definite kernel. More specifically, our approach involves two steps: 1) the surfaces are represented as varifolds; this representation induces metrics equivariant to rigid motions and invariant to parametrization; 2) the sequences of 3D shapes are represented by Gram matrices derived from their infinite dimensional Hankel matrices. The problem of comparing two 3D sequences of humans is formulated as a comparison of two Gram-Hankel matrices. Extensive experiments on the CVSSP3D and Dyna datasets show that our method is competitive with the state of the art in 3D human sequence motion retrieval. Code for the experiments is available at https://github.com/CRISTAL-3DSAM/HumanComparisonVarifolds.
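
As an illustration of step 1 only, the following is a minimal sketch of a kernel inner product between two triangle meshes viewed as discrete varifolds. It is not taken from the authors' repository. The function names, the Gaussian position kernel, the squared-dot-product kernel on normals, and the bandwidth `sigma` are all assumptions chosen for illustration; the abstract only says that the inner product comes from a positive definite kernel. Each face is reduced to its centroid, unit normal, and area, and degenerate (zero-area) faces are assumed absent.

```python
import numpy as np

def face_centers_normals_areas(vertices, faces):
    """Reduce each triangle to its centroid, unit normal and area."""
    v0, v1, v2 = (vertices[faces[:, k]] for k in range(3))
    centers = (v0 + v1 + v2) / 3.0
    cross = np.cross(v1 - v0, v2 - v0)
    lengths = np.linalg.norm(cross, axis=1)
    areas = 0.5 * lengths
    normals = cross / lengths[:, None]  # assumes no zero-area faces
    return centers, normals, areas

def varifold_inner(mesh_a, mesh_b, sigma=1.0):
    """Discrete varifold inner product (hypothetical kernel choice).

    Position kernel: Gaussian exp(-|x - y|^2 / sigma^2).
    Normal kernel: (u . v)^2, which ignores face orientation.
    """
    ca, na, aa = face_centers_normals_areas(*mesh_a)
    cb, nb, ab = face_centers_normals_areas(*mesh_b)
    sq_dist = ((ca[:, None, :] - cb[None, :, :]) ** 2).sum(axis=-1)
    k_pos = np.exp(-sq_dist / sigma**2)
    k_dir = (na @ nb.T) ** 2
    # Area-weighted double sum over all pairs of faces.
    return float(aa @ (k_pos * k_dir) @ ab)

def varifold_distance_sq(mesh_a, mesh_b, sigma=1.0):
    """Squared distance induced by the inner product above."""
    return (varifold_inner(mesh_a, mesh_a, sigma)
            - 2.0 * varifold_inner(mesh_a, mesh_b, sigma)
            + varifold_inner(mesh_b, mesh_b, sigma))

# Toy example: a tetrahedron, and the same surface with its faces
# listed in a different order and with flipped orientation.
V = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
F = np.array([[0, 1, 2], [0, 1, 3], [0, 2, 3], [1, 2, 3]])
F_shuffled = F[::-1][:, [1, 0, 2]]
print(varifold_distance_sq((V, F), (V, F_shuffled)))
```

In this sketch the distance depends only on the set of face centroids, unoriented normals, and areas, so relabeling the faces leaves it unchanged, and applying the same rigid motion to both meshes leaves the inner product unchanged. Step 2 of the abstract (Gram matrices derived from Hankel matrices of a sequence) is not covered here.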
