Sylvain Arguillère

Toward Mesh-Invariant 3D Generative Deep Learning with Geometric Measures

Jun 27, 2023

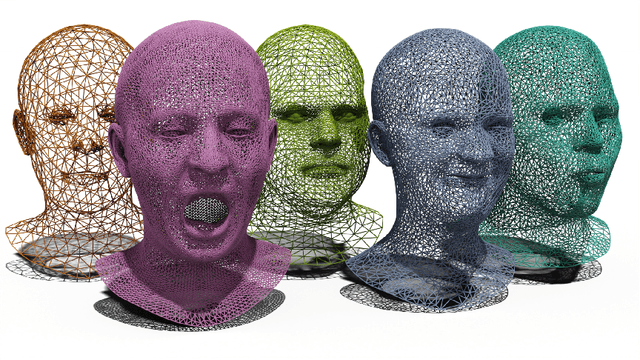

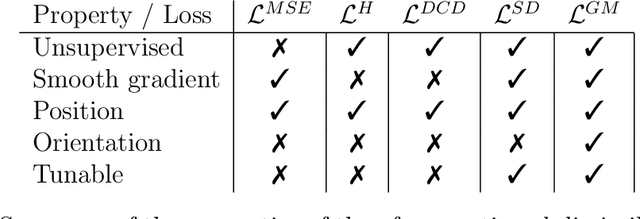

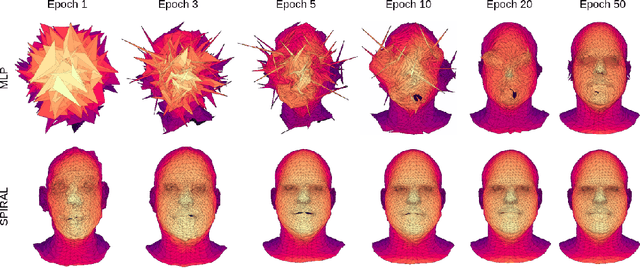

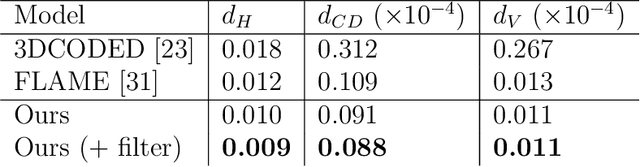

Abstract: 3D generative modeling is accelerating as technology for capturing geometric data develops. However, the acquired data is often inconsistent, resulting in unregistered meshes or point clouds. Many generative learning algorithms require point-to-point correspondence when comparing the predicted shape with the target shape. We propose an architecture able to cope with different parameterizations, even during the training phase. In particular, our loss function is built upon a kernel-based metric over a representation of meshes as geometric measures such as currents and varifolds. The latter allows us to implement an efficient dissimilarity measure with many desirable properties, such as robustness to resampling of the mesh or point cloud. We demonstrate the efficiency and resilience of our model on a generative learning task for human faces.
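
To make the kernel-based comparison concrete, here is a minimal NumPy sketch, not the authors' implementation. It assumes each triangle is summarized by its center, unit normal and area, uses a Gaussian spatial kernel of width `sigma`, and pairs it either with the linear normal kernel (currents, orientation-sensitive) or with the squared-cosine kernel (an unoriented varifold). The names `face_data`, `kernel_inner`, `kernel_distance` and `square_mesh` are hypothetical. Because the squared distance only involves sums over all pairs of faces, it needs no point-to-point correspondence: reordering the faces leaves it unchanged, and resampling the surface changes it only slightly.

```python
import numpy as np


def face_data(vertices, faces):
    """Centers, unit normals and areas of the triangles of a mesh."""
    v0, v1, v2 = (vertices[faces[:, k]] for k in range(3))
    centers = (v0 + v1 + v2) / 3.0
    cross = np.cross(v1 - v0, v2 - v0)          # length = 2 * triangle area
    double_area = np.linalg.norm(cross, axis=1)
    normals = cross / double_area[:, None]
    return centers, normals, 0.5 * double_area


def kernel_inner(mesh_a, mesh_b, sigma=1.0, oriented=False):
    """Kernel inner product between two meshes seen as geometric measures.

    oriented=True  -> linear normal kernel (n . m): currents
    oriented=False -> squared-cosine kernel (n . m)^2: unoriented varifolds
    """
    ca, na, aa = face_data(*mesh_a)
    cb, nb, ab = face_data(*mesh_b)
    sq_dist = np.sum((ca[:, None, :] - cb[None, :, :]) ** 2, axis=-1)
    k_space = np.exp(-sq_dist / sigma**2)        # Gaussian kernel on face centers
    cos = na @ nb.T                              # cosines between unit normals
    k_normal = cos if oriented else cos**2
    return float(np.sum(aa[:, None] * ab[None, :] * k_space * k_normal))


def kernel_distance(mesh_a, mesh_b, sigma=1.0, oriented=False):
    """Kernel-norm distance between the two measures; no vertex correspondence needed."""
    d2 = (kernel_inner(mesh_a, mesh_a, sigma, oriented)
          - 2.0 * kernel_inner(mesh_a, mesh_b, sigma, oriented)
          + kernel_inner(mesh_b, mesh_b, sigma, oriented))
    return float(np.sqrt(max(d2, 0.0)))  # clip tiny negative round-off


def square_mesh(n, z=0.0):
    """Hypothetical test shape: unit square at height z, split into 2*n*n triangles."""
    xs = np.linspace(0.0, 1.0, n + 1)
    gx, gy = np.meshgrid(xs, xs, indexing="ij")
    vertices = np.stack([gx.ravel(), gy.ravel(), np.full(gx.size, z)], axis=1)
    faces = []
    for i in range(n):
        for j in range(n):
            a = i * (n + 1) + j      # (x_i, y_j)
            b, c = a + 1, a + n + 1  # (x_i, y_{j+1}), (x_{i+1}, y_j)
            d = c + 1                # (x_{i+1}, y_{j+1})
            faces += [[a, c, d], [a, d, b]]  # both oriented with normal +z
    return vertices, np.array(faces)


if __name__ == "__main__":
    coarse, fine = square_mesh(1), square_mesh(8)
    lifted = square_mesh(1, z=0.5)
    print("coarse vs fine  :", kernel_distance(coarse, fine))
    print("coarse vs lifted:", kernel_distance(coarse, lifted))
```

Comparing `square_mesh(1)` with a finer `square_mesh(8)` of the same square gives a much smaller distance than comparing it with a copy lifted by 0.5, and reversing the face orientation leaves the varifold distance at zero while the current distance grows. Used as a training loss, the same expression would be written in an autodiff framework.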

ResNet-LDDMM: Advancing the LDDMM Framework Using Deep Residual Networks

Feb 16, 2021

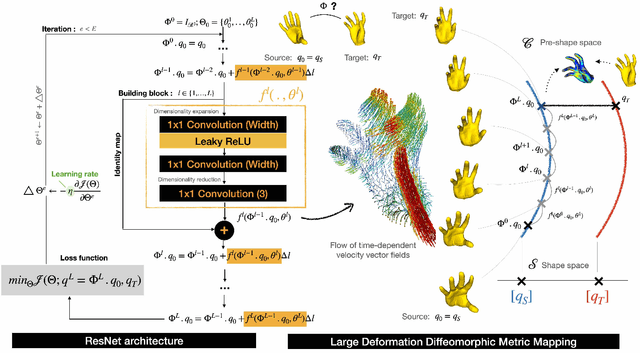

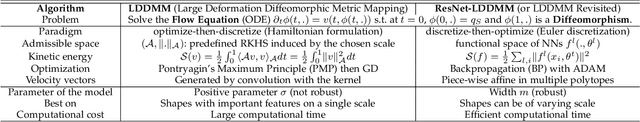

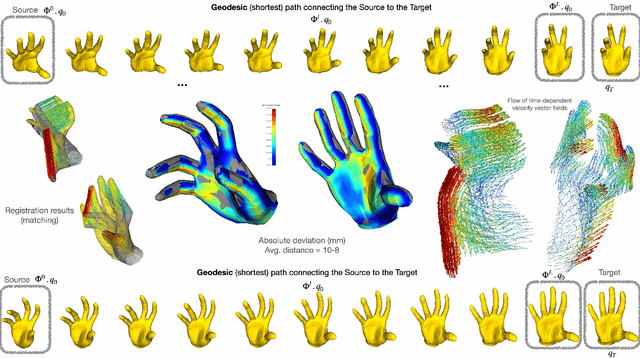

Abstract: In deformable registration, the geometric framework of large deformation diffeomorphic metric mapping (LDDMM) has inspired numerous techniques for comparing, deforming, averaging and analyzing shapes or images. Grounded in flows, which are akin to the equations of motion used in fluid dynamics, LDDMM algorithms solve the flow equation in the space of plausible deformations, i.e. diffeomorphisms. In this work, we use deep residual neural networks to solve the non-stationary ODE (flow equation) with an Euler discretization scheme. The central idea is to represent time-dependent velocity fields as fully connected ReLU neural networks (building blocks) and to derive optimal weights by minimizing a regularized loss function. Computing minimizing paths between deformations, and thus between shapes, amounts to finding optimal network parameters by back-propagating through the intermediate building blocks. Geometrically, at each time step, ResNet-LDDMM searches for an optimal partition of the space into multiple polytopes, and then computes optimal velocity vectors as affine transformations on each of these polytopes. As a result, different parts of the shape, even if they are close (such as two fingers of a hand), can be made to belong to different polytopes and therefore be moved in different directions without costing too much energy. Importantly, we show how diffeomorphic transformations, or more precisely bi-Lipschitz transformations, are predicted by our algorithm. We illustrate these ideas on diverse registration problems of 3D shapes under complex topology-preserving transformations. We thus provide essential foundations for more advanced shape variability analysis under a novel joint geometric-neural networks Riemannian-like framework, i.e. ResNet-LDDMM.
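
The residual-network reading of the flow equation can be sketched in a few lines. This NumPy sketch is an illustration under assumptions, not the published code: it uses one-hidden-layer ReLU blocks, splits the unit time interval into as many Euler steps as there are blocks, uses a kinetic energy evaluated at the moving points as a simple stand-in for the RKHS norm used in LDDMM, and uses a data term that assumes known correspondences. All function names are hypothetical. Only the forward pass and the loss are evaluated; optimizing the weights would require back-propagation through the blocks with an autodiff framework.

```python
import numpy as np


def relu_block(params, x):
    """Velocity v_k(x) = W2 relu(W1 x + b1) + b2 at points x of shape (N, d)."""
    w1, b1, w2, b2 = params
    return np.maximum(x @ w1.T + b1, 0.0) @ w2.T + b2


def flow(points, blocks):
    """Forward-Euler integration of dx/dt = v_t(x) on [0, 1], one block per step:
    x_{k+1} = x_k + dt * v_k(x_k), i.e. a residual connection."""
    dt = 1.0 / len(blocks)
    trajectory = [np.asarray(points, dtype=float)]
    for params in blocks:
        x = trajectory[-1]
        trajectory.append(x + dt * relu_block(params, x))
    return trajectory


def kinetic_energy(trajectory, blocks):
    """Sum over steps of dt * sum_i |v_k(x_i)|^2 (stand-in for the path energy)."""
    dt = 1.0 / len(blocks)
    return float(sum(dt * np.sum(relu_block(p, x) ** 2)
                     for p, x in zip(blocks, trajectory[:-1])))


def registration_loss(blocks, source, target, lam=1.0):
    """Hypothetical regularized loss: energy + lam * squared endpoint mismatch.
    Assumes source/target points correspond; a correspondence-free term
    (e.g. a varifold distance) could replace the mismatch."""
    trajectory = flow(source, blocks)
    mismatch = np.sum((trajectory[-1] - target) ** 2)
    return kinetic_energy(trajectory, blocks) + lam * float(mismatch)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, hidden, n_blocks = 3, 16, 10
    blocks = [(0.1 * rng.normal(size=(hidden, d)), np.zeros(hidden),
               0.1 * rng.normal(size=(d, hidden)), np.zeros(d))
              for _ in range(n_blocks)]
    source = rng.normal(size=(100, d))
    print("loss:", registration_loss(blocks, source, source, lam=10.0))
```

Since each block is a ReLU network, v_k is affine on each polytope cut out by the hyperplanes where a component of W1 x + b1 vanishes, which matches the geometric picture in the abstract: nearby points on opposite sides of such a hyperplane can receive different affine velocities.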

3D Normal Coordinate Systems for Cortical Areas

Aug 07, 2018

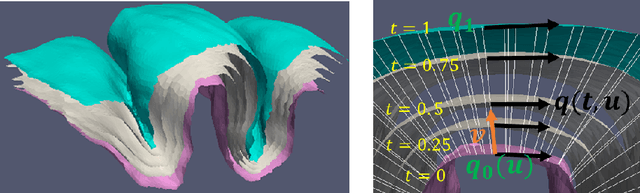

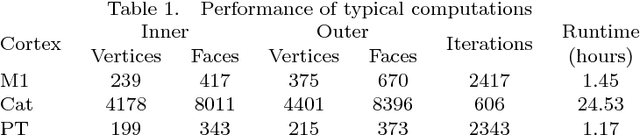

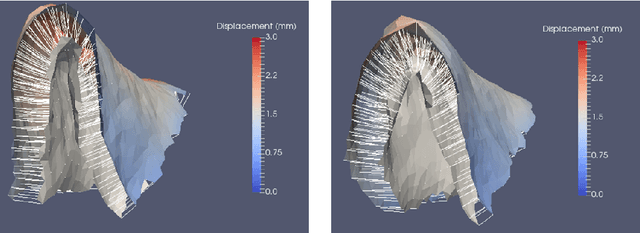

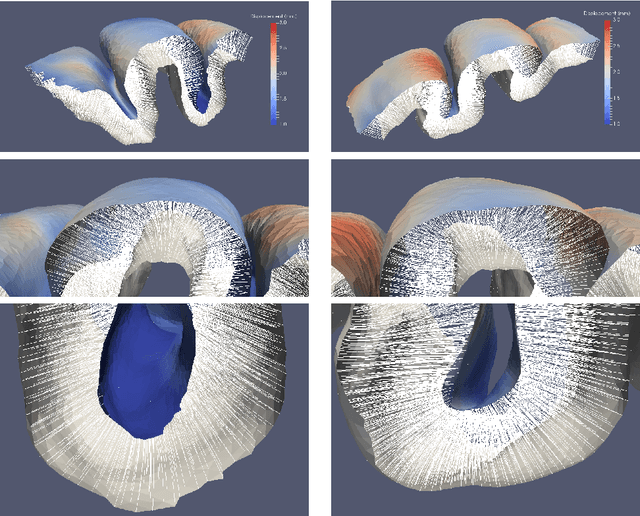

Abstract: A surface-based diffeomorphic algorithm to generate 3D coordinate grids in the cortical ribbon is described. In the grid, normal coordinate lines are generated by the diffeomorphic evolution from the grey/white (inner) surface to the grey/CSF (outer) surface. Specifically, the cortical ribbon is described by two triangulated surfaces with open boundaries. Conceptually, the inner surface sits on top of the white matter structure and the outer surface on top of the grey matter. It is assumed that the cortical ribbon consists of cortical columns that are orthogonal to the white matter surface. This might be viewed as a consequence of the development of the columns in the embryo. It is also assumed that the columns are orthogonal to the outer surface, so that the resulting vector field is orthogonal to the evolving surface. The length of the normal lines traced by the vector field that evolves the inner surface diffeomorphically towards the outer one can then be construed as a measure of thickness. Applications are described for the auditory cortices in human adults and cats with normal hearing or hearing loss. The approach offers great potential for cortical morphometry.
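
A thickness measure of this kind can be sketched by flowing inner-surface points along a velocity field and summing the lengths of their Euler steps. This is a toy illustration under stated assumptions, not the published algorithm: the field is supplied as a hypothetical callable `velocity(x, t)` that carries the inner surface onto the outer one for t in [0, 1], whereas the paper derives it from a diffeomorphic surface evolution. The example field `radial_field` (also hypothetical) maps a sphere of radius `r_inner` onto a concentric sphere of radius `r_outer`; being radial, it is normal to every intermediate sphere, consistent with the orthogonality assumptions above.

```python
import numpy as np


def flow_lengths(points, velocity, n_steps=100):
    """Forward-Euler flow of inner-surface points under dx/dt = velocity(x, t),
    t in [0, 1]. Returns the end points and the length of each trajectory,
    which serves here as the thickness estimate."""
    dt = 1.0 / n_steps
    x = np.array(points, dtype=float)
    lengths = np.zeros(len(x))
    for k in range(n_steps):
        step = dt * velocity(x, k * dt)
        lengths += np.linalg.norm(step, axis=1)  # accumulate arc length
        x = x + step
    return x, lengths


def radial_field(r_inner, r_outer):
    """Hypothetical toy field: moves a sphere of radius r_inner along its normals
    at constant speed so that it reaches radius r_outer at t = 1."""
    def velocity(x, t):
        u = x / np.linalg.norm(x, axis=1, keepdims=True)  # outward unit normal
        return (r_outer - r_inner) * u
    return velocity


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    inner = rng.normal(size=(200, 3))
    inner /= np.linalg.norm(inner, axis=1, keepdims=True)  # points on unit sphere
    _, thickness = flow_lengths(inner, radial_field(1.0, 2.5))
    print("thickness range:", thickness.min(), thickness.max())
```

For concentric spheres of radii 1 and 2.5, every trajectory has length 1.5, the expected thickness. A field computed for real cortical surfaces would instead produce one length per inner vertex, i.e. a thickness map.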
