Mingjia Yin

From Feature Interaction to Feature Generation: A Generative Paradigm of CTR Prediction Models

Dec 16, 2025

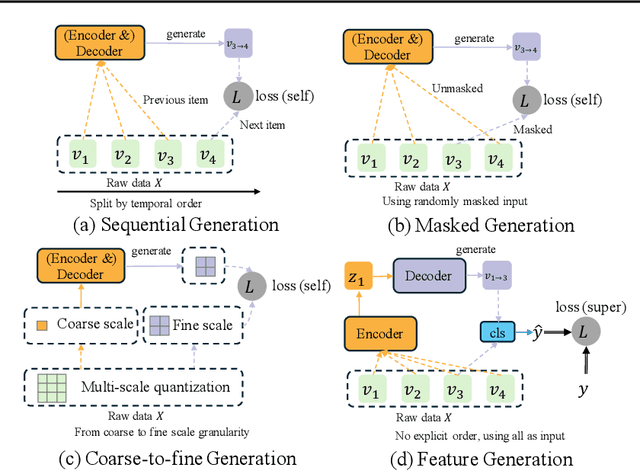

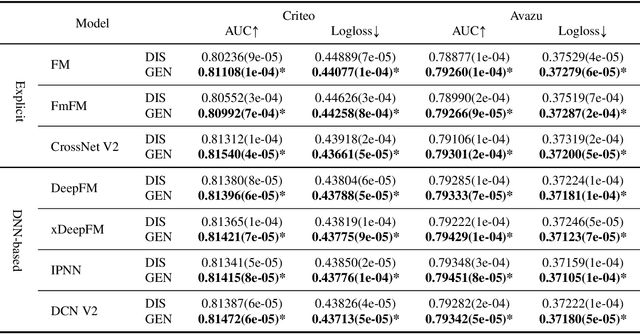

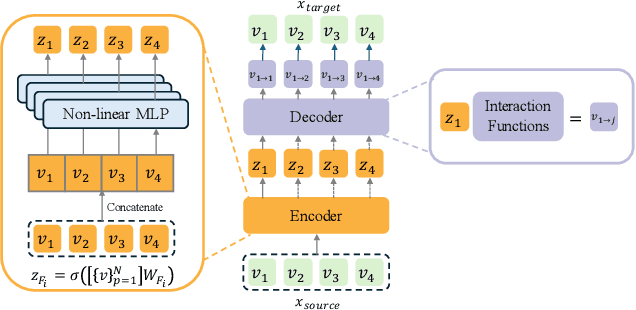

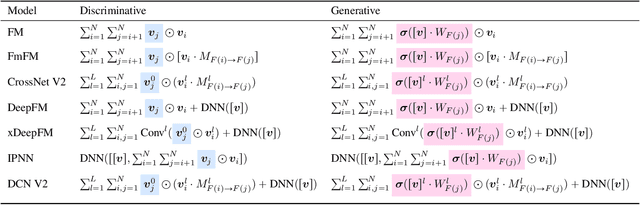

Abstract: Click-Through Rate (CTR) prediction, a core task in recommendation systems, aims to estimate the probability of users clicking on items. Existing models predominantly follow a discriminative paradigm, which relies heavily on explicit interactions between raw ID embeddings. However, this paradigm inherently renders them susceptible to two critical issues: embedding dimensional collapse and information redundancy, stemming from the over-reliance on feature interactions *over raw ID embeddings*. To address these limitations, we propose a novel *Supervised Feature Generation (SFG)* framework, *shifting the paradigm from discriminative "feature interaction" to generative "feature generation"*. Specifically, SFG comprises two key components: an *Encoder* that constructs hidden embeddings for each feature, and a *Decoder* tasked with regenerating the feature embeddings of all features from these hidden representations. Unlike existing generative approaches that adopt self-supervised losses, we introduce a supervised loss to utilize the supervised signal, i.e., click or not, in the CTR prediction task. This framework exhibits strong generalizability: it can be seamlessly integrated with most existing CTR models, reformulating them under the generative paradigm. Extensive experiments demonstrate that SFG consistently mitigates embedding collapse and reduces information redundancy, while yielding substantial performance gains across various datasets and base models. The code is available at https://github.com/USTC-StarTeam/GE4Rec.

Enhancing CTR Prediction with De-correlated Expert Networks

May 23, 2025

Abstract: Modeling feature interactions is essential for accurate click-through rate (CTR) prediction in advertising systems. Recent studies have adopted the Mixture-of-Experts (MoE) approach to improve performance by ensembling multiple feature interaction experts. These studies employ various strategies, such as learning independent embedding tables for each expert or utilizing heterogeneous expert architectures, to differentiate the experts, which we refer to as expert *de-correlation*. However, it remains unclear whether these strategies effectively achieve de-correlated experts. To address this, we propose a De-Correlated MoE (D-MoE) framework, which introduces a Cross-Expert De-Correlation loss to minimize expert correlations. Additionally, we propose a novel metric, termed Cross-Expert Correlation, to quantitatively evaluate the degree of expert de-correlation. Based on this metric, we identify a key finding for MoE framework design: *different de-correlation strategies are mutually compatible, and progressively employing them leads to reduced correlation and enhanced performance*. Extensive experiments have been conducted to validate the effectiveness of D-MoE and the de-correlation principle. Moreover, online A/B testing on Tencent's advertising platforms demonstrates that D-MoE achieves a significant 1.19% Gross Merchandise Volume (GMV) lift compared to the Multi-Embedding MoE baseline.
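
The abstract does not spell out how Cross-Expert Correlation is computed. Purely as an illustration of what measuring correlation between experts could look like, the sketch below averages the absolute Pearson correlation over all pairs of expert outputs. The function names and the choice of Pearson correlation are assumptions made for this example, not the paper's definition.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation of two equal-length lists of floats."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant output carries no linear correlation
    return cov / math.sqrt(var_x * var_y)


def mean_abs_correlation(expert_outputs):
    """Hypothetical stand-in metric: mean |Pearson| over all expert pairs.

    expert_outputs[e][s] is the scalar output of expert e on sample s.
    """
    pairs = list(combinations(expert_outputs, 2))
    return sum(abs(pearson(x, y)) for x, y in pairs) / len(pairs)


# Two experts whose outputs differ only by a scale factor are fully correlated.
redundant_experts = [[1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]]
# Two experts with linearly unrelated outputs.
distinct_experts = [[1.0, 2.0, 3.0, 4.0], [1.0, -1.0, -1.0, 1.0]]
```

Under this stand-in, a lower value means the experts respond more differently to the same samples, which is the direction a de-correlation loss would push.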

TD3: Tucker Decomposition Based Dataset Distillation Method for Sequential Recommendation

Feb 06, 2025

Abstract: In the era of data-centric AI, the focus of recommender systems has shifted from model-centric innovations to data-centric approaches. The success of modern AI models is built on large-scale datasets, but this also results in significant training costs. Dataset distillation has emerged as a key solution, condensing large datasets to accelerate model training while preserving model performance. However, condensing discrete and sequentially correlated user-item interactions, particularly with extensive item sets, presents considerable challenges. This paper introduces **TD3**, a novel **T**ucker **D**ecomposition based **D**ataset **D**istillation method within a meta-learning framework, designed for sequential recommendation. TD3 distills a fully expressive *synthetic sequence summary* from original data. To efficiently reduce computational complexity and extract refined latent patterns, Tucker decomposition decouples the summary into four factors: *synthetic user latent factor*, *temporal dynamics latent factor*, *shared item latent factor*, and a *relation core* that models their interconnections. Additionally, a surrogate objective in bi-level optimization is proposed to align feature spaces extracted from models trained on both original data and synthetic sequence summary beyond the naïve performance matching approach. In the *inner-loop*, an augmentation technique allows the learner to closely fit the synthetic summary, ensuring an accurate update of it in the *outer-loop*. To accelerate the optimization process and address long dependencies, RaT-BPTT is employed for bi-level optimization. Experiments and analyses on multiple public datasets have confirmed the superiority and cross-architecture generalizability of the proposed designs. The code is released at https://github.com/USTC-StarTeam/TD3.
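
To make the four-factor structure concrete, here is a minimal pure-Python sketch of a standard Tucker reconstruction. It assumes the summary is a three-way tensor indexed by (synthetic user, time step, item); that shape, the function names, and the toy values are assumptions for this example and are not taken from the TD3 code.

```python
def tucker_reconstruct(core, user_f, time_f, item_f):
    """Rebuild S[n][l][i] = sum_{a,b,c} core[a][b][c] * U[n][a] * T[l][b] * V[i][c].

    core   : r1 x r2 x r3 nested list (relation core)
    user_f : N x r1 list of lists (user latent factor)
    time_f : L x r2 list of lists (temporal latent factor)
    item_f : I x r3 list of lists (item latent factor)
    """
    r1, r2, r3 = len(core), len(core[0]), len(core[0][0])
    return [
        [
            [
                sum(
                    core[a][b][c] * u[a] * t[b] * v[c]
                    for a in range(r1)
                    for b in range(r2)
                    for c in range(r3)
                )
                for v in item_f
            ]
            for t in time_f
        ]
        for u in user_f
    ]


def parameter_counts(n_users, n_steps, n_items, r1, r2, r3):
    """Return (dense summary size, factorized size) in number of parameters."""
    dense = n_users * n_steps * n_items
    factored = n_users * r1 + n_steps * r2 + n_items * r3 + r1 * r2 * r3
    return dense, factored


# Toy rank-(1, 1, 1) example: 2 synthetic users, 1 time step, 2 items.
summary = tucker_reconstruct(
    core=[[[2.0]]],
    user_f=[[1.0], [3.0]],
    time_f=[[1.0]],
    item_f=[[1.0], [0.5]],
)
dense, factored = parameter_counts(50, 20, 10000, 8, 8, 16)
```

With the hypothetical sizes passed to `parameter_counts`, the dense tensor would hold 10,000,000 entries while the factors hold 161,584, which shows why factorizing helps when the item set is large.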

MDAP: A Multi-view Disentangled and Adaptive Preference Learning Framework for Cross-Domain Recommendation

Oct 08, 2024

Abstract: Cross-domain recommendation (CDR) systems leverage multi-domain user interactions to improve performance, especially in sparse data or new user scenarios. However, CDR faces challenges such as effectively capturing user preferences and avoiding negative transfer. To address these issues, we propose the Multi-view Disentangled and Adaptive Preference Learning (MDAP) framework. Our MDAP framework uses a multi-view encoder to capture diverse user preferences. The framework includes a gated decoder that adaptively combines embeddings from different views to generate a comprehensive user representation. By disentangling representations and allowing adaptive feature selection, our model enhances adaptability and effectiveness. Extensive experiments on benchmark datasets demonstrate that our method significantly outperforms state-of-the-art CDR and single-domain models, providing more accurate recommendations and deeper insights into user behavior across different domains.

Entropy Law: The Story Behind Data Compression and LLM Performance

Jul 11, 2024

Abstract: Data is the cornerstone of large language models (LLMs), but not all data is useful for model learning. Carefully selected data can better elicit the capabilities of LLMs with much less computational overhead. Most methods concentrate on evaluating the quality of individual samples in data selection, while the combinatorial effects among samples are neglected. Even if each sample is of perfect quality, their combinations may be suboptimal in teaching LLMs due to their intrinsic homogeneity or contradiction. In this paper, we aim to uncover the underlying relationships between LLM performance and data selection. Inspired by the information compression nature of LLMs, we uncover an "entropy law" that connects LLM performance with data compression ratio and first-epoch training loss, which reflect the information redundancy of a dataset and the mastery of inherent knowledge encoded in this dataset, respectively. Through both theoretical deduction and empirical evaluation, we find that model performance is negatively correlated with the compression ratio of training data, which usually yields a lower training loss. Based on the findings of the entropy law, we propose an efficient and universal data selection method named **ZIP** for training LLMs, which aims to prioritize data subsets exhibiting a low compression ratio. Based on a multi-stage algorithm that selects diverse data in a greedy manner, we can obtain a good data subset with satisfactory diversity. Extensive experiments have been conducted to validate the entropy law and the superiority of ZIP across different LLM backbones and alignment stages. We also present an interesting application of the entropy law that can detect potential performance risks at the beginning of model training.
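
The idea of preferring subsets with a low compression ratio can be illustrated with a small sketch. It assumes the ratio is the uncompressed size divided by the compressed size, so that redundant data scores higher, and it uses Python's `zlib` as a stand-in compressor. The single-stage greedy loop and the function names are simplifications made for this example, not the multi-stage ZIP algorithm itself.

```python
import zlib


def compression_ratio(texts):
    """Uncompressed bytes / compressed bytes for a list of strings (assumed definition)."""
    raw = "\n".join(texts).encode("utf-8")
    if not raw:
        raise ValueError("need at least one non-empty text")
    return len(raw) / len(zlib.compress(raw, 9))


def greedy_select(pool, k):
    """Hypothetical single-stage greedy pick: at each step add the sample
    that keeps the compression ratio of the selected set lowest."""
    selected, remaining = [], list(pool)
    while remaining and len(selected) < k:
        best = min(remaining, key=lambda t: compression_ratio(selected + [t]))
        selected.append(best)
        remaining.remove(best)
    return selected


redundant = ["the cat sat on the mat"] * 8
diverse = [
    "Gradient descent updates weights iteratively.",
    "Zebras graze quietly near the river at dusk.",
    "Quantum keys resist many classical attacks.",
    "Fresh basil improves a simple tomato sauce.",
]
chosen = greedy_select(redundant + diverse, 3)
```

Here `compression_ratio(redundant)` comes out higher than `compression_ratio(diverse)`, matching the reading that a high ratio signals redundancy. Recompressing the whole selected set for every candidate is quadratic in the pool size, so anything beyond a toy pool would need a cheaper candidate-filtering stage first.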

Dataset Regeneration for Sequential Recommendation

May 28, 2024

Abstract: The sequential recommender (SR) system is a crucial component of modern recommender systems, as it aims to capture the evolving preferences of users. Significant efforts have been made to enhance the capabilities of SR systems. These methods typically follow the **model-centric** paradigm, which involves developing effective models based on fixed datasets. However, this approach often overlooks potential quality issues and flaws inherent in the data. Driven by the potential of **data-centric** AI, we propose a novel data-centric paradigm for developing an ideal training dataset using a model-agnostic dataset regeneration framework called DR4SR. This framework enables the regeneration of a dataset with exceptional cross-architecture generalizability. Additionally, we introduce the DR4SR+ framework, which incorporates a model-aware dataset personalizer to tailor the regenerated dataset specifically for a target model. To demonstrate the effectiveness of the data-centric paradigm, we integrate our framework with various model-centric methods and observe significant performance improvements across four widely adopted datasets. Furthermore, we conduct in-depth analyses to explore the potential of the data-centric paradigm and provide valuable insights. The code can be found at https://anonymous.4open.science/r/KDD2024-86EA/.

Learning Partially Aligned Item Representation for Cross-Domain Sequential Recommendation

May 21, 2024

Abstract: Cross-domain sequential recommendation (CDSR) aims to uncover and transfer users' sequential preferences across multiple recommendation domains. While significant endeavors have been made, they have primarily concentrated on developing advanced transfer modules and aligning user representations using self-supervised learning techniques. However, the problem of aligning item representations has received limited attention, and misaligned item representations can potentially lead to sub-optimal sequential modeling and user representation alignment. To this end, we propose a model-agnostic framework called **C**ross-domain item representation **A**lignment for **C**ross-**D**omain **S**equential **R**ecommendation (**CA-CDSR**), which achieves sequence-aware generation and adaptive partial alignment for item representations. Specifically, we first develop a sequence-aware feature augmentation strategy, which captures both collaborative and sequential item correlations, thus facilitating holistic item representation generation. Next, we conduct an empirical study to investigate the partial representation alignment problem from a spectrum perspective. It motivates us to devise an adaptive spectrum filter, achieving partial alignment adaptively. Furthermore, the aligned item representations can be fed into different sequential encoders to obtain user representations. The entire framework is optimized in a multi-task learning paradigm with an annealing strategy. Extensive experiments have demonstrated that CA-CDSR can surpass state-of-the-art baselines by a significant margin and can effectively align items in representation spaces to enhance performance.

A Unified Framework for Adaptive Representation Enhancement and Inversed Learning in Cross-Domain Recommendation

Mar 30, 2024

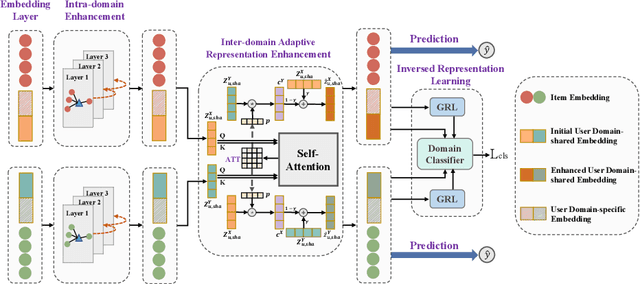

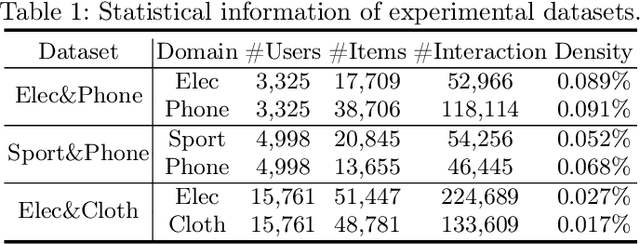

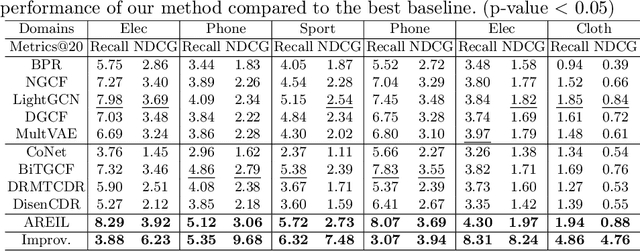

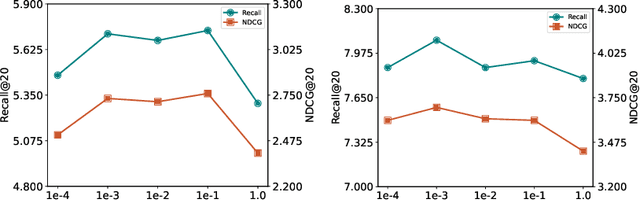

Abstract: Cross-domain recommendation (CDR), aiming to extract and transfer knowledge across domains, has attracted wide attention for its efficacy in addressing data sparsity and cold-start problems. Despite significant advances in representation disentanglement to capture diverse user preferences, existing methods usually neglect representation enhancement and lack rigorous decoupling constraints, thereby limiting the transfer of relevant information. To this end, we propose a Unified Framework for Adaptive Representation Enhancement and Inversed Learning in Cross-Domain Recommendation (AREIL). Specifically, we first divide user embeddings into domain-shared and domain-specific components to disentangle mixed user preferences. Then, we incorporate intra-domain and inter-domain information to adaptively enhance the ability of user representations. In particular, we propose a graph convolution module to capture high-order information, and a self-attention module to reveal inter-domain correlations and accomplish adaptive fusion. Next, we adopt domain classifiers and gradient reversal layers to achieve inversed representation learning in a unified framework. Finally, we employ a cross-entropy loss for measuring recommendation performance and jointly optimize the entire framework via multi-task learning. Extensive experiments on multiple datasets validate the substantial improvement in the recommendation performance of AREIL. Moreover, ablation studies and representation visualizations further illustrate the effectiveness of adaptive enhancement and inversed learning in CDR.

APGL4SR: A Generic Framework with Adaptive and Personalized Global Collaborative Information in Sequential Recommendation

Nov 06, 2023

Abstract: The sequential recommendation system has been widely studied for its promising effectiveness in capturing dynamic preferences buried in users' sequential behaviors. Despite the considerable achievements, existing methods usually focus on intra-sequence modeling while overlooking the exploitation of global collaborative information through inter-sequence modeling, resulting in inferior recommendation performance. Therefore, previous works attempt to tackle this problem with a global collaborative item graph constructed by pre-defined rules. However, these methods neglect two crucial properties when capturing global collaborative information, i.e., adaptiveness and personalization, yielding sub-optimal user representations. To this end, we propose a graph-driven framework, named Adaptive and Personalized Graph Learning for Sequential Recommendation (APGL4SR), that incorporates adaptive and personalized global collaborative information into sequential recommendation systems. Specifically, we first learn an adaptive global graph among all items and capture global collaborative information with it in a self-supervised fashion, whose computational burden can be further alleviated by the proposed SVD-based accelerator. Furthermore, based on the graph, we propose to extract and utilize personalized item correlations in the form of relative positional encoding, which is a highly compatible manner of personalizing the utilization of global collaborative information. Finally, the entire framework is optimized in a multi-task learning paradigm, so that the parts of APGL4SR can mutually reinforce one another. As a generic framework, APGL4SR can outperform other baselines with significant margins. The code is available at https://github.com/Graph-Team/APGL4SR.
