Min Hua

Multi-Agent Reinforcement Learning for Connected and Automated Vehicles Control: Recent Advancements and Future Prospects

Dec 18, 2023

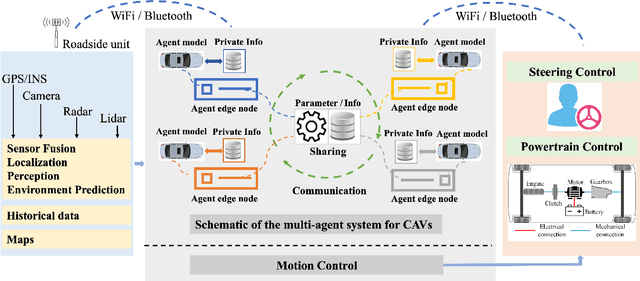

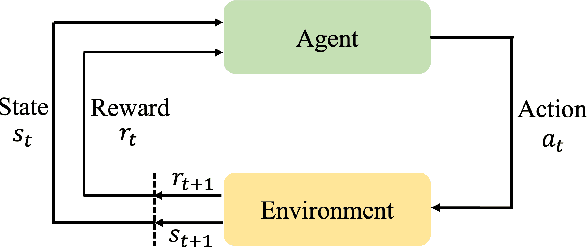

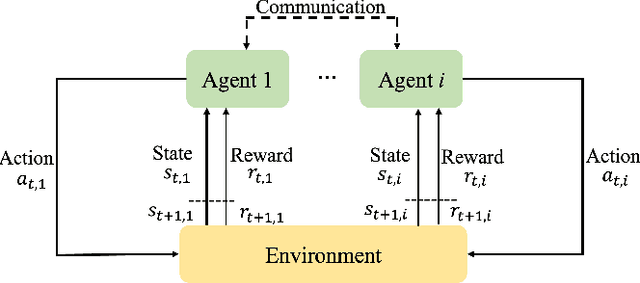

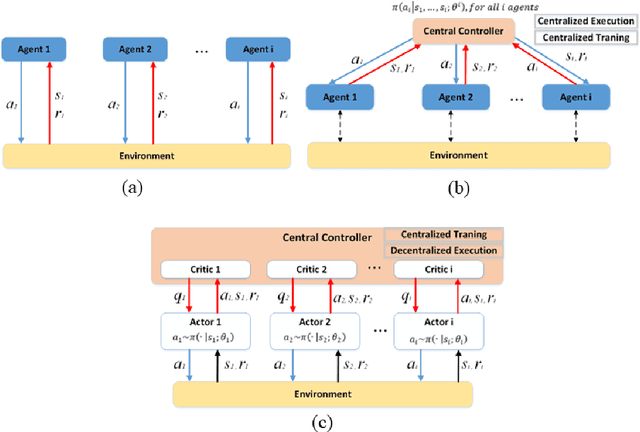

Abstract: Connected and automated vehicles (CAVs) have emerged as a potential solution to the future challenges of developing safe, efficient, and eco-friendly transportation systems. However, CAV control presents significant challenges, given the complexity of interconnectivity and coordination required among the vehicles. To address this, multi-agent reinforcement learning (MARL), with its notable advancements in addressing complex problems in autonomous driving, robotics, and human-vehicle interaction, has emerged as a promising tool for enhancing the capabilities of CAVs. However, there is a notable absence of current reviews on the state-of-the-art MARL algorithms in the context of CAVs. Therefore, this paper delivers a comprehensive review of the application of MARL techniques within the field of CAV control. The paper begins by introducing MARL, followed by a detailed explanation of its unique advantages in addressing complex mobility and traffic scenarios that involve multiple agents. It then presents a comprehensive survey of MARL applications across the control dimensions of CAVs, covering critical and typical scenarios such as platooning control, lane-changing, and unsignalized intersections. In addition, the paper provides a comprehensive review of the prominent simulation platforms used to create reliable environments for training in MARL. Lastly, the paper examines the current challenges associated with deploying MARL within CAV control and outlines potential solutions that can effectively overcome these issues. Through this review, the study highlights the tremendous potential of MARL to enhance the performance and collaboration of CAV control in terms of safety, travel efficiency, and economy.

Recent Progress in Energy Management of Connected Hybrid Electric Vehicles Using Reinforcement Learning

Aug 28, 2023

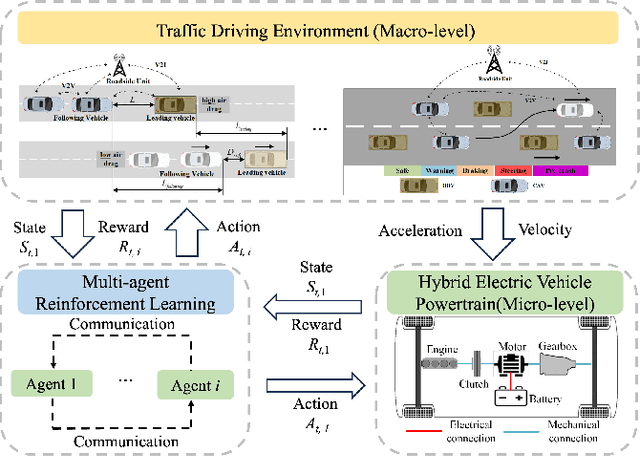

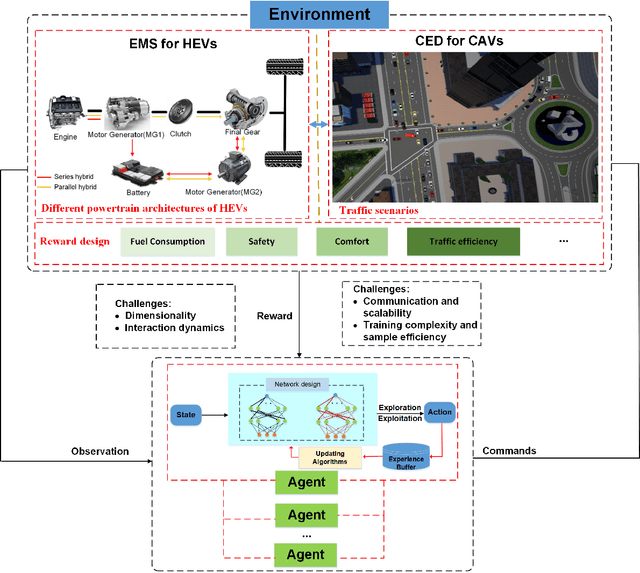

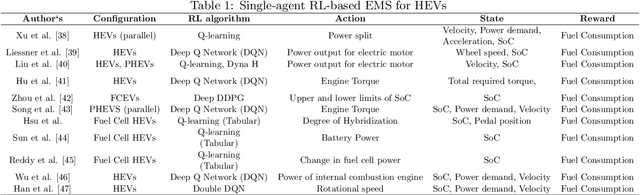

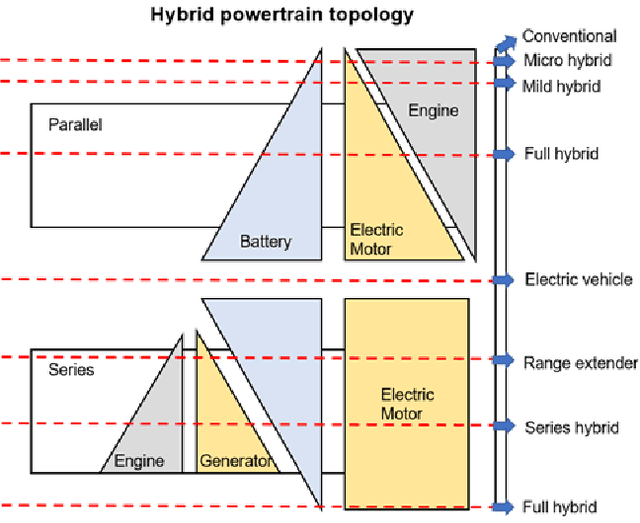

Abstract: The growing adoption of hybrid electric vehicles (HEVs) presents a transformative opportunity for revolutionizing transportation energy systems. The shift towards electrifying transportation aims to curb environmental concerns related to fossil fuel consumption. This necessitates efficient energy management systems (EMS) to optimize energy efficiency. The evolution of EMS from HEVs to connected hybrid electric vehicles (CHEVs) represents a pivotal shift. For HEVs, EMS now confronts the intricate energy cooperation requirements of CHEVs, necessitating advanced algorithms for route optimization, charging coordination, and load distribution. Challenges persist in both domains, including optimal energy utilization for HEVs, and cooperative eco-driving control (CED) for CHEVs across diverse vehicle types. Reinforcement learning (RL) stands out as a promising tool for addressing these challenges. Specifically, within the realm of CHEVs, the application of multi-agent reinforcement learning (MARL) emerges as a powerful approach for effectively tackling the intricacies of CED. Despite extensive research, few reviews span from individual vehicles to multi-vehicle scenarios. This review bridges the gap, highlighting challenges, advancements, and potential contributions of RL-based solutions for future sustainable transportation systems.

Study on the Impacts of Hazardous Behaviors on Autonomous Vehicle Collision Rates Based on Humanoid Scenario Generation in CARLA

Jul 15, 2023

Abstract: Testing of functional safety and Safety Of The Intended Functionality (SOTIF) is important for autonomous vehicles (AVs). It is hard to test an AV's hazard response in the real world because doing so would endanger passengers and other road users. This paper studies virtual testing of AVs on the CARLA platform and proposes a Humanoid Scenario Generation (HSG) scheme to investigate the impacts of hazardous behaviors on AV collision rates. The HSG scheme breaks through the current limitations on the rarity and reproducibility of real scenes. By accurately capturing five prominent human driver behaviors that directly contribute to vehicle collisions in the real world, the methodology significantly enhances the realism and diversity of the simulation, as evidenced by collision rate statistics across various traffic scenarios. Moreover, the modular framework allows for customization, and its seamless integration within the CARLA platform ensures compatibility with existing tools. Ultimately, the comparison results demonstrate that all vehicles that exhibited hazardous behaviors followed the predefined random speed distribution, and the effectiveness of the HSG was validated by the distinct characteristics displayed by these behaviors.

Coordinated Control of Path Tracking and Yaw Stability for Distributed Drive Electric Vehicle Based on AMPC and DYC

Apr 24, 2023

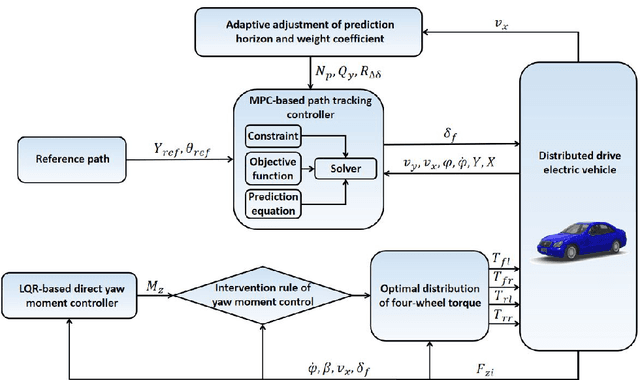

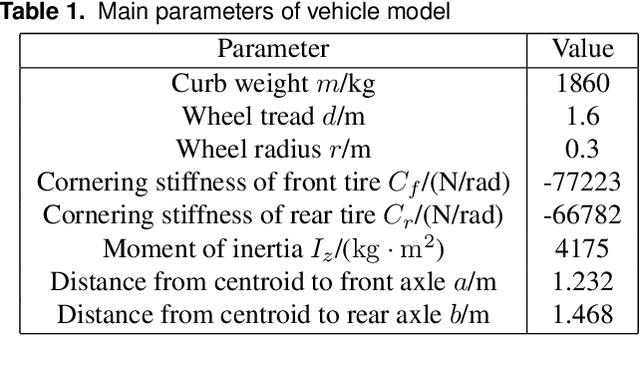

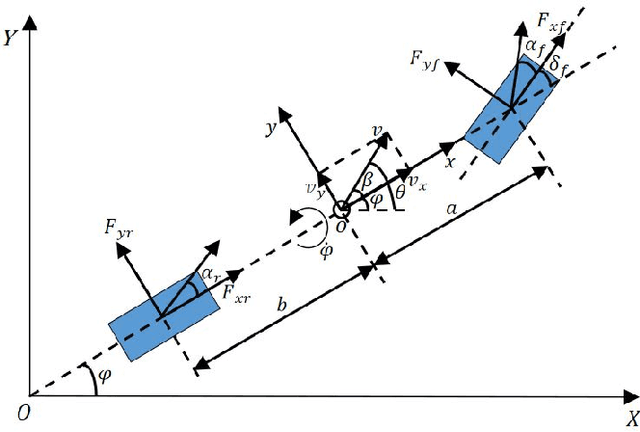

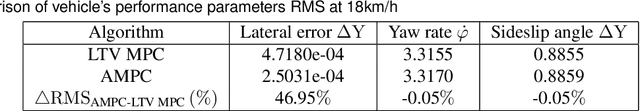

Abstract: Maintaining both path-tracking accuracy and yaw stability of distributed drive electric vehicles (DDEVs) under various driving conditions presents a significant challenge in the field of vehicle control. To address this challenge, a coordinated control strategy that integrates adaptive model predictive control (AMPC) path-tracking control and direct yaw moment control (DYC) is proposed for DDEVs. The proposed strategy, inspired by a hierarchical framework, is coordinated by the upper layer of path-tracking control and the lower layer of direct yaw moment control. Based on the linear time-varying model predictive control (LTV MPC) algorithm, the effects of prediction horizon and weight coefficients on the path-tracking accuracy and yaw stability of the vehicle are compared and analyzed first. According to this analysis, an AMPC path-tracking controller with variable prediction horizon and weight coefficients is designed in the upper layer, taking the variation of vehicle speed into account. The lower layer involves DYC based on the linear quadratic regulator (LQR) technique. Specifically, the intervention rule of DYC is determined by the threshold of the yaw rate error and the phase diagram of the sideslip angle. Extensive simulation experiments are conducted to evaluate the proposed coordinated control strategy under different driving conditions. The results show that, under variable speed and low adhesion conditions, the vehicle's yaw stability and path-tracking accuracy have been improved by 21.58% and 14.43%, respectively, compared to AMPC. Similarly, under high speed and low adhesion conditions, the vehicle's yaw stability and path-tracking accuracy have been improved by 44.30% and 14.25%, respectively, compared to the coordination of LTV MPC and DYC. The results indicate that the proposed adaptive path-tracking controller is effective across different speeds.

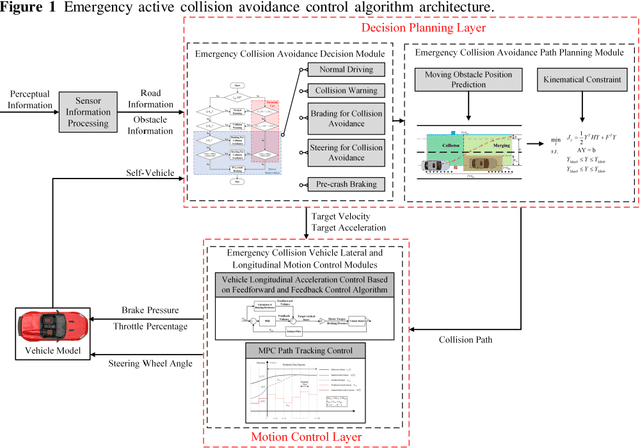

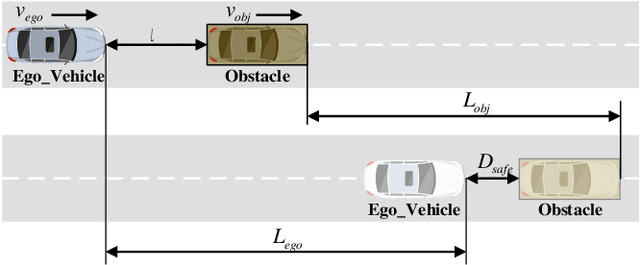

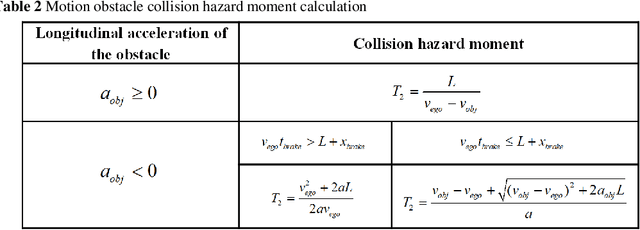

Multi-level decision framework collision avoidance algorithm in emergency scenarios

Apr 21, 2023

Abstract: With the rapid development of autonomous driving, the attention of academia has increasingly focused on the development of anti-collision systems in emergency scenarios, which have a crucial impact on driving safety. While numerous anti-collision strategies have emerged in recent years, most of them only consider steering or braking. The dynamic and complex nature of the driving environment presents a challenge to developing robust collision avoidance algorithms in emergency scenarios. To address the complex, dynamic obstacle scene and improve lateral maneuverability, this paper establishes a multi-level decision-making obstacle avoidance framework that employs the safe distance model and integrates emergency steering and emergency braking to complete the obstacle avoidance process. This approach helps avoid the high-risk situation of vehicle instability that can result from the separation of steering and braking actions. In the emergency steering algorithm, we define the collision hazard moment and propose a multi-constraint dynamic collision avoidance planning method that considers the driving area. Simulation results demonstrate that the decision-making collision avoidance logic can be applied to dynamic collision avoidance scenarios in complex traffic situations, effectively completing the obstacle avoidance task in emergency scenarios and improving the safety of autonomous driving.
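
The abstract does not spell out the safe distance model or the decision levels. As a rough illustration only, the sketch below assumes a textbook stopping-distance formula for a stationary obstacle and a hypothetical three-way decision; the function names, the reaction time, the deceleration limit, and the safety margin are all assumptions, not values from the paper.

```python
def braking_distance(speed, reaction_time=0.5, max_decel=8.0, margin=2.0):
    """Distance (m) needed to stop from `speed` (m/s).

    Assumed model: travel during the reaction time, plus v^2 / (2a)
    under constant deceleration, plus a fixed safety margin.
    """
    return speed * reaction_time + speed ** 2 / (2.0 * max_decel) + margin


def choose_maneuver(gap, speed, adjacent_lane_free):
    """Pick a maneuver for a stationary obstacle `gap` metres ahead.

    Hypothetical decision levels:
      1. braking alone is enough        -> "brake"
      2. too close, but a lane is free  -> "steer_and_brake"
      3. too close and no free lane     -> "full_brake" (mitigation only)
    """
    if gap >= braking_distance(speed):
        return "brake"
    if adjacent_lane_free:
        return "steer_and_brake"
    return "full_brake"


if __name__ == "__main__":
    for gap in (50.0, 30.0):
        for lane_free in (True, False):
            print(gap, lane_free, choose_maneuver(gap, 20.0, lane_free))
```

Combining steering with braking in the second branch reflects the abstract's point that the two actions are integrated rather than handled separately; how the paper actually blends them is not stated here.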

Energy Management of Multi-mode Plug-in Hybrid Electric Vehicle using Multi-agent Deep Reinforcement Learning

Mar 16, 2023

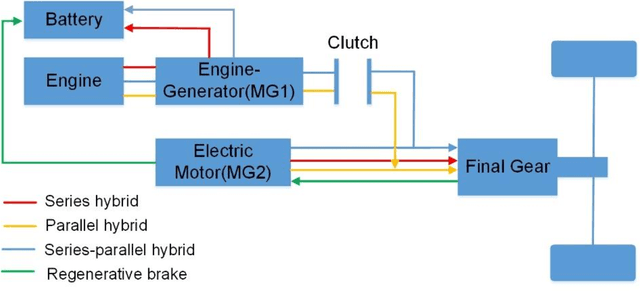

Abstract: The recently emerging multi-mode plug-in hybrid electric vehicle (PHEV) technology is one of the pathways contributing to decarbonization, and its energy management requires multiple-input and multiple-output (MIMO) control. At present, the existing methods usually decouple the MIMO control into multiple-input and single-output (MISO) control and can only achieve locally optimal performance. To optimize the multi-mode vehicle globally, this paper studies a MIMO control method for energy management of the multi-mode PHEV based on multi-agent deep reinforcement learning (MADRL). By introducing a relevance ratio, a hand-shaking strategy is proposed to enable two learning agents to work collaboratively under the MADRL framework using the deep deterministic policy gradient (DDPG) algorithm. Unified settings for the DDPG agents are obtained through a sensitivity analysis of the factors influencing the learning performance. The optimal working mode for the hand-shaking strategy is attained through a parametric study on the relevance ratio. The advantage of the proposed energy management method is demonstrated on a software-in-the-loop testing platform. The results of the study indicate that the learning rate of the DDPG agents is the greatest factor in learning performance. Using the unified DDPG settings and a relevance ratio of 0.2, the proposed MADRL method can save up to 4% energy compared to the single-agent method.
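
The abstract does not define how the relevance ratio couples the two agents. One plausible reading, shown here purely as an assumption, is reward blending: each agent's training reward mixes its own reward with its partner's, weighted by the ratio, so that a ratio of 0 gives fully independent agents. The function name `blend_rewards` is hypothetical.

```python
def blend_rewards(reward_a, reward_b, relevance_ratio=0.2):
    """Return the blended training rewards for two cooperating agents.

    Assumed coupling (not confirmed by the abstract):
      blended_a = (1 - r) * reward_a + r * reward_b
      blended_b = (1 - r) * reward_b + r * reward_a
    With r = 0 the agents learn independently; with r = 0.5 both
    agents optimize the same averaged reward.
    """
    if not 0.0 <= relevance_ratio <= 1.0:
        raise ValueError("relevance_ratio must lie in [0, 1]")
    r = relevance_ratio
    blended_a = (1.0 - r) * reward_a + r * reward_b
    blended_b = (1.0 - r) * reward_b + r * reward_a
    return blended_a, blended_b


if __name__ == "__main__":
    print(blend_rewards(1.0, 3.0, relevance_ratio=0.2))
```

Under this reading, sweeping `relevance_ratio` over a grid would correspond to the parametric study the abstract mentions.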

Optimal Energy Management of Plug-in Hybrid Vehicles Through Exploration-to-Exploitation Ratio Control in Ensemble Reinforcement Learning

Mar 15, 2023

Abstract: Developing intelligent energy management systems with high adaptability and superiority is necessary and significant for Hybrid Electric Vehicles (HEVs). This paper proposes an ensemble learning scheme based on a learning automata module (LAM) to enhance vehicle energy efficiency. Two parallel base learners following two exploration-to-exploitation (E2E) ratio methods are used to generate an optimal solution, and the final action is jointly determined by the LAM using three ensemble methods. 'Reciprocal function-based decay' (RBD) and 'Step-based decay' (SBD) are proposed, respectively, to generate E2E ratio trajectories based on the conventional Exponential decay (EXD) functions of reinforcement learning. Furthermore, considering the different performances of the three decay functions, an optimal combination of the RBD, SBD, and EXD is employed to determine the ultimate action. Experiments are carried out in software-in-the-loop (SiL) and hardware-in-the-loop (HiL) to validate the potential energy-saving performance under four predefined cycles. The SiL test demonstrates that the ensemble learning system with an optimal combination can achieve 1.09% higher vehicle energy efficiency than a single Q-learning strategy with the EXD function. In the HiL test, the ensemble learning system with an optimal combination can save more than 1.04% in the predefined real-world driving condition compared with the single Q-learning scheme based on the EXD function.
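
The abstract names three decay families for the exploration ratio but does not give their formulas. The sketch below uses generic textbook forms of exponential, reciprocal, and step decay for an epsilon-greedy schedule; the function names and every parameter value (`eps_start`, `eps_end`, `rate`, `drop`, `every`) are assumptions and may differ from the paper's RBD, SBD, and EXD definitions.

```python
import math


def exponential_decay(step, eps_start=1.0, eps_end=0.05, rate=0.01):
    """Exploration ratio that decays as exp(-rate * step) towards eps_end."""
    return eps_end + (eps_start - eps_end) * math.exp(-rate * step)


def reciprocal_decay(step, eps_start=1.0, eps_end=0.05, rate=0.01):
    """Exploration ratio that decays as 1 / (1 + rate * step) towards eps_end."""
    return eps_end + (eps_start - eps_end) / (1.0 + rate * step)


def step_decay(step, eps_start=1.0, eps_end=0.05, drop=0.5, every=100):
    """Exploration ratio multiplied by `drop` every `every` steps, floored at eps_end."""
    return max(eps_end, eps_start * drop ** (step // every))


if __name__ == "__main__":
    # Print the three trajectories at a few training steps for comparison.
    for step in (0, 100, 500, 1000):
        print(
            step,
            round(exponential_decay(step), 4),
            round(reciprocal_decay(step), 4),
            round(step_decay(step), 4),
        )
```

With these generic forms, the reciprocal schedule keeps exploring longer than the exponential one at the same `rate`, which is the kind of difference in behavior an ensemble over decay functions could exploit.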

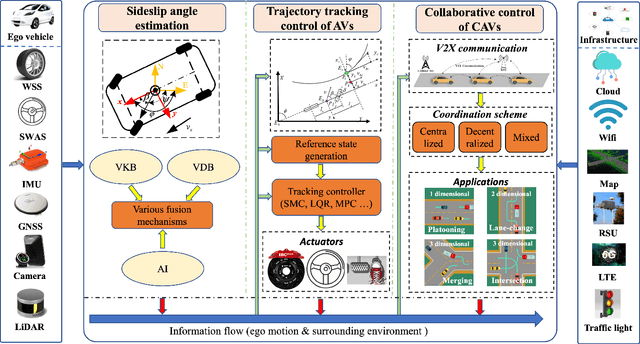

A Systematic Survey of Control Techniques and Applications: From Autonomous Vehicles to Connected and Automated Vehicles

Mar 10, 2023

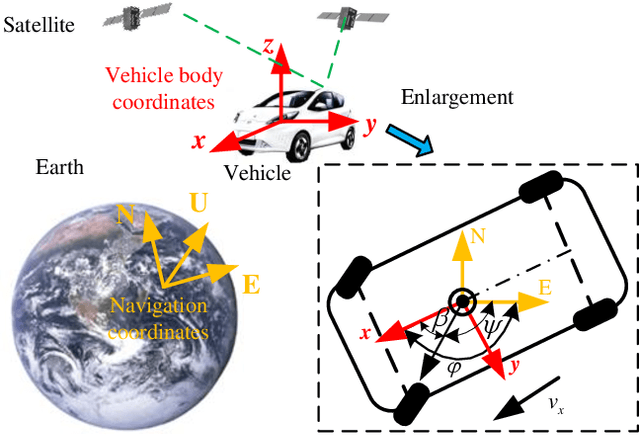

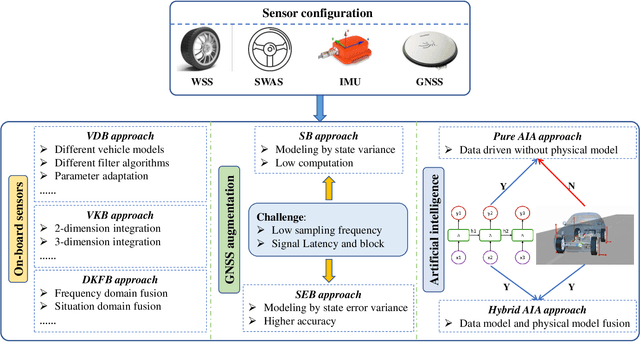

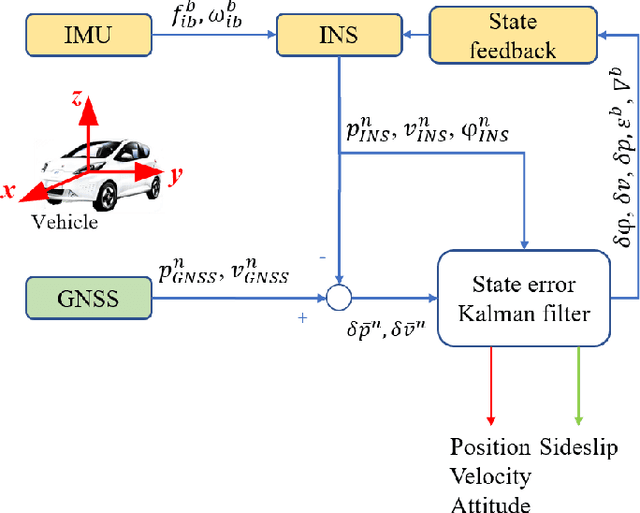

Abstract: Vehicle control is one of the most critical challenges in autonomous vehicles (AVs) and connected and automated vehicles (CAVs), and it is paramount in vehicle safety, passenger comfort, transportation efficiency, and energy saving. This survey attempts to provide a comprehensive and thorough overview of the current state of vehicle control technology, focusing on the evolution from vehicle state estimation and trajectory tracking control in AVs at the microscopic level to collaborative control in CAVs at the macroscopic level. First, this review starts with vehicle key state estimation, specifically vehicle sideslip angle, which is the most pivotal state for vehicle trajectory control, to discuss representative approaches. Then, we present symbolic vehicle trajectory tracking control approaches for AVs. On top of that, we further review the collaborative control frameworks for CAVs and corresponding applications. Finally, this survey concludes with a discussion of future research directions and challenges. This survey aims to provide a contextualized and in-depth look at the state of the art in vehicle control for AVs and CAVs, identifying critical areas of focus and pointing out potential areas for further exploration.

Multi-agent Deep Reinforcement Learning for Charge-sustaining Control of Multi-mode Hybrid Vehicles

Sep 06, 2022

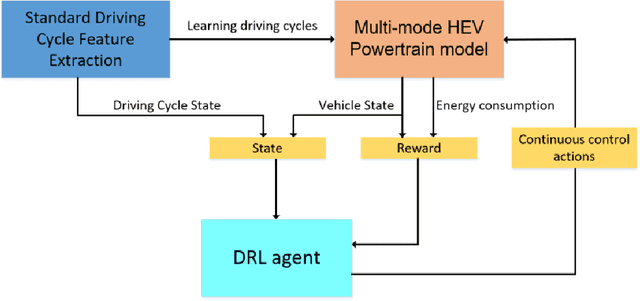

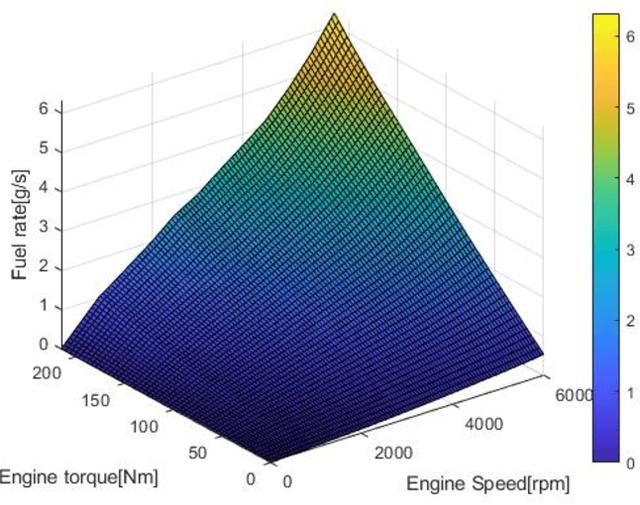

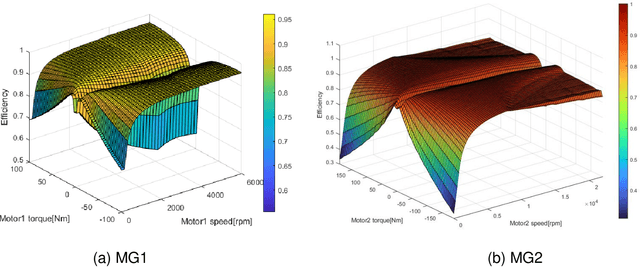

Abstract: Transportation electrification requires an increasing number of electric components (e.g., electric motors and electric energy storage systems) on vehicles, and control of the electric powertrains usually involves multiple inputs and multiple outputs (MIMO). This paper focuses on the online optimization of the energy management strategy for a multi-mode hybrid electric vehicle (HEV) based on multi-agent reinforcement learning (MARL) algorithms that aim to address MIMO control optimization, whereas most existing methods only deal with single-output control. A new collaborative cyber-physical learning scheme with multiple agents is proposed based on an analysis of the evolution of the energy efficiency of the multi-mode HEV optimized by a deep deterministic policy gradient (DDPG)-based MARL algorithm. Then, a learning driving cycle is set by a novel random method to speed up the training process. Finally, network design, learning rate, and policy noise are incorporated in the sensitivity analysis, and the DDPG-based algorithm parameters are determined. The learning performance under different relationships between the agents is studied, demonstrating that a not completely independent relationship with a ratio of 0.2 performs best. The comparison study between the single-agent and multi-agent schemes suggests that the multi-agent scheme can achieve approximately 4% improvement in total energy over the single-agent scheme. Therefore, multi-objective control by MARL can achieve good optimization effects and application efficiency.
