Michele Zorzi

Department of Information Engineering, University of Padova, Italy

Sensing-Based Beamformed Resource Allocation in Standalone Millimeter-Wave Vehicular Networks

Mar 19, 2025

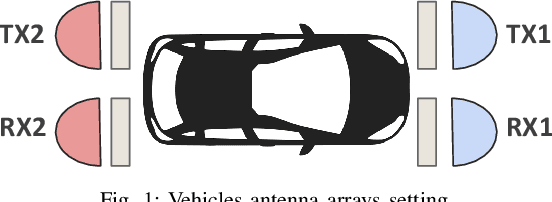

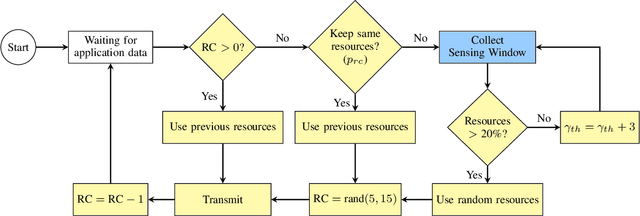

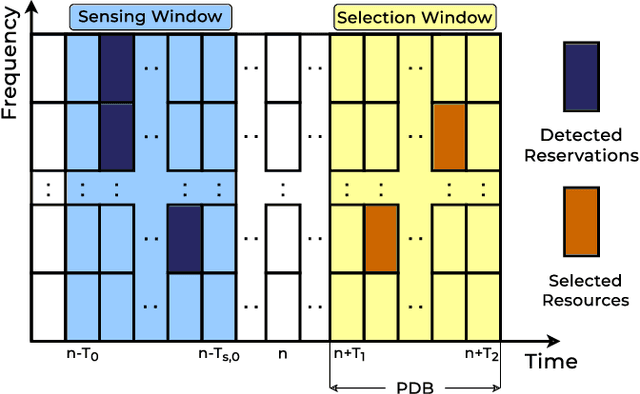

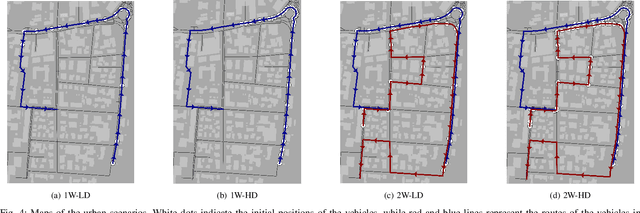

Abstract: In 3GPP New Radio (NR) Vehicle-to-Everything (V2X), the new standard for next-generation vehicular networks, vehicles can autonomously select sidelink resources for data transmission, which permits network operations without cellular coverage. However, standalone resource allocation is uncoordinated, and is complicated by the high mobility of the nodes, which may introduce unforeseen channel collisions (e.g., when a transmitting vehicle changes path) or free up resources (e.g., when a vehicle moves outside of the communication area). Moreover, unscheduled resource allocation is prone to the hidden node and exposed node problems, which are particularly critical considering directional transmissions. In this paper, we implement and demonstrate a new channel access scheme for NR V2X in Frequency Range 2 (FR2), i.e., at millimeter wave (mmWave) frequencies, based on directional and beamformed transmissions along with Sidelink Control Information (SCI) to select resources for transmission. We show via simulation that this approach can reduce the probability of collision for resource allocation, compared to a baseline solution that does not configure SCI transmissions.

Performance Evaluation of IoT LoRa Networks on Mars Through ns-3 Simulations

Dec 27, 2024

Abstract: In recent years, there has been a significant surge of interest in Mars exploration, driven by the planet's potential for human settlement and its proximity to Earth. In this paper, we explore the performance of the LoRaWAN technology on Mars, to study whether commercial off-the-shelf IoT products, designed and developed on Earth, can be deployed on the Martian surface. We use the ns-3 simulator to model various environmental conditions, primarily focusing on the Free Space Path Loss (FSPL) and the impact of Martian dust storms. Simulation results are given with respect to Earth, as a function of the distance, packet size, offered traffic, and the impact of Mars' atmospheric perturbations. We show that LoRaWAN can be a viable communication solution on Mars, although the performance is heavily affected by the extreme Martian environment over long distances.
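As a rough illustration of the FSPL term mentioned in the abstract above, the following minimal sketch evaluates the standard free-space formula $\mathrm{FSPL} = 20\log_{10}(4\pi d f / c)$ in dB. It is not taken from the paper or from ns-3: the function name `fspl_db` and the 868 MHz carrier used in the example are assumptions chosen for illustration, and effects such as dust storms are not modeled.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def fspl_db(distance_m: float, frequency_hz: float) -> float:
    """Free Space Path Loss in dB between isotropic antennas.

    Hypothetical helper for illustration only; it depends solely on
    distance and carrier frequency, not on the planet's atmosphere.
    """
    if distance_m <= 0 or frequency_hz <= 0:
        raise ValueError("distance and frequency must be positive")
    return 20.0 * math.log10(
        4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S
    )


if __name__ == "__main__":
    carrier_hz = 868e6  # assumed carrier frequency, not stated in the abstract
    for distance_km in (1, 5, 10):
        loss = fspl_db(distance_km * 1000.0, carrier_hz)
        print(f"{distance_km:>3} km: {loss:.1f} dB")
```

Under this formula, each doubling of the distance adds about 6 dB of loss, independently of the carrier frequency.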

Enhanced Time Division Duplexing Slot Allocation and Scheduling in Non-Terrestrial Networks

Dec 02, 2024

Abstract: The integration of non-terrestrial networks (NTNs) and terrestrial networks (TNs) is fundamental for extending connectivity to rural and underserved areas that lack coverage from traditional cellular infrastructure. However, this integration presents several challenges. For instance, TNs mainly operate in Time Division Duplexing (TDD), whereas for NTN via satellites TDD is complicated by synchronization problems in large cells, and by the significant impact of guard periods and long propagation delays. In this paper, we propose a novel slot allocation mechanism to enable TDD in NTN. This approach allows additional transmissions to be allocated during the guard period between a downlink slot and the corresponding uplink slot to reduce the overhead, provided that they do not interfere with other concurrent transmissions. Moreover, we propose two scheduling methods to select the users that transmit based on considerations related to the Signal-to-Noise Ratio (SNR) or the propagation delay. Simulations demonstrate that our proposal can increase the network capacity compared to a benchmark scheme that does not schedule transmissions in guard periods.

Effective Communication with Dynamic Feature Compression

Jan 29, 2024

Abstract: The remote wireless control of industrial systems is one of the major use cases for 5G and beyond systems: in these cases, the massive amounts of sensory information that need to be shared over the wireless medium may overload even high-capacity connections. Consequently, solving the effective communication problem by optimizing the transmission strategy to discard irrelevant information can provide a significant advantage, but is often a very complex task. In this work, we consider a prototypical system in which an observer must communicate its sensory data to a robot controlling a task (e.g., a mobile robot in a factory). We then model it as a remote Partially Observable Markov Decision Process (POMDP), considering the effect of adopting semantic and effective communication-oriented solutions on the overall system performance. We split the communication problem by considering an ensemble Vector Quantized Variational Autoencoder (VQ-VAE) encoding, and train a Deep Reinforcement Learning (DRL) agent to dynamically adapt the quantization level, considering both the current state of the environment and the memory of past messages. We tested the proposed approach on the well-known CartPole reference control problem, obtaining a significant performance increase over traditional approaches.

On the Energy Consumption of UAV Edge Computing in Non-Terrestrial Networks

Dec 20, 2023

Abstract: During the last few years, the use of Unmanned Aerial Vehicles (UAVs) equipped with sensors and cameras has emerged as a cutting-edge technology to provide services such as surveillance, infrastructure inspections, and target acquisition. However, this approach requires UAVs to process data onboard, mainly for person/object detection and recognition, which may pose significant energy constraints as UAVs are battery-powered. A possible solution is the support of Non-Terrestrial Networks (NTNs) for edge computing. In particular, UAVs can partially offload data (e.g., video acquisitions from onboard sensors) to more powerful upstream High Altitude Platforms (HAPs) or satellites acting as edge computing servers to increase the battery autonomy compared to local processing, albeit at the expense of some data transmission delays. Accordingly, in this study we model the energy consumption of UAVs, HAPs, and satellites considering the energy for data processing, offloading, and hovering. Then, we investigate whether data offloading can improve the system performance. Simulations demonstrate that edge computing can improve both UAV autonomy and end-to-end delay compared to onboard processing in many configurations.

Minimizing Energy Consumption for 5G NR Beam Management for RedCap Devices

Sep 26, 2023

Abstract: In 5G New Radio (NR), beam management entails periodic and continuous transmission and reception of control signals in the form of synchronization signal blocks (SSBs), used to perform initial access and/or channel estimation. However, this procedure demands continuous energy consumption, which is particularly challenging to handle for low-cost, low-complexity, and battery-constrained devices, such as RedCap devices designed to support mid-market Internet of Things (IoT) use cases. In this context, this work aims to reduce the energy consumption during beam management for RedCap devices, while ensuring that the desired Quality of Service (QoS) requirements are met. To do so, we formalize an optimization problem in an Indoor Factory (InF) scenario to select the best beam management parameters, including the beam update periodicity and the beamwidth, to minimize energy consumption based on users' distribution and their speed. The analysis yields the regions of feasibility, i.e., the upper limit(s) on the beam management parameters for RedCap devices, that we use to provide design guidelines accordingly.

Modeling Interference for the Coexistence of 6G Networks and Passive Sensing Systems

Aug 07, 2023

Abstract: Future wireless networks and sensing systems will benefit from access to large chunks of spectrum above 100 GHz, to achieve terabit-per-second data rates in 6th Generation (6G) cellular systems and improve accuracy and reach of Earth exploration and sensing and radio astronomy applications. These are extremely sensitive to interference from artificial signals, thus the spectrum above 100 GHz features several bands which are protected from active transmissions under current spectrum regulations. To provide more agile access to the spectrum for both services, active and passive users will have to coexist without harming passive sensing operations. In this paper, we provide the first, fundamental analysis of Radio Frequency Interference (RFI) that large-scale terrestrial deployments introduce in different satellite sensing systems now orbiting the Earth. We develop a geometry-based analysis and extend it into a data-driven model which accounts for realistic propagation, building obstruction, and ground reflection, for network topologies with up to $10^5$ nodes in more than $85$ km$^2$. We show that the presence of harmful RFI depends on several factors, including network load, density and topology, satellite orientation, and building density. The results and methodology provide the foundation for the development of coexistence solutions and spectrum policy towards 6G.

Communication-Efficient Federated Learning through Importance Sampling

Jun 25, 2023

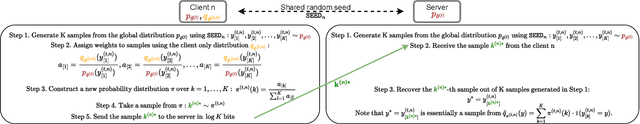

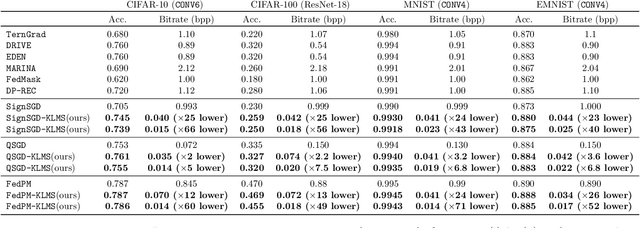

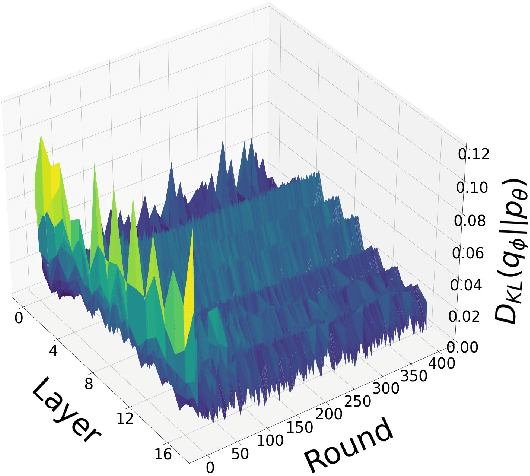

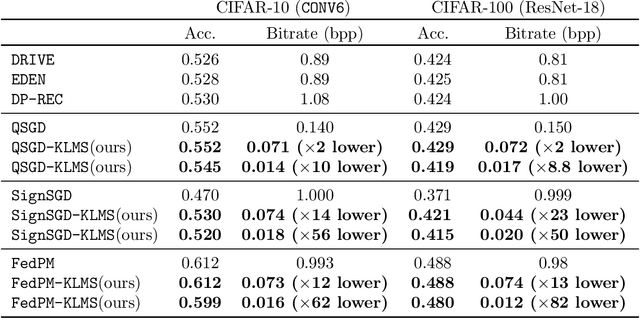

Abstract: The high communication cost of sending model updates from the clients to the server is a significant bottleneck for scalable federated learning (FL). Among existing approaches, state-of-the-art bitrate-accuracy tradeoffs have been achieved using stochastic compression methods, in which the client $n$ sends a sample from a client-only probability distribution $q_{\phi^{(n)}}$, and the server estimates the mean of the clients' distributions using these samples. However, such methods do not take full advantage of the FL setup where the server, throughout the training process, has side information in the form of a pre-data distribution $p_{\theta}$ that is close to the client's distribution $q_{\phi^{(n)}}$ in Kullback-Leibler (KL) divergence. In this work, we exploit this closeness between the clients' distributions $q_{\phi^{(n)}}$ and the side information $p_{\theta}$ at the server, and propose a framework that requires approximately $D_{\mathrm{KL}}(q_{\phi^{(n)}} \| p_{\theta})$ bits of communication. We show that our method can be integrated into many existing stochastic compression frameworks such as FedPM, Federated SGLD, and QSGD to attain the same (and often higher) test accuracy with up to $50$ times reduction in the bitrate.
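To give a feel for the quantity $D_{\mathrm{KL}}(q \| p)$ that the abstract above relates to the communication cost, the sketch below computes the KL divergence in bits between two product-Bernoulli distributions. This is only an illustration under stated assumptions: the product-Bernoulli form, the function name `bernoulli_kl_bits`, and the example values are all hypothetical and are not taken from the paper, and the sketch does not implement the paper's compression scheme.

```python
import math
from typing import Sequence


def _kl_term_bits(a: float, b: float) -> float:
    """One a*log2(a/b) term, using the convention 0*log(0) = 0."""
    if a == 0.0:
        return 0.0
    return a * math.log2(a / b)


def bernoulli_kl_bits(q: Sequence[float], p: Sequence[float]) -> float:
    """KL divergence D(q || p) in bits for independent Bernoulli parameters.

    q: client-side parameters (assumed example), each in [0, 1].
    p: server-side parameters (assumed example), each strictly in (0, 1).
    """
    if len(q) != len(p):
        raise ValueError("q and p must have the same length")
    total = 0.0
    for qi, pi in zip(q, p):
        if not 0.0 <= qi <= 1.0:
            raise ValueError("each q must lie in [0, 1]")
        if not 0.0 < pi < 1.0:
            raise ValueError("each p must lie strictly in (0, 1)")
        total += _kl_term_bits(qi, pi) + _kl_term_bits(1.0 - qi, 1.0 - pi)
    return total


if __name__ == "__main__":
    server_side = [0.50, 0.50, 0.50]
    close_client = [0.55, 0.45, 0.50]
    far_client = [0.95, 0.05, 0.90]
    print(f"close: {bernoulli_kl_bits(close_client, server_side):.4f} bits")
    print(f"far:   {bernoulli_kl_bits(far_client, server_side):.4f} bits")
```

The closer the client's parameters are to the server's side information, the smaller the divergence, which matches the intuition in the abstract that closeness can be exploited to reduce the bitrate.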

Downlink Clustering-Based Scheduling of IRS-Assisted Communications With Reconfiguration Constraints

May 23, 2023

Abstract: Intelligent reflecting surfaces (IRSs) are being widely investigated as a potential low-cost and energy-efficient alternative to active relays for improving coverage in next-generation cellular networks. However, technical constraints in the configuration of IRSs should be taken into account in the design of scheduling solutions and the assessment of their performance. To this end, we examine an IRS-assisted time division multiple access (TDMA) cellular network where the reconfiguration of the IRS incurs a communication cost; thus, we aim at limiting the number of reconfigurations over time. Along these lines, we propose a clustering-based heuristic scheduling scheme that maximizes the cell sum capacity, subject to a fixed number of reconfigurations within a TDMA frame. First, the best configuration of each user equipment (UE), in terms of joint beamforming and optimal IRS configuration, is determined using an iterative algorithm. Then, we propose different clustering techniques to divide the UEs into subsets sharing the same sub-optimal IRS configuration, derived through distance- and capacity-based algorithms. Finally, UEs within the same cluster are scheduled accordingly. We provide extensive numerical results for different propagation scenarios, IRS sizes, and phase-shifter quantization constraints, showing the effectiveness of our approach in supporting multi-user IRS systems with practical constraints.

Semantic Communication of Learnable Concepts

May 14, 2023

Abstract: We consider the problem of communicating a sequence of concepts, i.e., unknown and potentially stochastic maps, which can be observed only through examples, i.e., the mapping rules are unknown. The transmitter applies a learning algorithm to the available examples, and extracts knowledge from the data by optimizing a probability distribution over a set of models, i.e., known functions, which can better describe the observed data, and so potentially the underlying concepts. The transmitter then needs to communicate the learned models to a remote receiver through a rate-limited channel, to allow the receiver to decode the models that can describe the underlying sampled concepts as accurately as possible in their semantic space. After motivating our analysis, we propose the formal problem of communicating concepts, and provide its rate-distortion characterization, pointing out its connection with the concepts of empirical and strong coordination in a network. We also provide a bound for the distortion-rate function.
