Takayuki Shimizu

Toyota Motor North America, Mountain View, CA, USA

Sensing-Based Beamformed Resource Allocation in Standalone Millimeter-Wave Vehicular Networks

Mar 19, 2025

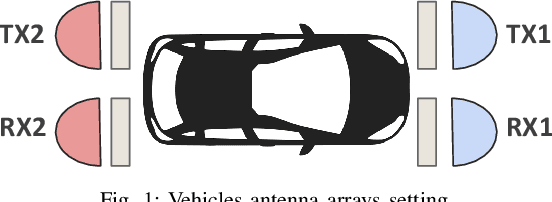

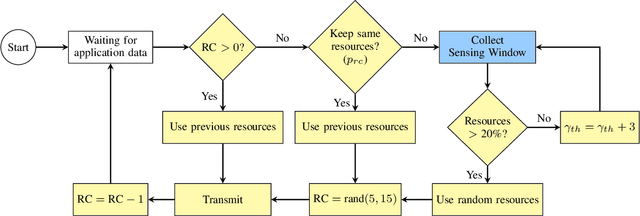

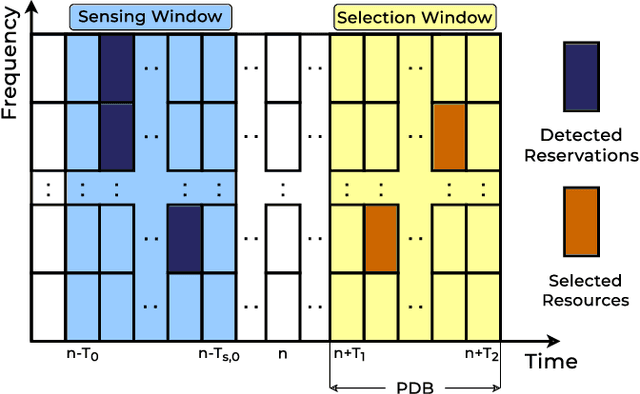

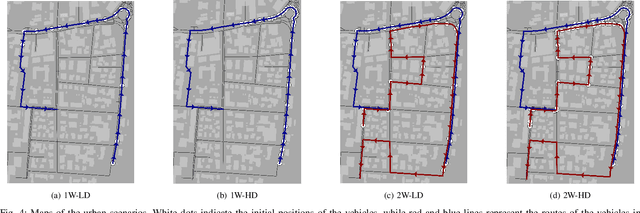

Abstract: In 3GPP New Radio (NR) Vehicle-to-Everything (V2X), the new standard for next-generation vehicular networks, vehicles can autonomously select sidelink resources for data transmission, which permits network operations without cellular coverage. However, standalone resource allocation is uncoordinated, and is complicated by the high mobility of the nodes that may introduce unforeseen channel collisions (e.g., when a transmitting vehicle changes path) or free up resources (e.g., when a vehicle moves outside of the communication area). Moreover, unscheduled resource allocation is prone to the hidden node and exposed node problems, which are particularly critical considering directional transmissions. In this paper, we implement and demonstrate a new channel access scheme for NR V2X in Frequency Range 2 (FR2), i.e., at millimeter wave (mmWave) frequencies, based on directional and beamformed transmissions along with Sidelink Control Information (SCI) to select resources for transmission. We show via simulation that this approach can reduce the probability of collision for resource allocation, compared to a baseline solution that does not configure SCI transmissions.
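The idea of using decoded SCI to avoid reserved resources can be illustrated with a minimal sketch. This is not the scheme from the paper: the resource representation as `(slot, subchannel)` pairs, the `beam` field in each SCI record, the function name `select_resource`, and the fallback policy are all assumptions made for illustration.

```python
import random

def select_resource(candidates, decoded_sci, my_beam, rng=random):
    """Pick a (slot, subchannel) resource not reserved on our beam by any decoded SCI.

    All field names here are hypothetical; they are not taken from the 3GPP
    specification or from the paper.
    """
    reserved = {
        (sci["slot"], sci["subchannel"])
        for sci in decoded_sci
        if sci["beam"] == my_beam  # only reservations heard on the beam we intend to use
    }
    free = [r for r in candidates if r not in reserved]
    # Assumed policy: if every candidate is reserved, fall back to a random candidate.
    return rng.choice(free if free else list(candidates))
```

A baseline without SCI would correspond to calling the function with an empty `decoded_sci` list, in which case the choice is uniformly random over all candidates.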

A Hybrid Model/Data-Driven Solution to Channel, Position and Orientation Tracking in mmWave Vehicular Systems

Mar 07, 2025

Abstract: Channel tracking in millimeter wave (mmWave) vehicular systems is crucial for maintaining robust vehicle-to-infrastructure (V2I) communication links, which can be leveraged to achieve high-accuracy vehicle position and orientation tracking as a byproduct of communication. While prior work tends to simplify the system model by omitting critical system factors such as clock offsets, filtering effects, antenna array orientation offsets, and channel estimation errors, we address the challenges of a practical mmWave multiple-input multiple-output (MIMO) communication system between a single base station (BS) and a vehicle while tracking the vehicle's position and orientation (PO) considering realistic driving behaviors. We first develop a channel tracking algorithm based on multidimensional orthogonal matching pursuit (MOMP) with factoring (F-MOMP) to reduce computational complexity and enable high-resolution channel estimates during the tracking stage, suitable for PO estimation. Then, we develop a network called VO-ChAT (Vehicle Orientation-Channel Attention for orientation Tracking), which processes the channel estimate sequence for orientation prediction. Afterward, a weighted least squares (WLS) problem that exploits the channel geometry is formulated to create an initial estimate of the vehicle's 2D position. A second network named VP-ChAT (Vehicle Position-Channel Attention for position Tracking) refines the geometric position estimate. VP-ChAT is a Transformer-inspired network that processes the historical channel and position estimates to provide the correction for the initial geometric position estimate. The proposed solution is evaluated using ray-tracing-generated channels in an urban canyon environment. For 80% of the cases, it achieves a 2D position tracking accuracy of 26 cm while orientation errors are kept below 0.5 degrees.
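To give a feel for what a geometric WLS position estimate looks like, here is a generic sketch. The abstract does not state the paper's actual formulation, so the setup below is an assumption: each measurement is a bearing angle from a known 2D anchor point (for example, the BS or a reflection point) toward the vehicle, and the hypothetical function `wls_position` finds the weighted least-squares intersection of the resulting lines.

```python
import numpy as np

def wls_position(anchors, bearings, weights):
    """Weighted least-squares intersection of 2D bearing lines through known anchors.

    anchors:  (N, 2) known points, bearings: (N,) angles in radians from each
    anchor toward the unknown position, weights: (N,) non-negative confidences.
    """
    anchors = np.asarray(anchors, dtype=float)
    bearings = np.asarray(bearings, dtype=float)
    # Unit normal of each bearing line; a point p on line i satisfies n_i . p = n_i . a_i.
    normals = np.column_stack([-np.sin(bearings), np.cos(bearings)])
    rhs = np.sum(normals * anchors, axis=1)
    # Scaling rows by sqrt(weight) makes ordinary least squares minimize the weighted residual.
    w = np.sqrt(np.asarray(weights, dtype=float))
    position, *_ = np.linalg.lstsq(normals * w[:, None], rhs * w, rcond=None)
    return position
```

In this sketch the weights would typically reflect how much each path estimate is trusted, for instance giving the LOS path more influence than reflections; that choice is also an assumption rather than something stated in the abstract.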

OOSTraj: Out-of-Sight Trajectory Prediction With Vision-Positioning Denoising

Apr 02, 2024

Abstract: Trajectory prediction is fundamental in computer vision and autonomous driving, particularly for understanding pedestrian behavior and enabling proactive decision-making. Existing approaches in this field often assume precise and complete observational data, neglecting the challenges associated with out-of-view objects and the noise inherent in sensor data due to limited camera range, physical obstructions, and the absence of ground truth for denoised sensor data. Such oversights are critical safety concerns, as they can result in missing essential, non-visible objects. To bridge this gap, we present a novel method for out-of-sight trajectory prediction that leverages a vision-positioning technique. Our approach denoises noisy sensor observations in an unsupervised manner and precisely maps sensor-based trajectories of out-of-sight objects into visual trajectories. This method has demonstrated state-of-the-art performance in out-of-sight noisy sensor trajectory denoising and prediction on the Vi-Fi and JRDB datasets. By enhancing trajectory prediction accuracy and addressing the challenges of out-of-sight objects, our work significantly contributes to improving the safety and reliability of autonomous driving in complex environments. Our work represents the first initiative towards Out-Of-Sight Trajectory prediction (OOSTraj), setting a new benchmark for future research. The code is available at https://github.com/Hai-chao-Zhang/OOSTraj.

Layout Sequence Prediction From Noisy Mobile Modality

Oct 09, 2023

Abstract: Trajectory prediction plays a vital role in understanding pedestrian movement for applications such as autonomous driving and robotics. Current trajectory prediction models depend on long, complete, and accurately observed sequences from visual modalities. Nevertheless, real-world situations often involve obstructed cameras, missed objects, or objects out of sight due to environmental factors, leading to incomplete or noisy trajectories. To overcome these limitations, we propose LTrajDiff, a novel approach that treats objects obstructed or out of sight as equally important as those with fully visible trajectories. LTrajDiff utilizes sensor data from mobile phones to surmount out-of-sight constraints, albeit introducing new challenges such as modality fusion, noisy data, and the absence of spatial layout and object size information. We employ a denoising diffusion model to predict precise layout sequences from noisy mobile data using a coarse-to-fine diffusion strategy, incorporating the RMS, Siamese Masked Encoding Module, and MFM. Our model predicts layout sequences by implicitly inferring object size and projection status from a single reference timestamp or significantly obstructed sequences. Achieving SOTA results in randomly obstructed experiments and extremely short input experiments, our model illustrates the effectiveness of leveraging noisy mobile data. In summary, our approach offers a promising solution to the challenges faced by layout sequence and trajectory prediction models in real-world settings, paving the way for utilizing sensor data from mobile phones to accurately predict pedestrian bounding box trajectories. To the best of our knowledge, this is the first work that addresses severely obstructed and extremely short layout sequences by combining vision with noisy mobile modality, making it the pioneering work in the field of layout sequence trajectory prediction.

Sparse Recovery with Attention: A Hybrid Data/Model Driven Solution for High Accuracy Position and Channel Tracking at mmWave

Aug 26, 2023

Abstract: In this paper, we first propose a mmWave channel tracking algorithm based on the multidimensional orthogonal matching pursuit (MOMP) algorithm using reduced sparsifying dictionaries, which exploits information from channel estimates in previous frames. Then, we present an algorithm to obtain the vehicle's initial location for the current frame by solving a system of geometric equations that leverage the estimated path parameters. Next, we design an attention network that analyzes the series of channel estimates, the vehicle's trajectory, and the initial estimate of the position associated with the current frame, to generate a refined, high-accuracy position estimate. The proposed system is evaluated through numerical experiments using realistic mmWave channel series generated by ray tracing. The experimental results show that our system provides a 2D position tracking error below 20 cm, significantly outperforming previous work based on Bayesian filtering.
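For readers unfamiliar with matching pursuit, the following is a minimal sketch of standard single-dictionary orthogonal matching pursuit (OMP). It is not the multidimensional variant (MOMP) or the reduced-dictionary tracking scheme described above; the function name `omp` and its arguments are illustrative assumptions.

```python
import numpy as np

def omp(dictionary, y, sparsity):
    """Greedy OMP: approximate y using at most `sparsity` columns of `dictionary`."""
    residual = y.copy()
    support = []
    coeffs = np.zeros(0)
    for _ in range(sparsity):
        # Pick the atom most correlated with the current residual.
        correlations = np.abs(dictionary.conj().T @ residual)
        if support:
            correlations[support] = 0.0  # never reselect an atom
        support.append(int(np.argmax(correlations)))
        # Re-fit all selected atoms jointly by least squares.
        coeffs, *_ = np.linalg.lstsq(dictionary[:, support], y, rcond=None)
        residual = y - dictionary[:, support] @ coeffs
    x = np.zeros(dictionary.shape[1], dtype=coeffs.dtype)
    x[support] = coeffs
    return x, support
```

In a channel estimation setting, each dictionary column would correspond to a candidate path parameter (for example, an angle or delay on a grid), so the selected support indicates which paths are present. Restricting the dictionary to columns near the previous frame's estimates is one plausible reading of "reduced sparsifying dictionaries", but that interpretation is an assumption here.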

Learning to Localize with Attention: from sparse mmWave channel estimates from a single BS to high accuracy 3D location

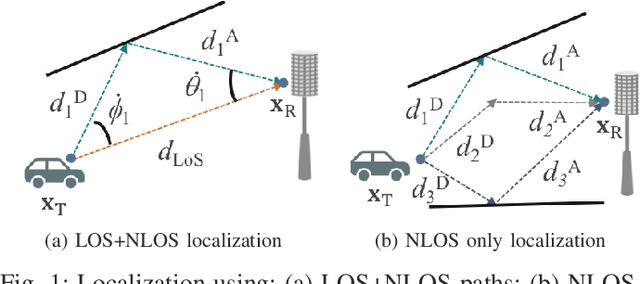

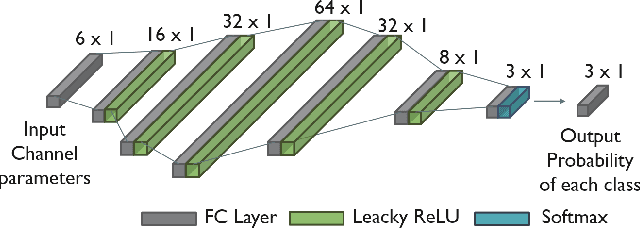

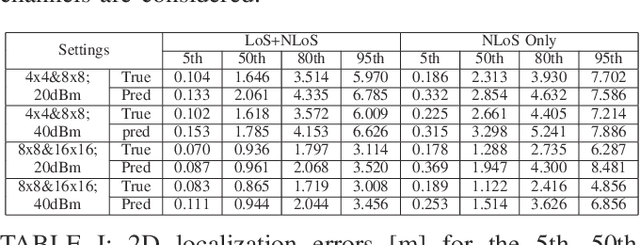

Jun 30, 2023

Abstract: One strategy to obtain user location information in a wireless network operating at millimeter wave (mmWave) is based on the exploitation of the geometric relationships between the channel parameters and the user position. These relationships can be easily built from the line-of-sight (LOS) path and/or first-order reflections, but high-resolution channel estimates are required for high accuracy. In this paper, we consider a mmWave MIMO system based on a hybrid architecture, and first develop a low-complexity channel estimation strategy based on MOMP suitable for high-dimensional channels, such as those associated with large planar arrays. Then, a deep neural network (DNN) called PathNet is designed to classify the order of the estimated channel paths, so that only the LOS path and first-order reflections are selected for localization purposes. Next, a 3D localization strategy exploiting the geometry of the environment is developed to operate in both LOS and non-line-of-sight (NLOS) conditions, while considering the unknown clock offset between the transmitter (TX) and the receiver (RX). Finally, a Transformer-based network exploiting attention mechanisms called ChanFormer is proposed to refine the initial position estimate obtained from the geometric system of equations that connects user position and channel parameters. Simulation results obtained with realistic vehicular channels generated by ray tracing indicate that sub-meter accuracy (<= 0.45 m) can be achieved for 95% of the users in LOS channels, and for 50% of the users in NLOS conditions.

Enabling NLoS LEO Satellite Communications with Reconfigurable Intelligent Surfaces

May 31, 2022

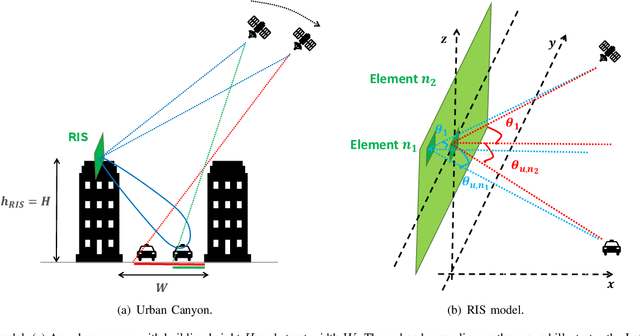

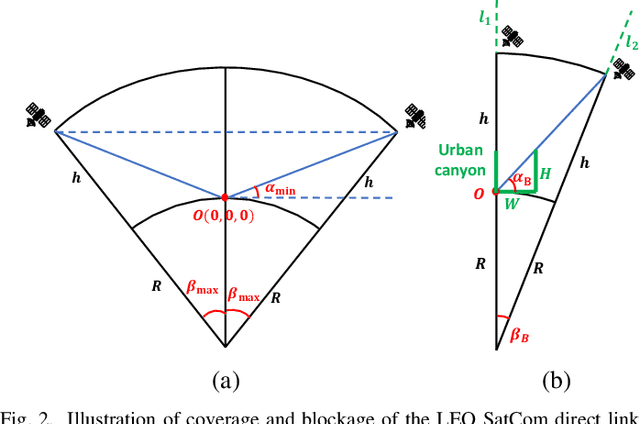

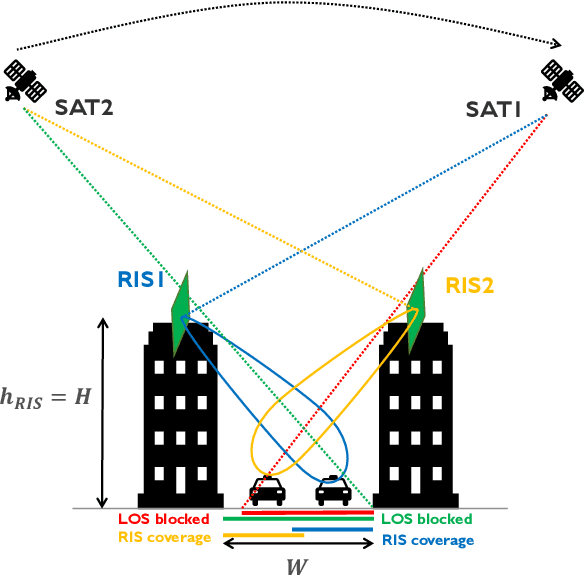

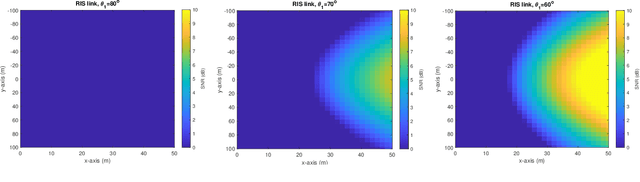

Abstract: Low Earth Orbit (LEO) satellite communications (SatCom) are considered a promising solution to provide uninterrupted services in cellular networks. Line-of-sight (LoS) links between the LEO satellites and the ground users are, however, easily blocked in urban scenarios. In this paper, we propose to enable LEO SatCom in non-line-of-sight (NLoS) channels, such as those corresponding to links to users in urban canyons, with the aid of reconfigurable intelligent surfaces (RISs). First, we derive the near-field signal model for the satellite-RIS-user link. Then, we propose two deployments to improve the coverage of a RIS-aided link: down-tilting the RIS located on the top of a building, and considering a deployment with RISs located on the top of opposite buildings. Simulation results show the effectiveness of using RISs in LEO SatCom to overcome blockages in urban canyons. Insights about the optimal tilt angle and the coverage extension provided by the deployment of an additional RIS are also provided.

Joint Initial Access and Localization in Millimeter Wave Vehicular Networks: a Hybrid Model/Data Driven Approach

Apr 04, 2022

Abstract: High-resolution compressive channel estimation provides information for vehicle localization when a hybrid mmWave MIMO system is considered. Complexity and memory requirements can, however, become a bottleneck when high-accuracy localization is required. An additional challenge is the need for path order information to apply the appropriate geometric relationships between the channel path parameters and the vehicle, RSU, and scatterer positions. In this paper, we propose a low-complexity channel estimation strategy of the angle of departure and time difference of arrival based on multidimensional orthogonal matching pursuit. We also design a deep neural network that predicts the order of the channel paths so only the LoS and first-order reflections are used for localization. Simulation results obtained with realistic vehicular channels generated by ray tracing show that sub-meter accuracy can be achieved for 50% of the users, without resorting to perfect synchronization assumptions or unfeasible all-digital high-resolution MIMO architectures.

Adaptive Neural Network-based OFDM Receivers

Mar 25, 2022

Abstract: We propose and examine the idea of continuously adapting state-of-the-art neural network (NN)-based orthogonal frequency-division multiplexing (OFDM) receivers to current channel conditions. This online adaptation via retraining is mainly motivated by two reasons: First, receiver design typically focuses on the universal optimal performance for a wide range of possible channel realizations. However, in actual applications and within short time intervals, only a subset of these channel parameters is likely to occur, as macro parameters, e.g., the maximum channel delay, can be assumed to be static. Second, in-the-field alterations like temporal interferences or other conditions out of the originally intended specifications can occur in a practical (real-world) transmission. While conventional (filter-based) systems would require reconfiguration or additional signal processing to cope with these unforeseen conditions, NN-based receivers can learn to mitigate previously unseen effects even after their deployment. For this, we showcase on-the-fly adaptation to current channel conditions and temporal alterations solely based on recovered labels from an outer forward error correction (FEC) code without any additional piloting overhead. To underline the flexibility of the proposed adaptive training, we showcase substantial gains for scenarios with static channel macro parameters, for out-of-specification usage and for interference compensation.

Deep Learning-based Link Configuration for Radar-aided Multiuser mmWave Vehicle-to-Infrastructure Communication

Jan 12, 2022

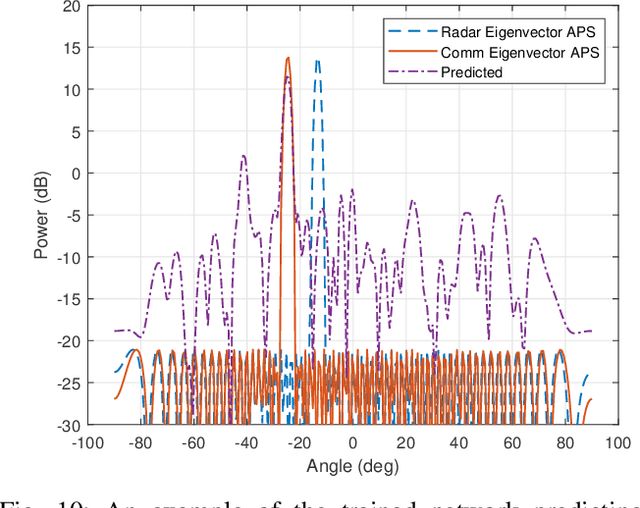

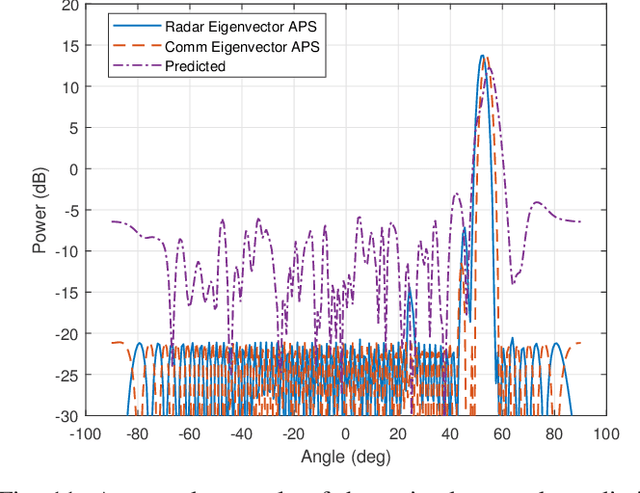

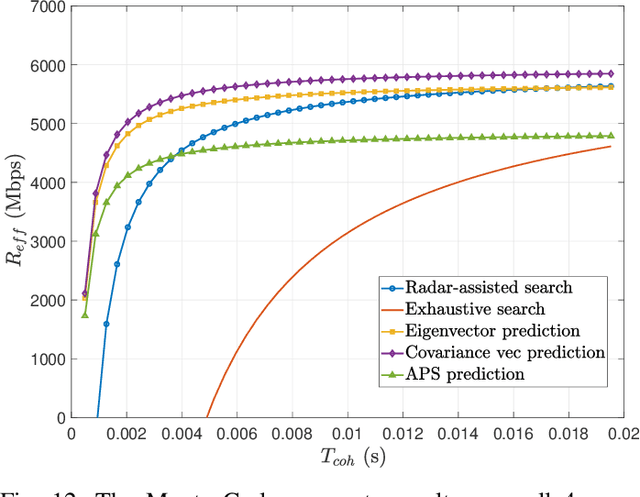

Abstract: Configuring millimeter wave links following a conventional beam training protocol, such as the one proposed in the current cellular standard, introduces a large communication overhead, which is especially relevant in vehicular systems, where the channels are highly dynamic. In this paper, we propose the use of a passive radar array to sense automotive radar transmissions coming from multiple vehicles on the road, and a radar processing chain that provides information about a reduced set of candidate beams for the links between the road infrastructure and each one of the vehicles. This prior information can be later leveraged by the beam training protocol to significantly reduce overhead. The radar processing chain estimates both the timing and chirp rates of the radar signals, isolates the individual signals by filtering out interfering radar chirps, and estimates the spatial covariance of each individual radar transmission. Then, a deep network is used to translate features of these radar spatial covariances into features of the communication spatial covariances, by learning the intricate mapping between radar and communication channels, in both line-of-sight (LOS) and non-line-of-sight (NLOS) settings. The communication rates and outage probabilities of this approach are compared against exhaustive search and pure radar-aided beam training methods (without deep learning-based mapping), and evaluated on multi-user channels simulated by ray tracing. Results show that: (i) the proposed processing chain can reliably isolate the spatial covariances for individual radars, and (ii) the radar-to-communications translation strategy based on deep learning provides a significant improvement over pure radar-aided methods in both LOS and NLOS channels.
