Nuria Gonzalez-Prelcic

The Role of ISAC in 6G Networks: Enabling Next-Generation Wireless Systems

Oct 06, 2025

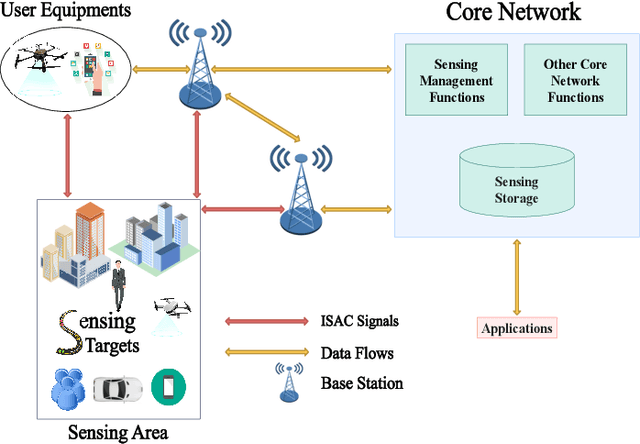

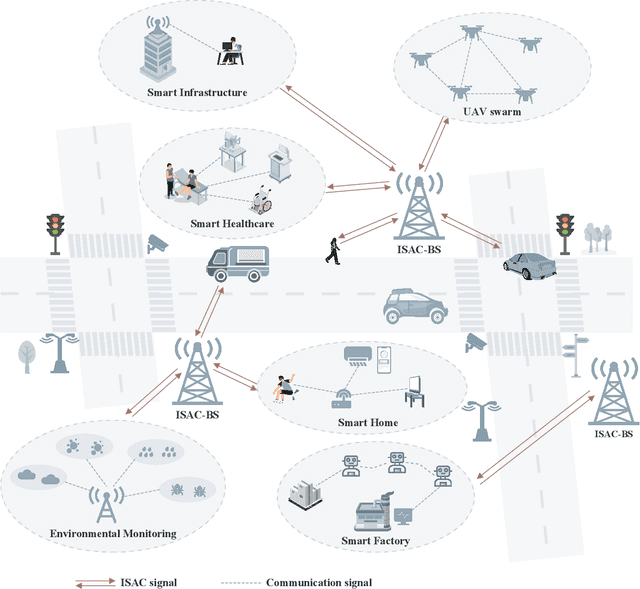

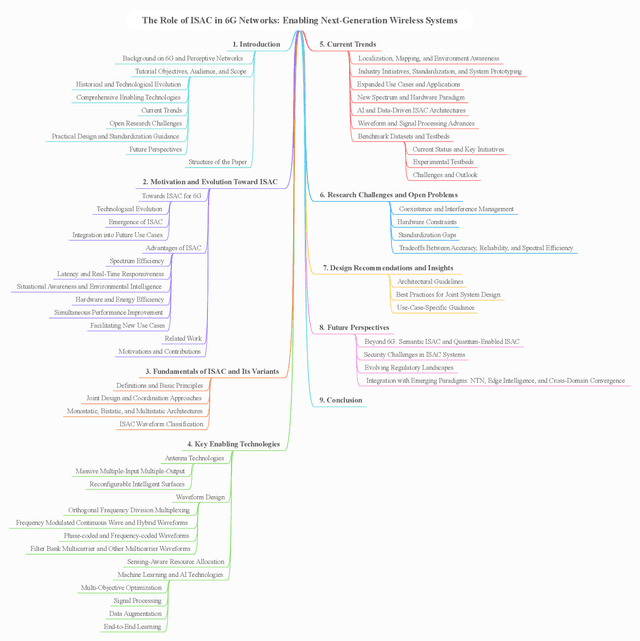

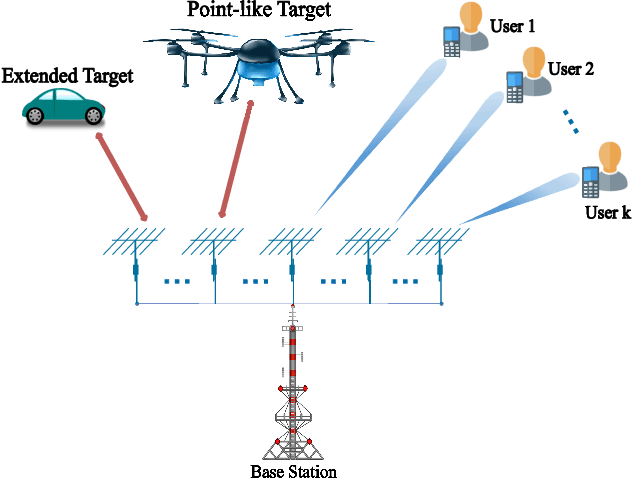

Abstract: The arrival of sixth-generation (6G) wireless networks represents a fundamental shift in the integration of communication and sensing technologies to support next-generation applications. Integrated sensing and communication (ISAC) is a key concept in this evolution, enabling end-to-end support for both communication and sensing within a unified framework. It enhances spectrum efficiency, reduces latency, and supports diverse use cases, including smart cities, autonomous systems, and perceptive environments. This tutorial provides a comprehensive overview of ISAC's role in 6G networks, beginning with its evolution since 5G and the technical drivers behind its adoption. Core principles and system variations of ISAC are introduced, followed by an in-depth discussion of the enabling technologies that facilitate its practical deployment. The paper further analyzes current research directions to highlight key challenges, open issues, and emerging trends. Design insights and recommendations are also presented to support future development and implementation. This work ultimately tries to address three central questions: Why is ISAC essential for 6G? What innovations does it bring? How will it shape the future of wireless communication?

Enabling NLoS LEO Satellite Communications with Reconfigurable Intelligent Surfaces

May 31, 2022

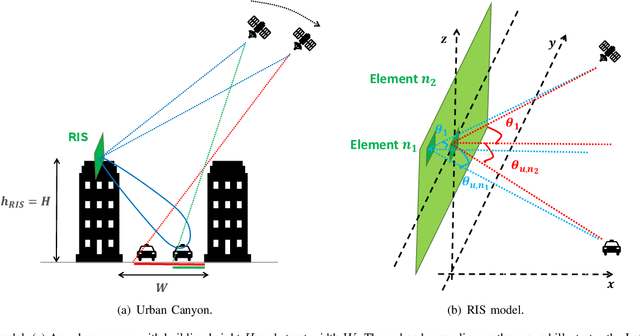

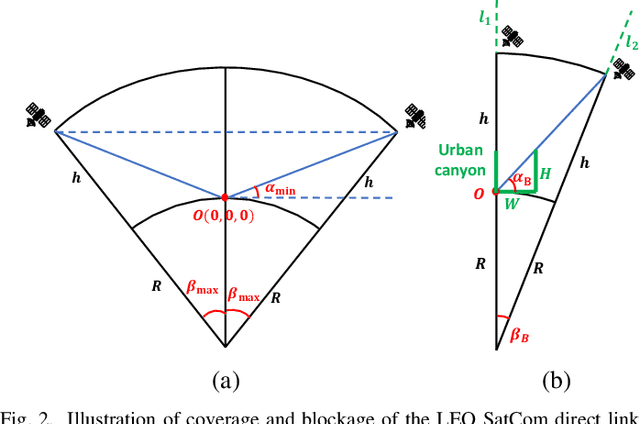

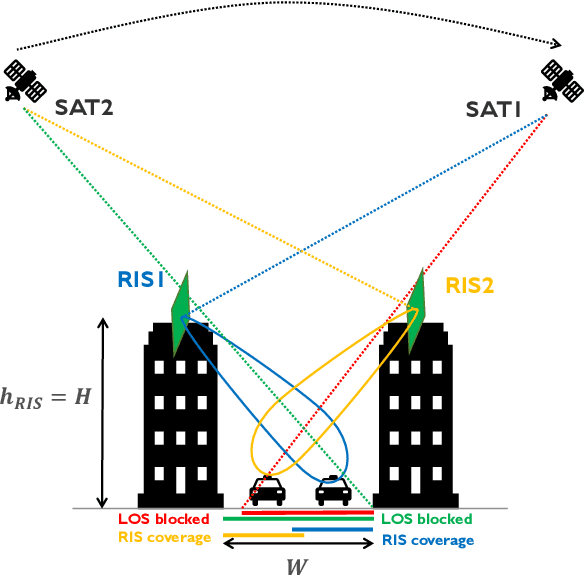

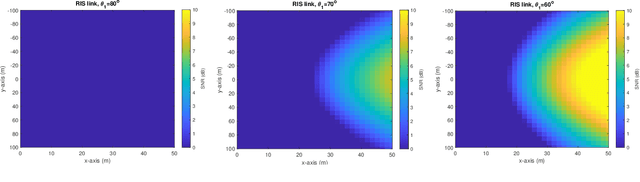

Abstract: Low Earth Orbit (LEO) satellite communications (SatCom) are considered a promising solution to provide uninterrupted services in cellular networks. Line-of-sight (LoS) links between the LEO satellites and the ground users are, however, easily blocked in urban scenarios. In this paper, we propose to enable LEO SatCom in non-line-of-sight (NLoS) channels, such as those corresponding to links to users in urban canyons, with the aid of reconfigurable intelligent surfaces (RISs). First, we derive the near-field signal model for the satellite-RIS-user link. Then, we propose two deployments to improve the coverage of a RIS-aided link: down-tilting the RIS located on the top of a building, and placing RISs on the tops of opposite buildings. Simulation results show the effectiveness of using RISs in LEO SatCom to overcome blockages in urban canyons. Insights about the optimal tilt angle and the coverage extension provided by the deployment of an additional RIS are also provided.

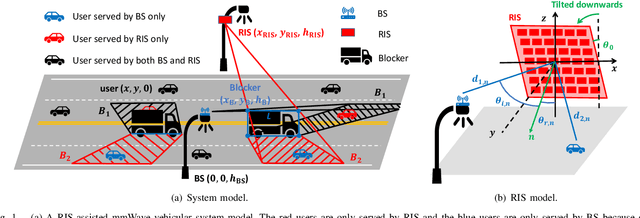

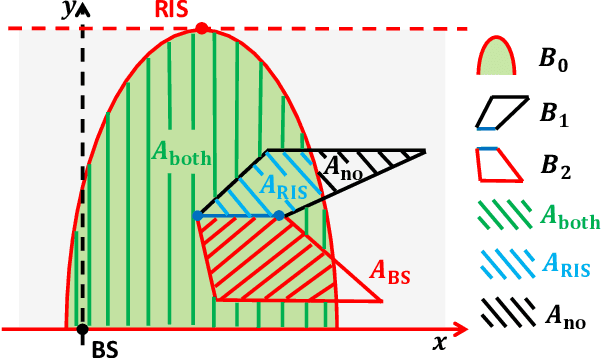

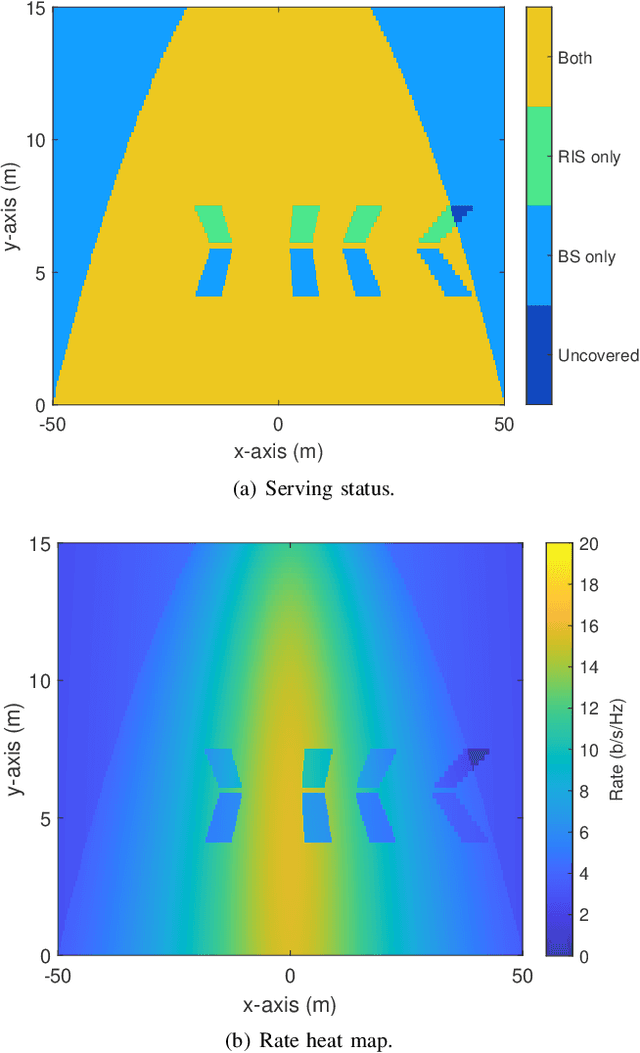

Optimizing the Deployment of Reconfigurable Intelligent Surfaces in MmWave Vehicular Systems

May 31, 2022

Abstract: Millimeter wave (mmWave) systems are vulnerable to blockages, which cause signal drops and link outages. One solution is to deploy reconfigurable intelligent surfaces (RISs) to add a strong non-line-of-sight path from the transmitter to the receiver. To achieve the best performance, the location of the deployed RIS should be optimized for a given site, considering the distribution of potential users and possible blockers. In this paper, we find the optimal location, height and downtilt of a RIS working in a realistic vehicular scenario. Because of the proximity between the RIS and the vehicles, and the large electrical size of the RIS, we consider a 3D geometry including the elevation angle and near-field beamforming. We provide results on RIS configuration in terms of both coverage ratio and area-averaged rate. We find that the optimized RIS improves the area-averaged rate by fifty percent over the case without a RIS, and further improves the coverage ratio.
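To make the idea of near-field beamforming at a RIS concrete, the following is a minimal sketch and not the method of the paper. It assumes unit-amplitude spherical-wave propagation, so each element's phase is set from its exact distances to the transmitter and receiver rather than from a single far-field angle. The function names, the 8x8 half-wavelength array, and the positions are hypothetical choices for illustration only.

```python
import cmath
import math


def focusing_phases(elements, tx, rx, wavelength):
    """Per-element phase shifts that co-phase the tx -> element -> rx paths.

    Assumption: spherical wavefronts with unit amplitude on every path.
    """
    k = 2.0 * math.pi / wavelength  # wavenumber
    return [
        (k * (math.dist(p, tx) + math.dist(p, rx))) % (2.0 * math.pi)
        for p in elements
    ]


def cascaded_gain(elements, tx, rx, wavelength, phases):
    """Magnitude of the summed tx -> RIS -> rx paths (unit path amplitudes)."""
    k = 2.0 * math.pi / wavelength
    total = sum(
        cmath.exp(1j * (phi - k * (math.dist(p, tx) + math.dist(p, rx))))
        for p, phi in zip(elements, phases)
    )
    return abs(total)


# Hypothetical geometry: an 8x8 RIS in the x-z plane with half-wavelength spacing.
wavelength = 0.0107  # metres, roughly 28 GHz
spacing = wavelength / 2.0
elements = [(ix * spacing, 0.0, 5.0 + iz * spacing)
            for ix in range(8) for iz in range(8)]
tx = (-20.0, 30.0, 10.0)  # hypothetical transmitter position
rx = (3.0, 4.0, 1.5)      # hypothetical nearby vehicle antenna

phases = focusing_phases(elements, tx, rx, wavelength)
focused = cascaded_gain(elements, tx, rx, wavelength, phases)
unfocused = cascaded_gain(elements, tx, rx, wavelength, [0.0] * len(elements))
```

Under these assumptions the focused gain equals the number of elements, because every path adds coherently, while an unconfigured surface can never exceed that value. A realistic model would also include per-path amplitudes and element radiation patterns.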

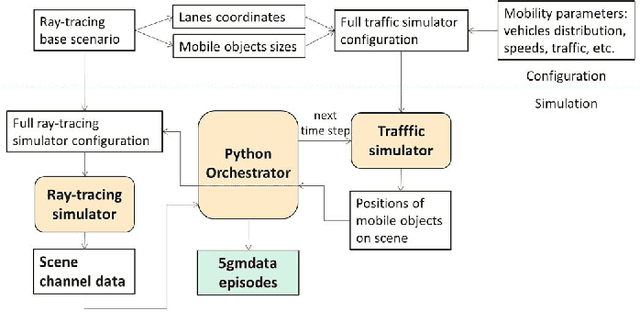

5G MIMO Data for Machine Learning: Application to Beam-Selection using Deep Learning

Jun 09, 2021

Abstract: The increasing complexity of configuring cellular networks suggests that machine learning (ML) can effectively improve 5G technologies. Deep learning has proven successful in ML tasks such as speech processing and computational vision, with a performance that scales with the amount of available data. The lack of large datasets inhibits the flourishing of deep learning applications in wireless communications. This paper presents a methodology that combines a vehicle traffic simulator with a ray-tracing simulator to generate channel realizations representing 5G scenarios with mobility of both transceivers and objects. The paper then describes a specific dataset for investigating beam-selection techniques for vehicle-to-infrastructure links using millimeter waves. Experiments using deep learning in classification, regression and reinforcement learning problems illustrate the use of datasets generated with the proposed methodology.

A hybrid beamforming design for massive MIMO LEO satellite communications

Apr 22, 2021

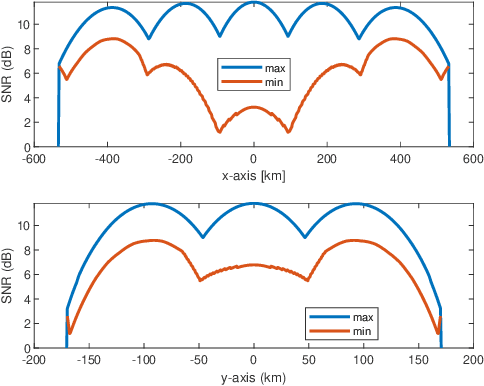

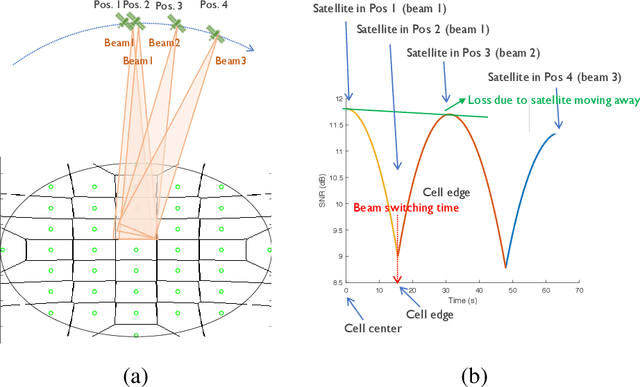

Abstract: 5G and future cellular networks intend to incorporate low Earth orbit (LEO) satellite communication (SatCom) systems to solve the coverage and availability problems that cannot be addressed by satellite-based or ground-based infrastructure alone. This integration of terrestrial and non-terrestrial networks poses many technical challenges which need to be identified and addressed. To this end, we design and simulate the downlink of a LEO SatCom system compatible with 5G NR, with a special focus on the design of the beamforming codebook at the satellite side. The performance of this approach is evaluated for the link between a LEO satellite and a mobile terminal in the Ku band, assuming a realistic channel model and commercial antenna array designs, both at the satellite and the terminal. Simulation results provide insights on open research challenges related to analog codebook design and hybrid beamforming strategies, the requirements on the terminal antennas to provide a given SNR, and the required beam reconfiguration capabilities, among others.

Fast Orthonormal Sparsifying Transforms Based on Householder Reflectors

Nov 24, 2016

Abstract: Dictionary learning is the task of determining a data-dependent transform that yields a sparse representation of some observed data. The dictionary learning problem is non-convex and is usually solved via computationally complex iterative algorithms. Furthermore, the resulting transforms generally lack structure that permits their fast application to data. To address this issue, this paper develops a framework for learning orthonormal dictionaries which are built from products of a few Householder reflectors. Two algorithms are proposed to learn the reflector coefficients: one that considers a sequential update of the reflectors and one with a simultaneous update of all reflectors that imposes an additional internal orthogonal constraint. The proposed methods have low computational complexity and are shown to converge to local minimum points which can be described in terms of the spectral properties of the matrices involved. The resulting dictionaries balance computational complexity against the quality of the sparse representations by controlling the number of Householder reflectors in their product. Simulations of the proposed algorithms are shown in the image processing setting, where well-known fast transforms are available for comparison. The proposed algorithms have favorable reconstruction error and the advantage of a fast implementation relative to classical unstructured dictionaries.
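As an illustration of why a dictionary built from a few Householder reflectors can be applied quickly, here is a minimal sketch. It does not implement the learning algorithms described in the abstract; it only shows that a product U = H_1 H_2 ... H_m, with each H_i = I - 2 u_i u_i^T / (u_i^T u_i), can be applied to a length-n vector in O(mn) operations without forming the n x n matrix. The function names and the example reflector vectors are hypothetical.

```python
import math


def apply_reflector(u, x):
    """Return (I - 2 u u^T / (u^T u)) x using O(n) operations."""
    scale = 2.0 * sum(ui * xi for ui, xi in zip(u, x)) / sum(ui * ui for ui in u)
    return [xi - scale * ui for ui, xi in zip(u, x)]


def apply_dictionary(reflectors, x):
    """Apply U = H_1 H_2 ... H_m to x; the rightmost reflector acts first."""
    for u in reversed(reflectors):
        x = apply_reflector(u, x)
    return x


def apply_transpose(reflectors, x):
    """Apply U^T = H_m ... H_1 to x (each reflector is symmetric)."""
    for u in reflectors:
        x = apply_reflector(u, x)
    return x


def norm(x):
    """Euclidean norm of a vector given as a list of floats."""
    return math.sqrt(sum(v * v for v in x))


# Hypothetical reflector vectors; in the paper's setting these would be learned.
reflectors = [[1.0, 2.0, 0.0, -1.0], [0.5, -1.0, 3.0, 2.0]]
x = [3.0, -1.0, 4.0, 2.0]

coeffs = apply_transpose(reflectors, x)         # analysis: U^T x
recovered = apply_dictionary(reflectors, coeffs)  # synthesis: U (U^T x)
```

Because every reflector is orthogonal, the product is orthonormal: the coefficients have the same norm as the input, and applying the dictionary to them recovers the input. The number of reflectors m sets the cost of each application, which is the complexity-versus-quality knob the abstract refers to.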
