Aldebaro Klautau

Accelerating Ray Tracing-Based Wireless Channels Generation for Real-Time Network Digital Twins

Apr 13, 2025

Abstract:Ray tracing (RT) simulation is a widely used approach for modeling wireless channels in applications such as network digital twins. However, the computational cost of RT grows with factors such as the level of detail of the adopted 3D scenario. This work proposes RT pre-processing algorithms that aim to simplify the 3D scene without distorting the channel. It also proposes a post-processing method that augments a set of RT results to achieve improved time resolution. These methods enable the use of RT in applications that rely on a detailed and photorealistic 3D scenario, while generating wireless channels that are consistent over time. Our simulation results with different 3D scenarios demonstrate that it is possible to reduce the simulation time by more than 50% without compromising the accuracy of the RT parameters.

Machine Learning-Based mmWave MIMO Beam Tracking in V2I Scenarios: Algorithms and Datasets

Dec 06, 2024

Abstract:This work investigates the use of machine learning (ML) applied to the beam tracking problem in 5G networks and beyond. The goal is to decrease the overhead associated with MIMO millimeter wave beamforming. In comparison to beam selection (also called initial beam acquisition), ML-based beam tracking is less investigated in the literature due to factors such as the lack of comprehensive datasets. One of the contributions of this work is a new public multimodal dataset, which includes images, LIDAR information and GNSS positioning, enabling the evaluation of new data fusion algorithms applied to wireless communications. The work also contributes an evaluation of the performance of beam tracking algorithms and the associated methodology. With the LIDAR data, the coordinates and the information from previously selected beams as inputs, the proposed deep neural network, based on ResNet and using LSTM layers, significantly outperformed the other beam tracking models.

* 5 pages, conference: 2024 IEEE Latin-American Conference on Communications (LATINCOM)

CAVIAR: Co-simulation of 6G Communications, 3D Scenarios and AI for Digital Twins

Jan 06, 2024

Abstract:Digital twins are an important technology for advancing mobile communications, especially in use cases that require simultaneously simulating the wireless channel, 3D scenes and machine learning. Aiming to meet this demand, this work describes a modular co-simulation methodology called CAVIAR. Here, CAVIAR is upgraded to support a message passing library and to enable the virtual counterpart of a digital twin system using different 6G-related simulators. The main contributions of this work are the detailed description of different CAVIAR architectures, the implementation of this methodology to assess a 6G use case of a UAV-based search and rescue (SAR) mission, and the generation of benchmarking data about computational resource usage. To execute the SAR co-simulation we adopt five open-source solutions: the physical and link level network simulator Sionna, the simulator for autonomous vehicles AirSim, scikit-learn for training a decision tree for MIMO beam selection, YOLOv8 for the detection of rescue targets and NATS for message passing. Results for the implemented SAR use case suggest that the methodology can run on a single machine, with the most demanded resources being CPU processing and GPU memory.

Simulation of machine learning-based 6G systems in virtual worlds

Apr 15, 2022

Abstract:Digital representations of the real world are being used in many applications, such as augmented reality. 6G systems will not only support use cases that rely on virtual worlds but also benefit from their rich contextual information to improve performance and reduce communication overhead. This paper focuses on the simulation of 6G systems that rely on a 3D representation of the environment, as captured by cameras and other sensors. We present new strategies for obtaining paired MIMO channels and multimodal data. We also discuss trade-offs between speed and accuracy when generating channels via ray tracing. We finally provide beam selection simulation results to assess the proposed methodology.

Generating MIMO Channels For 6G Virtual Worlds Using Ray-tracing Simulations

Jun 09, 2021

Abstract:Some 6G use cases include augmented reality and high-fidelity holograms, with this information flowing through the network. Hence, it is expected that 6G systems can feed machine learning algorithms with such context information to optimize communication performance. This paper focuses on the simulation of 6G MIMO systems that rely on a 3-D representation of the environment as captured by cameras and possibly other sensors. We present new and improved Raymobtime datasets, which consist of paired MIMO channels and multimodal data. We also discuss tradeoffs between speed and accuracy when generating channels via ray-tracing. Finally, we provide results of beam selection and channel estimation to assess the impact of the improvements in the ray-tracing simulation methodology.

5G MIMO Data for Machine Learning: Application to Beam-Selection using Deep Learning

Jun 09, 2021

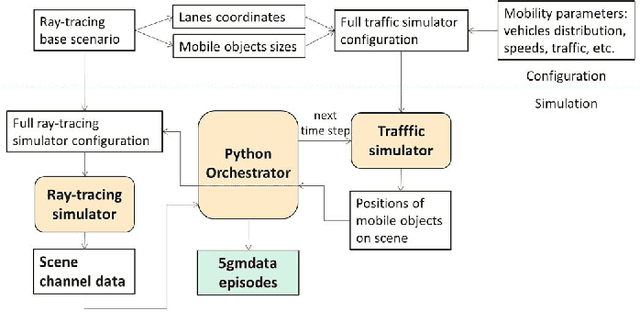

Abstract:The increasing complexity of configuring cellular networks suggests that machine learning (ML) can effectively improve 5G technologies. Deep learning has proven successful in ML tasks such as speech processing and computer vision, with a performance that scales with the amount of available data. The lack of large datasets inhibits the flourishing of deep learning applications in wireless communications. This paper presents a methodology that combines a vehicle traffic simulator with a ray-tracing simulator to generate channel realizations representing 5G scenarios with mobility of both transceivers and objects. The paper then describes a specific dataset for investigating beam-selection techniques in vehicle-to-infrastructure communications using millimeter waves. Experiments using deep learning in classification, regression and reinforcement learning problems illustrate the use of datasets generated with the proposed methodology.
