Marco Giordani

Department of Information Engineering, University of Padova, Italy

Teleoperated Driving: a New Challenge for 3D Object Detection in Compressed Point Clouds

Jun 13, 2025

Abstract:In recent years, the development of interconnected devices has expanded in many fields, from infotainment to education and industrial applications. This trend has been accelerated by the increased number of sensors and accessibility to powerful hardware and software. One area that significantly benefits from these advancements is Teleoperated Driving (TD). In this scenario, a controller safely drives a vehicle remotely, leveraging sensor data generated onboard the vehicle and exchanged via Vehicle-to-Everything (V2X) communications. In this work, we tackle the problem of detecting the presence of cars and pedestrians from point cloud data to enable safe TD operations. More specifically, we exploit the SELMA dataset, a multimodal, open-source, synthetic dataset for autonomous driving, which we expanded by including the ground-truth bounding boxes of 3D objects to support object detection. We analyze the performance of state-of-the-art compression algorithms and object detectors under several metrics, including compression efficiency, (de)compression and inference time, and detection accuracy. Moreover, we measure the impact of compression and detection on the V2X network in terms of data rate and latency with respect to 3GPP requirements for TD applications.

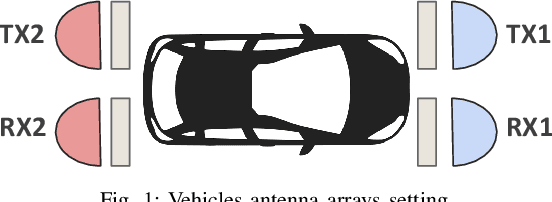

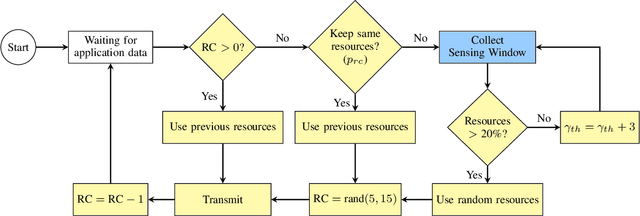

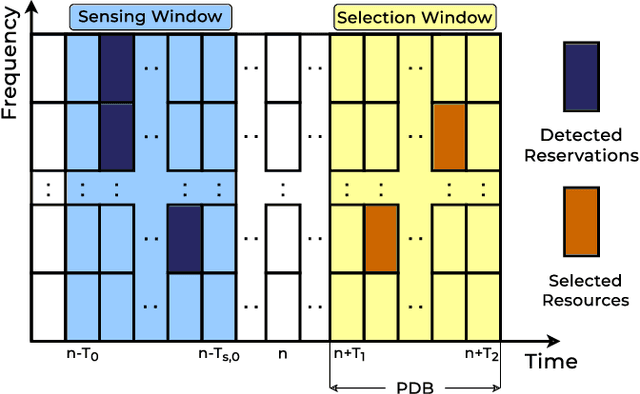

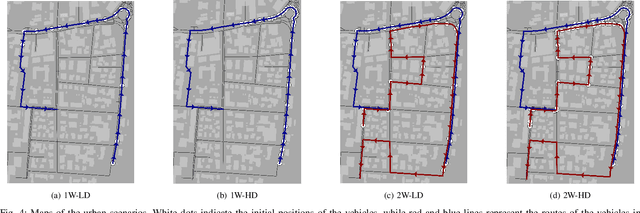

Sensing-Based Beamformed Resource Allocation in Standalone Millimeter-Wave Vehicular Networks

Mar 19, 2025

Abstract:In 3GPP New Radio (NR) Vehicle-to-Everything (V2X), the new standard for next-generation vehicular networks, vehicles can autonomously select sidelink resources for data transmission, which permits network operations without cellular coverage. However, standalone resource allocation is uncoordinated, and is complicated by the high mobility of the nodes that may introduce unforeseen channel collisions (e.g., when a transmitting vehicle changes path) or free up resources (e.g., when a vehicle moves outside of the communication area). Moreover, unscheduled resource allocation is prone to the hidden node and exposed node problems, which are particularly critical considering directional transmissions. In this paper, we implement and demonstrate a new channel access scheme for NR V2X in Frequency Range 2 (FR2), i.e., at millimeter wave (mmWave) frequencies, based on directional and beamformed transmissions along with Sidelink Control Information (SCI) to select resources for transmission. We prove via simulation that this approach can reduce the probability of collision for resource allocation, compared to a baseline solution that does not configure SCI transmissions.

Performance Evaluation of IoT LoRa Networks on Mars Through ns-3 Simulations

Dec 27, 2024

Abstract:In recent years, there has been a significant surge of interest in Mars exploration, driven by the planet's potential for human settlement and its proximity to Earth. In this paper, we explore the performance of the LoRaWAN technology on Mars, to study whether commercial off-the-shelf IoT products, designed and developed on Earth, can be deployed on the Martian surface. We use the ns-3 simulator to model various environmental conditions, primarily focusing on the Free Space Path Loss (FSPL) and the impact of Martian dust storms. Simulation results are given with respect to Earth, as a function of the distance, packet size, offered traffic, and the impact of Mars' atmospheric perturbations. We show that LoRaWAN can be a viable communication solution on Mars, although the performance is heavily affected by the extreme Martian environment over long distances.
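As a rough illustration of the FSPL term mentioned in the abstract above, the following minimal sketch evaluates the standard free-space path loss formula, FSPL(dB) = 20 log10(4πdf/c). It is not the paper's ns-3 model: the function name `fspl_db`, the 868 MHz carrier, and the sample distances are assumptions chosen only for the example, and dust storm attenuation is not modeled.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def fspl_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss in dB for a given distance and carrier frequency."""
    if distance_m <= 0 or frequency_hz <= 0:
        raise ValueError("distance and frequency must be positive")
    ratio = 4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S
    return 20.0 * math.log10(ratio)


if __name__ == "__main__":
    carrier_hz = 868e6  # assumed sub-GHz carrier, for illustration only
    for distance_m in (1_000.0, 5_000.0, 10_000.0):
        loss = fspl_db(distance_m, carrier_hz)
        print(f"{distance_m / 1000:.0f} km: {loss:.1f} dB")
```

The free-space term depends only on distance and frequency, so each doubling of the distance adds about 6 dB of loss regardless of the environment.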

Enhanced Time Division Duplexing Slot Allocation and Scheduling in Non-Terrestrial Networks

Dec 02, 2024

Abstract:The integration of non-terrestrial networks (NTNs) and terrestrial networks (TNs) is fundamental for extending connectivity to rural and underserved areas that lack coverage from traditional cellular infrastructure. However, this integration presents several challenges. For instance, TNs mainly operate in Time Division Duplexing (TDD), but for NTN via satellites TDD is complicated by synchronization problems in large cells, and by the significant impact of guard periods and long propagation delays. In this paper, we propose a novel slot allocation mechanism to enable TDD in NTN. This approach makes it possible to allocate additional transmissions during the guard period between a downlink slot and the corresponding uplink slot to reduce the overhead, provided that they do not interfere with other concurrent transmissions. Moreover, we propose two scheduling methods to select the users that transmit based on considerations related to the Signal-to-Noise Ratio (SNR) or the propagation delay. Simulations demonstrate that our proposal can increase the network capacity compared to a benchmark scheme that does not schedule transmissions in guard periods.
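To give a sense of scale for the long propagation delays mentioned in the abstract above, the sketch below expresses the round-trip propagation delay of a satellite link as a number of slots. It is only a back-of-the-envelope illustration, not the paper's slot allocation mechanism: the function names, the 600 km and 35,786 km altitudes, and the 1 ms slot duration are assumptions, the satellite is assumed to be directly overhead, and timing advance compensation is ignored.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def round_trip_delay_s(distance_m: float) -> float:
    """Round-trip propagation delay over a link of the given one-way length."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


def slots_spanned(delay_s: float, slot_duration_s: float) -> int:
    """Smallest whole number of slots that covers the given delay."""
    if slot_duration_s <= 0:
        raise ValueError("slot duration must be positive")
    return math.ceil(delay_s / slot_duration_s)


if __name__ == "__main__":
    slot_s = 1e-3  # assumed slot duration, for illustration only
    for label, altitude_m in (("LEO", 600e3), ("GEO", 35_786e3)):
        rtt = round_trip_delay_s(altitude_m)
        print(label, f"{rtt * 1e3:.2f} ms,", slots_spanned(rtt, slot_s), "slots")
```

Under these assumptions, the round trip spans several slots even at low orbit, which suggests why idle time between downlink and uplink is a significant overhead in this setting.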

On the Energy Consumption of UAV Edge Computing in Non-Terrestrial Networks

Dec 20, 2023

Abstract:During the last few years, the use of Unmanned Aerial Vehicles (UAVs) equipped with sensors and cameras has emerged as a cutting-edge technology to provide services such as surveillance, infrastructure inspections, and target acquisition. However, this approach requires UAVs to process data onboard, mainly for person/object detection and recognition, which may pose significant energy constraints as UAVs are battery-powered. A possible solution can be the support of Non-Terrestrial Networks (NTNs) for edge computing. In particular, UAVs can partially offload data (e.g., video acquisitions from onboard sensors) to more powerful upstream High Altitude Platforms (HAPs) or satellites acting as edge computing servers to increase the battery autonomy compared to local processing, even though at the expense of some data transmission delays. Accordingly, in this study we model the energy consumption of UAVs, HAPs, and satellites considering the energy for data processing, offloading, and hovering. Then, we investigate whether data offloading can improve the system performance. Simulations demonstrate that edge computing can improve both UAV autonomy and end-to-end delay compared to onboard processing in many configurations.
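The three energy terms named in the abstract above (processing, offloading, and hovering) can be sketched with a simple power-times-time model. This is a hypothetical simplification, not the model used in the paper: the `UavParams` fields, the `uav_energy_j` function, the numeric values, and the assumption that processing and transmission happen one after the other while the UAV hovers are all introduced here for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UavParams:
    """Hypothetical UAV parameters (all values are illustrative assumptions)."""
    hover_power_w: float   # power drawn to stay airborne
    cpu_power_w: float     # extra power drawn while processing onboard
    tx_power_w: float      # extra power drawn while transmitting
    cpu_rate_bps: float    # bits processed per second onboard
    link_rate_bps: float   # bits transmitted per second to the edge server


def uav_energy_j(params: UavParams, data_bits: float, offload_fraction: float) -> float:
    """Energy spent by the UAV to handle data_bits, offloading a given fraction."""
    if not 0.0 <= offload_fraction <= 1.0:
        raise ValueError("offload_fraction must be in [0, 1]")
    processing_time_s = data_bits * (1.0 - offload_fraction) / params.cpu_rate_bps
    transmit_time_s = data_bits * offload_fraction / params.link_rate_bps
    # Assumption: the UAV hovers for the whole (sequential) busy time.
    busy_time_s = processing_time_s + transmit_time_s
    return (params.hover_power_w * busy_time_s
            + params.cpu_power_w * processing_time_s
            + params.tx_power_w * transmit_time_s)


if __name__ == "__main__":
    params = UavParams(hover_power_w=100.0, cpu_power_w=10.0, tx_power_w=2.0,
                       cpu_rate_bps=1e6, link_rate_bps=1e7)
    for fraction in (0.0, 0.5, 1.0):
        print(fraction, uav_energy_j(params, data_bits=1e7, offload_fraction=fraction))
```

With these made-up numbers offloading reduces the UAV's energy because the link is faster than the onboard processor; with a slow link the comparison could go the other way, which is the kind of trade-off the abstract refers to.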

Minimizing Energy Consumption for 5G NR Beam Management for RedCap Devices

Sep 26, 2023

Abstract:In 5G New Radio (NR), beam management entails periodic and continuous transmission and reception of control signals in the form of synchronization signal blocks (SSBs), used to perform initial access and/or channel estimation. However, this procedure demands continuous energy consumption, which is particularly challenging to handle for low-cost, low-complexity, and battery-constrained devices, such as RedCap devices to support mid-market Internet of Things (IoT) use cases. In this context, this work aims at reducing the energy consumption during beam management for RedCap devices, while ensuring that the desired Quality of Service (QoS) requirements are met. To do so, we formalize an optimization problem in an Indoor Factory (InF) scenario to select the best beam management parameters, including the beam update periodicity and the beamwidth, to minimize energy consumption based on users' distribution and their speed. The analysis yields the regions of feasibility, i.e., the upper limit(s) on the beam management parameters for RedCap devices, that we use to provide design guidelines accordingly.

Downlink Clustering-Based Scheduling of IRS-Assisted Communications With Reconfiguration Constraints

May 23, 2023

Abstract:Intelligent reflecting surfaces (IRSs) are being widely investigated as a potential low-cost and energy-efficient alternative to active relays for improving coverage in next-generation cellular networks. However, technical constraints in the configuration of IRSs should be taken into account in the design of scheduling solutions and the assessment of their performance. To this end, we examine an IRS-assisted time division multiple access (TDMA) cellular network where the reconfiguration of the IRS incurs a communication cost; thus, we aim at limiting the number of reconfigurations over time. Along these lines, we propose a clustering-based heuristic scheduling scheme that maximizes the cell sum capacity, subject to a fixed number of reconfigurations within a TDMA frame. First, the best configuration of each user equipment (UE), in terms of joint beamforming and optimal IRS configuration, is determined using an iterative algorithm. Then, we propose different clustering techniques to divide the UEs into subsets sharing the same sub-optimal IRS configuration, derived through distance- and capacity-based algorithms. Finally, UEs within the same cluster are scheduled accordingly. We provide extensive numerical results for different propagation scenarios, IRS sizes, and phase-shifter quantization constraints, showing the effectiveness of our approach in supporting multi-user IRS systems with practical constraints.

Implementation of a Channel Model for Non-Terrestrial Networks in ns-3

May 09, 2023

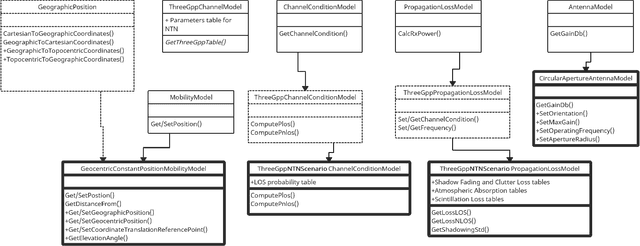

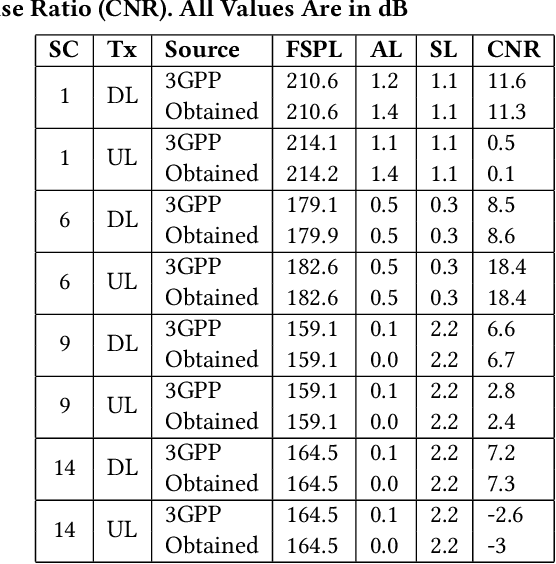

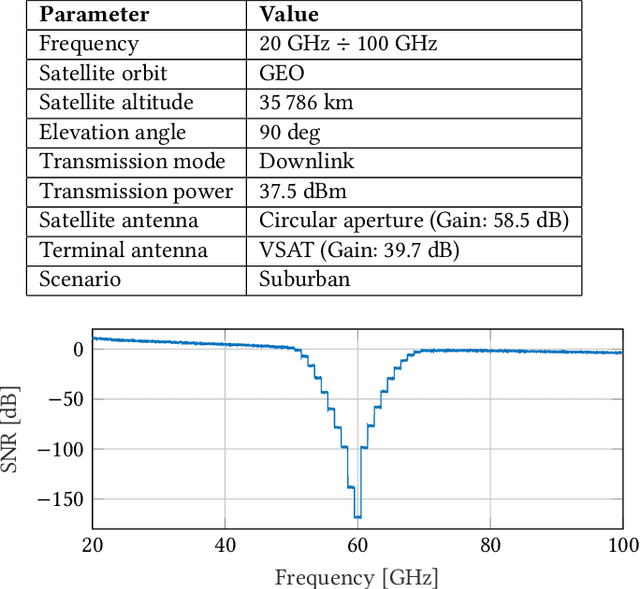

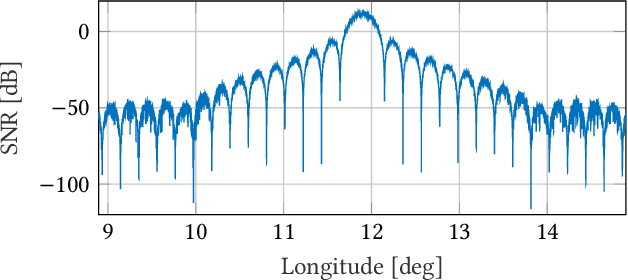

Abstract:While the 5th generation (5G) of mobile networks has landed in the commercial area, the research community is exploring new functionalities for 6th generation (6G) networks, for example non-terrestrial networks (NTNs) via space/air nodes such as Unmanned Aerial Vehicles (UAVs), High Altitude Platforms (HAPs) or satellites. Specifically, satellite-based communication offers new opportunities for future wireless applications, such as providing connectivity to remote or otherwise unconnected areas, complementing terrestrial networks to reduce connection downtime, as well as increasing traffic efficiency in hot spot areas. In this context, an accurate characterization of the NTN channel is the first step towards proper protocol design. Along these lines, this paper provides an ns-3 implementation of the 3rd Generation Partnership Project (3GPP) channel and antenna models for NTN described in Technical Report 38.811. In particular, we extend the ns-3 code base with new modules to implement the attenuation of the signal in air/space due to atmospheric gases and scintillation, and new mobility and fading models to account for the Geocentric Cartesian coordinate system of satellites. Finally, we validate the accuracy of our ns-3 module via simulations against 3GPP calibration results.

Towards Decentralized Predictive Quality of Service in Next-Generation Vehicular Networks

Feb 22, 2023

Abstract:To ensure safety in teleoperated driving scenarios, communication between vehicles and remote drivers must satisfy strict latency and reliability requirements. In this context, Predictive Quality of Service (PQoS) was investigated as a tool to predict unanticipated degradation of the Quality of Service (QoS), and allow the network to react accordingly. In this work, we design a reinforcement learning (RL) agent to implement PQoS in vehicular networks. To do so, based on data gathered at the Radio Access Network (RAN) and/or the end vehicles, as well as QoS predictions, our framework is able to identify the optimal level of compression to send automotive data under low latency and reliability constraints. We consider different learning schemes, including centralized, fully-distributed, and federated learning. We demonstrate via ns-3 simulations that, while centralized learning generally outperforms any other solution, decentralized learning, and especially federated learning, offers a good trade-off between convergence time and reliability, with positive implications in terms of privacy and complexity.

Downlink TDMA Scheduling for IRS-aided Communications with Block-Static Constraints

Jan 25, 2023

Abstract:Intelligent reflecting surfaces (IRSs) are being studied as possible low-cost energy-efficient alternatives to active relays, with the goal of solving the coverage issues of millimeter wave (mmWave) and terahertz (THz) network deployments. In the literature, these surfaces are often studied by idealizing their characteristics. Notably, it is often assumed that IRSs can tune with arbitrary frequency the phase-shifts induced by their elements, thanks to a wire-like control channel to the next generation Node B (gNB). Instead, in this work we investigate an IRS-aided time division multiple access (TDMA) cellular network, where the reconfiguration of the IRS may entail an energy or communication cost, and we aim at limiting the number of reconfigurations over time. We develop a clustering-based heuristic scheduling, which optimizes the system sum-rate subject to a given number of reconfigurations within the TDMA frame. To this end, we first cluster user equipments (UEs) with a similar optimal IRS configuration. Then, we compute an overall IRS cluster configuration, which can thus be kept constant while scheduling the whole UE cluster. Numerical results show that our approach is effective in supporting IRS-aided systems with practical constraints, achieving up to 85% of the throughput obtained by an ideal deployment, while providing a 50% reduction in the number of IRS reconfigurations.
