Jonathan Gambini

Milan Research Center, HUAWEI, Italy

Downlink Clustering-Based Scheduling of IRS-Assisted Communications With Reconfiguration Constraints

May 23, 2023

Abstract:Intelligent reflecting surfaces (IRSs) are being widely investigated as a potential low-cost and energy-efficient alternative to active relays for improving coverage in next-generation cellular networks. However, technical constraints in the configuration of IRSs should be taken into account in the design of scheduling solutions and in the assessment of their performance. To this end, we examine an IRS-assisted time division multiple access (TDMA) cellular network where the reconfiguration of the IRS incurs a communication cost; thus, we aim at limiting the number of reconfigurations over time. Along these lines, we propose a clustering-based heuristic scheduling scheme that maximizes the cell sum capacity, subject to a fixed number of reconfigurations within a TDMA frame. First, the best configuration of each user equipment (UE), in terms of joint beamforming and optimal IRS configuration, is determined using an iterative algorithm. Then, we propose different clustering techniques to divide the UEs into subsets sharing the same sub-optimal IRS configuration, derived through distance- and capacity-based algorithms. Finally, UEs within the same cluster are scheduled accordingly. We provide extensive numerical results for different propagation scenarios, IRS sizes, and phase-shifter quantization constraints, showing the effectiveness of our approach in supporting multi-user IRS systems with practical constraints.
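The clustering step can be illustrated with a minimal sketch. It assumes that each UE's optimal IRS phase vector is already known, groups the UEs with a plain k-means loop on the unit-modulus reflection vectors (a generic distance-based stand-in, not necessarily the algorithm used in the paper), takes the phase of each cluster mean as the shared configuration, and optionally quantizes it for `bits`-bit phase shifters. The function names and the toy data are hypothetical.

```python
import numpy as np

def quantize_phases(theta, bits):
    """Round each phase to the nearest of 2**bits uniform levels in [0, 2*pi)."""
    step = 2 * np.pi / (2 ** bits)
    return np.mod(np.round(theta / step) * step, 2 * np.pi)

def cluster_configurations(phases, k, iters=50):
    """K-means-style clustering of per-UE IRS phase vectors (hypothetical helper).

    phases: (num_ues, num_elements) per-UE optimal phase shifts in radians.
    Distances are computed between unit-modulus vectors exp(1j*theta); each
    cluster's shared configuration is the element-wise phase of the member mean,
    i.e. the centroid projected back onto the unit-modulus set.
    """
    v = np.exp(1j * phases)
    # Farthest-first initialisation: deterministic and spreads the seeds out.
    centers = [v[0]]
    for _ in range(1, k):
        d = np.min([np.sum(np.abs(v - c) ** 2, axis=1) for c in centers], axis=0)
        centers.append(v[int(d.argmax())])
    centers = np.array(centers)
    for _ in range(iters):
        # Squared Euclidean distance from every UE vector to every center.
        d = (np.abs(v[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d.argmin(axis=1)
        new_centers = centers.copy()
        for c in range(k):
            members = v[labels == c]
            if len(members):
                new_centers[c] = np.exp(1j * np.angle(members.mean(axis=0)))
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, np.mod(np.angle(centers), 2 * np.pi)

# Toy example (hypothetical data): two groups of 3 UEs whose optimal
# 16-element configurations are small perturbations of two random vectors.
rng = np.random.default_rng(1)
base_a, base_b = rng.uniform(0, 2 * np.pi, (2, 16))
phases = np.vstack([
    base_a + 0.1 * rng.standard_normal((3, 16)),
    base_b + 0.1 * rng.standard_normal((3, 16)),
])
labels, configs = cluster_configurations(phases, k=2)
configs_2bit = quantize_phases(configs, bits=2)  # 2-bit phase shifters
print(labels)  # first three UEs share one label, last three the other
```

A capacity-based variant would replace the Euclidean distance with the rate loss a UE suffers under a candidate shared configuration; the loop structure stays the same.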

Downlink TDMA Scheduling for IRS-aided Communications with Block-Static Constraints

Jan 25, 2023

Abstract:Intelligent reflecting surfaces (IRSs) are being studied as possible low-cost, energy-efficient alternatives to active relays, with the goal of solving the coverage issues of millimeter wave (mmWave) and terahertz (THz) network deployments. In the literature, these surfaces are often studied by idealizing their characteristics. Notably, it is often assumed that IRSs can retune the phase shifts induced by their elements arbitrarily often, thanks to a wire-like control channel to the next-generation Node B (gNB). Instead, in this work we investigate an IRS-aided time division multiple access (TDMA) cellular network, where the reconfiguration of the IRS may entail an energy or communication cost, and we aim at limiting the number of reconfigurations over time. We develop a clustering-based heuristic scheduling scheme, which optimizes the system sum-rate subject to a given number of reconfigurations within the TDMA frame. To this end, we first cluster user equipments (UEs) with similar optimal IRS configurations. Then, we compute an overall IRS configuration for each cluster, which can thus be kept constant while the whole UE cluster is scheduled. Numerical results show that our approach is effective in supporting IRS-aided systems with practical constraints, achieving up to 85% of the throughput obtained by an ideal deployment while providing a 50% reduction in the number of IRS reconfigurations.
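The trade-off between sum-rate and reconfigurations can be sketched as follows, assuming a single-antenna gNB and UEs, no direct link, unit-amplitude IRS elements, and cluster labels already available. Serving each cluster in consecutive TDMA slots under one shared configuration means the IRS only changes at cluster boundaries, at the cost of some rate compared with per-UE co-phasing. The rule used here for the shared configuration (co-phasing the cluster's mean channel) and all function names are hypothetical illustrations, not the paper's algorithm.

```python
import numpy as np

def irs_rate(cascade, theta, snr=1.0):
    """Spectral efficiency (bit/s/Hz) of a single-antenna link via an IRS.

    cascade: complex cascaded channel per IRS element (gNB -> element -> UE).
    theta:   IRS phase shifts in radians. No direct path is modelled and the
             reflection amplitude is assumed to be 1 for every element.
    """
    gain = np.abs(np.sum(cascade * np.exp(1j * theta))) ** 2
    return np.log2(1 + snr * gain)

def tdma_frame(labels):
    """Slot order that serves all UEs of a cluster in consecutive slots."""
    return sorted(range(len(labels)), key=lambda u: (labels[u], u))

def count_reconfigurations(config_ids):
    """IRS reconfigurations inside the frame: slot boundaries where the
    configuration changes (the initial setting is not counted)."""
    return sum(a != b for a, b in zip(config_ids, config_ids[1:]))

# Toy data (hypothetical): 6 UEs in two clusters with correlated channels.
rng = np.random.default_rng(0)
N = 32
labels = [0, 1, 0, 1, 0, 1]
base = rng.standard_normal((2, N)) + 1j * rng.standard_normal((2, N))
cascades = [base[c] * np.exp(1j * 0.3 * rng.standard_normal(N)) for c in labels]

# Ideal: every UE gets its own co-phasing configuration theta_n = -arg(h_n).
own = [-np.angle(h) for h in cascades]
# Constrained: one configuration per cluster, co-phasing the cluster's mean channel.
shared = {c: -np.angle(np.mean([h for h, l in zip(cascades, labels) if l == c], axis=0))
          for c in set(labels)}

order = tdma_frame(labels)
ideal_rates = [irs_rate(cascades[u], own[u]) for u in order]
cluster_rates = [irs_rate(cascades[u], shared[labels[u]]) for u in order]
# With per-UE configurations every UE has a distinct setting, so UE ids act as config ids.
reconf_ideal = count_reconfigurations(order)
reconf_cluster = count_reconfigurations([labels[u] for u in order])
print(reconf_ideal, reconf_cluster)  # 5 1
print(sum(cluster_rates) / sum(ideal_rates))
```

Because co-phasing maximizes the coherent sum for each UE, the per-UE rate is an upper bound on the clustered rate; the printed ratio is the fraction of the ideal sum rate retained for this particular random draw.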

End-to-End Simulation of 5G Networks Assisted by IRS and AF Relays

Mar 04, 2022

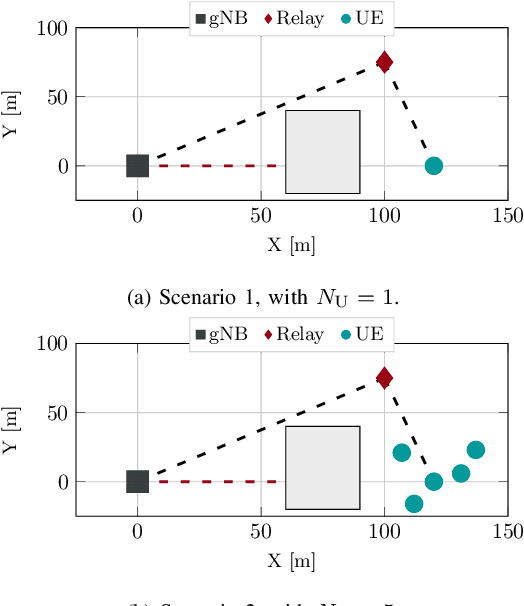

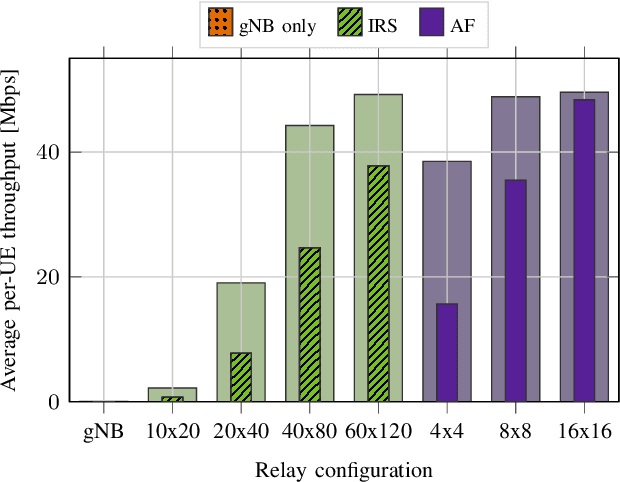

Abstract:The high propagation and penetration loss experienced at millimeter wave (mmWave) frequencies requires ultra-dense deployments of 5th generation (5G) base stations, which may be infeasible and costly for network operators. Integrated Access and Backhaul (IAB) has been proposed to partially address this issue, although it raises concerns in terms of power consumption and scalability. Recently, the research community has been investigating Intelligent Reflective Surfaces (IRSs) and Amplify-and-Forward (AF) relays as more energy-efficient alternatives to solve coverage issues in 5G scenarios. Along these lines, this paper relies on a new simulation framework, based on ns-3, to simulate IRS/AF systems from a full-stack, end-to-end perspective, taking into account the impact of the channel model and of the protocol stack of 5G NR networks. Our goal is to assess whether these technologies can be used to relay 5G traffic requests and, if so, how to dimension IRS/AF nodes as a function of the number of end users.

Enabling Simulation-Based Optimization Through Machine Learning: A Case Study on Antenna Design

Aug 29, 2019

Abstract:Complex phenomena are generally modeled with sophisticated simulators that, depending on their accuracy, can be very demanding in terms of computational resources and simulation time. Their time-consuming nature, together with a typically vast parameter space to be explored, makes simulation-based optimization often infeasible. In this work, we present a method that enables the optimization of complex systems through Machine Learning (ML) techniques. We show how well-known learning algorithms are able to reliably emulate a complex simulator with a modest dataset obtained from it. The trained emulator is then able to yield values close to the simulated ones in virtually no time. Therefore, it is possible to perform a global numerical optimization over the vast multi-dimensional parameter space, in a fraction of the time that would be required by a simple brute-force search. As a testbed for the proposed methodology, we used a network simulator for next-generation mmWave cellular systems. After simulating several antenna configurations and collecting the resulting network-level statistics, we fed them into our framework. Results show that, even with few data points, extrapolating a continuous model makes it possible to estimate the global optimum configuration almost instantaneously. The very same tool can then be used to achieve any further optimization goal on the same input parameters in negligible time.
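The emulate-then-search idea can be sketched in a few lines. Here a least-squares quadratic response surface stands in for the ML emulator, and a noisy two-parameter toy function stands in for the network simulator; both are hypothetical assumptions chosen only to keep the example self-contained. The emulator is fitted on 30 simulated points and then searched exhaustively on a 401 × 401 grid, which would be prohibitive with the real simulator but is essentially free with the fitted model.

```python
import numpy as np

def toy_simulator(x, y, rng):
    """Hypothetical stand-in for an expensive simulator (e.g. a network-level KPI
    as a function of two antenna parameters), with a little stochastic noise.
    Its true optimum is at (0.3, -0.2)."""
    return 10 - (x - 0.3) ** 2 - 2 * (y + 0.2) ** 2 + 0.01 * rng.standard_normal(np.shape(x))

def features(x, y):
    """Quadratic response-surface features: 1, x, y, x^2, y^2, xy."""
    x, y = np.asarray(x), np.asarray(y)
    return np.stack([np.ones_like(x), x, y, x ** 2, y ** 2, x * y], axis=-1)

rng = np.random.default_rng(0)
# 1) Run the "simulator" on a small random design of 30 points.
xs, ys = rng.uniform(-1, 1, 30), rng.uniform(-1, 1, 30)
zs = toy_simulator(xs, ys, rng)
# 2) Fit the emulator by ordinary least squares.
coef, *_ = np.linalg.lstsq(features(xs, ys), zs, rcond=None)
# 3) Brute-force search over a dense grid, evaluating only the cheap emulator.
gx, gy = np.meshgrid(np.linspace(-1, 1, 401), np.linspace(-1, 1, 401))
pred = features(gx, gy) @ coef
i = np.unravel_index(np.argmax(pred), pred.shape)
best_x, best_y = gx[i], gy[i]
print(best_x, best_y)  # should land close to the true optimum (0.3, -0.2)
```

Once `coef` is fitted, any other objective over the same two inputs can be optimized by re-running step 3 alone, without further simulator calls.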