Mattia Lecci

An Open Framework to Model Diffraction by Dynamic Blockers in Millimeter Wave Simulations

Jun 10, 2022

Abstract: The millimeter wave (mmWave) band will be exploited to address the growing demand for high data rates and low latency. The higher frequencies, however, are prone to limitations on the propagation of the signal in the environment. Thus, highly directional beamforming is needed to increase the antenna gain. Another crucial problem of the mmWave frequencies is their vulnerability to blockage by physical obstacles. To address this, we studied the problem of modeling the impact of second-order effects on mmWave channels, specifically the susceptibility of mmWave signals to physical blockers. Compared with existing works on this topic, our project focuses on scenarios where mmWaves interact with multiple, dynamic blockers. Our open source software includes diffraction-based blockage models and interfaces directly with an open source Radio Frequency (RF) ray-tracing software.

Temporal Characterization of VR Traffic for Network Slicing Requirement Definition

Jun 01, 2022

Abstract: Over the past few years, Virtual Reality (VR) has attracted increasing interest thanks to its extensive industrial and commercial applications. Currently, the 3D models of the virtual scenes are generally stored in the VR visor itself, which operates as a standalone device. However, applications that entail multi-party interactions will likely require the scene to be processed by an external server and then streamed to the visors. In this case, the stringent Quality of Service (QoS) constraints imposed by VR's interactive nature require Network Slicing (NS) solutions, for which profiling the traffic generated by the VR application is crucial. To this end, we collected more than 4 hours of traces in a real setup and analyzed their temporal correlation. More specifically, we focused on the Constant Bit Rate (CBR) encoding mode, which should generate more predictable traffic streams. From the collected data, we then distilled two prediction models for future frame size, which can be instrumental in the design of dynamic resource allocation algorithms. Our results show that even the state-of-the-art H.264 CBR mode can have significant fluctuations, which can impact the NS optimization. We then exploited the proposed models to dynamically determine the Service Level Agreement (SLA) parameters in an NS scenario, providing service with the required QoS while minimizing resource usage.

Temporal Characterization of XR Traffic with Application to Predictive Network Slicing

Jan 18, 2022

Abstract: Over the past few years, eXtended Reality (XR) has attracted increasing interest thanks to its extensive industrial and commercial applications, and its popularity is expected to rise exponentially over the next decade. However, the stringent Quality of Service (QoS) constraints imposed by XR's interactive nature require Network Slicing (NS) solutions to support its use over wireless connections: in this context, quasi-Constant Bit Rate (CBR) encoding is a promising solution, as it can increase the predictability of the stream, making the network resource allocation easier. However, traffic characterization of XR streams is still a largely unexplored subject, particularly with this encoding. In this work, we characterize XR streams from more than 4 hours of traces captured in a real setup, analyzing their temporal correlation and proposing two prediction models for future frame size. Our results show that even the state-of-the-art H.264 CBR mode can have significant frame size fluctuations, which can impact the NS optimization. Our proposed prediction models can be applied to different traces, and even to different contents, achieving very similar performance. We also show the trade-off between network resource efficiency and XR QoS in a simple NS use case.

An Open Framework for Analyzing and Modeling XR Network Traffic

Aug 10, 2021

Abstract: Thanks to recent technological advancements, eXtended Reality (XR) applications are gaining a lot of momentum, and they are expected to become increasingly popular in the next decade. These new applications, however, also require a step forward in the models used to simulate and analyze this type of traffic source in modern communication networks, in order to guarantee users state-of-the-art performance and Quality of Experience (QoE). Recognizing this need, in this work we present a novel open-source traffic model, which researchers can use as a starting point both for improvements of the model itself and for the design of optimized algorithms for the transmission of these peculiar data flows. Along with the mathematical model and the code, we also share with the community the traces that we gathered for our study, collected from freely available applications such as Minecraft VR, Google Earth VR, and Virus Popper. Finally, we propose a roadmap for the construction of an end-to-end framework that fills this gap in the current state of the art.

Machine Learning-aided Design of Thinned Antenna Arrays for Optimized Network Level Performance

Jan 25, 2020

Abstract: With the advent of millimeter wave (mmWave) communications, the combination of a detailed 5G network simulator with an accurate antenna radiation model is required to analyze the realistic performance of complex cellular scenarios. However, because of the complexity of both electromagnetic and network models, the design and optimization of antenna arrays is generally infeasible given the required computational resources and simulation time. In this paper, we propose a Machine Learning framework that enables a simulation-based optimization of the antenna design. We show how learning methods are able to emulate a complex simulator with a modest dataset obtained from it, enabling a global numerical optimization over a vast multi-dimensional parameter space in a reasonable amount of time. Overall, our results show that the proposed methodology can be successfully applied to the optimization of thinned antenna arrays.

Enabling Simulation-Based Optimization Through Machine Learning: A Case Study on Antenna Design

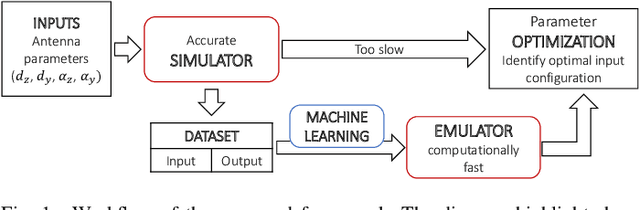

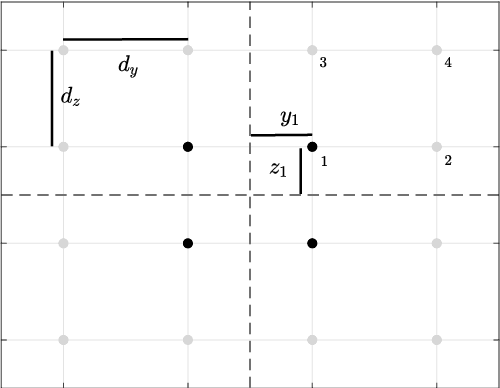

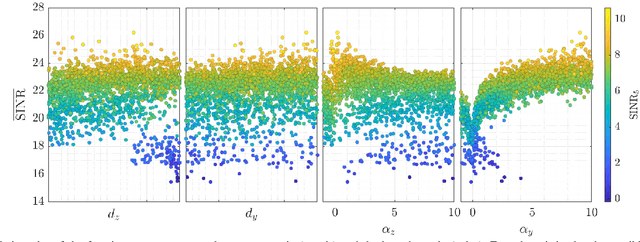

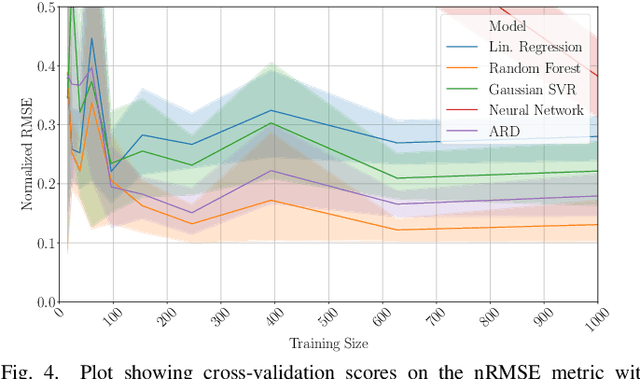

Aug 29, 2019

Abstract: Complex phenomena are generally modeled with sophisticated simulators that, depending on their accuracy, can be very demanding in terms of computational resources and simulation time. Their time-consuming nature, together with a typically vast parameter space to be explored, often makes simulation-based optimization infeasible. In this work, we present a method that enables the optimization of complex systems through Machine Learning (ML) techniques. We show how well-known learning algorithms are able to reliably emulate a complex simulator with a modest dataset obtained from it. The trained emulator is then able to yield values close to the simulated ones in virtually no time. Therefore, it is possible to perform a global numerical optimization over the vast multi-dimensional parameter space in a fraction of the time that would be required by a simple brute-force search. As a testbed for the proposed methodology, we used a network simulator for next-generation mmWave cellular systems. After simulating several antenna configurations and collecting the resulting network-level statistics, we feed them into our framework. Results show that, even with few data points, fitting a continuous model makes it possible to estimate the global optimum configuration almost instantaneously. The very same tool can then be used to achieve any further optimization goal on the same input parameters in negligible time.
