Andrea Zanella

Robust Reinforcement Learning over Wireless Networks with Homomorphic State Representations

Aug 11, 2025

Abstract:In this work, we address the problem of training Reinforcement Learning (RL) agents over communication networks. The RL paradigm requires the agent to instantaneously perceive the state evolution to infer the effects of its actions on the environment. This is impossible if the agent receives state updates over lossy or delayed wireless systems and thus operates with partial and intermittent information. In recent years, numerous frameworks have been proposed to manage RL with imperfect feedback; however, they often offer specific solutions with a substantial computational burden. To address these limits, we propose a novel architecture, named Homomorphic Robust Remote Reinforcement Learning (HR3L), that enables the training of remote RL agents exchanging observations across a non-ideal wireless channel. HR3L considers two units: the transmitter, which encodes meaningful representations of the environment, and the receiver, which decodes these messages and performs actions to maximize a reward signal. Importantly, HR3L does not require the exchange of gradient information across the wireless channel, allowing for quicker training and a lower communication overhead than state-of-the-art solutions. Experimental results demonstrate that HR3L significantly outperforms baseline methods in terms of sample efficiency and adapts to different communication scenarios, including packet losses, delayed transmissions, and capacity limitations.

Analytical Modeling of Batteryless IoT Sensors Powered by Ambient Energy Harvesting

Jul 28, 2025

Abstract:This paper presents a comprehensive mathematical model to characterize the energy dynamics of batteryless IoT sensor nodes powered entirely by ambient energy harvesting. The model captures both the energy harvesting and consumption phases, explicitly incorporating power management tasks to enable precise estimation of device behavior across diverse environmental conditions. The proposed model is applicable to a wide range of IoT devices and supports intelligent power management units designed to maximize harvested energy under fluctuating environmental conditions. We validated our model against a prototype batteryless IoT node, conducting experiments under three distinct illumination scenarios. Results show a strong correlation between analytical and measured supercapacitor voltage profiles, confirming the proposed model's accuracy.
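As a rough illustration of what a supercapacitor voltage profile looks like under harvesting and consumption, the sketch below integrates a plain energy balance, E = ½·C·V², with a forward-Euler step. It is a simplification and not the model from the paper: the function name, the constant harvest and load powers, and the clamping at a maximum voltage are all assumptions made for the example, and power management tasks are not modeled.

```python
import math


def simulate_voltage(v0, capacitance_f, harvest_w, load_w, dt_s, steps, v_max):
    """Forward-Euler energy balance on an ideal supercapacitor (E = 0.5 * C * V^2).

    All parameters are hypothetical: constant harvested power and constant load
    power in watts, time step in seconds, voltages in volts.
    """
    energy = 0.5 * capacitance_f * v0 ** 2        # initial stored energy [J]
    e_max = 0.5 * capacitance_f * v_max ** 2      # energy at the voltage ceiling [J]
    trace = [v0]
    for _ in range(steps):
        # Net energy gained (or lost) during one time step.
        energy += (harvest_w - load_w) * dt_s
        # Stored energy cannot go below zero or above the ceiling.
        energy = min(max(energy, 0.0), e_max)
        trace.append(math.sqrt(2.0 * energy / capacitance_f))
    return trace


# Made-up values, chosen only so the arithmetic is easy to follow.
charging = simulate_voltage(v0=2.0, capacitance_f=1.0, harvest_w=0.5,
                            load_w=0.0, dt_s=1.0, steps=5, v_max=5.0)
draining = simulate_voltage(v0=2.0, capacitance_f=1.0, harvest_w=0.0,
                            load_w=1.0, dt_s=1.0, steps=5, v_max=5.0)
```

With these values the stored energy rises from 2 J to 4.5 J in the charging case, so the voltage goes from 2 V to 3 V, while the draining case empties the capacitor after two steps.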

To Train or Not to Train: Balancing Efficiency and Training Cost in Deep Reinforcement Learning for Mobile Edge Computing

Nov 11, 2024

Abstract:Artificial Intelligence (AI) is a key component of 6G networks, as it enables communication and computing services to adapt to end users' requirements and demand patterns. The management of Mobile Edge Computing (MEC) is a meaningful example of AI application: computational resources available at the network edge need to be carefully allocated to users, whose jobs may have different priorities and latency requirements. The research community has developed several AI algorithms to perform this resource allocation, but it has neglected a key aspect: learning is itself a computationally demanding task, and assuming that training is free leads to idealized conditions and performance figures in simulations. In this work, we consider a more realistic case in which the cost of learning is specifically accounted for, presenting a new algorithm to dynamically select when to train a Deep Reinforcement Learning (DRL) agent that allocates resources. Our method is highly general, as it can be directly applied to any scenario involving a training overhead, and it can approach the same performance as an ideal learning agent even under realistic training conditions.

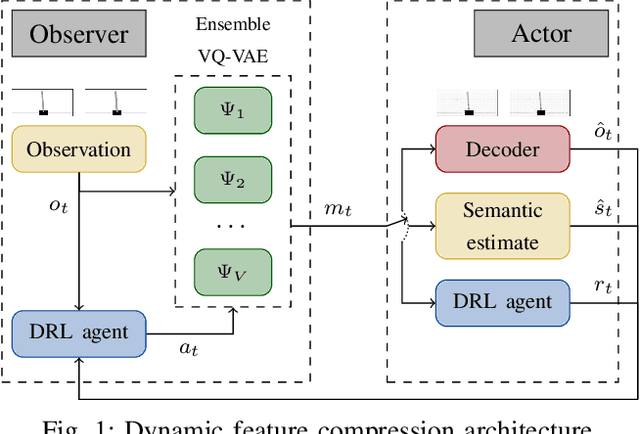

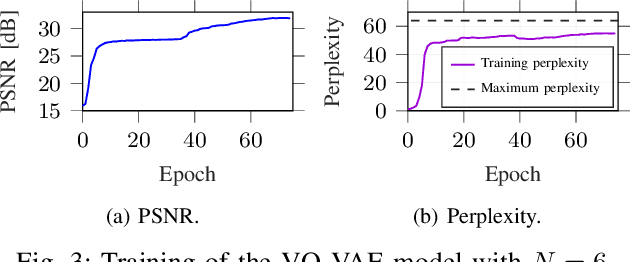

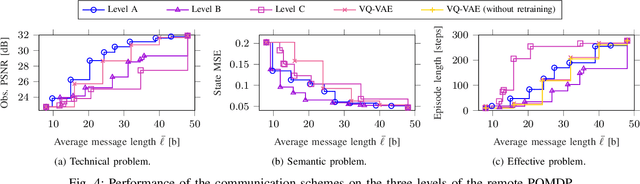

Effective Communication with Dynamic Feature Compression

Jan 29, 2024

Abstract:The remote wireless control of industrial systems is one of the major use cases for 5G and beyond systems: in these cases, the massive amounts of sensory information that need to be shared over the wireless medium may overload even high-capacity connections. Consequently, solving the effective communication problem by optimizing the transmission strategy to discard irrelevant information can provide a significant advantage, but is often a very complex task. In this work, we consider a prototypal system in which an observer must communicate its sensory data to a robot controlling a task (e.g., a mobile robot in a factory). We then model it as a remote Partially Observable Markov Decision Process (POMDP), considering the effect of adopting semantic and effective communication-oriented solutions on the overall system performance. We split the communication problem by considering an ensemble Vector Quantized Variational Autoencoder (VQ-VAE) encoding, and train a Deep Reinforcement Learning (DRL) agent to dynamically adapt the quantization level, considering both the current state of the environment and the memory of past messages. We tested the proposed approach on the well-known CartPole reference control problem, obtaining a significant performance increase over traditional approaches.
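To make the idea of an adjustable quantization level concrete, the sketch below shows only the nearest-codeword lookup that vector quantization relies on, applied with two codebooks of different sizes. It is not the authors' implementation: the codebooks are hand-picked rather than learned, there is no autoencoder or DRL agent, and the names `quantize`, `bits_per_message`, and `CODEBOOKS` are hypothetical.

```python
import math


def quantize(vector, codebook):
    """Return (index, codeword) of the codeword nearest to `vector`
    in squared Euclidean distance."""
    best = min(
        range(len(codebook)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(vector, codebook[i])),
    )
    return best, codebook[best]


def bits_per_message(codebook):
    """Bits needed to send one codeword index for this codebook."""
    return math.ceil(math.log2(len(codebook)))


# Hand-picked toy codebooks (assumption): a larger codebook costs more bits
# per message but can represent the latent vector more precisely.
CODEBOOKS = {
    "coarse": [(-1.0, -1.0), (1.0, 1.0)],
    "fine": [(-1.0, -1.0), (-1.0, 1.0), (1.0, -1.0), (1.0, 1.0)],
}

latent = (0.8, -0.9)  # stand-in for an encoder output
coarse_idx, coarse_cw = quantize(latent, CODEBOOKS["coarse"])
fine_idx, fine_cw = quantize(latent, CODEBOOKS["fine"])
```

In this toy case the coarse codebook spends one bit and maps the latent vector to a distant codeword, while the fine codebook spends two bits and lands much closer. A controller that chooses the quantization level per message is trading off exactly these two quantities.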

Push- and Pull-based Effective Communication in Cyber-Physical Systems

Jan 15, 2024

Abstract:In Cyber-Physical Systems (CPSs), two groups of actors interact toward the maximization of system performance: the sensors, observing and disseminating the system state, and the actuators, performing physical decisions based on the received information. Sensors are generally assumed to transmit updates periodically; however, returning the feedback signal only when necessary, and adapting the physical decisions to the communication policy accordingly, can significantly improve the efficiency of the system. In particular, the choice between push-based communication, in which updates are initiated autonomously by the sensors, and pull-based communication, in which they are requested by the actuators, is a key design step. In this work, we propose an analytical model for optimizing push- and pull-based communication in CPSs, observing that the policy optimality coincides with Value of Information (VoI) maximization. Our results also highlight that, despite providing a better optimal solution, implementable push-based communication strategies may underperform even in relatively simple scenarios.

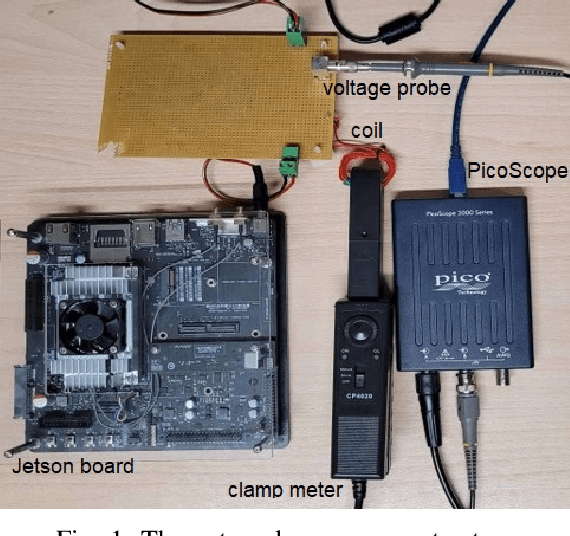

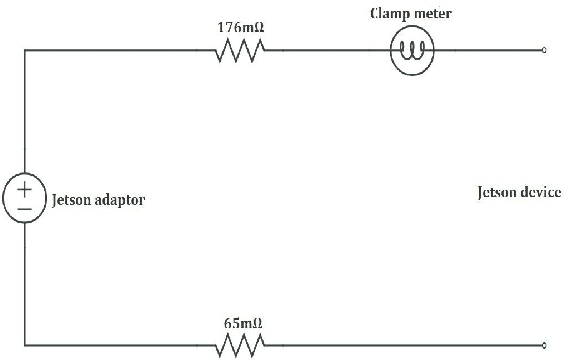

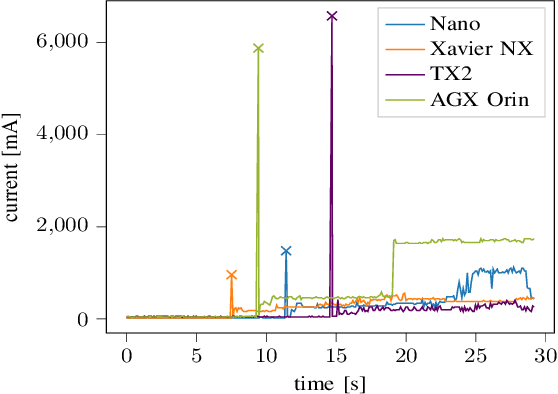

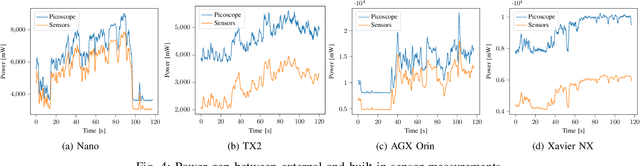

Accurate Calibration of Power Measurements from Internal Power Sensors on NVIDIA Jetson Devices

Jun 19, 2023

Abstract:Power efficiency is a crucial consideration for embedded systems design, particularly in the field of edge computing and IoT devices. This study aims to calibrate the power measurements obtained from the built-in sensors of NVIDIA Jetson devices, facilitating the collection of reliable and precise power consumption data in real-time. To achieve this goal, accurate power readings are obtained using external hardware, and a regression model is proposed to map the sensor measurements to the true power values. Our results provide insights into the accuracy and reliability of the built-in power sensors for various Jetson edge boards and highlight the importance of calibrating their internal power readings. In detail, internal sensors underestimate the actual power by up to 50% in most cases, but this calibration reduces the error to within 3%. By making the internal sensor data usable for precise online assessment of power and energy figures, the regression models presented in this paper have practical applications, for both practitioners and researchers, in accurately designing energy-efficient and autonomous edge services.
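The calibration idea can be illustrated with an ordinary least-squares fit that maps internal sensor readings to externally measured reference power. The abstract does not state the form of the regression model, so the linear form (gain and offset) used here is an assumption, as are the function names and the made-up readings.

```python
from statistics import mean


def fit_linear_calibration(internal_w, reference_w):
    """Least-squares fit of reference = gain * internal + offset (assumed form)."""
    mx, my = mean(internal_w), mean(reference_w)
    sxx = sum((x - mx) ** 2 for x in internal_w)
    sxy = sum((x - mx) * (y - my) for x, y in zip(internal_w, reference_w))
    gain = sxy / sxx
    offset = my - gain * mx
    return gain, offset


def calibrate(reading_w, gain, offset):
    """Map a raw internal reading [W] to a calibrated power estimate [W]."""
    return gain * reading_w + offset


# Made-up readings in watts, NOT data from the paper: the internal sensor
# under-reports the power measured by the external reference instrument.
internal = [2.0, 4.0, 6.0, 8.0]
reference = [3.5, 6.5, 9.5, 12.5]

gain, offset = fit_linear_calibration(internal, reference)
estimate = calibrate(10.0, gain, offset)
```

Once the gain and offset have been fitted offline against the external instrument, applying them to each new reading costs one multiplication and one addition, which is what makes online correction of the built-in sensors practical.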

Fast Context Adaptation in Cost-Aware Continual Learning

Jun 06, 2023

Abstract:In the past few years, Deep Reinforcement Learning (DRL) has become a valuable solution to automatically learn efficient resource management strategies in complex networks with time-varying statistics. However, the increased complexity of 5G and Beyond networks requires correspondingly more complex learning agents, and the learning process itself might end up competing with users for communication and computational resources. This creates friction: on the one hand, the learning process needs resources to quickly converge to an effective strategy; on the other hand, the learning process needs to be efficient, i.e., take as few resources as possible from the users' data plane, so as not to throttle users' QoS. In this paper, we investigate this trade-off and propose a dynamic strategy to balance the resources assigned to the data plane and those reserved for learning. With the proposed approach, a learning agent can quickly converge to an efficient resource allocation strategy and adapt to changes in the environment, as per the Continual Learning (CL) paradigm, while minimizing the impact on the users' QoS. Simulation results show that the proposed method outperforms static allocation methods with minimal learning overhead, almost reaching the performance of an ideal out-of-band CL solution.

Multi-Agent Reinforcement Learning for Pragmatic Communication and Control

Feb 28, 2023

Abstract:The automation of factories and manufacturing processes has been accelerating over the past few years, boosted by the Industry 4.0 paradigm, including diverse scenarios with mobile, flexible agents. Efficient coordination between mobile robots requires reliable wireless transmission in highly dynamic environments, often with strict timing requirements. Goal-oriented communication is a possible solution for this problem: communication decisions should be optimized for the target control task, providing the information that is most relevant to decide which action to take. From the control perspective, networked control design takes the communication impairments into account in its optimization of physical actions. In this work, we propose a joint design that combines goal-oriented communication and networked control into a single optimization model, an extension of a multiagent POMDP which we call Cyber-Physical POMDP (CP-POMDP). The model is flexible enough to represent several swarm and cooperative scenarios, and we illustrate its potential with two simple reference scenarios with a single agent and a set of supporting sensors. Joint training of the communication and control systems can significantly improve the overall performance, particularly if communication is severely constrained, and can even lead to implicit coordination of communication actions.

Semantic and Effective Communication for Remote Control Tasks with Dynamic Feature Compression

Jan 14, 2023

Abstract:The coordination of robotic swarms and the remote wireless control of industrial systems are among the major use cases for 5G and beyond systems: in these cases, the massive amounts of sensory information that need to be shared over the wireless medium can overload even high-capacity connections. Consequently, solving the effective communication problem by optimizing the transmission strategy to discard irrelevant information can provide a significant advantage, but is often a very complex task. In this work, we consider a prototypal system in which an observer must communicate its sensory data to an actor controlling a task (e.g., a mobile robot in a factory). We then model it as a remote Partially Observable Markov Decision Process (POMDP), considering the effect of adopting semantic and effective communication-oriented solutions on the overall system performance. We split the communication problem by considering an ensemble Vector Quantized Variational Autoencoder (VQ-VAE) encoding, and train a Deep Reinforcement Learning (DRL) agent to dynamically adapt the quantization level, considering both the current state of the environment and the memory of past messages. We tested the proposed approach on the well-known CartPole reference control problem, obtaining a significant performance increase over traditional approaches.

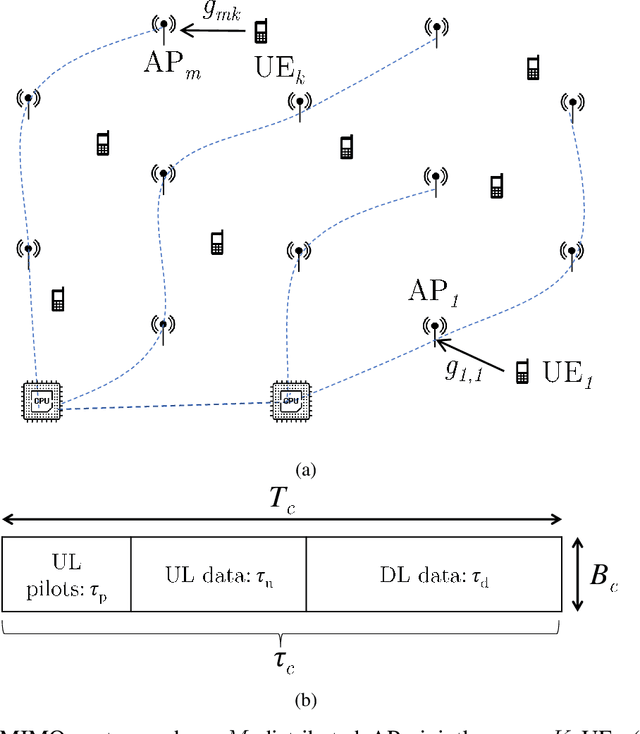

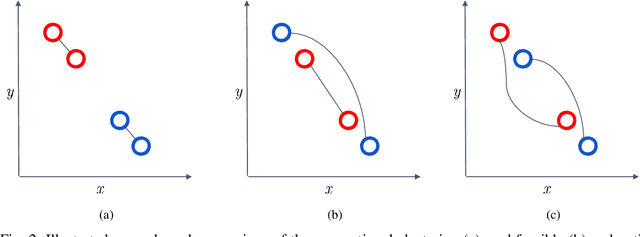

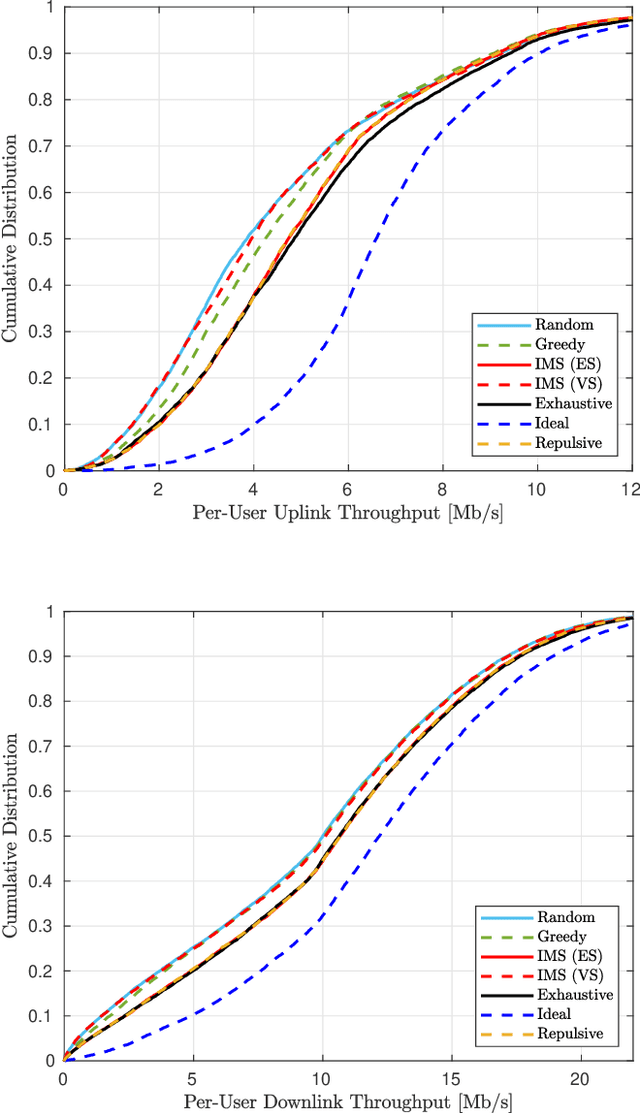

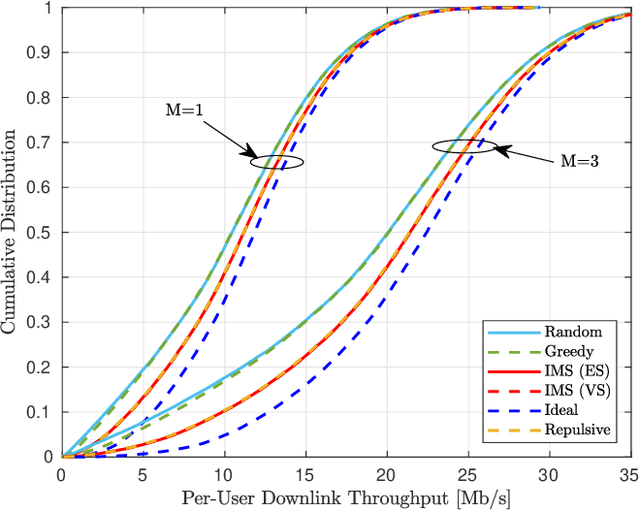

Pilot Reuse in Cell-Free Massive MIMO Systems: A Diverse Clustering Approach

Dec 17, 2022

Abstract:Distributed or Cell-free (CF) massive Multiple-Input Multiple-Output (mMIMO) has been recently proposed as an answer to the limitations of the current network-centric systems in providing high-rate ubiquitous transmission. The capability of providing a uniform service level makes CF mMIMO a potential technology for beyond-5G and 6G networks. The acquisition of accurate Channel State Information (CSI) is critical for different CF mMIMO operations. Hence, an uplink pilot training phase is used to efficiently estimate transmission channels. The number of available orthogonal pilot signals is limited, and reusing these pilots increases co-pilot interference. This causes an undesirable effect known as pilot contamination, which can reduce the system performance. Hence, a proper pilot reuse strategy is needed to mitigate the effects of pilot contamination. In this paper, we formulate pilot assignment in CF mMIMO as a diverse clustering problem and propose an iterative maxima search scheme to solve it. In this approach, we first form clusters of User Equipments (UEs) so that the intra-cluster diversity is maximized, and then assign the same pilot to all UEs in the same cluster. The numerical results show the superiority of the proposed technique over other methods in terms of the achieved uplink and downlink average and per-user data rates.
